Asynchronous Python and Databases

The asynchronous programming topic is difficult to cover. These days, it’s not just about one thing, and I’m mostly an outsider to it. However, because I deal a lot with relational databases and the Python stack’s interaction with them, I have to field a lot of questions and issues regarding asynchronous IO and database programming, both specific to SQLAlchemy and with regard to OpenStack.

As I don’t have a simple opinion on the matter, I’ll give a spoiler for the rest of the blog post here. I think that the Python asyncio library is very neat, promising, and fun to use, and organized well enough that some level of SQLAlchemy compatibility is clearly feasible, most likely including most parts of the ORM. As asyncio is now a standard part of Python, this compatibility layer is something I am interested in producing at some point.

All of that said, I still think that asynchronous programming is just one potential approach to have on the shelf, and is by no means the one we should be using all the time or even most of the time, unless we are writing HTTP or chat servers or other applications that specifically need to concurrently maintain large numbers of arbitrarily slow or idle TCP connections (where by “arbitrarily” we mean that we don’t care whether individual connections are slow, fast, or idle; throughput can be maintained regardless). For standard business-style, CRUD-oriented database code, the approach given by asyncio is never necessary, will almost certainly hinder performance, and arguments as to its promotion of “correctness” are very questionable in terms of relational database programming. Applications that need to do non-blocking IO on the front end should leave the business-level CRUD code behind the thread pool.
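
A minimal sketch of that split, assuming an asyncio front end and using the standard library's sqlite3 as a stand-in for a real database driver. The CRUD function is ordinary blocking DBAPI code; the coroutine hands it to a ThreadPoolExecutor through loop.run_in_executor(), so the event loop itself never blocks. The function names and the users table are hypothetical, chosen only for illustration.

```python
import asyncio
import os
import sqlite3
import tempfile
from concurrent.futures import ThreadPoolExecutor


def insert_user(db_path, name):
    """Blocking, business-level CRUD code: plain DBAPI calls, no async anywhere."""
    conn = sqlite3.connect(db_path, timeout=30)  # wait on SQLite's write lock if needed
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
        return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
    finally:
        conn.close()


async def handle_request(pool, db_path, name):
    """Async front end: await the blocking call while it runs in a worker thread."""
    loop = asyncio.get_running_loop()
    return await loop.run_in_executor(pool, insert_user, db_path, name)


async def serve(db_path, names, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        await asyncio.gather(*(handle_request(pool, db_path, n) for n in names))


def run_demo(names):
    """Create a scratch database, serve some 'requests', return the final row count."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "app.db")
        setup = sqlite3.connect(db_path)
        setup.execute("CREATE TABLE users (name TEXT)")
        setup.commit()
        asyncio.run(serve(db_path, names))
        count = setup.execute("SELECT COUNT(*) FROM users").fetchone()[0]
        setup.close()
    return count
```

The async layer only ever sees an awaitable; nothing in insert_user needs to know that an event loop exists.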

With my assumedly entirely unsurprising viewpoint revealed, let’s get underway!

What is Asynchronous IO?

Asynchronous IO is an approach used to achieve concurrency by allowing processing to continue while responses from IO operations are still being waited upon. To achieve this, IO function calls are made non-blocking, so that they return immediately, before the actual IO operation is complete or has even begun. A typically OS-dependent polling system (such as epoll) is used within a loop in order to query a set of file descriptors in search of the next one which has data available; when located, it is acted upon, and when the operation is complete, control goes back to the polling loop in order to act upon the next descriptor with data available.
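
A minimal sketch of such a polling loop, using Python's standard selectors module (DefaultSelector picks epoll on Linux where available). Socket pairs stand in for network connections, and each registered socket carries a callback that runs when the selector reports it readable. The function name is hypothetical.

```python
import selectors
import socket


def poll_until_drained(messages):
    """Register one non-blocking socket per message and dispatch callbacks as data arrives."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS, etc.
    received = []
    sockets = []

    def make_callback(sock):
        def on_readable():
            received.append(sock.recv(1024))  # data is ready, so this won't block
            sel.unregister(sock)              # done with this descriptor
        return on_readable

    try:
        for msg in messages:
            reader, writer = socket.socketpair()
            sockets.extend((reader, writer))
            reader.setblocking(False)  # IO calls on this socket return immediately
            sel.register(reader, selectors.EVENT_READ, make_callback(reader))
            writer.sendall(msg)

        # The polling loop: ask the OS which descriptors have data, act on them,
        # then go back to polling for the next ready descriptor.
        while sel.get_map():
            for key, _events in sel.select(timeout=1.0):
                key.data()
    finally:
        for s in sockets:
            s.close()
        sel.close()
    return received
```

The order of the returned messages depends on which descriptors the OS reports ready first.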

Non-blocking IO, in its classical use case, is for situations where it’s not efficient to dedicate a thread of execution to waiting for a socket to have results. It’s an essential technique when you need to listen to lots of TCP sockets that are arbitrarily “sleepy” or slow. The best example is a chat server, or some similar kind of messaging system, where you have lots of connections connected persistently, only sending data very occasionally; when a connection actually sends data, we consider it to be an “event” to be responded to.

In recent years, the asynchronous IO approach has also been successfully applied to HTTP related servers and applications. The theory of operation is that a very large number of HTTP connections can be efficiently serviced without the need for the server to dedicate threads to wait on each connection individually; in particular, slow HTTP clients need not get in the way of the server being able to serve lots of other clients at the same time. Combine this with the renewed popularity of so-called long polling approaches, and non-blocking web servers like nginx have proven to work very well.

Asynchronous IO and Scripting

Asynchronous IO programming in scripting languages is heavily centered on the notion of an event loop, which in its most classic form uses callback functions that receive a call once their corresponding IO request has data available. A critical aspect of this type of programming is that, since the event loop has the effect of providing scheduling for a series of functions waiting for IO, a scripting language in particular can replace the need for threads and OS-level scheduling entirely, at least within a single CPU. It can in fact be a little bit awkward to integrate multithreaded, blocking IO code with code that uses non-blocking IO, as they necessarily use different programming approaches when IO-oriented methods are invoked.

The relationship of asynchronous IO to event loops, combined with its growing popularity in web-server oriented applications and its ability to provide concurrency in an intuitive and obvious way, created a perfect storm of factors that made it popular on one platform in particular: Javascript. Javascript was designed to be a client side scripting language for browsers. Browsers, like any other GUI app, are essentially event machines; all they do is respond to user-initiated events of button pushes, key presses, and mouse moves. As a result, Javascript has a very strong concept of an event loop with callbacks and, until recently, no concept at all of multithreaded programming.

As an army of front-end developers from the 90’s through the 2000’s mastered the use of these client-side callbacks, and began to use them not just for user-initiated events but for network-initiated events via AJAX connections, the stage was set for a new player to come along, which would transport the ever growing community of Javascript programmers to a new place…

The Server

Node.js is not the first attempt to make Javascript a server side language. However, a key reason for its success was that there were plenty of sophisticated and experienced Javascript programmers around by the time it was released, and that it also fully embraces the event-driven programming paradigm that client-side Javascript programmers are already well-versed in and comfortable with.

In order to sell this, the “non-blocking IO” approach needed to be established as appropriate not just for the classic case of “tending to lots of usually asleep or arbitrarily slow connections”, but as the de facto style in which all web-oriented software should be written. This meant that any network IO of any kind now had to be interacted with in a non-blocking fashion, and this of course includes database connections. Yet database connections are normally relatively few per process (10-50 is common), are usually pooled so that the latency associated with TCP startup is not much of an issue, and, for a well-architected database naturally served over the local network behind the firewall and often clustered, have extremely fast and predictable response times. In every way, they are the exact opposite of the use case for which non-blocking IO was first intended. The Postgresql database supports an asynchronous command API in libpq, and states as a primary rationale for it (surprise!) its use in GUI applications.

Node.js already benefits from an extremely performant JIT-enabled engine, so it’s likely that, despite this repurposing of non-blocking IO for a case in which it was not intended, scheduling among database connections using non-blocking IO works acceptably well. (Author’s note: the comment here regarding libuv’s thread pool has been removed, as it only concerns file IO.)

The Spectre of Threads

Well before node.js was turning masses of client-side Javascript developers into async-only server side programmers, academic theorists had begun to complain that the multithreaded programming model produces non-deterministic programs. Because the event-driven paradigm effectively provides an alternative model of programming concurrency (at least for any program with a sufficient proportion of IO to keep context switches high enough), asynchronous programming quickly became one of several hammers used to beat multithreaded programming over the head. The beating centered on two critiques: first, that threads are expensive to create and maintain in an application, making them inappropriate for applications that wish to tend to hundreds or thousands of connections simultaneously; and second, that multithreaded programming is difficult and non-deterministic. In the Python world, continued confusion over what the GIL does and does not do tilled the land, leaving it fertile for the async model to take root more strongly than it might have in other scenarios.

How do you like your Spaghetti?

The callback style of node.js and other asynchronous paradigms was considered to be problematic; callbacks organized for larger scale logic and operations made for verbose and hard-to-follow code, commonly referred to as callback spaghetti. Whether callbacks were in fact spaghetti or a thing of beauty was one of the great arguments of the 2000’s, however I fortunately don’t have to get into it because the async community has clearly acknowledged the former and taken many great steps to improve upon the situation.

In the Python world, one approach offered in order to allow for asynchronous IO while removing the need for callbacks is the “implicit async IO” approach offered by eventlet and gevent. These take the approach of instrumenting IO functions to be implicitly non-blocking, organized so that a system of green threads can run concurrently, using a native event library such as libev to schedule work between green threads based on the points at which non-blocking IO is invoked. The effect of implicit async IO systems is that the vast majority of code which performs IO operations need not be changed at all; in most cases, the same code can literally be used in both blocking and non-blocking IO contexts without any changes (though in typical real-world use cases, certainly not without occasional quirks).

In contrast to implicit async IO is the very promising approach offered by Python itself in the form of the previously mentioned asyncio library, part of the standard library as of Python 3.4. Asyncio brings fully standardized concepts of “futures” and coroutines to Python, letting us produce non-blocking IO code that flows in a very similar way to traditional blocking code, while still keeping explicit the points at which non-blocking operations occur.
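
To make the contrast with callbacks concrete, here is a minimal sketch of the same two-step lookup written first in callback style and then as asyncio coroutines. The fetch_user/fetch_orders steps are hypothetical and simulated in memory. The coroutine version reads top to bottom like blocking code, yet every await marks an explicit point where the event loop may switch to other work. It uses the async/await syntax that later replaced the generator-based @asyncio.coroutine / yield from style.

```python
import asyncio


# Callback style: each step receives "what to do next" as a function.
def fetch_user_cb(loop, user_id, callback):
    loop.call_soon(callback, {"id": user_id, "name": f"user{user_id}"})


def fetch_orders_cb(loop, user, callback):
    loop.call_soon(callback, [f"{user['name']}-order-1"])


def report_cb(loop, user_id, done):
    def on_user(user):
        def on_orders(orders):
            done.set_result((user["name"], orders))
        fetch_orders_cb(loop, user, on_orders)
    fetch_user_cb(loop, user_id, on_user)


async def run_callback_version(user_id):
    loop = asyncio.get_running_loop()
    done = loop.create_future()
    report_cb(loop, user_id, done)
    return await done


# Coroutine style: the same steps, written linearly with explicit await points.
async def fetch_user(user_id):
    await asyncio.sleep(0)  # stands in for waiting on the network
    return {"id": user_id, "name": f"user{user_id}"}


async def fetch_orders(user):
    await asyncio.sleep(0)
    return [f"{user['name']}-order-1"]


async def report(user_id):
    user = await fetch_user(user_id)
    orders = await fetch_orders(user)
    return user["name"], orders


print(asyncio.run(run_callback_version(1)))
print(asyncio.run(report(1)))
```

Both versions produce the same result; only the shape of the code differs, and the nesting in report_cb grows with every additional step.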

SQLAlchemy? Asyncio? Yes?

Now that asyncio is part of Python, it’s a common integration point for all things async. Because it maintains the concepts of meaningful return values and exception catching semantics, getting an asyncio version of SQLAlchemy to work for real is probably feasible; it will still require at least several external modules that re-implement key methods of SQLAlchemy Core and ORM in terms of async results, but it seems that the majority of code, even within execution-centric parts, can stay much the same. It no longer means a rewrite of all of SQLAlchemy, and the async aspects should be able to remain entirely outside of the central library itself. I’ve started playing with this. It will be a lot of effort but should be doable, even for the ORM where some of the patterns like “lazy loading” will just have to work in some more verbose way.

However, I don’t know that you would really want to use an async-enabled form of SQLAlchemy in general.

Taking Async Web Scale

As anticipated, let’s get into where it’s all going wrong, especially for database-related code.

Issue One – Async as Magic Performance Fairy Dust

Many (but certainly not all) within both the node.js community and the Python community continue to claim that asynchronous programming styles are innately superior for concurrent performance in nearly all cases. In particular, there’s the notion that the context switching approaches of explicit async systems such as asyncio can be had virtually for free, and since Python has a GIL, all of that adds up, in some unspecified, non-illustrated, apples-to-oranges way, to establish that asyncio will totally, definitely be faster than using any kind of threaded approach, or at the very least, not any slower. Therefore any web application should as quickly as possible be converted to use a front-to-back async approach for everything, from HTTP request to database calls, and performance enhancements will come for free.

I will address this only in terms of database access. For HTTP or “chat” server styles of communication, either listening as a server or making client calls, asyncio may very well be superior, as it allows many more sleepy or arbitrarily slow connections to be tended to in a simple way. But for local database access, this is just not the case.

1. Python is Very, Very Slow compared to your database

Update – redditor Riddlerforce found valid issues with this section, in that I was not testing over a network connection. Results here are updated. The conclusion is the same, but not as hyperbolically amusing as it was before.

Let’s first review asynchronous programming’s sweet spot, the I/O Bound application:

I/O Bound refers to a condition in which the time it takes to complete a computation is determined principally by the period spent waiting for input/output operations to be completed. This circumstance arises when the rate at which data is requested is slower than the rate it is consumed or, in other words, more time is spent requesting data than processing it.

A great misconception I seem to encounter often is the notion that communication with the database takes up the majority of the time spent in a database-centric Python application. This is perhaps common wisdom in compiled languages such as C or maybe even Java, but it generally does not hold in Python. Python is very slow compared to such systems; and while Pypy is certainly a big help, Python is not nearly as fast as your database when dealing with standard CRUD-style applications (meaning: not running large OLAP-style queries, and of course assuming relatively low network latencies). As I worked up in my PyMySQL Evaluation for Openstack, whether a database driver (DBAPI) is written in pure Python or in C makes a big difference: a pure Python driver incurs significant additional Python-level overhead. For the DBAPI alone, this can be as much as an order of magnitude slower. While network overhead produces more balanced proportions between CPU and IO, the CPU time spent by the Python driver itself still takes up about twice as much time as the network IO, and that is without any additional database abstraction libraries, business logic, or presentation logic in place.

This script, adapted from the Openstack entry, illustrates a pretty straightforward set of INSERT and SELECT statements, and virtually no Python code other than the barebones explicit calls into the DBAPI.

MySQL-Python (MySQLdb), a DBAPI written in C, runs it as follows over a network:

DBAPI (cProfile): <module 'MySQLdb'>
47503 function calls in 14.863 seconds

DBAPI (straight time): <module 'MySQLdb'>, total seconds 12.962214

With PyMySQL, a pure-Python DBAPI, over a network connection we’re roughly 35% slower by straight time:

DBAPI (cProfile): <module 'pymysql'>
23807673 function calls in 21.269 seconds

DBAPI (straight time): <module 'pymysql'>, total seconds 17.699732

Running against a local database, PyMySQL is an order of magnitude slower than MySQLdb:

DBAPI: <module 'pymysql'>, total seconds 9.121727
DBAPI: <module 'MySQLdb'>, total seconds 1.025674

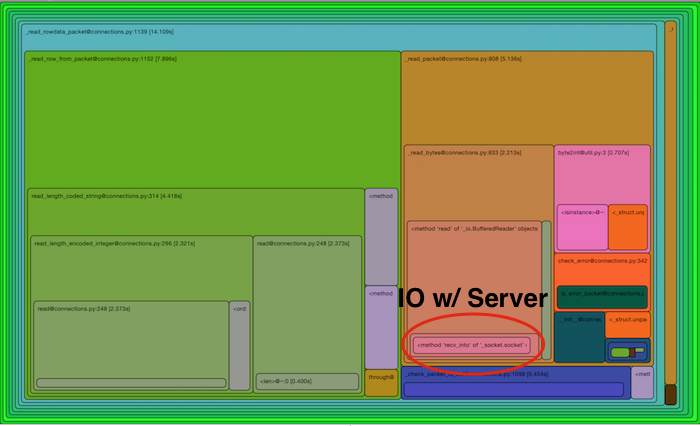

To highlight the actual proportion of these runs that’s spent in IO, the following two RunSnakeRun displays illustrate how much time within the PyMySQL run is actually spent on IO, both for a local database and over a network connection. The proportion is not as dramatic over a network connection, but even in that case network calls take only about one third of the total time; the other two thirds are spent in Python crunching the results. Keep in mind this is just the DBAPI alone; a real world application would have database abstraction layers, business and presentation logic surrounding these calls as well:

Local connection – clearly not IO bound.

Network connection – not as dramatic, but still not IO bound (8.7 sec of socket time vs. 24 sec for the overall execute)
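
The ratios behind these statements can be checked directly from the timings quoted above. A small calculation, using only those figures:

```python
# Timings quoted above, in seconds.
pymysql_local, mysqldb_local = 9.121727, 1.025674
pymysql_net, mysqldb_net = 17.699732, 12.962214
socket_time, total_time = 8.7, 24.0  # PyMySQL over the network, from the profile

local_slowdown = pymysql_local / mysqldb_local    # pure Python vs. C driver, local DB
network_slowdown = pymysql_net / mysqldb_net      # the same comparison over a network
io_fraction = socket_time / total_time            # share of the run spent in socket IO
cpu_to_io = (total_time - socket_time) / socket_time  # non-IO time relative to IO time

print(f"local: PyMySQL is {local_slowdown:.1f}x slower than MySQLdb")
print(f"network: PyMySQL is {network_slowdown:.2f}x slower than MySQLdb")
print(f"network run: {io_fraction:.0%} IO, {1 - io_fraction:.0%} everything else")
print(f"non-IO time is {cpu_to_io:.2f}x the IO time")
```

This gives roughly 8.9x locally (the "order of magnitude"), about 1.37x over the network, and about 36% of the network run spent in socket IO, with the remaining time about 1.76x the IO time.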

Let’s be clear: when using Python, calls to your database do not typically produce an IO bound effect, unless you’re making lots of complex analytical calls with enormous result sets (which you would normally not be doing in a high performing application), or unless you have a very slow network. When we talk to databases, we are almost always using some form of connection pooling, so the overhead of connecting is already mitigated to a large extent; the database itself can select and insert small numbers of rows very fast on a reasonable network. The overhead of Python itself, just to marshal messages over the wire and produce result sets, gives the CPU plenty of work to do, which removes any unique throughput advantages to be had with non-blocking IO. With real-world activities based around database operations, the proportion spent in CPU only increases.
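
For reference, a minimal sketch of the kind of connection pooling described above. It assumes a hypothetical SimplePool class built on queue.LifoQueue, with sqlite3 in-memory connections standing in for real database connections. Production pools (SQLAlchemy's QueuePool, for example) layer overflow, recycling and pre-ping handling on top of the same basic idea: connections are created once and reused, so per-request connect latency disappears.

```python
import queue
import sqlite3
from contextlib import contextmanager


class SimplePool:
    """Hypothetical minimal pool: a fixed set of connections reused across requests."""

    def __init__(self, connect, size):
        self._idle = queue.LifoQueue(maxsize=size)
        for _ in range(size):
            self._idle.put(connect())  # pay the connection cost once, up front

    @contextmanager
    def connection(self, timeout=None):
        conn = self._idle.get(timeout=timeout)  # blocks while all are checked out
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it for the next caller instead of closing

    def close(self):
        while True:
            try:
                self._idle.get_nowait().close()
            except queue.Empty:
                break


pool = SimplePool(lambda: sqlite3.connect(":memory:", check_same_thread=False), size=2)
with pool.connection() as conn:
    print(conn.execute("SELECT 1").fetchone())
pool.close()
```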

2. AsyncIO uses appealing, but relatively inefficient, Python paradigms

At the core of asyncio is that we are using the @asyncio.coroutine decorator, which does some generator tricks in order to have your otherwise synchronous looking function defer to other coroutines. Central to this is the yield from technique, which causes the function to stop its execution at that point, while other things go on until the event loop comes back to that point. This is a great idea, and it can also be done using the more common yield statement as well. However, using yield from, we are able to maintain at least the appearance of the presence of return values:

@asyncio.coroutine
def some_coroutine():
    conn = yield from db.connect()
    return conn
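
The @asyncio.coroutine decorator was deprecated in Python 3.8 and removed in 3.11; the same pattern is now written with async def and await. A minimal sketch, assuming a hypothetical FakeDB class in place of a real async driver, also makes visible the mechanism discussed next: when a coroutine finishes, its return value travels out inside a StopIteration exception.

```python
import asyncio


class FakeDB:
    """Hypothetical stand-in for an async driver; not a real library API."""

    async def connect(self):
        # A real driver would suspend here while waiting on the socket.
        return "connection"


db = FakeDB()


async def some_coroutine():
    conn = await db.connect()
    return conn


def drive_by_hand(coro):
    """Step a coroutine without an event loop and pull out its return value."""
    try:
        coro.send(None)
    except StopIteration as stop:
        return stop.value  # "return conn" arrives here as StopIteration.value
    coro.close()
    raise RuntimeError("coroutine suspended; it needs a real event loop")


print(asyncio.run(some_coroutine()))
print(drive_by_hand(some_coroutine()))
```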

That syntax is fantastic, and I like it a lot, but unfortunately, the mechanism of that return conn statement is necessarily that it raises a StopIteration exception. Combine this with the fact that each yield from call adds roughly the overhead of a separate function call, and the costs add up. I tweeted a simple demonstration of this, which I include here in abbreviated form:

The results we get are that the do-nothing yield from + StopIteration take about six times longer:

yield from: 12.52761328802444

normal: 2.110536064952612
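
A minimal sketch of this kind of micro-benchmark, written as an illustration rather than a copy of the original tweeted code: one path returns a value through a generator driven with yield from, which delivers it via StopIteration, and the other is an ordinary function call. Absolute timings, and the ratio between them, will vary by interpreter and machine.

```python
import timeit


def plain_call():
    # An ordinary function returning a value directly.
    return 1


def gen_inner():
    # A generator that returns immediately; "return 1" becomes StopIteration(1).
    return 1
    yield  # unreachable, but makes this function a generator


def gen_outer():
    # "yield from" drives gen_inner and captures its return value.
    value = yield from gen_inner()
    return value


def drive(gen):
    """Run a generator to completion and extract its return value, as an event loop would."""
    try:
        while True:
            next(gen)
    except StopIteration as stop:
        return stop.value


def via_yield_from():
    return drive(gen_outer())


def via_plain_call():
    return plain_call()


if __name__ == "__main__":
    n = 1_000_000
    print("yield from:", timeit.timeit(via_yield_from, number=n))
    print("normal:    ", timeit.timeit(via_plain_call, number=n))
```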

To which many people said to me, “so what? Your database call takes up much more of the time.” Never mind that we’re not talking here about an approach to optimize existing code, but about avoiding making perfectly good code slower than it already is. The PyMySQL example should illustrate that Python overhead adds up very fast, even just within a pure Python driver, and in the overall profile dwarfs the time spent within the database itself. However, this argument still may not be convincing enough.

So, I will here present a comprehensive test suite which illustrates traditional threads in Python against asyncio, as well as gevent style nonblocking IO. We will use psycopg2, which is currently the only production DBAPI that even supports async, in conjunction with aiopg, which adapts psycopg2’s async support to asyncio, and psycogreen, which adapts it to gevent.

The purpose of the test suite is to load a few million rows into a Postgresql database as fast as possible, while using the same general set of SQL instructions, so that we can see whether the GIL in fact slows us down so much that asyncio blows right past us with ease. The suite can use any number of connections simultaneously; at the highest, I boosted it to 350 concurrent connections, which, trust me, will not make your DBA happy at all.
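
The suite itself targets Postgresql through psycopg2, aiopg and psycogreen. As a rough sketch of just the threaded side of such a load test, assuming the standard library's sqlite3 in place of Postgresql: each worker thread owns its own connection and inserts its rows in batches, and the harness reports rows per second. SQLite allows only one writer at a time, so unlike Postgresql it will not show write parallelism, and its numbers are not comparable to the results below. The table and function names are hypothetical.

```python
import os
import sqlite3
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor


def load_rows(db_path, worker_id, rows, batch_size):
    """One worker thread: its own connection, inserting its rows in batches."""
    conn = sqlite3.connect(db_path, timeout=30)  # wait on SQLite's writer lock
    try:
        for start in range(0, rows, batch_size):
            batch = [(worker_id, seq) for seq in range(start, min(start + batch_size, rows))]
            with conn:  # one transaction per batch
                conn.executemany("INSERT INTO data (worker, seq) VALUES (?, ?)", batch)
    finally:
        conn.close()
    return rows


def run_load(num_workers=8, rows_per_worker=1000, batch_size=100):
    """Run the threaded load and return (rows inserted, rows counted, elapsed seconds)."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "load.db")
        setup = sqlite3.connect(db_path)
        setup.execute("CREATE TABLE data (worker INTEGER, seq INTEGER)")
        setup.commit()

        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=num_workers) as pool:
            futures = [pool.submit(load_rows, db_path, w, rows_per_worker, batch_size)
                       for w in range(num_workers)]
            inserted = sum(f.result() for f in futures)
        elapsed = time.perf_counter() - start

        counted = setup.execute("SELECT COUNT(*) FROM data").fetchone()[0]
        setup.close()
    return inserted, counted, elapsed


if __name__ == "__main__":
    inserted, counted, elapsed = run_load()
    print(f"{counted} rows in {elapsed:.2f}s ({counted / elapsed:,.0f} rows/sec)")
```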

The results of several runs on different machines under different conditions are summarized at the bottom of the README. The best performance I could get was running the Python code on one laptop interfacing to the Postgresql database on another, but in virtually every test I ran, whether I ran just 15 threads/coroutines on my Mac, or 350 (!) threads/coroutines on my Linux laptop, threaded code got the job done much faster than asyncio in every case (including the 350 threads case, to my surprise), and usually faster than gevent as well. Below are the results from running 120 threads/processes/connections on the Linux laptop networked to the Postgresql database on a Mac laptop:

Python2.7.8 threads (22k r/sec, 22k r/sec)

Python3.4.1 threads (10k r/sec, 21k r/sec)

Python2.7.8 gevent (18k r/sec, 19k r/sec)

Python3.4.1 asyncio (8k r/sec, 10k r/sec)

Above, we see asyncio significantly slower for the first part of the run (Python 3.4 seemed to have some issue here in both threaded and asyncio), and for the second part, fully twice as slow compared to both Python2.7 and Python3.4 interpreters using threads. Even running 350 concurrent connections, which is way more than you’d usually ever want a single process to run, asyncio could hardly approach the efficiency of threads. Even with the very fast and pure C-code psycopg2 driver, just the overhead of the aiopg library on top combined with the need for in-Python receipt of polling results with psycopg2’s asynchronous library added more than enough Python overhead to slow the script right down.

Remember, I wasn’t even trying to prove that asyncio is significantly slower than threads, only that it wasn’t any faster. The results I got were more dramatic than I expected. We also see that an extremely low-latency async approach, e.g. that of gevent, is slower than threads, but not by much. This confirms, first, that async IO is definitely not faster in this scenario; and second, since asyncio is so much slower than gevent, that it is in fact the in-Python overhead of asyncio’s coroutines and other Python constructs that likely adds very significant latency on top of the latency of less efficient IO-based context switching.

Issue Two – Async as Making Coding Easier

This is the flip side of the “magic fairy dust” coin. This argument expands upon the “threads are bad” rhetoric, and in its most extreme form holds that if a program at some level happens to spawn a thread, such as if you wrote a WSGI application and happen to run it under mod_wsgi using a threadpool, you are now doing “threaded programming” of a caliber just as difficult as if you were doing POSIX threading exercises throughout your code. Despite the fact that a WSGI application should not have the slightest mention of in-process shared and mutable state within it, nope, you’re doing threaded programming, threads are hard, and you should stop.
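
To illustrate the point, a minimal sketch of a WSGI application with no shared mutable state; the application and helper names are hypothetical. Every request works only with its own environ and local variables, so running it from a pool of threads, roughly as a threaded server such as mod_wsgi would, requires no locking at all.

```python
from concurrent.futures import ThreadPoolExecutor
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    # Everything here is local to this request: no globals, no shared caches.
    name = environ.get("QUERY_STRING") or "world"
    body = f"hello {name}".encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8"),
                              ("Content-Length", str(len(body)))])
    return [body]


def call_app(query):
    """Invoke the app directly with a minimal test environ (hypothetical helper)."""
    environ = {}
    setup_testing_defaults(environ)
    environ["QUERY_STRING"] = query
    captured = []

    def start_response(status, headers, exc_info=None):
        captured.append(status)
        return lambda data: None  # WSGI's write() callable, unused here

    body = b"".join(application(environ, start_response))
    return captured[0], body


def serve_concurrently(queries, workers=4):
    """Run many requests through the same app from a thread pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(call_app, queries))
```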

The “threads are bad” argument has an interesting twist (ha!), which is that it is being used by explicit async advocates to argue against implicit async techniques. Glyph’s Unyielding post makes exactly this point very well. The premise goes that if you’ve accepted that threaded concurrency is a bad thing, then using the implicit style of async IO is just as bad, because at the end of the day, the code looks the same as threaded code, and because IO can happen anywhere, it’s just as non-deterministic as using traditional threads. I would happen to agree with this, that yes, the problems of concurrency in a gevent-like system are just as bad, if not worse, than a threaded system. One reason is that concurrency problems in threaded Python are fairly “soft” because already the GIL, as much as we hate it, makes all kinds of normally disastrous operations, like appending to a list, safe. But with green threads, you can easily have hundreds of them without breaking a sweat and you can sometimes stumble across pretty weird issues that are normally not possible to encounter with traditional, GIL-protected threads.
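
A small demonstration of the "soft" end of this claim, assuming CPython with the GIL: many threads appending to one shared list never lose an element, because a single list.append cannot be interleaved with another thread's append. A compound read-modify-write such as counter += 1 carries no such guarantee, which is where real threading bugs still live.

```python
import threading


def append_concurrently(num_threads=8, per_thread=10_000):
    shared = []  # one list mutated by every thread, with no explicit lock

    def worker(tid):
        for i in range(per_thread):
            shared.append((tid, i))  # a single list.append is atomic under the GIL

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared
```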

As an aside, it should be noted that Glyph takes a direct swipe at the “magic fairy dust” crowd:

Unfortunately, “asynchronous” systems have often been evangelized by emphasizing a somewhat dubious optimization which allows for a higher level of I/O-bound concurrency than with preemptive threads, rather than the problems with threading as a programming model that I’ve explained above. By characterizing “asynchronousness” in this way, it makes sense to lump all 4 choices together.

I’ve been guilty of this myself, especially in years past: saying that a system using Twisted is more efficient than one using an alternative approach using threads. In many cases that’s been true, but:

- the situation is almost always more complicated than that, when it comes to performance,

- “context switching” is rarely a bottleneck in real-world programs, and

- it’s a bit of a distraction from the much bigger advantage of event-driven programming, which is simply that it’s easier to write programs at scale, in both senses (that is, programs containing lots of code as well as programs which have many concurrent users).

People will quote Glyph’s post when they want to argue that you’ll have fewer bugs in your program once you switch to asyncio, yet continue to promise greater performance as well, for some reason ignoring this part of a very well-written post.

Glyph makes a great, and very clear, argument for the twin points that both non-blocking IO should be used, and that it should be explicit. But the reasoning has nothing to do with non-blocking IO’s original beginnings as a reasonable way to process data from a large number of sleepy and slow connections. It instead has to do with the nature of the event loop and how an entirely new concurrency model, removing the need to expose OS-level context switching, is emergent.

While we’ve come a long way from writing callbacks, and with approaches like asyncio can once again write code that looks very linear, Glyph’s position is that the approach should still require the programmer to explicitly mark every function call where IO is known to occur. His argument begins with the following example:

    def transfer(amount, payer, payee, server):
        # check, then act: in threaded code, another thread can run in between
        if not payer.sufficient_funds_for_withdrawal(amount):
            raise InsufficientFunds()
        log("{payer} has sufficient funds.", payer=payer)
        payee.deposit(amount)
        log("{payee} received payment", payee=payee)
        payer.withdraw(amount)
        log("{payer} made payment", payer=payer)
        server.update_balances([payer, payee])

From a threaded perspective, the concurrency mistake here is that if two threads both run transfer(), both may pass the funds check and then both withdraw from payer, leaving payer overdrawn without InsufficientFunds ever being raised.
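The following sketch reproduces that race with plain threads. Account, racy_withdraw() and demo() are hypothetical stand-ins for Glyph’s payer object, not code from either post. Each individual method is protected by a lock, yet the check-then-act sequence still races; a threading.Barrier forces both threads to finish the funds check before either withdraws, so the overdraft happens on every run rather than only under unlucky timing.

```python
import threading


class InsufficientFunds(Exception):
    pass


class Account:
    """Hypothetical in-memory stand-in for Glyph's payer object."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self._lock = threading.Lock()

    def sufficient_funds_for_withdrawal(self, amount: int) -> bool:
        with self._lock:  # each single operation is protected...
            return self.balance >= amount

    def withdraw(self, amount: int) -> None:
        with self._lock:
            self.balance -= amount


def racy_withdraw(account, amount, barrier, errors):
    # ...but the check-then-act sequence as a whole is not.
    if not account.sufficient_funds_for_withdrawal(amount):
        errors.append(InsufficientFunds())
        return
    # Make both threads finish their checks before either one withdraws,
    # reproducing the unlucky interleaving on every run.
    barrier.wait()
    account.withdraw(amount)


def demo() -> tuple[int, int]:
    payer = Account(100)
    barrier = threading.Barrier(2)
    errors: list[Exception] = []
    threads = [
        threading.Thread(target=racy_withdraw, args=(payer, 70, barrier, errors))
        for _ in range(2)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return payer.balance, len(errors)


if __name__ == "__main__":
    print(demo())  # (-40, 0): overdrawn, and InsufficientFunds never raised
```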

The explicit async version is then:
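The code for this version did not survive in this copy of the post. Going by the description that follows, it is the same function with only the final call going through yield from; the sketch below is a runnable reconstruction under that assumption, written with today’s async/await spelling (await plays the role of yield from, since the generator-based @asyncio.coroutine decorator was removed in Python 3.11). Account, Server, log() and InsufficientFunds are hypothetical stand-ins.

```python
import asyncio


class InsufficientFunds(Exception):
    pass


def log(message: str, **kwargs) -> None:
    print(message.format(**kwargs))


class Account:
    """Hypothetical in-memory account; none of its methods do IO."""

    def __init__(self, name: str, balance: int) -> None:
        self.name = name
        self.balance = balance

    def __str__(self) -> str:
        return self.name

    def sufficient_funds_for_withdrawal(self, amount: int) -> bool:
        return self.balance >= amount

    def deposit(self, amount: int) -> None:
        self.balance += amount

    def withdraw(self, amount: int) -> None:
        self.balance -= amount


class Server:
    """Hypothetical remote service; update_balances() is the only IO."""

    async def update_balances(self, accounts) -> None:
        await asyncio.sleep(0)  # stands in for a network round trip


async def transfer(amount, payer, payee, server):
    # Nothing is awaited until the last line, so no other task in this event
    # loop can run between the funds check and the withdrawal.
    if not payer.sufficient_funds_for_withdrawal(amount):
        raise InsufficientFunds()
    log("{payer} has sufficient funds.", payer=payer)
    payee.deposit(amount)
    log("{payee} received payment", payee=payee)
    payer.withdraw(amount)
    log("{payer} made payment", payer=payer)
    await server.update_balances([payer, payee])  # the only switch point


async def main():
    payer, payee, server = Account("alice", 100), Account("bob", 0), Server()
    results = await asyncio.gather(
        transfer(70, payer, payee, server),
        transfer(70, payer, payee, server),
        return_exceptions=True,  # collect InsufficientFunds instead of raising
    )
    return payer.balance, payee.balance, results


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Run concurrently, exactly one of the two transfers succeeds and the other raises InsufficientFunds, because the second one performs its check only after the first has already withdrawn.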

Now, within the scope of the process we’re in, we know that we only allow anything else to happen at the very bottom, when we call yield from server.update_balances(). There is no chance that any other concurrent call to payer.withdraw() can occur while we’re in the function’s body and have not yet reached the server.update_balances() call.

He then makes a clear point as to why even implicit, gevent-style async isn’t sufficient. In the above program, payee.deposit() and payer.withdraw() are not called with yield from, so we are assured that no future version of these calls can perform IO that would break into our scheduling and potentially run another transfer() before ours is complete: if either of them ever needed to do IO, it would have to become a coroutine, and its call sites would have to change to use yield from.
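To see what this guards against, the sketch below simulates a later version of the code in which deposit() starts doing IO. In asyncio that IO has to show up as an explicit await (simulated here with asyncio.sleep(0); deposit_with_io() is a hypothetical name), and that suspension point is exactly where a second transfer() slips in and runs its check against the old balance. Under gevent-style implicit async the same switch would happen with no visible change at the call site.

```python
import asyncio


class Account:
    """Hypothetical in-memory account."""

    def __init__(self, balance: int) -> None:
        self.balance = balance


class InsufficientFunds(Exception):
    pass


async def deposit_with_io(account: Account, amount: int) -> None:
    # Hypothetical "future version" of deposit() that now talks to the network.
    await asyncio.sleep(0)  # stands in for a real network call
    account.balance += amount


async def transfer(amount: int, payer: Account, payee: Account) -> None:
    if payer.balance < amount:
        raise InsufficientFunds()
    # This await is a new switch point between the check and the withdrawal:
    # another transfer() can run its own check here, against the old balance.
    await deposit_with_io(payee, amount)
    payer.balance -= amount


async def main() -> tuple[int, int]:
    payer, payee = Account(100), Account(0)
    await asyncio.gather(transfer(70, payer, payee), transfer(70, payer, payee))
    return payer.balance, payee.balance


if __name__ == "__main__":
    print(asyncio.run(main()))  # (-40, 140): both checks passed
```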

(As an aside, I’m not actually sure, in the realm of “we had to type yield from and that’s how we stay aware of what’s going on”, why the yield from needs to be a real, structural part of the program and not just, for example, a magic comment consumed by a gevent/eventlet-integrated linter that tests callstacks for IO and verifies that the corresponding source code has been annotated with special comments, as that would have the identical effect without impacting any libraries outside of that system and without incurring all the Python performance overhead of explicit async. But that’s a different topic.)

Regardless of the style of explicit coroutine, there are two flaws with this approach.

One is that asyncio makes it so easy to type out yield from that the idea that it prevents us from making mistakes loses a lot of its plausibility. A commenter on Hacker News made this great point about the notion of asynchronous code being easier to debug:

It’s basically, “I want context switches syntactically explicit in my code. If they aren’t, reasoning about it is exponentially harder.”

And I think that’s pretty clearly a strawman. Everything the author claims about threaded code is true of any re-entrant code, multi-threaded or not. If your function inadvertently calls a function which calls the original function recursively, you have the exact same problem.

But, guess what, that just doesn’t happen that often. Most code isn’t re-entrant. Most state isn’t shared.

For code that is concurrent and does interact in interesting ways, you are going to have to reason about it carefully. Smearing “yield from” all over your code doesn’t solve.

In practice, you’ll end up with so many “yield from” lines in your code that you’re right back to “well, I guess I could context switch just about anywhere”, which is the problem you were trying to avoid in the first place.

In my benchmark code, one can see this last point is exactly true. Here’s a bit of the threaded version:

    cursor.execute(
        "select id from geo_record where fileid=%s and logrecno=%s",
        (item['fileid'], item['logrecno'])
    )
    row = cursor.fetchone()
    geo_record_id = row[0]

    cursor.execute(
        "select d.id, d.index from dictionary_item as d "
        "join matrix as m on d.matrix_id=m.id where m.segment_id=%s "
        "order by m.sortkey, d.index",
        (item['cifsn'],)
    )
    dictionary_ids = [
        row[0] for row in cursor
    ]

    assert len(dictionary_ids) == len(item['items'])

    for dictionary_id, element in zip(dictionary_ids, item['items']):
        cursor.execute(
            "insert into data_element "
            "(geo_record_id, dictionary_item_id, value) "
            "values (%s, %s, %s)",
            (geo_record_id, dictionary_id, element)
        )

Here’s a bit of the asyncio version:
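The asyncio snippet itself is missing from this copy. As a hedged illustration of the point that follows, the sketch below writes a simplified version of the same lookup-then-insert pattern twice against an in-memory SQLite database, once blocking and once async. The two-table schema, the use of enumerate() in place of the dictionary_item lookup, and the AsyncCursor wrapper (which simply runs each sqlite3 call through asyncio.to_thread()) are all assumptions made so the example runs with the standard library; sqlite3 uses ? placeholders where the original code uses %s.

```python
import asyncio
import sqlite3

# Simplified, hypothetical schema standing in for the benchmark's tables.
SCHEMA = """
create table geo_record (id integer primary key, fileid text, logrecno integer);
create table data_element (geo_record_id integer, dictionary_item_id integer, value text);
insert into geo_record (id, fileid, logrecno) values (1, 'f1', 42);
"""


def load_item(cursor, item):
    """Blocking version: one query after another, in order."""
    cursor.execute(
        "select id from geo_record where fileid=? and logrecno=?",
        (item["fileid"], item["logrecno"]),
    )
    geo_record_id = cursor.fetchone()[0]
    for dictionary_id, element in enumerate(item["items"], start=1):
        cursor.execute(
            "insert into data_element (geo_record_id, dictionary_item_id, value) "
            "values (?, ?, ?)",
            (geo_record_id, dictionary_id, element),
        )


class AsyncCursor:
    """Hypothetical async wrapper: runs each blocking sqlite3 call in a thread."""

    def __init__(self, cursor):
        self._cursor = cursor

    async def execute(self, sql, params=()):
        await asyncio.to_thread(self._cursor.execute, sql, params)

    async def fetchone(self):
        return await asyncio.to_thread(self._cursor.fetchone)


async def load_item_async(cursor, item):
    """Async version: the same queries, in the same order, each behind an await."""
    await cursor.execute(
        "select id from geo_record where fileid=? and logrecno=?",
        (item["fileid"], item["logrecno"]),
    )
    geo_record_id = (await cursor.fetchone())[0]
    for dictionary_id, element in enumerate(item["items"], start=1):
        await cursor.execute(
            "insert into data_element (geo_record_id, dictionary_item_id, value) "
            "values (?, ?, ?)",
            (geo_record_id, dictionary_id, element),
        )


def demo() -> int:
    # check_same_thread=False lets the worker threads reuse this connection.
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.executescript(SCHEMA)
    item = {"fileid": "f1", "logrecno": 42, "items": ["a", "b", "c"]}
    load_item(conn.cursor(), item)
    asyncio.run(load_item_async(AsyncCursor(conn.cursor()), item))
    return conn.execute("select count(*) from data_element").fetchone()[0]
```

Apart from the await keywords, the two functions are line-for-line identical.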

Notice how they look exactly the same? The fact that yield from is present is not in any way changing the code that I write, or the decisions that I make – this is because in boring database code, we basically need to do the queries that we need to do, in order. I’m not going to try to weave an intelligent, thoughtful system of in-process concurrency into how I call into the database or not, or try to repurpose when I happen to need database data as a means of also locking out other parts of my program; if I need data I’m going to call for it.

Whether or not that’s compelling, it doesn’t actually matter – using async or mutexes or whatever inside our program to control concurrency is in fact completely insufficient in any case. Instead, there is of course something we absolutely must always do in real world boring database code in the name of concurrency, and that is:

Database Code Handles Concurrency through ACID, Not In-Process Synchronization

Whether we use threaded code or coroutines with implicit or explicit IO, and whether or not we manage to find all the race conditions that would occur within our own process, none of that matters if the thing we’re talking to is a relational database. This is especially true in today’s world, where everything runs in clustered / horizontal / distributed ways: the handwringing of academic theorists over the non-deterministic nature of threads is just the tip of the iceberg. We need to deal with entirely distinct processes, and regardless of what’s said, non-determinism is here to stay.

For database code, you have exactly one technique for ensuring correct concurrency, and that is using ACID-oriented constructs and techniques. Unfortunately, these don’t come magically or via any known silver bullet, though there are great tools designed to help steer you in the right direction.
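As a small illustration of what an ACID construct buys you, the sketch below uses Python’s built-in sqlite3 module, where using the connection as a context manager commits on success and rolls back if an exception escapes the block. The account table, transfer_then_fail() and the simulated crash are hypothetical; the point is only that a half-finished transfer leaves no trace.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("create table account (name text primary key, balance integer not null)")
    conn.executemany("insert into account values (?, ?)", [("alice", 100), ("bob", 0)])
    conn.commit()
    return conn


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("select name, balance from account order by name"))


def transfer_then_fail(conn: sqlite3.Connection, amount: int) -> None:
    # Using the connection as a context manager commits if the block succeeds
    # and rolls back if an exception escapes it.
    with conn:
        conn.execute(
            "update account set balance = balance + ? where name = 'bob'", (amount,)
        )
        # Simulated failure after the deposit but before the withdrawal.
        raise RuntimeError("crash before the withdrawal")


def demo() -> dict:
    conn = setup()
    try:
        transfer_then_fail(conn, 70)
    except RuntimeError:
        pass
    return balances(conn)  # the deposit was rolled back with everything else


if __name__ == "__main__":
    print(demo())  # {'alice': 100, 'bob': 0}
```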

All of the example transfer() functions above are incorrect from a database perspective. Here is the correct one:

    def transfer(amount, payer, payee, server):
        with transaction.begin():
            # lock=True: read the balance under a row lock so it cannot change
            # between this check and the withdrawal below
            if not payer.sufficient_funds_for_withdrawal(amount, lock=True):
                raise InsufficientFunds()
            log("{payer} has sufficient funds.", payer=payer)
            payee.deposit(amount)
            log("{payee} received payment", payee=payee)
            payer.withdraw(amount)
            log("{payer} made payment", payer=payer)
            server.update_balances([payer, payee])

See the difference? Above, we use a transaction. Issuing the SELECT for the payer’s funds and then modifying them under autocommit would be totally wrong. We must then ensure that we retrieve this value using some appropriate system of locking, so that from the time we read it to the time we write it, the value cannot be changed based on a stale assumption. We’d probably use a SELECT .. FOR UPDATE to lock the row we intend to update. Or, we might use “read committed” isolation in conjunction with a version counter for an optimistic approach, so that our function fails if a race condition occurs. But the fact that we’re using threads, greenlets, or any other concurrency mechanism within our single process has no impact whatsoever on the strategy we use here; our concurrency concerns involve the interaction of entirely separate processes.
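The optimistic variant can be sketched with a version column: each UPDATE is conditioned on the version that was read, and a row count of zero means someone else changed the row first. This is a minimal sqlite3 sketch; the account table, ConcurrentUpdateError, read_account() and withdraw() are hypothetical names, and SQLite is used only because it ships with Python (it has no SELECT ... FOR UPDATE, so the pessimistic variant is not shown). In a real deployment the two reads would come from separate processes; here one connection stands in for both.

```python
import sqlite3


class InsufficientFunds(Exception):
    pass


class ConcurrentUpdateError(Exception):
    """Raised when the row changed between our read and our write."""


def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "create table account (id integer primary key, balance integer not null, "
        "version integer not null)"
    )
    conn.execute("insert into account values (1, 100, 1)")
    conn.commit()
    return conn


def read_account(conn, account_id):
    return conn.execute(
        "select balance, version from account where id = ?", (account_id,)
    ).fetchone()


def withdraw(conn, account_id, amount, balance, version):
    """Write a new balance only if the row still has the version we read."""
    if balance < amount:
        raise InsufficientFunds()
    with conn:  # one transaction per attempt
        cur = conn.execute(
            "update account set balance = ?, version = version + 1 "
            "where id = ? and version = ?",
            (balance - amount, account_id, version),
        )
        if cur.rowcount != 1:
            raise ConcurrentUpdateError()


def demo():
    conn = setup()
    # Two "processes" read the same snapshot before either one writes.
    balance_a, version_a = read_account(conn, 1)
    balance_b, version_b = read_account(conn, 1)
    withdraw(conn, 1, 70, balance_a, version_a)  # succeeds: 100 -> 30
    try:
        withdraw(conn, 1, 70, balance_b, version_b)  # stale version
        second = "succeeded"
    except ConcurrentUpdateError:
        second = "rejected"
    return read_account(conn, 1), second


if __name__ == "__main__":
    print(demo())  # ((30, 2), 'rejected')
```

The second writer passes its own funds check against the stale balance of 100, but the version condition stops it from overdrawing the account; the caller can then re-read and retry.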

Sum up!

Please note I am not trying to make the point that you shouldn’t use asyncio. I think it’s really well done and fun to use, and I am still interested in having more of a SQLAlchemy story for it, because I’m sure folks will want this no matter what anyone says.

My point is that when it comes to stereotypical database logic, there are no advantages to using it versus a traditional threaded approach, and you can likely expect a small to moderate decrease in performance, not an increase. This is well known to many of my colleagues, but recently I’ve had to argue this point nonetheless.

If one wants the advantages of non-blocking IO for receiving web requests without needing to turn the business logic into explicit async, an ideal integration is a simple combination of nginx with uWSGI, for example.
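Within a single Python process, the same division of labor (non-blocking IO at the edge, ordinary blocking business code behind a thread pool) can be sketched with asyncio’s loop.run_in_executor(). This is not the nginx/uWSGI setup itself; handle_request(), get_balance() and the temporary SQLite file are hypothetical, with sqlite3 standing in for whatever blocking driver the business code uses.

```python
import asyncio
import os
import sqlite3
import tempfile
from concurrent.futures import ThreadPoolExecutor


def init_db(path: str) -> None:
    conn = sqlite3.connect(path)
    try:
        with conn:  # commit the setup in one transaction
            conn.execute("create table account (name text primary key, balance integer)")
            conn.executemany(
                "insert into account values (?, ?)", [("alice", 100), ("bob", 0)]
            )
    finally:
        conn.close()


def get_balance(path: str, name: str) -> int:
    """Ordinary blocking CRUD code: no async anywhere."""
    conn = sqlite3.connect(path)
    try:
        row = conn.execute(
            "select balance from account where name = ?", (name,)
        ).fetchone()
        return row[0]
    finally:
        conn.close()


async def handle_request(executor, path: str, name: str) -> int:
    """Async front end: hands the blocking work to the thread pool."""
    loop = asyncio.get_running_loop()
    return await loop.run_in_executor(executor, get_balance, path, name)


async def main(path: str) -> list:
    with ThreadPoolExecutor(max_workers=4) as executor:
        return await asyncio.gather(
            handle_request(executor, path, "alice"),
            handle_request(executor, path, "bob"),
        )


def demo() -> list:
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "app.db")
        init_db(path)
        return asyncio.run(main(path))


if __name__ == "__main__":
    print(demo())  # [100, 0]
```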

Translated from: https://www.pybloggers.com/2015/02/asynchronous-python-and-databases/
