How to Save Terabytes of Cloud Storage

Whatever cloud provider a company may use, costs are always a factor that influences decision-making and the way software is written. As a consequence, almost any approach that helps save costs is likely worth investigating.

At Vendasta, we use the Google Cloud Platform. The software we maintain, design, and create often makes use of Google Cloud Storage and Google CloudSQL; the team I am part of is no exception. The team works on a product called Website, which is a platform for website hosting. The product has two editions: Express (free, limited functionality) and Pro (paid, full functionality). Among the features the product offers are periodic backups of websites; these backups are archived as tar files. Each backup archive has an accompanying record stored in CloudSQL, which contains metadata such as the timestamp of the archive.

Recently, the team I am currently working in wanted to design and implement an approach to delete old backups in order to reduce costs. The requirements were:

1. Delete archives and records older than 1 year;

2. For the Express edition, keep archives and records that were created in the last 10 days. In addition, keep the backups from the beginning of each month for the last year;

3. For the Pro edition, keep archives and records that were created in the last 60 days. In addition, keep the backups created at the beginning of each month for the last year;

4. Perform steps 1–3 every day for all the sites the product hosts.
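The retention windows in these requirements can be expressed as two cutoff dates per edition. The following is only a minimal sketch of that idea, not the team's code: the edition identifiers `express` and `pro`, the `Cutoffs` function, and the `day` helper are all hypothetical names chosen for illustration.

```go
package main

import (
	"fmt"
	"time"
)

// Edition identifiers are hypothetical; the article does not show the real IDs.
const (
	EditionExpress = "express"
	EditionPro     = "pro"
)

// retentionDays maps an edition to the number of days during which every backup is kept.
var retentionDays = map[string]int{
	EditionExpress: 10,
	EditionPro:     60,
}

// day is a small helper that builds a UTC midnight timestamp.
func day(y int, m time.Month, d int) time.Time {
	return time.Date(y, m, d, 0, 0, 0, 0, time.UTC)
}

// Cutoffs returns (minCutoff, maxCutoff) relative to now.
// Backups newer than minCutoff are always kept, backups older than maxCutoff
// are always deleted, and in between only monthly backups survive.
func Cutoffs(editionID string, now time.Time) (time.Time, time.Time, error) {
	days, ok := retentionDays[editionID]
	if !ok {
		return time.Time{}, time.Time{}, fmt.Errorf("unknown edition %q", editionID)
	}
	return now.AddDate(0, 0, -days), now.AddDate(-1, 0, 0), nil
}

func main() {
	now := day(2020, time.August, 15)
	for _, edition := range []string{EditionExpress, EditionPro} {
		minCutoff, maxCutoff, err := Cutoffs(edition, now)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s: keep all after %s, delete all before %s\n",
			edition, minCutoff.Format("2006-01-02"), maxCutoff.Format("2006-01-02"))
	}
}
```

Keeping the per-edition numbers in one map means a new edition or a changed retention window only touches a single place.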

We are going to walk through how the requirements were satisfied for each step, and use Golang code, along with an SQL statement, to demonstrate how to execute the steps.

Google Cloud Storage offers the option of object lifecycle management, which allows users to set custom rules such as deleting bucket objects, or changing their storage class, after a specific time period/age. While this may work for many use cases, it did not satisfy our requirements because of the custom rules for product editions. In addition, transitioning the whole platform to a different naming scheme for backups (backup names currently carry no edition context) in order to use lifecycle management would have been an enormous amount of work. As a consequence, the team went ahead with a custom solution, which is extensible because additional custom cleanups can be added to it in the future.

The first thing implemented was the part that iterates over all the sites and creates tasks for archive and record cleanups. We chose Golang Machinery, a library for asynchronous job scheduling and processing. Our team often uses Memorystore, which was the storage layer of choice for our use of Machinery. The scheduling was performed by an internal project that the team uses for scheduling other types of work:

```go
package scheduler

import (
	"time"

	"github.com/RichardKnop/machinery/v1/tasks"
	"github.com/robfig/cron"

	// fake packages, abstracting away internal code
	"github.com/website/internal/logging"
	"github.com/website/internal/repository"
)

const (
	// dailyBackupCleaningSchedule is the cron pattern for performing daily backup cleanups
	dailyBackupCleaningSchedule string = "@daily"
)

// StartBackupCleaningCron creates a cron that schedules backup cleaning tasks
func StartBackupCleaningCron() {
	job := cron.New()
	if err := job.AddFunc(dailyBackupCleaningSchedule, func() {
		ScheduleBackupCleaningTasks()
	}); err != nil {
		logging.Errorf("failed to schedule daily backup cleaning, err: %s", err)
	}
	job.Run()
}

// ScheduleBackupCleaningTasks iterates over all the sites and creates a backup cleaning task
func ScheduleBackupCleaningTasks() {
	now := time.Now()
	cursor := repository.CursorBeginning
	for cursor != repository.CursorEnd {
		sites, next, err := repository.List(cursor, repository.DefaultPageSize)
		if err != nil {
			// if a listing of sites fails, stop here (the cursor cannot advance);
			// the remaining sites will be retried tomorrow
			logging.Errorf("failed to list sites, err: %s", err)
			return
		}
		for _, site := range sites {
			// GetRecordCleanupTask abstracted, see Machinery documentation on how to create a task
			recordCleanupTask := GetRecordCleanupTask(site.ID(), site.EditionID(), now)
			// if a record cleanup task has been scheduled already, there's also an archive cleanup task,
			// so continuing at this step is sufficient
			// taskAlreadyQueued abstracted, checks whether a task has been scheduled based on the artificial UUID
			if taskAlreadyQueued(recordCleanupTask.UUID) {
				continue
			}
			// GetArchiveCleanupTask abstracted, see Machinery documentation on how to create a task
			archiveCleanupTask := GetArchiveCleanupTask(site.ID(), site.EditionID(), now)
			// use a chain because the tasks should occur one after another
			if taskChain, err := tasks.NewChain(recordCleanupTask, archiveCleanupTask); err != nil {
				logging.Errorf("error creating backup cleanup task chain: %s -> %s, error: %s", recordCleanupTask.UUID, archiveCleanupTask.UUID, err)
				// MachineryQueue is not initialized in this script and is abstracted.
				// See how to initialize the queue using Machinery docs
			} else if _, err := MachineryQueue.SendChain(taskChain); err != nil {
				logging.Errorf("error scheduling backup cleanup task chain: %s -> %s, error: %s", recordCleanupTask.UUID, archiveCleanupTask.UUID, err)
			}
		}
		cursor = next
	}
}
```

The code above fulfills three main objectives:

- Creates a cron job for daily task creation;

- Iterates over all the sites;

- Creates the archive and record cleanup tasks.
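The deduplication check in the scheduler relies on a task ID that is the same every time a given site is scheduled on a given day. The sketch below illustrates one way this could work using only the standard library. The names `TaskID`, `Lister`, `FakeLister`, and `ScheduleAll`, as well as the ID format, are assumptions made for illustration; they are not part of Machinery or of the team's code, and an in-memory map stands in for the real queue.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// demoNow is a fixed instant so the example is reproducible.
var demoNow = time.Date(2020, time.August, 15, 9, 30, 0, 0, time.UTC)

// TaskID builds a deterministic ID from the task kind, the site, and the calendar day,
// so scheduling the same site twice on one day produces the same ID.
func TaskID(kind, siteID string, now time.Time) string {
	return fmt.Sprintf("%s_%s_%s", kind, siteID, now.UTC().Format("2006-01-02"))
}

// Lister returns one page of site IDs and the index of the next page (-1 when done).
type Lister func(page int) (siteIDs []string, next int, err error)

// FakeLister serves fixed pages from memory, standing in for the site repository.
func FakeLister(pages [][]string) Lister {
	return func(page int) ([]string, int, error) {
		if page < 0 || page >= len(pages) {
			return nil, -1, errors.New("page out of range")
		}
		next := page + 1
		if next == len(pages) {
			next = -1
		}
		return pages[page], next, nil
	}
}

// ScheduleAll walks every page and returns the IDs of the tasks it newly queued.
// queued holds the IDs that are already scheduled and is updated in place.
func ScheduleAll(list Lister, queued map[string]bool, now time.Time) ([]string, error) {
	var created []string
	for page := 0; page != -1; {
		siteIDs, next, err := list(page)
		if err != nil {
			// stop instead of retrying the same page forever; the next daily run picks it up
			return created, fmt.Errorf("listing page %d: %w", page, err)
		}
		for _, id := range siteIDs {
			taskID := TaskID("record-cleanup", id, now)
			if queued[taskID] {
				continue // already scheduled today
			}
			queued[taskID] = true
			created = append(created, taskID)
		}
		page = next
	}
	return created, nil
}

func main() {
	list := FakeLister([][]string{{"site-a", "site-b"}, {"site-c"}})
	queued := map[string]bool{TaskID("record-cleanup", "site-b", demoNow): true}
	created, err := ScheduleAll(list, queued, demoNow)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(created)
}
```

Because the ID depends only on the site and the day, running the scheduler a second time on the same day queues nothing new.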

The second part of the implementation was to design and create the software that consumes the scheduled cleanup tasks. For this part, the team constructed an SQL statement that deletes all the records that have to be removed while satisfying the constraints imposed by the edition of the product (Express or Pro).

```go
package backup

import (
	"fmt"

	// fake package, you have to instantiate an SQL connection, and then execute queries using it
	"github.com/driver/sqlconn"

	// fake packages for abstraction
	"github.com/website/internal/config"
	"github.com/website/internal/gcsclient"
	"github.com/website/internal/logging"
)

const (
	// SQLDatetimeFormat is the MySQL 5.6 datetime format
	SQLDatetimeFormat = "2006-01-02 15:04:05"
)

// BackupRecordCleanup performs the work associated with cleaning up site backup records from CloudSQL
func BackupRecordCleanup(siteID, editionID, dateScheduled string) error {
	// GetCutoffBasedOnEdition is abstracted, but it only returns two dates based on the scheduled date of the task
	minCutoff, maxCutoff, err := GetCutoffBasedOnEdition(editionID, dateScheduled)
	if err != nil {
		return fmt.Errorf("record cleanup failed to construct the date cutoffs for site %s, edition %s, err: %s", siteID, editionID, err)
	}
	query := GetRecordsDeleteStatement(siteID, minCutoff.Format(SQLDatetimeFormat), maxCutoff.Format(SQLDatetimeFormat))
	if res, err := sqlconn.Execute(query); err != nil {
		return fmt.Errorf("failed to execute delete statement for %s, edition %s, on %s, err: %s", siteID, editionID, dateScheduled, err)
	} else if numRowsAffected, err := res.RowsAffected(); err != nil {
		return fmt.Errorf("failed to obtain the number of affected rows after executing a deletion for %s, edition %s, on %s, err: %s", siteID, editionID, dateScheduled, err)
	} else if numRowsAffected == 0 {
		// while this expects at least 1 row to be affected, it is ok to not return an error here
		// as the row might be gone already
		logging.Infof("expected deletion statement for %s, edition %s, on %s to affect at least 1 row, 0 affected", siteID, editionID, dateScheduled)
	}
	return nil
}

// BackupArchiveCleanup performs the work associated with cleaning up site backup archives from GCS
func BackupArchiveCleanup(siteID, editionID, dateScheduled string) error {
	// SiteBackupCloudBucket abstracted because it's an environment variable
	objects, err := gcsclient.Scan(SiteBackupCloudBucket, siteID, gcsclient.UnlimitedScan)
	if err != nil {
		return fmt.Errorf("failed to perform GCS scan for %s, err: %s", siteID, err)
	}
	minCutoff, maxCutoff, err := GetCutoffBasedOnEdition(editionID, dateScheduled)
	if err != nil {
		return fmt.Errorf("archive cleanup failed to construct the date cutoffs for site %s, edition %s, err: %s", siteID, editionID, err)
	}
	// sorting is performed to facilitate the deletion of archives, oldest to most recent
	sortedBackupObjects, err := SortBackupObjects(objects)
	if err != nil {
		return fmt.Errorf("failed to sort backup objects for site %s, edition %s, err: %s", siteID, editionID, err)
	}
	if len(sortedBackupObjects) > 0 {
		// track the month changes in order to filter which backups to keep. The backup at position 0 is always
		// kept since it is the earliest backup this service has, for whatever month the backup was performed in
		prevMonth := int(sortedBackupObjects[0].at.Month())
		// iterations start at 1 since this always keeps the first backup. If a site ends up with two "first month"
		// backups, the second one will be deleted the next day
		for _, sbo := range sortedBackupObjects[1:] {
			if sbo.at.After(minCutoff) {
				// backups are sorted at this point, so this can stop early as any backup after this one
				// will also satisfy the After()
				break
			}
			// no point in keeping backups that are older than maxCutoff, e.g. 1 year, so those are deleted
			// without regard for the month they were created in. However, if a backup was created between
			// min and maxCutoff, this keeps the month's first backup and deletes everything else.
			// For example, if sortedBackupObjects[0] is a January 31st backup and the next one is February 1st,
			// the months are 1 and 2, respectively, so the condition is false and the February 1st backup is
			// kept. On the next iteration the months are the same, so that backup is deleted
			currMonth := int(sbo.at.Month())
			if sbo.at.Before(maxCutoff) || currMonth == prevMonth {
				if err := gcsclient.Delete(sbo.gcsObj.Bucket, sbo.gcsObj.Name); err != nil {
					logging.Infof("failed to delete GCS archive %s/%s for site %s, edition %s, err: %s", sbo.gcsObj.Bucket, sbo.gcsObj.Name, siteID, editionID, err)
				}
			}
			prevMonth = currMonth
		}
	}
	return nil
}

// GetRecordsDeleteStatement returns the SQL statement necessary for deleting a site's SQL backup records. The
// statement will delete any backups that have been created before maxDateCutoff but will keep the monthly backups that
// have been created between min and maxDateCutoff. This is a public function so that it is easily testable
func GetRecordsDeleteStatement(siteID, minDateCutoff, maxDateCutoff string) string {
	internalDatabaseName := fmt.Sprintf("`%s`.`%s`", config.Env.InternalDatabaseName, config.Env.SiteBackupCloudSQLTable)
	baseStatement := `
-- the main database is website-pro, for both demo and prod
-- delete backup records that are older than 1 year (or whatever maxDateCutoff is set to)
DELETE FROM %[1]s
WHERE (
    site_id = "%[2]s"
    AND timestamp < CONVERT("%[4]s", datetime)
) OR (
    -- delete the ones older than the date specified according to the edition ID of the site
    -- (or whatever minDateCutoff is set to)
    site_id = "%[2]s"
    AND timestamp < CONVERT("%[3]s", datetime)
    AND timestamp NOT IN (
        SELECT * FROM (
            SELECT MIN(timestamp)
            FROM %[1]s
            WHERE status = "done"
            AND site_id = "%[2]s"
            GROUP BY site_id, TIMESTAMPDIFF(month, "1970-01-01", timestamp)
        ) AS to_keep
    )
);`
	return fmt.Sprintf(baseStatement,
		internalDatabaseName, // database name, along with table name
		siteID,               // the site ID for which old backups will be deleted
		minDateCutoff,        // the min date cutoff for backup dates
		maxDateCutoff,        // the max date cutoff for backup dates
	)
}
```

Note that the code sample above is incomplete. To make it a fully working example, the Machinery task consumer has to be implemented, and BackupArchiveCleanup and BackupRecordCleanup have to be registered as the functions that process the tasks created by ScheduleBackupCleaningTasks in the previous example. For this code sample, let's focus on the SQL query, as it was the part most heavily tested by the team (we do not have backups of backups, and testing is important in general).

The query scans the CloudSQL table that contains the backup records (internalDatabaseName) and deletes records with a timestamp older than the maximum date cutoff (the one-year requirement). Records that are not older than one year are deleted conditionally: their timestamp must be earlier than the minimum date cutoff (60 or 10 days, for Pro and Express respectively) and must not appear in the sub-query. The sub-query uses the SQL TIMESTAMPDIFF function to group timestamps by the number of months elapsed since a fixed date and selects the minimum of each group, so the first backup of every month is kept (see documentation).

The overall architecture of the cleanup process is represented by the diagram on the left. Generally, there is one service that creates cleanup tasks and one that processes them, both backed by the same underlying storage layer.

Results

The team started the development outlined in this blog with the intent to save storage costs. To measure that, there are two options:

- Use gsutil du to measure the total bytes used by specific buckets. However, because of the size of the buckets the team uses, this tool is not recommended for measuring total bytes used;

- Use Cloud Monitoring to get the total bytes used by GCS buckets.

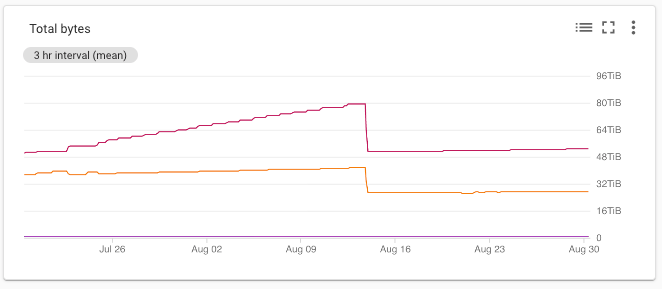

After testing for approximately one week in a demo environment, on a subset of websites (again, no backups of backups), the code was released to production. The result is outlined by the following diagram:

The chart outlines the total number of bytes, over the past six weeks, that our team is using in its main Google Cloud Platform project where backups are stored. The chart contains two lines because of the two types of storage the team uses, NEARLINE and COLDLINE. Throughout July and the beginning of August, the chart shows a fast rate of increase in storage use (approximately 40TB). After releasing the cleanup process to the production environment, we noticed a much more sustainable rate of growth for total bytes used. This has resulted in approximately $800/month in cost savings. While that is not much for a company the size of Vendasta, it relieves the team of periodically performing manual backup cleaning, and automates a process that results in significant cost savings long-term. In addition, the same process can be reused to clean up other types of data. As a note, you may have noticed that the team did not measure the cost savings from deleting CloudSQL records. The deleted CloudSQL table rows resulted in only megabytes of space savings, which is not significant compared to the storage savings from deleting archives.

Lastly, it is possible that your company’s data retention policy does not allow you to delete data at all. In that case, I suggest using the Archive storage option, which was released this year and provides the most affordable option for long-term data storage.

Vendasta helps small and medium-sized businesses around the world manage their online presence. A website is pivotal for managing online presence and performing eCommerce. My team is working on a website-hosting platform that faces numerous interesting scalability (millions of requests per day), performance (loading speed), and storage (read, and write speed) problems. Consider reaching out to me, or applying to work at Vendasta, if you’d like a challenge writing Go and working with Kubernetes, NGINX, Redis, and CloudSQL!

Translated from: https://medium.com/vendasta/how-to-save-terabytes-of-cloud-storage-be3643c29ce0
