A big interview with Martin Kleppmann: “Figuring out the future of distributed data systems”

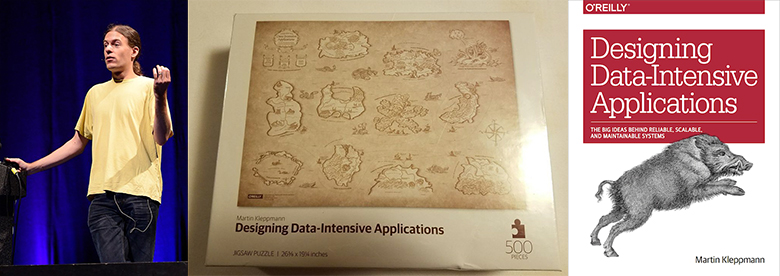

Dr. Martin Kleppmann is a researcher in distributed systems at the University of Cambridge, and the author of the highly acclaimed «Designing Data-Intensive Applications» (O'Reilly Media, 2017).

Kevin Scott, CTO at Microsoft once said: «This book should be required reading for software engineers. Designing Data-Intensive Applications is a rare resource that connects theory and practice to help developers make smart decisions as they design and implement data infrastructure and systems.»

Martin’s main research interests include collaboration software, CRDTs, and formal verification of distributed algorithms. Previously he was a software engineer and an entrepreneur at several Internet companies including LinkedIn and Rapportive, where he worked on large-scale data infrastructure.

Vadim Tsesko (@incubos) is a lead software engineer at Odnoklassniki who works in the Core Platform team. Vadim’s scientific and engineering interests include distributed systems, data warehouses and verification of software systems.

Contents:

Moving from business to academic research;

Discussion of «Designing Data-Intensive Applications»;

Common sense against artificial hype and aggressive marketing;

Pitfalls of CAP theorem and other industry mistakes;

Benefits of decentralization;

Blockchains, Dat, IPFS, Filecoin, WebRTC;

New CRDTs. Formal verification with Isabelle;

Event sourcing. Low level approach. XA transactions;

Apache Kafka, PostgreSQL, Memcached, Redis, Elasticsearch;

How to apply all those tools to real life;

Expected target audience of Martin’s talks and the Hydra conference.

Moving from business to academic research

Vadim

: The first question I would like to ask you is really important for me. You founded Go Test It and Rapportive, and you had been designing and engineering large-scale systems at LinkedIn for a while. Then you decided to switch from industrial engineering to academia. Could you please explain the motivation for that decision? What have you gained and what have you had to sacrifice?

Martin

: It’s been a very interesting process. As you seem to be hinting at, not many people make the switch in that direction. A lot of people go from academia to industry, but not so many back. Which is understandable, because I had to take quite a large pay cut in order to go back to academia. But what I really love about research is the freedom to work on topics that I find interesting and that I think are important, even if those topics don’t immediately lead to a commercially viable product within the next 6 months or so. Of course, at a company the stuff you build needs to turn into a product that can be sold in some form or another. On the other hand, the things I’m now working on are topics that are really important for the future of how we build software and how the internet works. But we don’t really understand these topics well enough yet to go and start building commercial products: we are still at the level of trying to figure out, fundamentally, what these technologies need to look like. And since this is fundamental research I realized it’s better to do this at a university than to try to do it at a company, because at a university I’m free to work on things that might not become commercially viable for another ten years, and that is OK. It’s OK to work with a much longer time horizon when you’re in research.

“Designing Data-Intensive Applications”

Vadim

: We’ll definitely get back to your current research interests. Meanwhile, let’s talk about your latest book, Designing Data-Intensive Applications. I’m a big fan of your book and I believe it’s one of the best guides for building modern distributed systems. You’ve covered almost all the notable achievements to date.

Martin

: Thank you, I’m glad you find it useful.

Vadim

: Just for those unlucky readers who haven’t read your book yet, could you please name several major achievements in the field of distributed systems nowadays?

Martin

: Well, the goal of the book is not so much to explain one particular technology; the goal is rather to give you a guide to the entire landscape of different systems that are used for storing and processing data. There are so many different databases, stream processors, batch processing tools, all sorts of replication tools and so on, and it’s really hard to get an overview. If you’re trying to build a particular application it’s really hard to know which database you should use, and which tools are the most appropriate ones for the problem you’re trying to solve. A lot of existing computing books simply didn’t answer that problem in a satisfactory way. I found that if you’re reading a book on Cassandra for example, it would tell you why Cassandra is wonderful, but it generally wouldn’t tell you about things for which it’s not a good fit. So what I really wanted to do in this book was to identify the main questions that you need to ask yourself if you’re trying to build some kind of large-scale system. And through answering those questions you can then help figure out which technologies are appropriate and which are less appropriate for the particular problem you’re trying to solve — because, in general, there’s no one technology that is perfect for everything. And so, the book is trying to help you figure out the pros and cons of different technologies in different settings.

Common sense against artificial hype and aggressive marketing

Vadim

: Indeed, often — if not always — there are many technologies with overlapping functions, features and data models. And you can’t believe all those marketing buzzwords. You need to read the white papers to learn the internals, and even try to read the source code to understand how it works exactly.

Martin

: And I found that you often have to read between the lines because often documentation doesn’t really tell you for which things a particular database sucks. The truth is that every database sucks at some kind of workload, the question is just to know which ones they are. So yes, sometimes you have to read the deployment guidelines for ops people and try to reverse-engineer from that what is actually going on in the system.

Vadim

: Don’t you feel that the industry lacks the common vocabulary or a set of criteria to compare different solutions for the same problem? Similar things are called by different names, some things are omitted which should always be clear and stated explicitly, like transaction guarantees. What do you think?

Martin

: Yeah, I think a problem that our industry has is that often, when people talk about a particular tool, there’s a lot of hype about everything. Which is understandable, because the tools are made by various companies, and obviously those companies want to promote their products, and so those companies will send people to conferences to speak about how wonderful their product is, essentially. It will be disguised as a tech talk, but essentially it’s still a sales activity. As an industry, we really could do with more honesty about the advantages and disadvantages of some product. And part of that requires a common terminology, because otherwise you simply can’t compare things on an equal footing. But beyond a shared terminology we need ways of reasoning about things that certain technologies are good or bad at.

Pitfalls of CAP theorem and other industry mistakes

Vadim

: My next question is quite a controversial one. Could you please name any major mistakes in the industry you have stumbled upon during your career? Maybe overvalued technologies or widely-practiced solutions we should have got rid of a long time ago? It might be a bad example, but compare JSON over HTTP/1.1 vs the much more efficient gRPC over HTTP/2. Or is there an alternative point of view?

Martin

: I think in many cases there are very good reasons for why a technology does one thing and not another. So I’m very hesitant to call things mistakes, because in most cases it’s a question of trade-offs. In your example of JSON over HTTP/1.1 versus Protocol Buffers over HTTP/2, I think there are actually quite reasonable arguments for both sides there. For example, if you want to use Protocol Buffers, you have to define your schema, and a schema can be a wonderful thing because it helps document exactly what communication is going on. But some people find schemas annoying, especially if they’re at early stages of development and they’re changing data formats very frequently. So there you have it, there’s a question of trade-offs; in some situations one is better, in others the other is better.

In terms of actual mistakes that I feel are simply bad, there’s only a fairly small number of things. One opinion that I have is that the CAP Theorem is fundamentally bad and just not useful. Whenever people use the CAP Theorem to justify design decisions, I think often they are either misinterpreting what CAP is actually saying, or stating the obvious in a way. CAP as a theorem has a problem that it is really just stating the obvious. Moreover, it talks about just one very narrowly defined consistency model, namely linearizability, and one very narrowly defined availability model, which is: you want every replica to be fully available for reads and writes, even if it cannot communicate with any other replicas. These are reasonable definitions, but they are very narrow, and many applications simply do not fall into the case of needing precisely that definition of consistency or precisely that definition of availability. And for all the applications that use a different definition of those words, the CAP Theorem doesn’t tell you anything at all. It’s simply an empty statement. So that, I feel, is a mistake.

And while we’re ranting, if you’re asking me to name mistakes, another big mistake that I see in the tech industry is the mining of cryptocurrencies, which I think is such an egregious waste of electricity. I just cannot fathom why people think that is a good idea.

Vadim

: Talking about the CAP Theorem, many storage technologies are actually tunable, in terms of things like AP or CP. You can choose the mode they operate in.

Martin

: Yes. Moreover, there are many technologies which are neither consistent nor available under the strict definition of the CAP Theorem. They are literally just P! Not CP, not CA, not AP, just P. Nobody says that, because that would look bad, but honestly, this could be a perfectly reasonable design decision to make. There are many systems for which that is actually totally fine. This is actually one of the reasons why I think that CAP is such an unhelpful way of talking about things: because there is a huge part of the design space that it simply does not capture, where there are perfectly reasonable good designs for software that it simply doesn’t allow you to talk about.

Benefits of decentralization

Vadim

: Talking about data-intensive applications today, what other major challenges, unsolved problems or hot research topics can you name? As far as I know, you’re a major proponent of decentralized computation and storage.

Martin

: Yes. One of the theses behind my research is that at the moment we rely too much on servers and centralization. If you think about how the Internet was originally designed back in the day when it evolved from ARPANET, it was intended as a very resilient network where packets could be sent via several different routes, and they would still get to the destination. And if a nuclear bomb hit a particular American city, the rest of the network would still work because it would just route around the failed parts of the system. This was a Cold War design.

And then we decided to put everything in the cloud, and now basically everything has to go via one of AWS’s datacenters, such as us-east-1 somewhere in Virginia. We’ve taken away this ideal of being able to decentrally use various different parts of the network, and we’ve put in these servers that everything relies on, and now it’s extremely centralized. So I’m interested in decentralization, in the sense of moving some of the power and control over data away from those servers and back to the end users.

One thing I want to add in this context is that a lot of people talking about decentralization are talking about things like cryptocurrencies, because they are also attempting a form of decentralization whereby control is moved away from a central authority like a bank and into a network of cooperating nodes. But that’s not really the sort of decentralization that I’m interested in: I find that these cryptocurrencies are actually still extremely centralized, in the sense that if you want to make a Bitcoin transaction, you have to make it on the Bitcoin network — you have to use the network of Bitcoin, so everything is centralized on that particular network. The way it’s built is decentralized in the sense that it doesn’t have a single controlling node, but the network as a whole is extremely centralized in that any transaction you have to make you have to do through this network. You can’t do it in some other way. I feel that it’s still a form of centralization.

In the case of a cryptocurrency this centralization might be inevitable, because you need to do stuff like avoid double spending, and doing that is difficult without a network that achieves consensus about exactly which transactions have happened and which have not. And this is exactly what the Bitcoin network does. But there are many applications that do not require something like a blockchain, which can actually cope with a much more flexible model of data flowing around the system. And that’s the type of decentralized system that I’m most interested in.

Vadim

: Could you please name any promising or undervalued technologies in the field of decentralized systems apart from blockchain? I have been using IPFS for a while.

Martin

: For IPFS, I have looked into it a bit though I haven’t actually used it myself. We’ve done some work with the Dat project, which is somewhat similar to IPFS in the sense that it is also a decentralized storage technology. The difference is that IPFS has Filecoin, a cryptocurrency, attached to it as a way of paying for storage resources, whereas Dat does not have any blockchain attached to it: it is purely a way of replicating data across multiple machines in a P2P manner.

For the project that I’ve been working on, Dat has been quite a good fit, because we wanted to build collaboration software in which several different users could each edit some document or database, and any changes to that data would get sent to anyone else who needs to have a copy of this data. We can use Dat to do this replication in a P2P manner, and Dat takes care of all the networking-level stuff, such as NAT traversal and getting through firewalls — it’s quite a tricky problem just to get the packets from one end to the other. And then we built a layer on top of that, using CRDTs, which is a way of allowing several people to edit some document or dataset and to exchange those edits in an efficient way. I think you can probably build this sort of thing on IPFS as well: you can probably ignore the Filecoin aspect and just use the P2P replication aspect, and it will probably do the job just as well.

Vadim

: Sure, though using IPFS might lead to lower responsiveness, because WebRTC underlying Dat connects P2P nodes directly, and IPFS works like a distributed hash table thing.

Martin

: Well, WebRTC is at a different level of the stack, since it’s intended mostly for connecting two people together who might be having a video call; in fact, the software we’re using for this interview right now may well be using WebRTC. And WebRTC does give you a data channel that you can use for sending arbitrary binary data over it, but building a full replication system on top of that is still quite a bit of work. And that’s something that Dat or IPFS do already.

You mentioned responsiveness — that is certainly one thing to think about. Say you wanted to build the next Google Docs in a decentralized way. With Google Docs, the unit of changes that you make is a single keystroke. Every single letter that you type on your keyboard may get sent in real time to your collaborators, which is great from the point of view of fast real-time collaboration. But it also means that over the course of writing a large document you might have hundreds of thousands of these single-character edits that accumulate, and a lot of these technologies right now are not very good at compressing this kind of editing data. You can keep all of the edits that you’ve ever made to your document, but even if you send just a hundred bytes for every single keystroke that you make and you write a slightly larger document with, say, 100,000 keystrokes, you suddenly now have 10 MB of data for a document that would only be a few tens of kilobytes normally. So we have this huge overhead for the amount of data that needs to be sent around, unless we get more clever at compressing and packaging up changes.

Rather than sending somebody the full list of every character that has ever been typed, we might just send the current state of the document, and after that we send any updates that have happened since. But a lot of these peer-to-peer systems don’t yet have a way of doing those state snapshots in a way that would be efficient enough to use them for something like Google Docs. This is actually an area I’m actively working on, trying to find better algorithms for synchronizing up different users for something like a text document, where we don’t want to keep every single keystroke because that would be too expensive, and we want to make more efficient use of the network bandwidth.
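The idea of sending the current state first and only later updates afterwards can be sketched roughly as follows. This is a toy illustration and not the algorithm Martin's group is developing: it assumes a single writer, so concurrent edits (the hard part that CRDTs address) are ignored, and the names `Document`, `snapshot`, `updates_since` and `compact` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Toy single-writer document: current text plus a log of recent edits."""
    text: str = ""
    version: int = 0                          # number of edits folded into `text`
    log: list = field(default_factory=list)   # (version, position, char) tuples

    def insert(self, position: int, char: str) -> None:
        """Apply a local single-character edit and remember it in the log."""
        self.apply((self.version + 1, position, char))

    def apply(self, edit) -> None:
        """Apply one edit; edits must arrive in version order."""
        version, position, char = edit
        if version != self.version + 1:
            raise ValueError("edit applied out of order")
        self.text = self.text[:position] + char + self.text[position:]
        self.version = version
        self.log.append(edit)

    def snapshot(self):
        """Current state, without the per-keystroke history behind it."""
        return self.version, self.text

    def updates_since(self, version: int):
        """Edits a peer still needs if it already holds `version`."""
        return [edit for edit in self.log if edit[0] > version]

    def compact(self) -> None:
        """Forget edits that are already covered by the current snapshot."""
        self.log.clear()


author = Document()
for i, ch in enumerate("hello"):
    author.insert(i, ch)

# A late joiner receives the state, not the five keystrokes that produced it.
version, text = author.snapshot()
author.compact()
late_joiner = Document(text=text, version=version)

for i, ch in enumerate(" world", start=5):
    author.insert(i, ch)

# Afterwards only the edits made since the snapshot are exchanged.
for edit in author.updates_since(late_joiner.version):
    late_joiner.apply(edit)

print(late_joiner.text)
```

The trade-off in this sketch is that once `compact` has run, a peer holding an older version can no longer catch up from the log alone and needs a fresh snapshot.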

New CRDTs. Formal verification with Isabelle

Vadim

: Have you managed to compress that keystroke data substantially? Have you invented new CRDTs or anything similar?

Martin

: Yes. So far we only have prototypes for this, it’s not yet fully implemented, and we still need to do some more experiments to measure how efficient it actually is in practice. But we have developed some compression schemes that look very promising. In my prototype I reduced it from about 100 bytes per edit to something like 1.7 bytes of overhead per edit. And that’s a lot more reasonable of course. But as I say, these experiments are still ongoing, and the number might still change slightly. But I think the bottom line is that there’s a lot of room there for optimization still, so we can still make it a lot better.
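To put the figures from the interview side by side, here is a back-of-the-envelope calculation. It uses only numbers quoted above (100,000 keystrokes, about 100 bytes per edit before compression, about 1.7 bytes of overhead per edit in the prototype); the function name is hypothetical, and the prototype figure is described as preliminary.

```python
def edit_history_bytes(keystrokes: int, bytes_per_edit: float) -> float:
    """Total size of an edit history that keeps every keystroke."""
    return keystrokes * bytes_per_edit


KEYSTROKES = 100_000                              # the "slightly larger document"

naive = edit_history_bytes(KEYSTROKES, 100)       # about 100 bytes per edit
compressed = edit_history_bytes(KEYSTROKES, 1.7)  # prototype overhead per edit
ratio = naive / compressed

print(f"uncompressed: {naive / 1e6:.1f} MB")
print(f"prototype:    {compressed / 1e3:.0f} kB")
print(f"reduction:    about {ratio:.0f}x")
```

Under these assumptions the history shrinks from roughly 10 MB to roughly 170 kB, which is much closer to the size of the document text itself.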

Vadim

: So this is what your talk at the Hydra conference will be about, am I right?

Martin

: Yes, exactly. I’ll give a quick introduction to the area of CRDTs, collaborative software and some of the problems that arise in that context. Then I’ll describe some of the research that we’ve been doing in this area. It’s been quite fun because the research we’ve been doing has been across a whole range of different concerns. On the very applied side, we’ve got a JavaScript implementation of these algorithms, and we’re using that to build real pieces of software, trying to use that software ourselves to see how it behaves. On the other end of the spectrum, we’ve been working with formal methods to prove these algorithms correct, because some of these algorithms are quite subtle and we want to be very sure that the systems we’re making are actually correct, i.e. that they always reach a consistent state. There have been a lot of algorithms in the past that have actually failed to do that, which were simply wrong, that is, in certain edge cases, they would remain permanently inconsistent. And so, in order to avoid these problems that algorithms have had in the past, we’ve been using formal methods to prove our algorithms correct.

Vadim

: Wow. Do you really use theorem provers, like Coq or Isabelle or anything else?

Martin

: Exactly, we’ve been using Isabelle for that.

Martin’s talk «Correctness proofs of distributed systems with Isabelle» at the Strange Loop conference in September.

Vadim

: Sounds great! Are those proofs going to be published?

Martin

: Yes, our first set of proofs is already public. We published that a year and a half ago: it was a framework for verifying CRDTs, and we verified three particular CRDTs within that framework, the main one of which was RGA (Replicated Growable Array), which is a CRDT for collaborative text editing. While it is not very complicated, it is quite a subtle algorithm, and so it’s a good case where proof is needed, because it’s not obvious just from looking at it that it really is correct. And so the proof gives us the additional certainty that it really is correct. Our previous work there was on verifying a couple of existing CRDTs, and our most recent work in this area is about our own CRDTs for new data models we’ve been developing, and proving our own CRDTs correct as well.
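To give a feel for why such an algorithm is subtle, here is a heavily simplified, insert-only sketch in the spirit of RGA. It is not the verified formalization mentioned above. It assumes operations are delivered in causal order, it leaves out deletion entirely, and the class and method names are hypothetical. Each character gets a unique id `(counter, replica_id)`; an insert names the id it goes after, and concurrent inserts at the same place are ordered by comparing ids.

```python
class RGA:
    """Simplified insert-only replicated sequence (RGA-style sketch)."""

    ROOT = (0, "")  # sentinel id marking the start of the sequence

    def __init__(self, replica_id: str):
        self.replica_id = replica_id
        self.clock = 0                     # Lamport-style counter
        self.elements = [(self.ROOT, "")]  # list of (id, char) in document order

    def local_insert(self, after_id, char):
        """Create an insert operation locally and return it for broadcasting."""
        self.clock += 1
        op = (after_id, (self.clock, self.replica_id), char)
        self.apply(op)
        return op

    def apply(self, op):
        """Apply a local or remote insert operation."""
        after_id, new_id, char = op
        self.clock = max(self.clock, new_id[0])
        # Locate the element this insert refers to.
        index = next(i for i, (eid, _) in enumerate(self.elements)
                     if eid == after_id) + 1
        # Skip elements with a greater id, so that every replica places
        # concurrent inserts at the same position in the same order.
        while index < len(self.elements) and self.elements[index][0] > new_id:
            index += 1
        self.elements.insert(index, (new_id, char))

    def text(self) -> str:
        return "".join(char for _, char in self.elements)


a, b = RGA("A"), RGA("B")
first = a.local_insert(RGA.ROOT, "H")
b.apply(first)

# Both replicas now insert concurrently after the same character...
op_a = a.local_insert(first[1], "i")
op_b = b.local_insert(first[1], "!")

# ...and receive each other's operation in opposite orders.
a.apply(op_b)
b.apply(op_a)

print(a.text(), b.text())
```

Both replicas end up with the same text even though they applied the two concurrent inserts in different orders; showing that this holds for every possible execution, not just this one, is the kind of statement a proof is needed for.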

Vadim

: How much larger is the proof compared to the description of the algorithm? Because it can be a problem sometimes.

Martin

: Yes, that is a problem — the proofs are often a lot of work. I think in our latest example… Actually, let me have a quick look at the code. The description of the algorithm and the data structures is about 60 lines of code. So it’s quite a small algorithm. The proof is over 800 lines. So we’ve got roughly 12:1 ratio between the proof and the code. And that is unfortunately quite typical. The proof is a large amount of additional work. On the other hand, once we have the proof, we have gained very strong certainty in the correctness of the algorithm. Moreover, we have ourselves, as humans, understood the algorithm much better. Often I find that through trying to formalize it, we end up understanding the thing we’re trying to formalize much better than we did before. And that in itself is actually a useful outcome from this work: besides the proof itself we gain a deeper understanding, and that is often very helpful for creating better implementations.

Vadim

: Could you please describe the target audience of your talk, how hardcore is it going to be? What is the preliminary knowledge you expect the audience to have?

Martin

: I like to make my talks accessible with as little previous knowledge requirement as possible, and I try to lift everybody up to the same level. I cover a lot of material, but I start at a low base. I would expect people to have some general distributed systems experience: how do you send some data over a network using TCP, or maybe a rough idea of how Git works, which is quite a good model for these things. But that’s about all you need, really. Then, understanding the work we’ve been doing on top of that is actually not too difficult. I explain everything by example, using pictures to illustrate everything. Hopefully, everybody will be able to follow along.

Event sourcing. Low level approach. XA transactions

Vadim

: Sounds really great. Actually, we have some time and I would like to discuss one of your recent articles about online event processing. You’re a great supporter of the idea of event sourcing, is that correct?

Martin

: Yes, sure.

Vadim

: Nowadays this approach is getting momentum, and in the pursuit of all the advantages of globally ordered log of operations, many engineers try to deploy it everywhere. Could you please describe some cases where event sourcing is not the best option? Just to prevent its misuse and possible disappointment with the approach itself.

Martin

: There are two different layers of the stack that we need to talk about first. Event sourcing, as proposed by Greg Young and some others, is intended as a mechanism for data modeling. That is, if you have a database schema and you’re starting to lose control of it because there are so many different tables and they’re all getting modified by different transactions, then event sourcing is a way of bringing better clarity to this data model, because the events can express very directly what is happening at a business level: what is the action that the user took? The consequences of that action might then be updating various tables and so on. Effectively, what you’re doing with event sourcing is separating out the action (the event) from its effects, which happen somewhere downstream.
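The separation of an action from its effects can be illustrated with a small sketch. The shopping-cart domain, the `Event` type and the two projection functions are assumptions made up for this example; they are not taken from the interview or from any particular event sourcing framework.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """An immutable record of something the user did."""
    kind: str   # "item_added" or "item_removed"
    user: str
    item: str


def cart_contents(events, user):
    """One downstream effect: the current cart of a given user."""
    cart = Counter()
    for event in events:
        if event.user != user:
            continue
        if event.kind == "item_added":
            cart[event.item] += 1
        elif event.kind == "item_removed" and cart[event.item] > 0:
            cart[event.item] -= 1
    return {item: count for item, count in cart.items() if count > 0}


def times_added(events):
    """Another effect derived from the same events: how often items were added."""
    return Counter(e.item for e in events if e.kind == "item_added")


# The log records what happened at the business level, nothing more.
event_log = [
    Event("item_added", "alice", "book"),
    Event("item_added", "alice", "pen"),
    Event("item_removed", "alice", "pen"),
    Event("item_added", "bob", "pen"),
]

print(cart_contents(event_log, "alice"))
print(times_added(event_log))
```

The log itself is only ever appended to; the "tables" are computed from it, so a new view can be added later without changing how events are recorded.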

I’ve come to this area from a slightly different angle, which is a lower-level point of view of using systems like Kafka for building highly scalable systems. This view is similar in the sense that if you’re using something like Kafka you are using events, but it doesn’t mean you’re necessarily using event sourcing. And conversely, you don’t need to be using Kafka in order to do event sourcing; you could do event sourcing in a regular database, or you could use a special database that was designed specifically for event sourcing. So these two ideas are similar, but neither requires the other, they just have some overlap.
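
A minimal sketch of event sourcing in a regular database, using Python's built-in `sqlite3` module. The table layout, the event kinds (`listing_published`, `price_changed`, `listing_withdrawn`) and the helper names are hypothetical and chosen only for illustration; they do not come from the interview. The point is the separation described above: the append-only `events` table records the actions, and the current state is derived from them downstream.

```python
import json
import sqlite3


def open_store():
    """Create an in-memory database with an append-only events table."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE events ("
        " seq INTEGER PRIMARY KEY AUTOINCREMENT,"
        " kind TEXT NOT NULL,"
        " payload TEXT NOT NULL)"
    )
    return db


def append_event(db, kind, payload):
    """Record what happened; earlier events are never updated or deleted."""
    db.execute(
        "INSERT INTO events (kind, payload) VALUES (?, ?)",
        (kind, json.dumps(payload)),
    )
    db.commit()


def project_listings(db):
    """Derive the current state (the effects) by replaying events in order."""
    listings = {}
    rows = db.execute("SELECT kind, payload FROM events ORDER BY seq")
    for kind, payload in rows:
        data = json.loads(payload)
        if kind == "listing_published":
            listings[data["id"]] = {"title": data["title"], "price": data["price"]}
        elif kind == "price_changed":
            listings[data["id"]]["price"] = data["price"]
        elif kind == "listing_withdrawn":
            listings.pop(data["id"], None)
    return listings


db = open_store()
append_event(db, "listing_published", {"id": 1, "title": "Used car", "price": 5000})
append_event(db, "listing_published", {"id": 2, "title": "Flat", "price": 90000})
append_event(db, "price_changed", {"id": 1, "price": 4500})
append_event(db, "listing_withdrawn", {"id": 2})
current_listings = project_listings(db)
print(current_listings)
```

Here the projection is rebuilt from scratch on every call, which keeps the sketch short; a real system would more likely maintain it incrementally.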

The case for wanting to use a system like Kafka is mostly the scalability argument: in that case you’ve simply got so much data coming in that you cannot realistically process it on a single-node database, so you have to partition it in some way, and using an event log like Kafka gives you a good way of spreading that work over multiple machines. It provides a good, principled way for scaling systems. It’s especially useful if you want to integrate several different storage systems. So if, for example, you want to update not just your relational database but also, say, a full-text search index like Elasticsearch, or a caching system like Memcached or Redis or something like that, and you want one event to have an updating effect on all of these different systems, then something like Kafka is very useful.
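
The fan-out idea can be sketched without any real broker. The `EventLog` and `Consumer` classes below are hypothetical in-memory stand-ins, not Kafka's API, and the sketch assumes a single partition. Each consumer keeps its own offset into the same log, so every downstream store sees the same events in the same order, but not necessarily at the same time.

```python
class EventLog:
    """Append-only list standing in for a single partition of an event log."""

    def __init__(self):
        self.events = []

    def append(self, event):
        self.events.append(event)
        return len(self.events) - 1  # offset of the new event


class Consumer:
    """Applies events to its own store and remembers how far it has read."""

    def __init__(self, log):
        self.log = log
        self.offset = 0  # next offset to read
        self.store = {}  # stands in for a search index, a cache or a database

    def poll(self, max_events=None):
        end = len(self.log.events)
        if max_events is not None:
            end = min(end, self.offset + max_events)
        for event in self.log.events[self.offset:end]:
            self.store[event["id"]] = event["title"]
        self.offset = end


log = EventLog()
search_index = Consumer(log)
database = Consumer(log)

log.append({"id": 1, "title": "Used car"})
log.append({"id": 2, "title": "Flat for sale"})

search_index.poll()          # fully caught up
database.poll(max_events=1)  # lagging by one event

# Document 2 is already searchable but has not reached the database yet.
stale_read = 2 in search_index.store and 2 not in database.store

database.poll()              # the lagging consumer catches up
converged = search_index.store == database.store
print(stale_read, converged)
```

The consumers converge once the slower one has read the whole log, but a reader who looks at both stores in between can observe the gap.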

In terms of the question you asked (what are the situations in which I would not use this event sourcing or event log approach) — I think it’s difficult to say precisely, but as a rule of thumb I would say: use whatever is the simplest. That is, whatever is closest to the domain that you’re trying to implement. And so, if the thing you’re trying to implement maps very nicely to a relational database, in which you just insert and update and delete some rows, then just use a relational database and insert and update and delete some rows. There’s nothing wrong with relational databases and using them as they are. They have worked fine for us for quite a long time and they continue to do so. But if you’re finding yourself in a situation where you’re really struggling to use that kind of database, for example because the complexity of the data model is getting out of hand, then it makes sense to switch to something like an event sourcing approach.

And similarly, on the lower level (scalability), if the size of your data is such that you can just put it in PostgreSQL on a single machine — that’s probably fine, just use PostgreSQL on a single machine. But if you’re at the point where there is no way that a single machine can handle your load, you have to scale across a large system, then it starts making sense to look into more distributed systems like Kafka. I think the general principle here is: use whatever is simplest for the particular task you’re trying to solve.

Vadim

: It’s really good advice. As your system evolves you can’t precisely predict the direction of development, or all the queries, patterns and data flows.

Martin

: Exactly, and for those kinds of situations relational databases are amazing, because they are very flexible, especially if you include the JSON support that they now have. PostgreSQL now has pretty good support for JSON. You can just add a new index if you want to query in a different way. You can just change the schema and keep running with the data in a different structure. And so if the size of the data set is not too big and the complexity is not too great, relational databases work well and provide a great deal of flexibility.

Vadim

: Let’s talk a little bit more about event sourcing. You mentioned an interesting example with several consumers consuming events from one queue based on Kafka or something similar. Imagine that new documents get published, and several systems are consuming events: a search system based on Elasticsearch, which makes the documents searchable, a caching system which puts them into key-value cache based on Memcached, and a relational database system which updates some tables accordingly. A document might be a car selling offer or a realty advert. All these consuming systems work simultaneously and concurrently.

Martin

: So your question is how do you deal with the fact that if you have these several consumers, some of them might have been updated, but the others have not yet seen an update and are still lagging behind slightly?

Vadim

: Yes, exactly. A user comes to your website, enters a search query, gets some search results and clicks a link. But she gets a 404 HTTP status code because there is no such entity in the database, which hasn’t been able to consume and persist the document yet.

Martin

: Yes, this is a bit of a challenge actually. Ideally, what you want is what we would call «causal consistency» across these different storage systems. If one system contains some data that you depend on, then the other systems that you look at will also contain those dependencies. Unfortunately, putting together that kind of causal consistency across different storage technologies is actually very hard, and this is not really the fault of event sourcing, because no matter what approach or what system you use to send the updates to the various different systems, you can always end up with some kind of concurrency issues.

In your example of writing data to both Memcached and Elasticsearch, even if you try to do the writes to the two systems simultaneously you might have a little bit of network delay, which means that they arrive at slightly different times on those different systems, and get processed with slightly different timing. And so somebody who’s reading across those two systems may see an inconsistent state. Now, there are some research projects that are at least working towards achieving that kind of causal consistency, but it’s still difficult if you just want to use something like Elasticsearch or Memcached or so off the shelf.

A good solution here would be that you get presented, conceptually, with a consistent point-in-time snapshot across both the search index and the cache and the database. If you’re working just within a relational database, you get something called snapshot isolation, and the point of snapshot isolation is that if you’re reading from the database, it looks as though you’ve got your own private copy of the entire database. Anything you look at in the database, any data you query will be the state as of that point in time, according to the snapshot. So even if the data has afterwards been changed by another transaction, you will actually see the older data, because that older data forms part of a consistent snapshot.
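
A minimal sketch of how snapshot reads can work inside a single store, assuming a multi-version layout in which every write is kept together with a commit timestamp. The `MVCCStore` and `Snapshot` classes are hypothetical, each write is treated as its own committed transaction, and write conflict detection is left out, so this illustrates only the read side of snapshot isolation.

```python
class MVCCStore:
    """Keeps every version of every key, tagged with a commit timestamp."""

    def __init__(self):
        self.versions = {}  # key -> list of (commit_ts, value), oldest first
        self.clock = 0      # logical timestamp of the latest commit

    def write(self, key, value):
        self.clock += 1
        self.versions.setdefault(key, []).append((self.clock, value))
        return self.clock

    def snapshot(self):
        """Return a read-only view of the store as of the current timestamp."""
        return Snapshot(self, self.clock)


class Snapshot:
    def __init__(self, store, ts):
        self.store = store
        self.ts = ts

    def read(self, key):
        """Return the newest value committed at or before the snapshot time."""
        result = None
        for commit_ts, value in self.store.versions.get(key, []):
            if commit_ts > self.ts:
                break
            result = value
        return result


store = MVCCStore()
store.write("listing:1:price", 5000)
snap = store.snapshot()
store.write("listing:1:price", 4500)  # a later write by someone else

old_price = snap.read("listing:1:price")              # still the older value
new_price = store.snapshot().read("listing:1:price")  # a fresh snapshot sees the update
missing = snap.read("listing:2:price")                # never written
print(old_price, new_price, missing)
```

The older version has to be retained for as long as some snapshot may still read it, which is the facility a store that only keeps the latest value per key does not have.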

And so now, in the case where you’ve got Elasticsearch and Memcached, really what you would ideally want is a consistent snapshot across these two systems. But unfortunately, neither Memcached nor Redis nor Elasticsearch have an efficient mechanism for making those kinds of snapshots that can be coordinated with different storage systems. Each storage system just thinks for itself and typically presents you the latest value of every key, and it doesn’t have this facility for looking back and presenting a slightly older version of the data, because the most recent version of the data is not yet consistent.

I don’t really have a good answer for what the solution would look like. I fear that the solution would require code changes to any of the storage systems that participate in this kind of thing. So it will require changes to Elasticsearch and to Redis and to Memcached and any other systems. And they would have to add some kind of mechanism for point-in-time snapshots that is cheap enough that you can be using it all the time, because you might be wanting the snapshot several times per second: it’s not just a once-a-day snapshot, it’s very fine-grained. And at the moment the underlying systems are not there in terms of being able to do these kinds of snapshots across different storage systems. It’s a really interesting research topic. I’m hoping that somebody will work on it, but I haven’t seen any really convincing answers to that problem so far.

Vadim

: Yeah, we need some kind of shared Multiversion Concurrency Control.

Martin

: Exactly, like the distributed transaction systems. XA distributed transactions will get you some of the way there, but unfortunately XA, as it stands, is not really very well suited because it only works if you’re using locking-based concurrency control. This means that if you read some data, you have to take a lock on it so that nobody can modify that data while you have that lock. And that kind of locking-based concurrency control has terrible performance, so no system actually uses that in practice nowadays. But if you don’t have that locking then you don’t get the necessary isolation behavior in a system like XA distributed transactions. So maybe what we need is a new protocol for distributed transactions that allows snapshot isolation as the isolation mechanism across different systems. But I don’t think I’ve seen anything that implements that yet.

Vadim

: Yes, I hope somebody is working on it.

Martin

: Yes, it would be really important. Also in the context of microservices, for example: the way that people promote that you should build microservices is that each microservice has its own storage, its own database, and you don’t have one service directly accessing the database of another service, because that would break the encapsulation of the service. Therefore, each service just manages its own data.

For example, you have a service for managing users, and it has a database for the users, and everyone else who wants to find out something about users has to go through the user service. From the point of view of encapsulation that is nice: you’re hiding details of the database schema from the other services for example.

But from the point of view of consistency across different services — well, you’ve got a huge problem now, because of exactly the thing we were discussing: we might have data in two different services that depends upon each other in some way, and you could easily end up with one service being slightly ahead of or slightly behind the other in terms of timing, and then you could end up with someone who reads across different services, getting inconsistent results. And I don’t think anybody building microservices currently has an answer to that problem.

Vadim

: It is somewhat similar to workflows in our society and government, which are inherently asynchronous and there are no guarantees of delivery. You can get your passport number, then you can change it, and you need to prove that you changed it, and that you are the same person.

Martin

: Yes, absolutely. As humans we have ways of dealing with this, for example, we might know that oh, sometimes that database is a bit outdated, I’ll just check back tomorrow. And then tomorrow it’s fine. But if it’s software that we’re building, we have to program all that kind of handling into the software. The software can’t think for itself.

Vadim

: Definitely, at least not yet. I have another question about the advantages of event sourcing. Event sourcing gives you the ability to stop processing events in case of a bug, and resume consuming events having deployed the fix, so that the system is always consistent. It’s a really strong and useful property, but it might not be acceptable in some cases like banking where you can imagine a system that continues to accept financial transactions, but the balances are stale due to suspended consumers waiting for a bugfix from developers. What might be a workaround in such cases?

Martin

: I think you’re unlikely to stop the consumer, deploy the fix and then restart it, because, as you say, the system has got to continue running; you can’t just stop it. I think what is more likely to happen is this: if you discover a bug, you let the system continue running, but while it continues running with the buggy code, you produce another version of the code that is fixed, deploy that fixed version separately and run the two in parallel for a while. In the fixed version of the code you might go back in history and reprocess all of the input events that have happened since the buggy code was deployed, and maybe write the results to a different database. Once you’ve caught up again you’ve got two versions of the database, which are both based on the same event inputs, but one of the two processed the events with the buggy code and the other processed the events with the correct code. At that point you can do the switchover, and now everyone who reads the data is going to read the correct version instead of the buggy version, and you can shut down the buggy version. That way you never need to stop the system from running; everything keeps working all the time. And you can take the time to fix the bug, and you can recover from the bug because you can reprocess those input events again.
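
The parallel-rebuild approach can be sketched as follows. The account events, the bug (withdrawals being ignored) and the function names are all hypothetical. For brevity the fixed code replays the log from the beginning rather than from the point where the buggy code was deployed.

```python
events = [
    {"account": "a", "amount": 100},
    {"account": "a", "amount": -30},
    {"account": "b", "amount": 50},
]


def apply_buggy(balances, event):
    """Hypothetical bug: negative amounts (withdrawals) are silently dropped."""
    if event["amount"] > 0:
        account = event["account"]
        balances[account] = balances.get(account, 0) + event["amount"]


def apply_fixed(balances, event):
    """Corrected logic: every amount is applied."""
    account = event["account"]
    balances[account] = balances.get(account, 0) + event["amount"]


def replay(log, apply):
    """Build a fresh view by running one version of the code over the log."""
    balances = {}
    for event in log:
        apply(balances, event)
    return balances


# The buggy view keeps serving reads while the fixed view is rebuilt
# from the same input events into a separate store.
live_view = replay(events, apply_buggy)
rebuilt_view = replay(events, apply_fixed)

# Events keep arriving during the rebuild; both versions process them.
new_event = {"account": "b", "amount": -20}
events.append(new_event)
apply_buggy(live_view, new_event)
apply_fixed(rebuilt_view, new_event)

# Once the rebuilt view has caught up, readers are switched over to it.
serving = rebuilt_view
print(live_view, serving)
```

After the switchover the buggy view can be discarded. The input log is untouched throughout, which is what makes the recovery possible.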

Vadim

: Indeed, it’s a really good option if the storage systems are under your control, and we are not talking about side effects applied to external systems.

Martin

: Yes, you’re right, once we send the data to external systems it gets more difficult because you might not be able to easily correct it. But this is again something you find in financial accounting, for example. In a company, you might have quarterly accounts. At the end of the quarter, everything gets frozen, and all of the revenue and profit calculations are based on the numbers for that quarter. But then it can happen that actually, some delayed transaction came in, because somebody forgot to file a receipt in time. The transaction comes in after the calculations for the quarter have been finalized, but it still belongs in that earlier quarter.

What accountants do in this case is that in the next quarter, they produce corrections to the previous quarter’s accounts. And typically those corrections will be a small number, and that’s no problem because it doesn’t change the big picture. But at the same time, everything is still accounted for correctly. At the human level of these accounting systems that has been the case ever since accounting systems were invented, centuries ago. It’s always been the case that some late transactions would come in and change the result for some number that you thought was final, but actually, it wasn’t because the correction could still come in. And so we just build the system with the mechanism to perform such corrections. I think we can learn from accounting systems and apply similar ideas to many other types of data storage systems, and just accept the fact that sometimes they are mostly correct but not 100% correct and the correction might come in later.
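
A minimal sketch of that correction mechanism, assuming an append-only ledger in which each entry records both the quarter it was booked in and the quarter it applies to. The field names, quarter labels and amounts are hypothetical.

```python
ledger = []  # append-only: entries are never edited after the fact


def record(booked_in, applies_to, amount, note):
    ledger.append(
        {"booked_in": booked_in, "applies_to": applies_to,
         "amount": amount, "note": note}
    )


def reported_total(quarter):
    """The figure as it stood when the quarter was closed."""
    return sum(
        e["amount"] for e in ledger
        if e["booked_in"] == quarter and e["applies_to"] == quarter
    )


def corrected_total(quarter):
    """The figure including corrections booked in later quarters."""
    return sum(e["amount"] for e in ledger if e["applies_to"] == quarter)


record("2019Q1", "2019Q1", 1000, "sales")
record("2019Q1", "2019Q1", -200, "expenses")
# A receipt filed late: booked in Q2, but it belongs to Q1.
record("2019Q2", "2019Q1", -15, "late receipt")

q1_reported = reported_total("2019Q1")
q1_corrected = corrected_total("2019Q1")
print(q1_reported, q1_corrected)
```

Both numbers stay available: the one that was published at the time and the one that accounts for the late entry.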

Vadim

: It’s a different way of looking at building systems.

Martin

: It is a bit of a new way of thinking, yes. It can be disorienting when you come across it at first. But I don’t think there’s really a way round it, because this impreciseness is inherent in the fact that we do not know the entire state of the world — it is fundamental to the way distributed systems work. We can’t just hide it, we can’t pretend that it doesn’t happen, because that imprecision is necessarily exposed in the way we process the data.

Professional growth and development

Vadim

: Do you think that conferences like Hydra are anticipated? Most distributed systems are quite different, and it is hard to imagine that many attendees will get to work and will start applying what they have learned in day-to-day activities.

Martin

: It is broad, but I think that a lot of the interesting ideas in distributed systems are conceptual. So the insights are not necessarily like «use this database» or «use this particular technology». They are more like ways of thinking about systems and about software. And those kinds of ideas can be applied quite widely. My hope is that when attendees go away from this conference, the lessons they take away are not so much what piece of software they should be using or which programming language they should be using (really, I don’t mind about that) but more like how to think about the systems they are building.

Vadim

: Why do you think it’s important to give conference talks on such complex topics as your talk, compared to publishing papers, covering all their details and intricacies? Or should one do both?

Martin

: I think they serve different purposes. When we write papers, the purpose is to have a very definitive, very precise analysis of a particular problem, and to go really deep in that. On the other hand, the purpose of a talk is more to get people interested in a topic and to start a conversation around it. I love going to conferences partly because of the discussions I then have around the talk, where people come to me and say: «oh, we tried something like this, but we ran into this problem and that problem, what do you think about that?» Then I get to think about other people’s problems, and that’s really interesting because I get to learn a lot from that.

So, from my point of view, the selfish reason for going to conferences is really to learn from other people, what their experiences have been, and to help share the experiences that we’ve had in the hope that other people will find them useful as well. But fundamentally, a conference talk is often an introduction to a subject, whereas a paper is a deep analysis of a very narrow question. I think those are different genres and I think we need both of them.

Vadim

: And the last question. How do you personally grow as a professional engineer and a researcher? Could you please recommend any conferences, blogs, books, communities for those who wish to develop themselves in the field of distributed systems?

Martin

: That’s a good question. Certainly, there are things to listen to and to read. There’s no shortage of conference talks that have been recorded and put online. There are books like my own book for example, which provides a bit of an introduction to the topic, but also lots of references to further reading. So if there are any particular detailed questions that you’re interested in, you can follow those references and find the original papers where these ideas were discussed. They can be a very valuable way of learning about something in greater depth.

A really important part is also trying to implement things and seeing how they work out in practice, and talking to other people and sharing your experiences. Part of the value of a conference is that you get to talk to other people as well, live. But you can have that through other mechanisms as well; for example, there’s a Slack channel that people have set up for people interested in distributed systems. If that’s your thing you can join that. You can, of course, talk to your colleagues in your company and try to learn from them. I don’t think there’s one right way of doing this — there are many different ways through which you can learn and get a deeper experience, and different paths will work for different people.

A really important part is also trying to implement things and seeing how they work out in practice, and talking to other people and sharing your experiences. Part of the value of a conference is that you get to talk to other people as well, live. But you can have that through other mechanisms as well; for example, there's a Slack channel that people have set up for people interested in distributed systems . If that's your thing you can join that. You can, of course, talk to your colleagues in your company and try to learn from them. I don't think there's one right way of doing this — there are many different ways through which you can learn and get a deeper experience, and different paths will work for different people.

Vadim: Thank you very much for your advice and the interesting discussion! It has been a pleasure talking to you.

Martin: No problem, yeah, it's been nice talking to you.

Vadim: Let's meet at the conference.

Translated from: https://habr.com/en/company/jugru/blog/458056/
