Scraping Rental Data with Python

Target page: https://sh.lianjia.com/zufang/

The code is as follows:

```python
import json

import requests
# BeautifulSoup parses the HTML
from bs4 import BeautifulSoup
# xlwt writes the Excel (.xls) file
from xlwt import Workbook, XFStyle, Pattern

HEADERS = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 '
                         '(KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'}

# Create a workbook
book = Workbook(encoding='utf-8')
# Add a sheet named sheet1 and allow cells to be overwritten
sheet = book.add_sheet('sheet1', cell_overwrite_ok=True)

# Define a cell style (it is not applied to any cell below)
style = XFStyle()
pattern = Pattern()
pattern.pattern = Pattern.SOLID_PATTERN
pattern.pattern_fore_colour = 0x00  # a colour index (int), not a string
style.pattern = pattern

# Column titles
column_titles = ["Title", "Address", "Price", "Building year", "Tax-free (years held)", "Near subway"]
for col, name in enumerate(column_titles):
    sheet.write(0, col, name)

# Column widths (xlwt measures width in 1/256 of a character)
for col, extra in enumerate([200, 20, 10, 120, 1, 50]):
    sheet.col(col).width = 0x0d00 + extra * 50

# Shanghai district slugs to crawl
citys = ['pudong', 'minhang', 'baoshan', 'xuhui', 'putuo', 'yangpu', 'changning', 'songjiang',
         'jiading', 'huangpu', 'jinan', 'zhabei', 'hongkou', 'qingpu', 'fengxian', 'jinshan',
         'chongming', 'shanghaizhoubian']


def getHtml(city):
    # Note: this crawls the ershoufang (second-hand sale) listings, not /zufang/
    url = 'http://sh.lianjia.com/ershoufang/%s/' % city
    request = requests.get(url=url, headers=HEADERS)
    # .content returns raw bytes so BeautifulSoup can detect the encoding itself (preferred over .text)
    respons = request.content
    # Parse with Python's built-in html.parser (lxml also works if installed)
    soup = BeautifulSoup(respons, 'html.parser')
    # The pagination block; select() returns a list
    pageDiv = soup.select('div .page-box')[0]
    # Its first child carries page-data='{"totalPage":..,"curPage":..}'
    pageData = pageDiv.contents[0].attrs['page-data']
    totalPage = json.loads(pageData)['totalPage']
    print(pageData)
    # Walk every page of the district
    for i in range(totalPage):
        pageIndex = i + 1
        print(city + "========================================= page " + str(pageIndex))
        print("\n")
        # Parse this page and save its rows to the sheet
        saveData(city, url, pageIndex)


def saveData(city, url, pageIndex):
    global row
    urlStr = '%spg%s' % (url, pageIndex)
    print(urlStr)
    html = requests.get(urlStr, headers=HEADERS).content
    soup = BeautifulSoup(html, 'lxml')
    liList = soup.find_all("li", {"class": "clear LOGCLICKDATA"})
    print(len(liList))
    for info in liList:
        title = info.find("div", class_="title").find("a").text
        address = info.find("div", class_="address").find("a").text
        flood = info.find("div", class_="flood").text
        tag = info.find("div", class_="tag")
        # Optional tags: the "subway" and "taxfree" spans may be missing
        subway = tag.find_all("span", class_="subway")
        subway_col = subway[0].text if subway else ""
        taxfree = tag.find_all("span", class_="taxfree")
        taxfree_col = taxfree[0].text if taxfree else ""
        priceInfo = info.find("div", class_="priceInfo").find("div", class_="totalPrice").text
        print(flood)
        for col, value in enumerate([title, address, priceInfo, flood, taxfree_col, subway_col]):
            sheet.write(row, col, value)
        row += 1


# Run only when this script is executed directly; if another script
# imports this file, the block below does not run
if __name__ == '__main__':
    row = 1  # row 0 holds the column titles
    for i in citys:
        getHtml(i)
    # Save the workbook at the end; with no directory given it goes to the current path
    book.save('lianjia-shanghai.xls')
```

The result is shown in the figure below:

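The approach:

- First collect the name and URL of every district, join each district path onto the main URL to get a complete link, then loop over those links and scrape each district's rental listings in turn.

- For each district, find the highest page number, then loop over the pages and scrape the listings on each one.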
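The two steps above come down to reading a district's page count from the `page-data` attribute and turning it into one URL per page. Below is a minimal stdlib-only sketch, assuming the `page-data='{"totalPage":N,"curPage":1}'` markup and the `<district link>pgN` URL pattern that both scrapers in this post rely on; `page_urls` is a hypothetical helper name, and the sample HTML is a hand-written stand-in for a real page.

```python
import json
import re

# Matches the JSON stored in the page-data attribute (markup assumed, as in the scrapers in this post)
PAGE_DATA = re.compile(r"page-data='(\{[^']*\})'")


def page_urls(area_link, html):
    """Hypothetical helper: build the URL of every page of one district."""
    match = PAGE_DATA.search(html)
    if match is None:
        return []  # no pagination block found
    total = json.loads(match.group(1))["totalPage"]
    # Page N of a district is assumed to live at <district link>pgN
    return [area_link + "pg" + str(n) for n in range(1, total + 1)]


sample = """<div class="page-box" page-data='{"totalPage":3,"curPage":1}'></div>"""
print(page_urls("https://bj.lianjia.com/zufang/dongcheng/", sample))
```

Keeping the page count parsing separate from the HTTP calls makes it easy to check against a saved page before hitting the live site.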

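Before posting the code, a quick word on the Python packages it uses:

- requests: sends the HTTP requests to Lianjia.

- lxml: parses the pages; XPath expressions, combined with regular expressions, extract the listing fields. It is faster than bs4.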
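XPath hands back raw text nodes; regular expressions then pull the individual fields out of them. The sketch below shows only the regex half, using the same patterns as `get_house_info` on sample strings whose formats (such as `中楼层(共6层)` with an ASCII parenthesis) are assumptions rather than captured page text; `first_group` is a hypothetical helper.

```python
import re


def first_group(pattern, text):
    """Return the first captured group of pattern in text, or "" if absent."""
    found = re.findall(pattern, text)
    return found[0] if found else ""


# Sample text nodes (assumed formats, mirroring the fields scraped below)
square_text = "  56平米  "
floor_text = "中楼层(共6层)"
place_text = "安定门租房"
year_text = "1990年建板楼"

square = first_group(r"(\d+)", square_text)               # digits of the floor area
floor = first_group(r"([\u4E00-\u9FA5]+)\(", floor_text)  # CJK characters before "("
total_floor = first_group(r"(\d+)", floor_text)           # first number: total floors
detail_place = first_group(r"([\u4E00-\u9FA5]+)租房", place_text)
house_year = first_group(r"(\d+)", year_text)

print(square, floor, total_floor, detail_place, house_year)
```

Returning an empty string instead of raising keeps one malformed listing from aborting a whole page.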

The code:

```python
import re
import time

import requests
from lxml import etree

HEADERS = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 '
                         '(KHTML, like Gecko) Chrome/63.0.3239.108 Safari/537.36'}


def first_match(pattern, texts, i):
    # First regex group found in texts[i], or "" when the node or the match is missing
    try:
        return re.findall(pattern, texts[i])[0]
    except IndexError:
        return ""


# Collect every district link of the city
def get_areas(url):
    print('start grabbing areas')
    response = requests.get(url, headers=HEADERS)
    content = etree.HTML(response.text)
    areas = content.xpath("//dd[@data-index = '0']//div[@class='option-list']/a/text()")
    areas_link = content.xpath("//dd[@data-index = '0']//div[@class='option-list']/a/@href")
    # Start at 1 to skip the first option (likely the "all districts" entry)
    for i in range(1, len(areas)):
        link = 'https://bj.lianjia.com' + areas_link[i]
        print("start fetching pages")
        get_pages(areas[i], link)


# Read a district's page count, then build the link of each page
def get_pages(area, area_link):
    response = requests.get(area_link, headers=HEADERS)
    pages = int(re.findall(r"page-data='{\"totalPage\":(\d+),\"curPage\"", response.text)[0])
    print("this district has " + str(pages) + " pages")
    for page in range(1, pages + 1):
        # Assumes area_link ends with "/", e.g. https://bj.lianjia.com/zufang/dongcheng/
        url = area_link + 'pg' + str(page)
        print("start fetching page " + str(page))
        get_house_info(area, url)


# Fetch the detailed rental info on one page of one district
def get_house_info(area, url, retries=3):
    time.sleep(2)
    try:
        response = requests.get(url, headers=HEADERS)
    except requests.RequestException:
        if retries == 0:
            print('giving up on ' + url)
            return
        print('ooops! connecting error, retrying.....')
        time.sleep(20)
        return get_house_info(area, url, retries - 1)
    content = etree.HTML(response.text)
    # Evaluate each XPath once per page rather than once per listing
    titles = content.xpath("//div[@class='where']/a/span/text()")
    room_types = content.xpath("//div[@class='where']/span[1]/span/text()")
    squares = content.xpath("//div[@class='where']/span[2]/text()")
    positions = content.xpath("//div[@class='where']/span[3]/text()")
    places = content.xpath("//div[@class='other']/div/a/text()")
    floors = content.xpath("//div[@class='other']/div/text()[1]")
    years = content.xpath("//div[@class='other']/div/text()[2]")
    prices = content.xpath("//div[@class='col-3']/div/span/text()")
    # Up to 30 listings per page; the last page may hold fewer
    count = min(len(titles), len(room_types), len(positions), len(prices))
    with open('链家北京租房.txt', 'a', encoding='utf-8') as f:
        for i in range(count):
            # Columns: area, title, room_type, square, position,
            # detail_place, floor, total_floor, price, house_year
            row = [area, titles[i], room_types[i],
                   first_match(r"(\d+)", squares, i),
                   positions[i].replace(" ", ""),
                   first_match(r"([\u4E00-\u9FA5]+)租房", places, i),
                   first_match(r"([\u4E00-\u9FA5]+)\(", floors, i),
                   first_match(r"(\d+)", floors, i),
                   prices[i],
                   first_match(r"(\d+)", years, i)]
            f.write(','.join(row) + '\n')
    print('writing work has done! continue the next page')


def main():
    print('start!')
    url = 'https://bj.lianjia.com/zufang'
    get_areas(url)


if __name__ == '__main__':
    main()
```

Unit layouts differ a lot from one complex to another and the complexes are spread across the city, so each complex really has to be analyzed on its own.

Code:

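```python
import pandas as pd
from pyecharts import Bar, Line, Overlap, Pie  # pyecharts 0.5.x API

# Load the file written by the scraper (assumes no field contains a comma)
df = pd.read_csv('链家北京租房.txt', encoding='utf-8',
                 names=['area', 'title', 'room_type', 'square', 'position',
                        'detail_place', 'floor', 'total_floor', 'price', 'house_year'])

# Beijing streets: average rent distribution
detail_place = df.groupby(['detail_place'])
house_com = detail_place['price'].agg(['mean', 'count'])
house_com.reset_index(inplace=True)
# The 20 streets with the most listings
detail_place_main = house_com.sort_values('count', ascending=False)[0:20]
attr = detail_place_main['detail_place']
v1 = detail_place_main['count']
v2 = detail_place_main['mean']
line = Line("Average rent on Beijing's main streets")
line.add("Street", attr, v2, is_stack=True, xaxis_rotate=30, mark_point=['min', 'max'],
         xaxis_interval=0, line_color='lightblue', line_width=4,
         mark_point_textcolor='black', mark_point_color='lightblue', is_splitline_show=False)
bar = Bar("Number of listings on Beijing's main streets")
bar.add("Street", attr, v1, is_stack=True, xaxis_rotate=30, xaxis_interval=0,
        is_splitline_show=False)
# Overlay the line on the bars, giving the line its own y-axis
overlap = Overlap()
overlap.add(bar)
overlap.add(line, yaxis_index=1, is_add_yaxis=True)
overlap.render('北京路段_房屋均价分布图.html')
```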

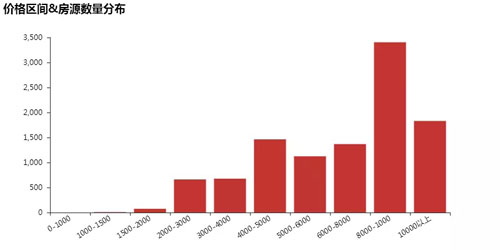
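What `groupby(...)['price'].agg(['mean', 'count'])` followed by `sort_values('count', ascending=False)[0:20]` computes can be spelled out with the standard library, which may help when sanity-checking the chart's numbers. This is a sketch, not the author's code: `top_streets` is a hypothetical name and the rows are toy values, not Lianjia data.

```python
from collections import defaultdict


def top_streets(rows, n=20):
    """Group (street, price) rows; return (street, mean, count) for the n streets with most listings."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for street, price in rows:
        totals[street] += price
        counts[street] += 1
    stats = [(s, totals[s] / counts[s], counts[s]) for s in counts]
    # Most listings first, like sort_values('count', ascending=False)
    stats.sort(key=lambda item: item[2], reverse=True)
    return stats[:n]


# Toy rows for illustration only
rows = [("A", 3000), ("A", 5000), ("B", 4000), ("A", 4000), ("B", 6000), ("C", 2500)]
print(top_streets(rows, n=2))
```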

Both floor area and rent show a tiered distribution.

```python
# Distribution of listings by price range
price_info = df[['area', 'price']]
# Bucket the prices; each bin is right-closed, e.g. (1000, 1500]
bins = [0, 1000, 1500, 2000, 2500, 3000, 4000, 5000, 6000, 8000, 10000, float('inf')]
level = ['0-1000', '1000-1500', '1500-2000', '2000-2500', '2500-3000', '3000-4000',
         '4000-5000', '5000-6000', '6000-8000', '8000-10000', '10000+']
price_stage = pd.cut(price_info['price'], bins=bins, labels=level).value_counts().sort_index()
attr = price_stage.index
v1 = price_stage.values
bar = Bar("Price range vs. number of listings")
bar.add("", attr, v1, is_stack=True, xaxis_rotate=30, xaxis_interval=0, is_splitline_show=False)
overlap = Overlap()
overlap.add(bar)
overlap.render('价格区间&房源数量分布.html')
```

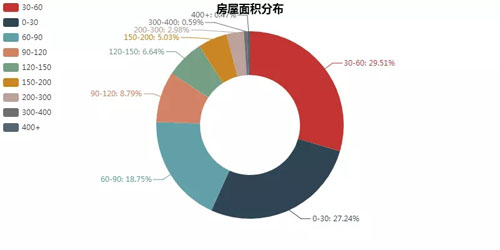
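`pd.cut` assigns each value to a right-closed interval `(left, right]`, and `value_counts().sort_index()` then counts values per bin in bin order. A stdlib sketch of the same logic with `bisect`, assuming the price bin edges above with an open-ended last bucket (`count_by_bin` is a hypothetical name):

```python
from bisect import bisect_left

BINS = [0, 1000, 1500, 2000, 2500, 3000, 4000, 5000, 6000, 8000, 10000, float("inf")]
LABELS = ['0-1000', '1000-1500', '1500-2000', '2000-2500', '2500-3000', '3000-4000',
          '4000-5000', '5000-6000', '6000-8000', '8000-10000', '10000+']


def count_by_bin(prices, bins=BINS, labels=LABELS):
    """Count prices per right-closed bin (left, right], keeping bin order like sort_index()."""
    counts = {label: 0 for label in labels}
    for p in prices:
        i = bisect_left(bins, p)  # first edge >= p, so p falls in (bins[i-1], bins[i]]
        if 1 <= i < len(bins):    # p <= 0 (or NaN) falls outside every bin, as with pd.cut
            counts[labels[i - 1]] += 1
    return counts


print(count_by_bin([800, 1000, 1001, 4500, 12000]))
```

Note that a price of exactly 1000 lands in `0-1000`, not `1000-1500`, because the bins are closed on the right.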

```python
# Distribution of floor area (square metres)
bins = [0, 30, 60, 90, 120, 150, 200, 300, 400, float('inf')]
level = ['0-30', '30-60', '60-90', '90-120', '120-150', '150-200', '200-300', '300-400', '400+']
df['square_level'] = pd.cut(df['square'], bins=bins, labels=level)
df_digit = df[['area', 'room_type', 'square', 'position', 'total_floor', 'floor',
               'house_year', 'price', 'square_level']]
s = df_digit['square_level'].value_counts()
attr = s.index
v1 = s.values
pie = Pie("Floor-area distribution", title_pos='center')
pie.add("", attr, v1, radius=[40, 75], label_text_color=None, is_label_show=True,
        legend_orient="vertical", legend_pos="left")
overlap = Overlap()
overlap.add(pie)
overlap.render('房屋面积分布.html')
```

```python
# Floor area vs. price: average rent in each floor-area bucket
# (reuses df['square_level'] computed above)
square = df[['square_level', 'price']]
prices = square.groupby('square_level', observed=False).mean().reset_index()
attr = prices['square_level']
v1 = prices['price']
bar = Bar("Floor area vs. price")
bar.add("", attr, v1, is_stack=True, xaxis_rotate=30, xaxis_interval=0, is_splitline_show=False)
overlap = Overlap()
overlap.add(bar)
overlap.render('房屋面积&价位分布.html')
```
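The last chart averages rent within each floor-area bucket. Spelled out with the standard library under the same right-closed binning, it might look like the sketch below; `mean_price_by_area` is a hypothetical name, the rows are toy values, and empty buckets get `None`, roughly where pandas reports NaN with `observed=False`.

```python
from bisect import bisect_left
from statistics import mean

AREA_BINS = [0, 30, 60, 90, 120, 150, 200, 300, 400, float("inf")]
AREA_LABELS = ['0-30', '30-60', '60-90', '90-120', '120-150', '150-200',
               '200-300', '300-400', '400+']


def mean_price_by_area(rows):
    """rows: (square_metres, monthly_price) pairs; returns {bucket: mean price or None}."""
    groups = {label: [] for label in AREA_LABELS}
    for square, price in rows:
        i = bisect_left(AREA_BINS, square)  # right-closed bins, as pd.cut uses by default
        if 1 <= i < len(AREA_BINS):
            groups[AREA_LABELS[i - 1]].append(price)
    return {label: (mean(p) if p else None) for label, p in groups.items()}


# Toy rows for illustration only
result = mean_price_by_area([(25, 3000), (30, 3400), (45, 5000), (500, 30000)])
print(result['0-30'], result['30-60'], result['400+'], result['60-90'])
```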

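Excerpted from: "爬取了上万条租房数据,你还要不要北漂" (I scraped over ten thousand rental listings: do you still want to move to Beijing?)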
