One by One [ 1 x 1 ] Convolution - counter-intuitively useful

Whenever I discuss or show the GoogLeNet architecture, one question always comes up:

"Why 1x1 convolution? Is it not redundant?"

Left: convolution with a kernel of size 3x3. Right: convolution with a kernel of size 1x1.

Simple Answer

The simplest explanation is that 1x1 convolution performs dimensionality reduction. For example, convolving a 200 x 200 image that has 50 features with 20 filters of size 1x1 yields an output of size 200 x 200 x 20. But then again, is this the best way to do dimensionality reduction in a convolutional neural network? What about efficacy vs. efficiency?
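
The shape arithmetic in the example above can be checked with a minimal NumPy sketch. The helper name `conv1x1`, the channels-last layout, and the random weights are assumptions made for illustration only; a real network would learn `w`.

```python
import numpy as np

def conv1x1(x, w):
    """Apply a 1x1 convolution.

    x: feature map of shape (H, W, C_in)
    w: filter bank of shape (C_in, C_out), one column per 1x1 filter
    """
    # Contract the channel axis of x with the input axis of w.
    return np.tensordot(x, w, axes=([2], [0]))

rng = np.random.default_rng(0)
x = rng.standard_normal((200, 200, 50))   # 200 x 200 image with 50 features
w = rng.standard_normal((50, 20))         # 20 filters of size 1x1 (x 50)

y = conv1x1(x, w)
print(y.shape)  # (200, 200, 20)
```

Each output pixel depends only on the 50 input values at the same location, so the spatial size is untouched and only the feature dimension shrinks.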

Complex Answer

Feature transformation

Although 1x1 convolution is a ‘feature pooling’ technique, there is more to it than just sum pooling of features across the various channels/feature-maps of a given layer. 1x1 convolution acts like a coordinate-dependent transformation in the filter space[1]. It is important to note that this transformation is strictly linear, but in most applications a 1x1 convolution is followed by a non-linear activation layer such as ReLU. The transformation is learned through (stochastic) gradient descent. An important distinction is that it is less prone to over-fitting because of the smaller kernel size (1x1).
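
One rough way to see why the smaller kernel leaves less room for over-fitting is to count learnable parameters. The sketch below reuses the 50-to-20 channel example from earlier; the helper name `conv_param_count` is hypothetical, and parameter count is only a proxy for capacity, not a guarantee about generalization.

```python
def conv_param_count(kernel_size, c_in, c_out, bias=True):
    """Number of learnable parameters in a k x k convolution layer."""
    weights = kernel_size * kernel_size * c_in * c_out
    return weights + (c_out if bias else 0)

p1 = conv_param_count(1, 50, 20)  # 1*1*50*20 weights + 20 biases
p3 = conv_param_count(3, 50, 20)  # 3*3*50*20 weights + 20 biases
print(p1, p3)  # 1020 9020
```

For the same number of input and output channels, the 1x1 layer has roughly nine times fewer weights than the 3x3 layer.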

Deeper Network

One by One convolution was first introduced in the paper titled Network in Network. In this paper, the authors' goal was to generate a deeper network without simply stacking more layers. It replaces a few filters with a smaller perceptron layer that mixes 1x1 and 3x3 convolutions. In a way, it can be seen as “going wide” instead of “deep”, but it should be noted that in machine learning terminology, ‘going wide’ often means adding more data to the training. A cascade of 1x1 (x F) convolutions is mathematically equivalent to a multi-layer perceptron[2].
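
The equivalence with a multi-layer perceptron can be checked numerically. This is a minimal sketch, assuming a channels-last layout, ReLU after every layer, and arbitrary layer sizes (8, 16, 4); the function names are made up for the example and are not taken from the paper.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def conv1x1_stack(x, weights, biases):
    """Cascade of 1x1 convolutions, each followed by ReLU. x: (H, W, C)."""
    h = x
    for w, b in zip(weights, biases):
        h = relu(np.einsum("hwc,cd->hwd", h, w) + b)
    return h

def mlp(v, weights, biases):
    """Ordinary MLP applied to a single feature vector v of shape (C,)."""
    h = v
    for w, b in zip(weights, biases):
        h = relu(h @ w + b)
    return h

rng = np.random.default_rng(0)
sizes = [8, 16, 4]  # illustrative channel counts
weights = [rng.standard_normal((n_in, n_out))
           for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [rng.standard_normal(n_out) for n_out in sizes[1:]]

x = rng.standard_normal((5, 6, 8))
out = conv1x1_stack(x, weights, biases)

# Run the same MLP separately on the channel vector of every pixel.
per_pixel = np.array([[mlp(x[i, j], weights, biases) for j in range(6)]
                      for i in range(5)])
print(np.allclose(out, per_pixel))  # True
```

In other words, the stack of 1x1 convolutions is one small MLP whose weights are shared across all spatial positions.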

Inception Module

In the GoogLeNet architecture, 1x1 convolution is used for three purposes:

- To make the network deeper by adding an “inception module”, as in the Network in Network paper described above.

- To reduce the dimensions inside this “inception module”.

- To add more non-linearity by having ReLU immediately after every 1x1 convolution.
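
To make the dimension-reduction purpose concrete, here is a back-of-the-envelope multiplication count for a 5x5 branch with and without a 1x1 reduction in front of it. The sizes (a 28 x 28 x 192 input, 16 reduction channels, 32 output filters) are illustrative assumptions, and `conv_multiplies` is a hypothetical helper that assumes stride 1 and same padding.

```python
def conv_multiplies(h, w, k, c_in, c_out):
    """Multiplications for a k x k convolution with an h x w x c_out output."""
    return h * w * k * k * c_in * c_out

H, W = 28, 28
C_IN, C_MID, C_OUT = 192, 16, 32  # illustrative channel counts

# 5x5 convolution applied directly to all 192 input channels.
direct = conv_multiplies(H, W, 5, C_IN, C_OUT)

# 1x1 reduction to 16 channels first, then the 5x5 convolution.
reduced = (conv_multiplies(H, W, 1, C_IN, C_MID)
           + conv_multiplies(H, W, 5, C_MID, C_OUT))

print(direct, reduced)  # 120422400 12443648
```

Under these assumptions the reduced branch needs a bit less than a tenth of the multiplications of the direct one.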

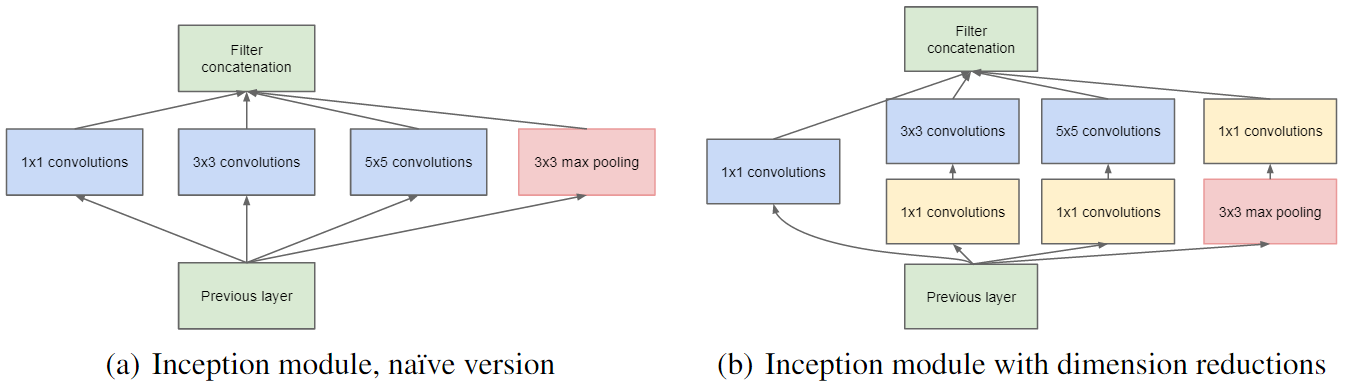

Here is the screenshot from the paper, which elucidates the above points:

1x1 convolutions in GoogLeNet

It can be seen from the image on the right that 1x1 convolutions (in yellow) are used specifically before the 3x3 and 5x5 convolutions to reduce the dimensions. Note that a two-step convolution operation can always be combined into one, but in this case, as in most other deep learning networks, the convolutions are followed by a non-linear activation, so they are no longer linear operators and cannot be combined.
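
The point about combining two steps can be illustrated for the 1x1 case, where a convolution is just a matrix product over the channel axis. This is a sketch with made-up shapes and random weights, not code from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 4, 6))   # feature map, channels last
w1 = rng.standard_normal((6, 3))     # first 1x1 convolution: 6 -> 3 channels
w2 = rng.standard_normal((3, 5))     # second 1x1 convolution: 3 -> 5 channels

# Without an activation, two 1x1 convolutions collapse into one.
two_step = (x @ w1) @ w2
one_step = x @ (w1 @ w2)
linear_match = np.allclose(two_step, one_step)

# With a ReLU in between, the merged filter no longer gives the same result.
with_relu = np.maximum(x @ w1, 0.0) @ w2
relu_match = np.allclose(with_relu, one_step)

print(linear_match, relu_match)  # True False
```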

In designing such a network, it is important to note that the initial convolution kernel should be larger than 1x1 so that its receptive field can capture local spatial information. According to the NIN paper, 1x1 convolution is equivalent to a cross-channel parametric pooling layer. From the paper: “This cascaded cross channel parameteric pooling structure allows complex and learnable interactions of cross channel information”.

Cross-channel information learning (cascaded 1x1 convolution) is biologically inspired, because the human visual cortex has receptive fields (kernels) tuned to different orientations. For example:

Different orientation-tuned receptive field profiles in the human visual cortex (Source)

More Uses

- 1x1 convolution can be combined with max pooling.

Pooling with 1x1 convolution

- 1x1 convolution with higher strides leads to even more reduction in data by decreasing the resolution, while losing very little non-spatially correlated information.

1x1 convolution with strides
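
A strided 1x1 convolution can be sketched as subsampling followed by channel mixing, because each output pixel only ever looks at one input pixel. The function name `conv1x1_strided` and the shapes are assumptions for illustration.

```python
import numpy as np

def conv1x1_strided(x, w, stride=1):
    """1x1 convolution with a stride: keep every stride-th pixel, then mix channels."""
    return x[::stride, ::stride, :] @ w

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))
w = rng.standard_normal((16, 4))

y = conv1x1_strided(x, w, stride=2)
print(y.shape)  # (4, 4, 4)
```

The skipped pixels are simply discarded, which is why only information that is not spatially correlated with the kept pixels is lost.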

- Replace fully connected layers with 1x1 convolutions, as Yann LeCun believes they are the same:

> In Convolutional Nets, there is no such thing as “fully-connected layers”. There are only convolution layers with 1x1 convolution kernels and a full connection table. – Yann LeCun
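
The last point can be illustrated with a small sketch: a dense layer applied to a feature vector gives the same numbers as a 1x1 convolution applied to a 1 x 1 feature map, and the same weights then slide over a larger input for free. The layer sizes (64 inputs, 10 outputs) and the function names are assumptions for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 10))  # shared weights: 64 features -> 10 outputs
b = rng.standard_normal(10)

def dense(v):
    """Fully connected layer on a single feature vector of shape (64,)."""
    return v @ w + b

def conv1x1(x):
    """1x1 convolution with the same weights on a map of shape (H, W, 64)."""
    return np.einsum("hwc,cd->hwd", x, w) + b

v = rng.standard_normal(64)
same = np.allclose(dense(v), conv1x1(v.reshape(1, 1, 64))[0, 0])
print(same)  # True

# On a larger input the same layer yields one output vector per location.
big = rng.standard_normal((3, 3, 64))
scores = conv1x1(big)
print(scores.shape)  # (3, 3, 10)
```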

The convolution GIF images were generated using this wonderful code; more images of 1x1 and 3x3 convolutions can be found here.
