iOS Live-Streaming Apps: HTTP Live Streaming

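Compared with RTSP, the advantage of this approach is that once the media has been segmented, distribution needs no special software at all: an ordinary web server is enough. This greatly lowers the requirements on CDN edge servers, and any off-the-shelf CDN can be used.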

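The same benefit applies to non-live video. Suppose you want to seek within an hour-long video delivered as a single MP4 file, also over HTTP. The proxy server then has to support HTTP range requests to fetch part of the large file, and not all proxy servers support this well.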

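HTTP Live Streaming has another big advantage: adaptive bitrate streaming. The client automatically chooses among streams of different bitrates according to network conditions. It uses a high bitrate when conditions allow and a low bitrate when the network is congested, and it switches between them freely.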

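Why TS rather than MP4? Two TS segments can be joined seamlessly, so the player can play them back to back. Two MP4 files cannot be joined seamlessly because of how the format is structured. A player that plays two MP4 files in a row produces audio pops and visual gaps, which hurts the user experience.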

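A couple of years ago I experimented with a web radio application built from the HTML5 `<audio>` tag, CBR MP3, and the Icecast streaming server. It was intended as a Livecast for http://apple4.us, so that listeners could hear interview shows almost in real time just by visiting a web page, and it used exactly the HTTP Live Streaming idea. By splitting the MP3 audio stream at frame boundaries, continuous playback basically worked. The only problem was that browsers did not support the TS format, and the `<audio>` tag produced audible glitches when switching between two MP3 segments. As a result it only suited talk content; with continuous music the seams were sometimes audible.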

Enabling HTTP Live Streaming on iOS devices is very simple, and it is the approach Apple officially recommends.

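The commonly used streaming approaches are mainly HTTP progressive download and real-time streaming protocols based on RTSP/RTP.

The two are fundamentally different. For convenience and ease of use, I recommend the HTTP-based approach.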

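Apple's HTTP Live Streaming is the representative technology here. Apple originally developed it for mobile devices such as the iPhone, iPod, iPod touch, and iPad. It is now widely used on desktops as well, and Safari plays it directly through the HTML5 `<video>` element.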

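Let's look at how HTTP Live Streaming works. With conventional download-based playback, the whole file has to be downloaded before it can play. With HLS, the web server delivers near-real-time audio and video to the client. Because everything travels over standard HTTP, HLS can provide both on-demand and live content on ordinary HTTP infrastructure.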

To understand the details, let's first look at the steps involved.

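Video capture -> encoder -> stream segmenter -> ordinary web server (index file and video files) -> client

Content preparation takes roughly two forms:

- Live capture: the encoder compresses audio and video captured in real time by a camera into elementary streams that conform to a specific standard.
- Existing files: you start from files that are already encoded.

In the setup described here, the video must be H.264 and the audio AAC, because those were the codecs Apple, which designed HLS, supported.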

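These streams are then packaged as MPEG-2. MPEG-2 defines both a Transport Stream (TS) and a Program Stream (PS), and HLS uses TS. The reason is that it interleaves audio and video and carries keyframes, so playback can start directly.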
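To make the packaging step concrete: every MPEG-2 TS packet is 188 bytes long and begins with the sync byte 0x47. That byte is followed by flags, a 13-bit PID identifying which elementary stream the packet belongs to, and a 4-bit continuity counter. The C sketch below reads only that fixed 4-byte header. It illustrates the format and is not code from any tool named in this article; the `TsHeader` type and `ts_parse_header` function are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TS_PACKET_SIZE 188
#define TS_SYNC_BYTE 0x47

/* Fields of the fixed 4-byte MPEG-TS packet header (hypothetical type). */
typedef struct {
    bool payload_unit_start; /* set when a PES packet or PSI section starts here */
    uint16_t pid;            /* 13-bit packet identifier */
    uint8_t adaptation;      /* 2-bit adaptation_field_control */
    uint8_t continuity;      /* 4-bit continuity_counter */
} TsHeader;

/* Parses the header of one 188-byte packet; returns false on a bad sync byte. */
static bool ts_parse_header(const uint8_t *pkt, size_t len, TsHeader *out) {
    assert(pkt != NULL && out != NULL);
    if (len < TS_PACKET_SIZE || pkt[0] != TS_SYNC_BYTE)
        return false;
    out->payload_unit_start = (pkt[1] & 0x40) != 0;
    out->pid = (uint16_t)(((pkt[1] & 0x1F) << 8) | pkt[2]);
    out->adaptation = (pkt[3] >> 4) & 0x03;
    out->continuity = pkt[3] & 0x0F;
    return true;
}
```

A muxer writes these same fields in the other direction for every packet of audio, video, PAT, and PMT data.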

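Stream segmentation is where HLS differs most from RTSP and ordinary on-demand delivery: it splits the MPEG-2 stream into many .ts files. Segmentation is usually done by time. English-language sources recommend 10-second segments, or 5 seconds for high-bitrate streams.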

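Segmentation has one more difference: the stream segmenter also generates an index file containing pointers to these small TS files, so this file must be on the web server too. Every 10 seconds a new .ts file is added, and the index is updated to list the latest few segments.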

Finally, the resulting series of small .ts files is simply placed on an ordinary web server. A CDN works the same way, because these files are requested over standard HTTP.

The index file has the .m3u8 extension and uses the extended M3U playlist format; it is just a text file.

The video URLs inside it look like this:

http://media.example.com/s_96ksegment1.ts

http://media.example.com/s_96ksegment2.ts

http://media.example.com/s_96ksegment3.ts

These files can therefore be cached directly and placed on a web server; simple and convenient.

If the server does not send the right MIME types, remember to add the ts and m3u8 extensions:

.m3u8 application/x-mpegURL

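.ts video/MP2T

Finally, the client. Browsers with native HLS support, such as Safari, can play this kind of stream directly through the HTML5 `<video>` tag:

```html
<video tabindex="0" height="480" width="640">
<source src="/content1/content1.m3u8">
</video>
```

Application clients (such as Safari or QuickTime) need software support for HLS. The client downloads files according to the index of the selected stream and starts playing once it has downloaded at least two segments. It continues until the end of the m3u8 index.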

In addition, HLS can be set up for adaptive bitrate streaming, downloading video at different bitrates under different network conditions.

So whether live or on demand, HTTP Live Streaming achieves near-real-time streaming playback. In theory, the minimum latency is the length of one segment.

HTTP Live Streaming Live Broadcasting (iOS Live Streaming): Analysis and Implementation

This part analyzes HTTP Live Streaming (HLS) and implements an HLS encoder, HLSLiveEncoder, written in C++.

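HLSLiveEncoder captures the camera and microphone and encodes H.264 video and AAC audio in real time. Following the HLS specification, it produces segmented standard TS files and an m3u8 index file.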

Using HLSLiveEncoder together with a third-party HTTP server (for example, Nginx), HTTP Live Streaming live broadcasting was achieved and tested successfully on an iPhone.

Key Points of HLS

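HTTP Live Streaming (HLS) is an HTTP-based streaming protocol implemented by Apple Inc. It supports both live and on-demand streaming. It is used mainly on iOS, where it provides live and on-demand audio and video for devices such as the iPhone and iPad.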

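HLS on demand is basically ordinary segmented HTTP on demand, except that the segments are very small. The key to HLS on demand is segmenting the media files, and many open-source tools already do this, so only HLS live streaming is discussed here.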

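Compared with common live streaming protocols such as RTMP, RTSP, and MMS, the biggest difference in HLS live streaming is that the client does not receive one complete, continuous data stream.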

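On the server, HLS stores the live stream as a sequence of very short media files in MPEG-TS format, and the client keeps downloading and playing these small files. The server always turns the latest live data into new small files, so the client achieves live playback simply by playing the files it fetches, in order.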

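HLS can therefore be seen as implementing live streaming with on-demand techniques. Because data travels over HTTP, firewalls and proxies are not a concern. Because segments are short, the client can quickly choose and switch bitrates to adapt to different bandwidth conditions.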

However, this design means HLS latency is generally higher than that of conventional streaming protocols.

Based on the above, implementing HTTP Live Streaming live broadcasting requires the following key pieces:

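- Capturing data from the video and audio sources

- Encoding the raw data to H.264 and AAC

- Packaging the video and audio data into MPEG-TS packets

- The HLS segmentation strategy and the m3u8 index file

- The HTTP transport protocol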

Points 1 and 2 were covered in my earlier articles, and for the last point we can use an existing HTTP server. Implementing points 3 and 4 is therefore the key.

Original article: http://www.cnblogs.com/haibindev/archive/2013/01/30/2880764.html

Program Architecture and Implementation

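With the analysis above, the logic and flow of the HLS LiveEncoder are clear:

1. Start separate audio and video encoding threads.
2. Capture audio and video with DirectShow (or another technology).
3. Encode video with libx264 and audio with libfaac.
4. As the two threads encode data in real time, store it in an MPEG-TS segment file according to a custom segmentation strategy.
5. When a segment file is complete, update the m3u8 index file.

This flow is shown in the figure below: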

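In the figure above, when HLSLiveEncoder receives video and audio data, it first has to decide whether the current segment should end and a new one be created, so that TS segments keep being produced.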

Note that a new segment should start with a keyframe; otherwise the player may fail to decode it. The core code is as follows:

The TsMuxer interface is also quite simple.

HLS Segmentation Strategy and m3u8

1. Segmentation strategy

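- The recommended HLS segment length is basically 10 seconds, although each segment should be labeled with its actual duration once it has been cut.

- Typically, for caching and similar reasons, the index file keeps the addresses of the latest three segments and is updated as a "sliding window".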
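Below is a minimal C sketch of the three-segment sliding window. It assumes fixed-size arrays and segment names under 64 characters; `SegmentWindow` and `window_push` are hypothetical names. Each time the oldest entry drops out, the first sequence number advances by one, and that value is what the playlist publishes as #EXT-X-MEDIA-SEQUENCE. A real segmenter would also delete expired .ts files from disk. It should do so only after a delay, because the HLS specification (RFC 8216) asks servers to keep a removed segment available for a while after its URI leaves the playlist.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define WINDOW_SIZE 3  /* keep the newest three segments, as described above */
#define NAME_LEN 64

/* Hypothetical window of the newest segments, oldest first. */
typedef struct {
    char names[WINDOW_SIZE][NAME_LEN];
    double durations[WINDOW_SIZE];
    int count;       /* entries in use, at most WINDOW_SIZE */
    long first_seq;  /* media sequence number of names[0] */
} SegmentWindow;

/* Adds a finished segment. Once the window is full, the oldest entry is evicted
   and first_seq advances; that is the value for #EXT-X-MEDIA-SEQUENCE. */
static void window_push(SegmentWindow *w, const char *name, double duration) {
    assert(w != NULL && name != NULL);
    if (w->count == WINDOW_SIZE) {
        memmove(w->names[0], w->names[1], sizeof w->names[0] * (WINDOW_SIZE - 1));
        memmove(&w->durations[0], &w->durations[1],
                sizeof w->durations[0] * (WINDOW_SIZE - 1));
        w->count--;
        w->first_seq++;
    }
    snprintf(w->names[w->count], NAME_LEN, "%s", name); /* truncates long names */
    w->durations[w->count] = duration;
    w->count++;
}
```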

2. About m3u8 files

m3u8 is the index file for HTTP Live Streaming.

An m3u8 file is essentially an .m3u file; the difference is that m3u8 files use UTF-8 character encoding.

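#EXTM3U: the M3U file header; must be the first line

#EXT-X-MEDIA-SEQUENCE: sequence number of the first TS segment in the playlist

#EXT-X-TARGETDURATION: maximum duration of each TS segment

#EXT-X-ALLOW-CACHE: whether caching is allowed

#EXT-X-ENDLIST: end-of-playlist marker

#EXTINF: extra information about a TS segment, such as its duration (plus an optional title)

Figure: a simple m3u8 index file.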
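Putting these tags together, here is a sketch of a function that writes a media playlist into a caller-supplied buffer. It is illustrative only; `write_media_playlist` is a hypothetical name. The function:

- puts #EXTM3U on the first line;
- writes durations as floating-point values under #EXT-X-VERSION:3;
- derives #EXT-X-TARGETDURATION so that every #EXTINF value, rounded to the nearest integer, does not exceed it, which is the rule in the published HLS specification (RFC 8216);
- leaves out #EXT-X-ALLOW-CACHE, which later protocol versions dropped.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Writes a media playlist into buf. Returns the number of characters written,
   or -1 if buf is too small. Hypothetical helper for illustration. */
static int write_media_playlist(char *buf, size_t cap, long first_seq,
                                const char *const names[], const double durations[],
                                int count, bool ended) {
    assert(buf != NULL && count >= 0);
    /* Target duration: the largest EXTINF value rounded to the nearest integer
       (durations are non-negative, so adding 0.5 and truncating rounds). */
    long target = 0;
    for (int i = 0; i < count; i++) {
        long rounded = (long)(durations[i] + 0.5);
        if (rounded > target) target = rounded;
    }
    int n = snprintf(buf, cap,
                     "#EXTM3U\n#EXT-X-VERSION:3\n#EXT-X-TARGETDURATION:%ld\n"
                     "#EXT-X-MEDIA-SEQUENCE:%ld\n", target, first_seq);
    if (n < 0 || (size_t)n >= cap) return -1;
    size_t used = (size_t)n;
    for (int i = 0; i < count; i++) {
        n = snprintf(buf + used, cap - used, "#EXTINF:%.3f,\n%s\n", durations[i], names[i]);
        if (n < 0 || (size_t)n >= cap - used) return -1;
        used += (size_t)n;
    }
    if (ended) { /* only for finished (VOD) playlists */
        n = snprintf(buf + used, cap - used, "#EXT-X-ENDLIST\n");
        if (n < 0 || (size_t)n >= cap - used) return -1;
        used += (size_t)n;
    }
    return (int)used;
}
```

For a live playlist, pass the current sliding window with `ended = false`; pass `true` once the broadcast is over.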

Results

Start HLSLiveEncoder in the Nginx working directory and connect to the stream with the VLC player.

Playback on iPhone:

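For live video services, Apple offers HLS (HTTP Live Streaming) as its solution. It is mainly intended for:

- Live streaming on iPhone, iPod touch, iPad, and Apple TV (it works on the Mac too).

- Live streaming without special server software.

- Video-on-demand services that require encryption and authentication.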

First, an overview of the concepts behind HLS.

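The goal of HLS is to let users play streaming media on Apple devices (including Mac OS X) from ordinary web servers. HLS supports both live broadcasts and on-demand content. It also supports multiple alternate streams at different bit rates, so that video quality can adapt to the current network speed: the client switches streams intelligently as available bandwidth changes. For security, HLS provides media encryption and user authentication over HTTPS, allowing publishers to protect their work.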

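HLS is part of Apple's QuickTime X and iPhone software. It works by splitting the whole stream into a series of small HTTP-based files, which are downloaded a few at a time. While the stream plays, the client can choose among several alternate sources that offer the same content at different data rates, so the session adapts to varying bandwidth.

At the start of a streaming session, the client downloads an extended M3U (m3u8) playlist containing metadata, which it uses to find the available media streams.

HLS uses only standard HTTP requests. Unlike the Real-time Transport Protocol (RTP), HLS can pass through any firewall or proxy server that lets HTTP traffic through. It is also easy to deliver through content delivery networks.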

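Apple also enforces HLS firmly. Suppose your app streams video over a cellular network for more than ten minutes, or transfers more than 5 MB in any five-minute period. In that case it must use the HLS architecture, or it will not be approved for the App Store.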

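Supported Server Software (key components)

- Adobe Flash Media Server: supports HLS and Protected HLS (PHLS) from version 4.5; renamed Adobe Media Server in version 5.0.

- Flussonic Media Server: version 3.0, released January 21, 2009, added support for VOD, HLS, time-shifting, and more.

- RealNetworks Helix Universal Server: version 15.0 (April 2010) added HTTP live and on-demand streaming of H.264/AAC content to iPhone, iPad, and iPod; last updated November 2012.

- Microsoft IIS Media Services: supports HLS from version 4.0.

- Nginx RTMP Module: supports HLS in live mode.

- Nimble Streamer

- Unified Streaming Platform

- VLC Media Player: supports live and on-demand HLS from version 2.0.

- Wowza Media Server: version 2.0, released December 9, 2009, added full HLS support.

- VODOBOX Live Server: supports HLS.

- Gstreamill: a GStreamer-based real-time encoder with HLS output.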

Supported Clients

- iOS: a standard feature since iOS 3.0.

- Adobe Flash Player: supports HLS from version 11.0.

- Google Android: supports HLS from Honeycomb (3.0).

- VODOBOX HLS Player (Android, iOS, Adobe Flash Player)

- JW Player (Adobe Flash Player)

- Microsoft Edge on Windows 10 supports HLS.

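The input can be live or from a prerecorded source.

It is then encoded as MPEG-4 (H.264 video and AAC audio) and packaged into an MPEG-2 transport stream by off-the-shelf hardware.

The MPEG-2 transport stream is then split into segments and saved as a series of one or more .ts media files.

This is usually done with a software tool such as the Apple stream segmenter.

Audio-only streams are encoded as a series of small audio segments, typically AAC with ADTS headers, MP3, or AC-3.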

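ADTS (Audio Data Transport Stream) is a very common transport format for AAC.

AAC decoders generally need the AAC elementary stream (ES) wrapped in ADTS format, which usually means prepending a 7-byte ADTS header to each AAC ES frame.

ES (Elementary Stream): the data stream produced by encoding and compressing video, audio, or other signals. An elementary stream is made up of access units; for a video elementary stream, an access unit is the coded data of one picture.

The segmenter mentioned above also creates an index file. It usually contains a list of these media files and may also contain metadata, and it is an .M3U8 playlist. Each entry is associated with a URL, and clients request those URLs in order.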

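Server Side

The server side can use either hardware or software encoding. Either way, its job is to segment media according to the rules above and manage the segments with an index file. Software segmentation usually relies on tools provided by Apple or on third-party integrated tools.

Media Encoding

The media encoder takes real-time signals from audio and video devices and encodes and compresses them for transport. The encoding must be set to a format the client supports, such as H.264 video and HE-AAC audio. Currently, the supported delivery formats are MPEG-2 transport streams for audio-video and MPEG elementary streams for audio only. The encoder sends the MPEG-2 transport stream over the local network to the stream segmenter.

Do not confuse MPEG-2 transport streams with MPEG-2 video compression: the transport stream is a packaging format that can carry many different compression formats. The Audio Technologies and Video Technologies tables list the supported compression formats.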

- Audio Technologies

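- Video Technologies

[Important] Do not change video encoder settings, such as video dimensions or codec type, in the middle of encoding a stream. If a change is unavoidable, it must happen at a segment boundary, and the following segment must be marked with the EXT-X-DISCONTINUITY tag.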

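Stream Segmenter

The stream segmenter, usually a piece of software, reads data from the media encoder over the local network and divides it into a series of small media files of equal duration. Although each segment is a separate file, they all come from one continuous stream and can be reassembled seamlessly.

While segmenting, the segmenter also creates an index file containing references to the segment files, and updates it each time a segment file is completed. The index is used to track the availability and location of the segment files. The segmenter can also encrypt your media segments and create a key file as part of the process.

File Segmenter (as opposed to the stream segmenter above)

If you already have encoded files (rather than an encoded stream), you can use a file segmenter. It encapsulates the encoded media in an MPEG-2 transport stream and splits it into segments of equal length. This lets you serve an existing library of audio and video files over HLS. The file segmenter does the same job as the stream segmenter, but takes files as input instead of streams.

Media Segment Files

Media segments are produced by the segmenter from the encoded source. They consist of a series of .ts files carrying H.264 video and AAC/MP3/AC-3 audio in an MPEG-2 transport stream. For audio-only broadcasts, the segmenter can produce MPEG elementary audio streams containing AAC with ADTS headers, MP3, or AC-3.

Index Files (Playlists)

Index files are usually generated by the segmenter and saved as .M3U8 files, an extension of the .m3u format used for MP3 playlists.

Note: Because the index format extends .m3u, and the system also supports .mp3 files, the client software may also be compatible with typical MP3 playlists used for streaming Internet radio.

Here is a sample .M3U8 playlist containing three unencrypted media files of about ten seconds each: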

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:1

# Old-style integer duration; avoid for newer clients.
#EXTINF:10,
http://media.example.com/segment0.ts

# New-style floating-point duration; use for modern clients.
#EXTINF:10.0,
http://media.example.com/segment1.ts
#EXTINF:9.5,
http://media.example.com/segment2.ts
#EXT-X-ENDLIST
```

For greater precision, with protocol version 3 or later you can give segment durations as floating-point numbers. In that case you must state the protocol version explicitly; if the version is omitted, the playlist must conform to version 1. You can use Apple's segmenter to generate all kinds of playlists; see Media File Segmenter.

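Distribution

The distribution system is a web server or web caching system that delivers media files and index files to clients over HTTP. No custom modules are needed to deliver the content, and usually very little web server configuration is required. That configuration is typically limited to specifying the MIME types for .M3U8 and .ts files. See Deploying HTTP Live Streaming.

Client

The client starts by fetching the index file (.m3u8/.m3u), identified by a URL. The index file specifies the locations of the available media files, the decryption keys, and any alternate streams. For the selected stream, the client downloads each available file in order, and each file contains a consecutive piece of the stream. Once enough data has been downloaded, the client begins presenting the reassembled media to the user.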
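On the client side, reading a media playlist can be sketched like this: every #EXTINF line carries a duration, and the next non-comment line is the URI of that segment. The parser below is a simplified sketch. It uses a hypothetical `Segment` type with fixed limits of 64 segments and 255-character URIs, and it ignores every other tag, including keys and discontinuities. A real client would also resolve each URI against the playlist URL before downloading.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MAX_SEGMENTS 64
#define MAX_URI 256

typedef struct {
    double duration;   /* seconds, from #EXTINF */
    char uri[MAX_URI]; /* segment URI exactly as written in the playlist */
} Segment;

/* Collects (duration, URI) pairs from a media playlist. Lines may end in \n
   or \r\n. Returns the number of segments stored (at most max). */
static int parse_media_playlist(const char *text, Segment *segs, int max) {
    assert(text != NULL && segs != NULL);
    int count = 0;
    double pending = -1.0; /* duration from the last #EXTINF, waiting for its URI */
    const char *line = text;
    while (*line != '\0' && count < max) {
        size_t len = strcspn(line, "\r\n");
        if (strncmp(line, "#EXTINF:", 8) == 0) {
            pending = strtod(line + 8, NULL); /* stops at the ',' before the title */
        } else if (len > 0 && line[0] != '#' && pending >= 0.0) {
            size_t n = len < (size_t)(MAX_URI - 1) ? len : (size_t)(MAX_URI - 1);
            segs[count].duration = pending;
            memcpy(segs[count].uri, line, n);
            segs[count].uri[n] = '\0';
            count++;
            pending = -1.0;
        }
        line += len;
        while (*line == '\r' || *line == '\n') line++;
    }
    return count;
}
```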

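The client is responsible for fetching any decryption keys, for authenticating or presenting an authentication interface, and then for decrypting the files it needs.

This process continues until the client encounters the end tag #EXT-X-ENDLIST. If the end tag is absent, the index belongs to an ongoing broadcast, and the client periodically loads a new version of the index file. In each updated index it looks for new media files and encryption keys, and adds their URLs to its request queue.

Using HLS

Using HLS involves several tools, most of them server-side, so a brief look is enough here: a media stream segmenter, a media file segmenter, a stream validator, an id3 tag generator, and a variant playlist generator. The English names are given to make them easier to find on the Apple Developer site.

Session Types

There are usually two: Live and VOD (video on demand).

With VOD, the client gets a static index file containing the complete set of media file addresses, which lets it access the entire program. VOD can also use advanced features such as encryption and authentication, and dynamic switching between streams of different bitrates (typically used to switch between videos of different resolutions).

A Live session presents a real-time event as it is recorded. Its index file changes continuously: you keep updating the playlist, removing older media URLs as new ones are added. A live session can easily be converted to VOD by appending media URLs to the index file. When converting, do not remove the older entries; instead, end the event by adding an #EXT-X-ENDLIST tag. If the index file contains an EXT-X-PLAYLIST-TYPE tag, change its value from EVENT to VOD.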
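What the client actually relies on is the presence of #EXT-X-ENDLIST. Without it, the playlist is still growing and must be reloaded; with it, the list is complete and can be treated like VOD. A minimal C sketch of that check follows. `has_tag_line` and `playlist_is_live` are hypothetical helpers that only compare line prefixes and do not validate the rest of the playlist.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Returns true if some line of the playlist text starts with the given tag. */
static bool has_tag_line(const char *text, const char *tag) {
    size_t len = strlen(tag);
    for (const char *line = text; line != NULL && *line != '\0'; ) {
        if (strncmp(line, tag, len) == 0) return true;
        line = strchr(line, '\n');
        if (line != NULL) line++; /* move to the start of the next line */
    }
    return false;
}

/* A playlist without #EXT-X-ENDLIST is still being extended (a live or EVENT
   session), so the client must keep reloading it. */
static bool playlist_is_live(const char *text) {
    assert(text != NULL);
    return !has_tag_line(text, "#EXT-X-ENDLIST");
}
```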

P.S.: I captured a live stream myself. The first fetch of its index returned a playlist of variants at different bandwidths (fetched from http://dlhls.cdn.zhanqi.tv/zqlive/34338_PVMT5.m3u8):

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-STREAM-INF:PROGRAM-ID=1,PUBLISHEDTIME=1453914627,CURRENTTIME=1454056509,BANDWIDTH=700000,RESOLUTION=1280x720
34338_PVMT5_700/index.m3u8?Dnion_vsnae=34338_PVMT5
#EXT-X-STREAM-INF:PROGRAM-ID=1,PUBLISHEDTIME=1453914627,CURRENTTIME=1454056535,BANDWIDTH=400000
34338_PVMT5_400/index.m3u8?Dnion_vsnae=34338_PVMT5
#EXT-X-STREAM-INF:PROGRAM-ID=1,PUBLISHEDTIME=1453914627,CURRENTTIME=1454056535,BANDWIDTH=1024000
34338_PVMT5_1024/index.m3u8?Dnion_vsnae=34338_PVMT5
```

The links here are not video URLs. They are entries of a master index used for stream switching, which is introduced below. My guess was that they need to be joined with the address of the previous fetch.

The combined result is http://dlhls.cdn.zhanqi.tv/zqlive/34338_PVMT5_1024/index.m3u8?Dnion_vsnae=34338_PVMT5 (an elementary-school puzzle, really). Fetching that address gives:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-MEDIA-SEQUENCE:134611
#EXT-X-TARGETDURATION:10
#EXTINF:9.960,
35/1454056634183_128883.ts?Dnion_vsnae=34338_PVMT5
#EXTINF:9.960,
35/1454056644149_128892.ts?Dnion_vsnae=34338_PVMT5
#EXTINF:9.960,
35/1454056654075_128901.ts?Dnion_vsnae=34338_PVMT5
```

Continuing the same way (concatenated address: http://dlhls.cdn.zhanqi.tv/zqlive/34338_PVMT5_1024/index.m3u8?Dnion_vsnae=34338_PVMT5/35/1454056634183_128883.ts?Dnion_vsnae=34338_PVMT5/36/1454059958599_131904.ts?Dnion_vsnae=34338_PVMT5)

Result:

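```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-MEDIA-SEQUENCE:134984
#EXT-X-TARGETDURATION:10
#EXTINF:9.280,
36/1454059988579_131931.ts?Dnion_vsnae=34338_PVMT5
#EXTINF:9.960,
36/1454059998012_131940.ts?Dnion_vsnae=34338_PVMT5
#EXTINF:9.960,
36/1454060007871_131949.ts?Dnion_vsnae=34338_PVMT5
```

Compared with the second fetch, this one lists a newer set of segments. From the second fetch onward, every entry contains .ts, which means these are the actual video segment URLs. The exact rules for building the full URL are in the IETF HLS specification mentioned earlier, and following it should give complete resource paths for downloading.

In any case, playing the stream directly with Apple's built-in MPMoviePlayerController works fine, and it is indeed a live stream. Like the QuickTime player mentioned earlier, MPMoviePlayerController follows the HLS protocol for streaming playback. Fetching the next index on each round matches the protocol's description of a continually refreshed index.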
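Rather than guessing how to splice addresses together, note what the HLS specification (RFC 8216) says: relative URIs in a playlist are resolved against the URI of the playlist that contains them, using ordinary URL resolution (RFC 3986). For the simple relative paths in this capture, that means dropping the playlist URL's query string, keeping everything up to its last '/', and appending the entry. The sketch below handles only that case plus absolute URLs. `resolve_uri` is a hypothetical name, and it deliberately does not handle root-relative paths ("/x.ts") or "../" segments.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Resolves a playlist entry against the URL of the playlist that contained it.
   Simplified: handles absolute URLs and plain relative paths only.
   Returns false if out is too small. */
static bool resolve_uri(const char *base, const char *ref, char *out, size_t cap) {
    assert(base != NULL && ref != NULL && out != NULL);
    /* Absolute if "://" appears before the first '/', '?' or '#'. */
    const char *scheme_end = strstr(ref, "://");
    if (scheme_end != NULL && (size_t)(scheme_end - ref) < strcspn(ref, "/?#")) {
        int n = snprintf(out, cap, "%s", ref);
        return n >= 0 && (size_t)n < cap;
    }
    /* Ignore the base's query/fragment, then keep everything up to the last '/'. */
    size_t path_end = strcspn(base, "?#");
    size_t dir_len = 0;
    for (size_t i = 0; i < path_end; i++)
        if (base[i] == '/') dir_len = i + 1;
    int n = snprintf(out, cap, "%.*s%s", (int)dir_len, base, ref);
    return n >= 0 && (size_t)n < cap;
}
```

Applied to the master playlist above, this reproduces the variant address the author arrived at. Applied to the variant playlist, it yields segment URLs such as `.../zqlive/34338_PVMT5_1024/35/1454056634183_128883.ts?Dnion_vsnae=34338_PVMT5`.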

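Content Encryption

If the content is encrypted, the key information is found in the index file. When the index file references a key file, the media files that follow must be decrypted with that key before they can be played. HLS currently supports AES-128 encryption with 16-octet keys. The key format is an array of 16 octets packed in binary format.

Encryption is usually configured in one of three modes:

- Mode 1: You specify the path of a key file on disk. The segmenter inserts the URL of that existing key file into the index file, and all media files are encrypted with that key.

- Mode 2: The segmenter generates a random key file, saves it at a specified path, and references it in the index file. All media files are encrypted with this random key.

- Mode 3: A new random key file is generated every n segments, saved to the specified location, and referenced in the index. The keys rotate, so each group of n segment files is encrypted with a different key.

In theory, a new key could be used for every segment. However, each key adds a file request and transfer, so rotating keys periodically affects system performance less than changing keys for every segment.

Keys can be served over HTTP or HTTPS, and you can also use your own session-based authentication scheme to protect key delivery. For details, see Serving Key Files Securely Over HTTPS.

Decrypting the media files also requires an initialization vector (IV), which can be changed periodically along with the key.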
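When an EXT-X-KEY tag carries no IV attribute, the HLS specification (RFC 8216) says the segment's media sequence number is used as the IV. Concretely, the IV is the number's big-endian binary form, left-padded with zeros to 16 bytes. Below is a small C sketch of that rule; `default_iv` is a hypothetical name. The AES-128-CBC decryption itself would come from a crypto library and is not shown.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Builds the 16-byte IV used when EXT-X-KEY has no IV attribute: the segment's
   media sequence number, big-endian, left-padded with zeros. */
static void default_iv(uint64_t media_sequence, uint8_t iv[16]) {
    assert(iv != NULL);
    memset(iv, 0, 16);
    for (int i = 15; i >= 8; i--) { /* low-order byte goes last */
        iv[i] = (uint8_t)(media_sequence & 0xFF);
        media_sequence >>= 8;
    }
}
```

For example, a segment with sequence number 134611 (0x020DD3), like the first one in the capture above, would get an IV of thirteen zero bytes followed by 02 0D D3.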

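Caching and Delivery Protocols

HTTPS is commonly used to deliver keys, and it can also be used for media segments and index files. That is not recommended when scalability matters. HTTPS requests usually bypass web caches, so every content request is forwarded to your server, which defeats the purpose of an edge distribution network.

For this reason, make sure any content delivery network you use understands one thing about live broadcasts: the index file changes constantly, so it must not be cached as long as the media segment files (no longer than one segment duration).

Stream Switching

Stream switching gives users a much better experience. Viewers on different bandwidths and network speeds get video of different quality, and this intelligent switching keeps playback smooth. The device can also be a selection criterion; for example, a Retina display can play a higher-quality stream when the network is good.

This is achieved through a special index file structure.

Unlike an ordinary index, an index that supports switching between streams consists of a master index plus sub-indexes for resources at different bitrates. Each sub-index in turn points to its .ts video segments. The master index is downloaded only once, while the current sub-index is reloaded periodically. The client usually starts with the first sub-index listed in the master index, then switches dynamically to a suitable stream according to network conditions. It can switch at any time, for example when joining or leaving a Wi-Fi hotspot. All the alternates use the same audio (switching audio matters little compared with video), so switching between streams is smooth.

These sets of streams at different bitrates are generated with tools: use the variantplaylistcreator tool and specify the -generate-variant-playlist option for mediafilesegmenter or mediastreamsegmenter. See Download the Tools for details.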
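Here is a simplified sketch of the selection step. It reads BANDWIDTH from each #EXT-X-STREAM-INF line of the master playlist, then picks the highest variant that fits the measured throughput, falling back to the lowest one. Real players use smoothed throughput estimates and buffer levels, so treat this as an illustration only; `stream_inf_bandwidth` and `choose_variant` are hypothetical names. With the zhanqi.tv master playlist captured earlier (700000, 400000, and 1024000 bps), a measured 800 kbps would select the 700000 variant.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Extracts BANDWIDTH=<n> from an #EXT-X-STREAM-INF line; returns -1 if absent.
   Matches that are part of AVERAGE-BANDWIDTH= are skipped. */
static long stream_inf_bandwidth(const char *line) {
    assert(line != NULL);
    const char *p = strstr(line, "BANDWIDTH=");
    while (p != NULL && p != line && p[-1] != ',' && p[-1] != ':')
        p = strstr(p + 1, "BANDWIDTH=");
    return p ? strtol(p + strlen("BANDWIDTH="), NULL, 10) : -1;
}

/* Picks the highest-bandwidth variant not exceeding available_bps, or the
   lowest-bandwidth variant if none fits. Returns an index into bandwidths[]. */
static int choose_variant(const long bandwidths[], int count, long available_bps) {
    assert(bandwidths != NULL && count > 0);
    int best = -1, lowest = 0;
    for (int i = 0; i < count; i++) {
        if (bandwidths[i] < bandwidths[lowest]) lowest = i;
        if (bandwidths[i] <= available_bps &&
            (best < 0 || bandwidths[i] > bandwidths[best]))
            best = i;
    }
    return best >= 0 ? best : lowest;
}
```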

That's enough about concepts for now; the material above should give a preliminary picture of the overall structure of HLS.

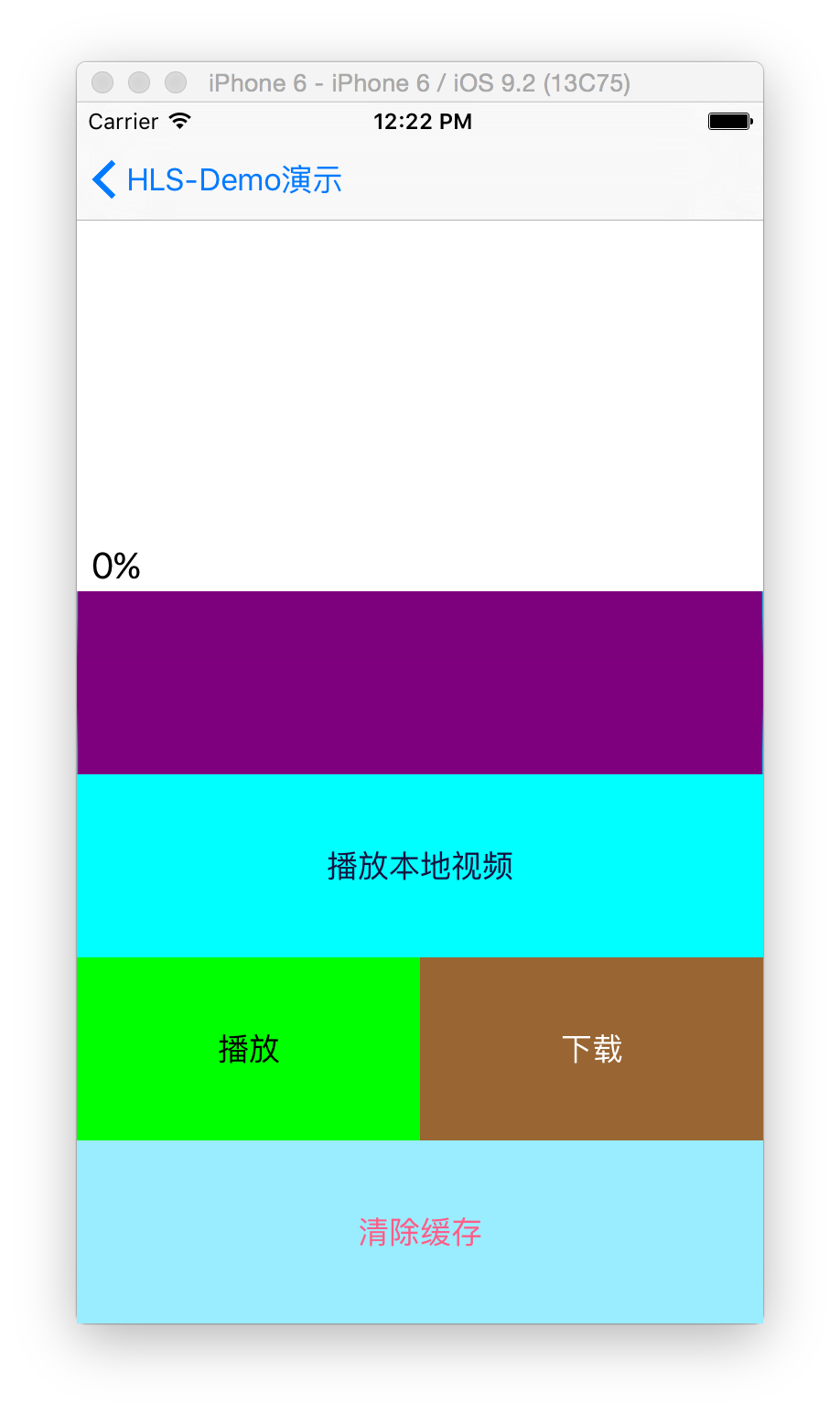

How the demo is set up:

1. Import the third-party libraries ASIHttpRequest, CocoaHTTPServer, and m3u8. ASI handles network requests, CocoaHTTPServer runs a web server on the iOS device, and m3u8 parses the returned index files.

2. Link the system libraries: libsqlite3.dylib, libz.dylib, libxml2.dylib, CoreTelephony.framework, SystemConfiguration.framework, MobileCoreServices.framework, Security.framework, CFNetwork.framework, MediaPlayer.framework

3. Add the header file: YCHLS-Demo.h

4. Demo features

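- Play: plays the online live URL directly with the system MPMoviePlayer, which can parse HLS live streams by itself.

- Download: following the HLS protocol, downloads the video segments listed in the index file and saves them on the phone.

- Play local video: plays the downloaded video segments continuously.

- Clear cache: deletes the downloaded video segments.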

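How it works:

- Request the URL with ASI, and parse the returned m3u8 index file with the m3u8 library.

- Download the parsed video URLs with ASI, storing them in the same directory layout an HLS server uses.

- Start a local server on the iOS device with CocoaHTTPServer on port 12345 (any high port will do), with its document root set to the storage path from step two.

- Point the player at the local server's URL and play. Because the player follows the HLS protocol, it can play from the local server we built according to HLS.

- Tap online playback to check that it behaves the same as local playback.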

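The figure above shows how an HLS server stores video segments and index files.

The whole workflow:

- First tap Download, which parses the resources with the third-party m3u8 library. (That library can only parse one particular m3u8 format; this is noted in the code.)

- Tap Play Local Video to play the downloaded resources.

- Tap Play to preview the live stream; this is unrelated to the rest of the workflow.

- The progress bar shows the download progress.

Summary:

The point of the demo is not just to build an HLS server or an HLS-capable player. It is to understand how the HLS protocol is implemented in practice and how the server side is physically organized. Working through the demo shows the HLS live streaming workflow in detail.

Selected source code

Starting the local server: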

```objc
- (void)openHttpServer
{
    self.httpServer = [[HTTPServer alloc] init];
    [self.httpServer setType:@"_http._tcp."];  // set the service type
    [self.httpServer setPort:12345];           // set the server port
    // Path of the downloads directory under Library/Caches
    NSString *webPath = [kLibraryCache stringByAppendingPathComponent:kPathDownload];
    NSLog(@"-------------\nSetting document root: %@\n", webPath);
    // Serve files from that directory
    [self.httpServer setDocumentRoot:webPath];
    NSError *error;
    if (![self.httpServer start:&error]) {
        NSLog(@"-------------\nError starting HTTP Server: %@\n", error);
    }
}
```

Video download:

```objc
- (IBAction)downloadStreamingMedia:(id)sender {
    UIButton *downloadButton = sender;
    // Path of the downloads directory under Library/Caches
    NSString *localDownloadsPath = [kLibraryCache stringByAppendingPathComponent:kPathDownload];
    // Local path of the video's index file
    NSString *filePath = [localDownloadsPath stringByAppendingPathComponent:@"XNjUxMTE4NDAw/movie.m3u8"];
    NSFileManager *fileManager = [NSFileManager defaultManager];
    // If the video is already cached, mark the download as finished
    if ([fileManager fileExistsAtPath:filePath]) {
        [downloadButton setTitle:@"Done" forState:UIControlStateNormal];
        downloadButton.enabled = NO;
    } else {
        M3U8Handler *handler = [[M3U8Handler alloc] init];
        handler.delegate = self;
        // Parse the remote m3u8 playlist
        [handler praseUrl:TEST_HLS_URL];
        // Show the network activity indicator
        [[UIApplication sharedApplication] setNetworkActivityIndicatorVisible:YES];
    }
}
```

Playing the local video:

```objc
- (IBAction)playVideoFromLocal:(id)sender {
    NSString *playurl = @"http://127.0.0.1:12345/XNjUxMTE4NDAw/movie.m3u8";
    NSLog(@"Local video URL-----%@", playurl);
    // Path of the downloads directory under Library/Caches
    NSString *localDownloadsPath = [kLibraryCache stringByAppendingPathComponent:kPathDownload];
    // Local path of the video's index file
    NSString *filePath = [localDownloadsPath stringByAppendingPathComponent:@"XNjUxMTE4NDAw/movie.m3u8"];
    NSFileManager *fileManager = [NSFileManager defaultManager];
    // Play from the local server only if the video has been cached
    if ([fileManager fileExistsAtPath:filePath]) {
        MPMoviePlayerViewController *playerViewController =
            [[MPMoviePlayerViewController alloc] initWithContentURL:[NSURL URLWithString:playurl]];
        [self presentMoviePlayerViewControllerAnimated:playerViewController];
    } else {
        UIAlertView *alertView = [[UIAlertView alloc] initWithTitle:@"Sorry"
                                                            message:@"This video has not been cached"
                                                           delegate:self
                                                  cancelButtonTitle:@"OK"
                                                  otherButtonTitles:nil];
        [alertView show];
    }
}
```

Playing the online stream:

```objc
- (IBAction)playLiveStreaming {
    NSURL *url = [[NSURL alloc] initWithString:TEST_HLS_URL];
    MPMoviePlayerViewController *player = [[MPMoviePlayerViewController alloc] initWithContentURL:url];
    [self presentMoviePlayerViewControllerAnimated:player];
}
```

What is ADTS?

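ADTS (Audio Data Transport Stream) is a very common transport format for AAC.

AAC decoders generally need the AAC elementary stream (ES) wrapped in ADTS format, which usually means prepending a 7-byte ADTS header to each AAC ES frame.

In other words, you can think of the ADTS header as the AAC frame header.

An ADTS AAC stream is laid out like this:

| ADTS_header | AAC ES | ADTS_header | AAC ES | ... | ADTS_header | AAC ES |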

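2. ADTS contents and structure

The most useful information in the ADTS header is the sample rate, the number of channels, and the frame length.

That makes sense: if I were a decoder and you handed me a pile of raw AAC ES data, I couldn't decode it either.

Each AAC frame with an ADTS header tells the decoder exactly the information it needs.

An ADTS header is normally 7 bytes, i.e. 7 × 8 = 56 bits, split into two parts:

Fixed header: adts_fixed_header(), 28 bits

Variable header: adts_variable_header(), 28 bits

Where:

syncword: always 0xFFF, 12 bits, all set to 1; marks the start of an ADTS frame.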

ID:MPEG Version: 0 for MPEG-4, 1 for MPEG-2

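Layer: always '00'

profile: which AAC profile is used; some chips support only AAC LC.

MPEG-2 AAC defines three: 0 = Main profile, 1 = Low Complexity profile (LC), 2 = Scalable Sample Rate profile (SSR); 3 is reserved.

sampling_frequency_index:

The index of the sample rate; the actual value is looked up in the Sampling Frequencies[] array using this index.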

There are 13 supported frequencies:

- 0: 96000 Hz

- 1: 88200 Hz

- 2: 64000 Hz

- 3: 48000 Hz

- 4: 44100 Hz

- 5: 32000 Hz

- 6: 24000 Hz

- 7: 22050 Hz

- 8: 16000 Hz

- 9: 12000 Hz

- 10: 11025 Hz

- 11: 8000 Hz

- 12: 7350 Hz

- 13: Reserved

- 14: Reserved

- 15: frequency is written explicitly

channel_configuration: the number of channels

- 0: Defined in AOT Specific Config

- 1: 1 channel: front-center

- 2: 2 channels: front-left, front-right

- 3: 3 channels: front-center, front-left, front-right

- 4: 4 channels: front-center, front-left, front-right, back-center

- 5: 5 channels: front-center, front-left, front-right, back-left, back-right

- 6: 6 channels: front-center, front-left, front-right, back-left, back-right, LFE-channel

- 7: 8 channels: front-center, front-left, front-right, side-left, side-right, back-left, back-right, LFE-channel

- 8-15: Reserved

Variable header (28 bits):

frame_length: the length of the ADTS frame, including the ADTS header and the raw AAC data.

adts_buffer_fullness: 0x7FF indicates a variable-bitrate stream.
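Below is a minimal C sketch that reads the fields above from a 7-byte ADTS header, using the sampling-frequency table listed earlier. It is the reverse of the ffmpeg writer shown in the next section. `AdtsHeader` and `adts_parse` are hypothetical names, not ffmpeg API. As an example, the bytes FF F1 50 80 2E 7F FC decode as MPEG-4 AAC LC, 44100 Hz, 2 channels, and a 371-byte frame with no CRC.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    int mpeg_version;  /* ID bit: 0 = MPEG-4, 1 = MPEG-2 */
    int profile;       /* 2 bits: audio object type minus 1 (1 = AAC LC) */
    int sample_rate;   /* Hz from sampling_frequency_index; 0 if reserved */
    int channels;      /* channel_configuration */
    int frame_length;  /* whole ADTS frame (header + AAC payload) in bytes */
    bool has_crc;      /* protection_absent == 0: a 2-byte CRC follows */
} AdtsHeader;

static const int kSampleRates[13] = {96000, 88200, 64000, 48000, 44100, 32000,
                                     24000, 22050, 16000, 12000, 11025, 8000, 7350};

/* Parses the ADTS header at the start of buf; false if the syncword is missing. */
static bool adts_parse(const uint8_t *buf, size_t len, AdtsHeader *h) {
    assert(buf != NULL && h != NULL);
    if (len < 7 || buf[0] != 0xFF || (buf[1] & 0xF0) != 0xF0)
        return false;
    int sfi = (buf[2] >> 2) & 0x0F;
    h->mpeg_version = (buf[1] >> 3) & 0x01;
    h->has_crc = (buf[1] & 0x01) == 0;
    h->profile = (buf[2] >> 6) & 0x03;
    h->sample_rate = sfi < 13 ? kSampleRates[sfi] : 0;
    h->channels = ((buf[2] & 0x01) << 2) | (buf[3] >> 6);   /* 1 bit + 2 bits */
    h->frame_length = ((buf[3] & 0x03) << 11) | (buf[4] << 3) | (buf[5] >> 5);
    return true;
}
```

A demuxer can use `frame_length` to step from one ADTS frame to the next in a buffer.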

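3. Packaging AAC as ADTS

If you build a product around an embedded HD decoding chip, the decoding is usually done in hardware. Most of the work is therefore packaging the raw AAC stream as ADTS and handing it to the hardware.

Once you understand the ADTS format, packaging AAC as ADTS is easy. You only need the audio sample rate, channel count, payload length, and AAC profile from the container format, and then you put an ADTS header in front of each raw AAC frame.

Here is the code from ffmpeg that writes the ADTS header; it shows the header structure clearly: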

```c
int ff_adts_write_frame_header(ADTSContext *ctx,
                               uint8_t *buf, int size, int pce_size)
{
    PutBitContext pb;

    init_put_bits(&pb, buf, ADTS_HEADER_SIZE);

    /* adts_fixed_header */
    put_bits(&pb, 12, 0xfff);                /* syncword, always 0xFFF */
    put_bits(&pb, 1, 0);                     /* ID */
    put_bits(&pb, 2, 0);                     /* layer */
    put_bits(&pb, 1, 1);                     /* protection_absent */
    put_bits(&pb, 2, ctx->objecttype);       /* profile_objecttype */
    put_bits(&pb, 4, ctx->sample_rate_index); /* sampling_frequency_index */
    put_bits(&pb, 1, 0);                     /* private_bit */
    put_bits(&pb, 3, ctx->channel_conf);     /* channel_configuration */
    put_bits(&pb, 1, 0);                     /* original_copy */
    put_bits(&pb, 1, 0);                     /* home */

    /* adts_variable_header */
    put_bits(&pb, 1, 0);                     /* copyright_identification_bit */
    put_bits(&pb, 1, 0);                     /* copyright_identification_start */
    put_bits(&pb, 13, ADTS_HEADER_SIZE + size + pce_size); /* aac_frame_length */
    put_bits(&pb, 11, 0x7ff);                /* adts_buffer_fullness */
    put_bits(&pb, 2, 0);                     /* number_of_raw_data_blocks_in_frame */

    flush_put_bits(&pb);

    return 0;
}
```

Introduction

If you are interested in any of the following:

- Streaming audio or video to iPhone, iPod touch, iPad, or Apple TV

- Streaming live events without special server software

- Sending video on demand with encryption and authentication

you should learn about HTTP Live Streaming.

HTTP Live Streaming lets you send audio and video over HTTP from an ordinary web server for playback on iOS-based devices—including iPhone, iPad, iPod touch, and Apple TV—and on desktop computers (Mac OS X).

HTTP Live Streaming supports both live broadcasts and prerecorded content (video on demand). HTTP Live Streaming supports multiple alternate streams at different bit rates, and the client software can switch streams intelligently as network bandwidth changes. HTTP Live Streaming also provides for media encryption and user authentication over HTTPS, allowing publishers to protect their work.

All devices running iOS 3.0 and later include built-in client software for HTTP Live Streaming. The Safari browser can play HTTP streams within a webpage on iPad and desktop computers, and Safari launches a full-screen media player for HTTP streams on iOS devices with small screens, such as iPhone and iPod touch. Apple TV 2 and later includes an HTTP Live Streaming client.

Important: iPhone and iPad apps that send large amounts of audio or video data over cellular networks are required to use HTTP Live Streaming. See Requirements for Apps.

Safari plays HTTP Live streams natively as the source for the `<video>` tag. Mac OS X developers can use the QTKit and AVFoundation frameworks to create desktop applications that play HTTP Live Streams, and iOS developers can use the MediaPlayer and AVFoundation frameworks to create iOS apps.

Important: Where possible, use the `<video>` tag to embed HTTP Live Streaming, and use the `<object>` or `<embed>` tags only to specify fallback content.

Because it uses HTTP, this kind of streaming is automatically supported by nearly all edge servers, media distributors, caching systems, routers, and firewalls.

Note: Many existing streaming services require specialized servers to distribute content to end users. These servers require specialized skills to set up and maintain, and in a large-scale deployment this can be costly. HTTP Live Streaming avoids this by using standard HTTP to deliver the media. Additionally, HTTP Live Streaming is designed to work seamlessly in conjunction with media distribution networks for large scale operations.

The HTTP Live Streaming specification is an IETF Internet-Draft. For a link to the specification, see the See Also section below.

At a Glance

HTTP Live Streaming is a way to send audio and video over HTTP from a web server to client software on the desktop or to iOS-based devices.

You Can Send Audio and Video Without Special Server Software

You can serve HTTP Live Streaming audio and video from an ordinary web server. The client software can be the Safari browser or an app that you’ve written for iOS or Mac OS X.

HTTP Live Streaming sends audio and video as a series of small files, typically of about 10 seconds duration, called media segment files. An index file, or playlist, gives the clients the URLs of the media segment files. The playlist can be periodically refreshed to accommodate live broadcasts, where media segment files are constantly being produced. You can embed a link to the playlist in a webpage or send it to an app that you’ve written.

Relevant Chapter: HTTP Streaming Architecture

You Can Send Live Streams or Video on Demand, with Optional Encryption

For video on demand from prerecorded media, Apple provides a free tool to make media segment files and playlists from MPEG-4 video or QuickTime movies with H.264 video compression, or audio files with AAC or MP3 compression. The playlists and media segment files can be used for video on demand or streaming radio, for example.

For live streams, Apple provides a free tool to make media segment files and playlists from live MPEG-2 transport streams carrying H.264 video, AAC audio, or MP3 audio. There are a number of hardware and software encoders that can create MPEG-2 transport streams carrying MPEG-4 video and AAC audio in real time.

These tools can be instructed to encrypt your media and generate decryption keys. You can use a single key for all your streams, a different key for each stream, or a series of randomly generated keys that change at intervals during a stream. Keys are further protected by the requirement for an initialization vector, which can also be set to change periodically.

Relevant Chapter: Using HTTP Live Streaming

Prerequisites

You should have a general understanding of common audio and video file formats and be familiar with how web servers and browsers work.

See Also

iOS Human Interface Guidelines—how to design web content for iOS-based devices.

HTTP Live Streaming protocol—the IETF Internet-Draft of the HTTP Live Streaming specification.

HTTP Live Streaming Resources—a collection of information and tools to help you get started.

MPEG-2 Stream Encryption Format for HTTP Live Streaming—a detailed description of an encryption format.

HTTP Streaming Architecture

HTTP Live Streaming allows you to send live or prerecorded audio and video, with support for encryption and authentication, from an ordinary web server to any device running iOS 3.0 or later (including iPad and Apple TV), or any computer with Safari 4.0 or later installed.

Overview

Conceptually, HTTP Live Streaming consists of three parts: the server component, the distribution component, and the client software.

The server component is responsible for taking input streams of media and encoding them digitally, encapsulating them in a format suitable for delivery, and preparing the encapsulated media for distribution.

The distribution component consists of standard web servers. They are responsible for accepting client requests and delivering prepared media and associated resources to the client. For large-scale distribution, edge networks or other content delivery networks can also be used.

The client software is responsible for determining the appropriate media to request, downloading those resources, and then reassembling them so that the media can be presented to the user in a continuous stream. Client software is included on iOS 3.0 and later and computers with Safari 4.0 or later installed.

In a typical configuration, a hardware encoder takes audio-video input, encodes it as H.264 video and AAC audio, and outputs it in an MPEG-2 Transport Stream, which is then broken into a series of short media files by a software stream segmenter. These files are placed on a web server. The segmenter also creates and maintains an index file containing a list of the media files. The URL of the index file is published on the web server. Client software reads the index, then requests the listed media files in order and displays them without any pauses or gaps between segments.

An example of a simple HTTP streaming configuration is shown in Figure 1-1.

Figure 1-1 A basic configuration

Input can be live or from a prerecorded source. It is typically encoded as MPEG-4 (H.264 video and AAC audio) and packaged in an MPEG-2 Transport Stream by off-the-shelf hardware. The MPEG-2 transport stream is then broken into segments and saved as a series of one or more .ts media files. This is typically accomplished using a software tool such as the Apple stream segmenter.

Audio-only streams can be a series of MPEG elementary audio files formatted as AAC with ADTS headers, as MP3, or as AC-3.

The segmenter also creates an index file. The index file contains a list of media files. The index file also contains metadata. The index file is an .M3U8 playlist. The URL of the index file is accessed by clients, which then request the indexed files in sequence.

Server Components

The server requires a media encoder, which can be off-the-shelf hardware, and a way to break the encoded media into segments and save them as files, which can either be software such as the media stream segmenter provided by Apple or part of an integrated third-party solution.

Media Encoder

The media encoder takes a real-time signal from an audio-video device, encodes the media, and encapsulates it for transport. Encoding should be set to a format supported by the client device, such as H.264 video and HE-AAC audio. Currently, the supported delivery format is MPEG-2 Transport Streams for audio-video, or MPEG elementary streams for audio-only.

The encoder delivers the encoded media in an MPEG-2 Transport Stream over the local network to the stream segmenter. MPEG-2 transport streams should not be confused with MPEG-2 video compression. The transport stream is a packaging format that can be used with a number of different compression formats. The Audio Technologies and Video Technologies list supported compression formats.

Important: The video encoder should not change stream settings—such as video dimensions or codec type—in the midst of encoding a stream. If a stream settings change is unavoidable, the settings must change at a segment boundary, and the EXT-X-DISCONTINUITY tag must be set on the following segment.

Stream Segmenter

The stream segmenter is a process—typically software—that reads the Transport Stream from the local network and divides it into a series of small media files of equal duration. Even though each segment is in a separate file, video files are made from a continuous stream which can be reconstructed seamlessly.

The segmenter also creates an index file containing references to the individual media files. Each time the segmenter completes a new media file, the index file is updated. The index is used to track the availability and location of the media files. The segmenter may also encrypt each media segment and create a key file as part of the process.

Media segments are saved as .ts files (MPEG-2 transport stream files). Index files are saved as .M3U8 playlists.

File Segmenter

If you already have a media file encoded using supported codecs, you can use a file segmenter to encapsulate it in an MPEG-2 transport stream and break it into segments of equal length. The file segmenter allows you to use a library of existing audio and video files for sending video on demand via HTTP Live Streaming. The file segmenter performs the same tasks as the stream segmenter, but it takes files as input instead of streams.

Media Segment Files

The media segment files are normally produced by the stream segmenter, based on input from the encoder, and consist of a series of .ts files containing segments of an MPEG-2 Transport Stream carrying H.264 video and AAC, MP3, or AC-3 audio. For an audio-only broadcast, the segmenter can produce MPEG elementary audio streams containing either AAC audio with ADTS headers, MP3 audio, or AC-3 audio.

Index Files (Playlists)

Index files are normally produced by the stream segmenter or file segmenter, and saved as .M3U8 playlists, an extension of the .m3u format used for MP3 playlists.

Note: Because the index file format is an extension of the .m3u playlist format, and because the system also supports .mp3 audio media files, the client software may also be compatible with typical MP3 playlists used for streaming Internet radio.

Here is a very simple example of an index file, in the form of an .M3U8 playlist, that a segmenter might produce if the entire stream were contained in three unencrypted 10-second media files:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:1

# Old-style integer duration; avoid for newer clients.
#EXTINF:10,
http://media.example.com/segment0.ts

# New-style floating-point duration; use for modern clients.
#EXTINF:10.0,
http://media.example.com/segment1.ts
#EXTINF:9.5,
http://media.example.com/segment2.ts
#EXT-X-ENDLIST
```

For maximum accuracy, you should specify all durations as floating-point values when sending playlists to clients that support version 3 of the protocol or later. (Older clients support only integer values.) You must specify a protocol version when using floating-point lengths; if the version is omitted, the playlist must conform to version 1 of the protocol.

Note: You can use the file segmenter provided by Apple to generate a variety of example playlists, using an MPEG-4 video or AAC or MP3 audio file as a source. For details, see Media File Segmenter.

The index file may also contain URLs for encryption key files and alternate index files for different bandwidths. For details of the index file format, see the IETF Internet-Draft of the HTTP Live Streaming specification.

Index files are normally created by the same segmenter that creates the media segment files. Alternatively, it is possible to create the .M3U8 file and the media segment files independently, provided they conform to the published specification. For audio-only broadcasts, for example, you could create an .M3U8 file using a text editor, listing a series of existing .MP3 files.

Distribution Components

The distribution system is a web server or a web caching system that delivers the media files and index files to the client over HTTP. No custom server modules are required to deliver the content, and typically very little configuration is needed on the web server.

Recommended configuration is typically limited to specifying MIME-type associations for .M3U8 files and .ts files.

For details, see Deploying HTTP Live Streaming.

Client Component

The client software begins by fetching the index file, based on a URL identifying the stream. The index file in turn specifies the location of the available media files, decryption keys, and any alternate streams available. For the selected stream, the client downloads each available media file in sequence. Each file contains a consecutive segment of the stream. Once it has a sufficient amount of data downloaded, the client begins presenting the reassembled stream to the user.

The client is responsible for fetching any decryption keys, authenticating or presenting a user interface to allow authentication, and decrypting media files as needed.

This process continues until the client encounters the #EXT-X-ENDLIST tag in the index file. If no #EXT-X-ENDLIST tag is present, the index file is part of an ongoing broadcast. During ongoing broadcasts, the client loads a new version of the index file periodically. The client looks for new media files and encryption keys in the updated index and adds these URLs to its queue.
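The reload behavior described above can be sketched as follows. This is a simplified model, not Apple's client, and it assumes the playlist text has already been fetched over HTTP. It uses `#EXT-X-MEDIA-SEQUENCE` to decide which segment URIs are new since the previous load. That tag numbers the first segment listed; when it is absent, the first segment counts as 0.

```python
def parse_media_playlist(text):
    """Return (first_sequence, [segment URIs], has_endlist)."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an M3U8 playlist")
    sequence = 0  # default when EXT-X-MEDIA-SEQUENCE is absent
    uris, ended = [], False
    for line in lines[1:]:
        if line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            sequence = int(line.split(":", 1)[1])
        elif line == "#EXT-X-ENDLIST":
            ended = True
        elif not line.startswith("#"):
            uris.append(line)  # lines that are not tags or comments are URIs
    return sequence, uris, ended


def new_segments(text, next_sequence):
    """Segments in a reloaded playlist that the client has not queued yet.

    Returns ([(sequence, uri), ...], updated next_sequence, has_endlist).
    """
    first, uris, ended = parse_media_playlist(text)
    fresh = [(first + i, uri) for i, uri in enumerate(uris)
             if first + i >= next_sequence]
    if fresh:
        next_sequence = fresh[-1][0] + 1
    return fresh, next_sequence, ended
```

A real client would call `new_segments` after each periodic reload, queue the returned URIs, and stop reloading once `has_endlist` is true. The fetching and the waiting between reloads are left out here.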

=============================================================================


Using HTTP Live Streaming

Download the Tools

There are several tools available that can help you set up an HTTP Live Streaming service. The tools include a media stream segmenter, a media file segmenter, a stream validator, an id3 tag generator, and a variant playlist generator.

The tools are frequently updated, so you should download the current version of the HTTP Live Streaming Tools from the Apple Developer website. You can access them if you are a member of the iOS Developer Program. One way to find the tools is to log in to developer.apple.com and use the search feature.

Media Stream Segmenter

The mediastreamsegmenter command-line tool takes an MPEG-2 transport stream as an input and produces a series of equal-length files from it, suitable for use in HTTP Live Streaming. It can also generate index files (also known as playlists), encrypt the media, produce encryption keys, optimize the files by reducing overhead, and create the necessary files for automatically generating multiple stream alternates. For details, verify you have installed the tools, and type man mediastreamsegmenter from the terminal window.

Usage example: mediastreamsegmenter -s 3 -D -f /Library/WebServer/Documents/stream 239.4.1.5:20103

The usage example captures a live stream from the network at address 239.4.1.5:20103 and creates media segment files and index files from it. The index files contain a list of the current three media segment files (-s 3). The media segment files are deleted after use (-D). The index files and media segment files are stored in the directory /Library/WebServer/Documents/stream.

Media File Segmenter

The mediafilesegmenter command-line tool takes an encoded media file as an input, wraps it in an MPEG-2 transport stream, and produces a series of equal-length files from it, suitable for use in HTTP Live Streaming. The media file segmenter can also produce index files (playlists) and decryption keys. The file segmenter behaves very much like the stream segmenter, but it works on existing files instead of streams coming from an encoder. For details, type man mediafilesegmenter from the terminal window.

Media Stream Validator

The mediastreamvalidator command-line tool examines the index files, stream alternates, and media segment files on a server and tests to determine whether they will work with HTTP Live Streaming clients. For details, type man mediastreamvalidator from the terminal window.

Variant Playlist Creator

The variantplaylistcreator command-line tool creates a master index file, or playlist, listing the index files for alternate streams at different bit rates, using the output of the mediafilesegmenter. The mediafilesegmenter must be invoked with the -generate-variant-playlist argument to produce the required output for the variant playlist creator. For details, type man variantplaylistcreator from the terminal window.

Metadata Tag Generator

The id3taggenerator command-line tool generates ID3 metadata tags. These tags can either be written to a file or inserted into outgoing stream segments. For details, see Adding Timed Metadata.

Session Types

The HTTP Live Streaming protocol supports two types of sessions: events (live broadcasts) and video on demand (VOD).

VOD Sessions

For VOD sessions, media files are available representing the entire duration of the presentation. The index file is static and contains a complete list of all files created since the beginning of the presentation. This kind of session allows the client full access to the entire program.

VOD can also be used to deliver “canned” media. HTTP Live Streaming offers advantages over progressive download for VOD, such as support for media encryption and dynamic switching between streams of different data rates in response to changing connection speeds. (QuickTime also supports multiple-data-rate movies using progressive download, but QuickTime movies do not support dynamically switching between data rates in mid-movie.)

Live Sessions

Live sessions (events) can be presented as a complete record of an event, or as a sliding window with a limited time range the user can seek within.

For live sessions, as new media files are created and made available, the index file is updated. The new index file lists the new media files. Older media files can be removed from the index and discarded, presenting a moving window into a continuous stream—this type of session is suitable for continuous broadcasts. Alternatively, the index can simply add new media files to the existing list—this type of session can be easily converted to VOD after the event completes.

It is possible to create a live broadcast of an event that is instantly available for video on demand. To convert a live broadcast to VOD, do not remove the old media files from the server or delete their URLs from the index file; instead, add an #EXT-X-ENDLIST tag to the index when the event ends. This allows clients to join the broadcast late and still see the entire event. It also allows an event to be archived for rebroadcast with no additional time or effort.

If your playlist contains an EXT-X-PLAYLIST-TYPE tag, you should also change the value from EVENT to VOD.
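A sliding-window playlist and an event playlist that later becomes VOD differ only in which segments are listed and which tags are present. The function below is a sketch built on assumptions of our own: a fixed target duration and segments numbered from 0. Passing `window=3` corresponds to the `-s 3` option in the mediastreamsegmenter example earlier.

```python
def render_live_playlist(segments, target_duration, window=None, ended=False):
    """Render a live media playlist from every segment produced so far.

    segments: list of (uri, duration) in order; the first has sequence 0.
    window:   keep only the newest `window` segments (sliding window), or
              None to keep them all (an event that can later become VOD).
    ended:    True once the broadcast is over.
    """
    first = 0 if window is None else max(0, len(segments) - window)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target_duration}",
        # Sequence number of the first listed segment; it grows as old
        # segments slide out, which lets clients tell what is new.
        f"#EXT-X-MEDIA-SEQUENCE:{first}",
    ]
    if window is None:
        # An event playlist only grows; when it ends, relabel it as VOD.
        lines.append("#EXT-X-PLAYLIST-TYPE:" + ("VOD" if ended else "EVENT"))
    for uri, duration in segments[first:]:
        lines += [f"#EXTINF:{duration:.3f},", uri]
    if ended:
        lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"
```

Rendering the same segment list with `window=None, ended=True` after the event ends gives the VOD conversion described above. No old segment is dropped, PLAYLIST-TYPE changes from EVENT to VOD, and the playlist gains `#EXT-X-ENDLIST`.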

Content Protection

Media files containing stream segments may be individually encrypted. When encryption is employed, references to the corresponding key files appear in the index file so that the client can retrieve the keys for decryption.

When a key file is listed in the index file, the key file contains a cipher key that must be used to decrypt subsequent media files listed in the index file. Currently HTTP Live Streaming supports AES-128 encryption using 16-octet keys. The format of the key file is a packed array of these 16 octets in binary format.
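A key file in this format is just 16 random bytes written as-is. The sketch below uses Python's `secrets` module to create one. It also builds the EXT-X-KEY tag that points clients at the key. The key URI is whatever location you choose to serve the file from; the one in the usage note is hypothetical.

```python
import secrets


def make_key_file(path):
    """Write a new AES-128 key file: exactly 16 random octets, raw binary."""
    key = secrets.token_bytes(16)  # cryptographically strong random bytes
    with open(path, "wb") as f:
        f.write(key)
    return key


def key_tag(key_uri):
    """EXT-X-KEY line telling clients where to fetch the key for the
    media files listed after it."""
    return f'#EXT-X-KEY:METHOD=AES-128,URI="{key_uri}"'
```

For example, `key_tag("https://keys.example.com/stream/key0.bin")` produces a line you would place in the index file before the first segment encrypted with that key.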

The media stream segmenter available from Apple provides encryption and supports three modes for configuring encryption.

The first mode allows you to specify a path to an existing key file on disk. In this mode the segmenter inserts the URL of the existing key file in the index file. It encrypts all media files using this key.

The second mode instructs the segmenter to generate a random key file, save it in a specified location, and reference it in the index file. All media files are encrypted using this randomly generated key.

The third mode instructs the segmenter to generate a new random key file every n media segments, save it in a specified location, and reference it in the index file. This mode is referred to as key rotation. Each group of n files is encrypted using a different key.

Note: All media files may be encrypted using the same key, or new keys may be required at intervals. The theoretical limit is one key per media file, but because each media key adds a file request and transfer to the overhead for presenting the subsequent media segments, changing to a new key periodically is less likely to impact system performance than changing keys for each segment.

You can serve key files using either HTTP or HTTPS. You may also choose to protect the delivery of the key files using your own session-based authentication scheme. For details, see Serving Key Files Securely Over HTTPS.

Key files require an initialization vector (IV) to decode encrypted media. The IVs can be changed periodically, just as the keys can.
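Key rotation and IVs can be reasoned about per segment. The sketch assumes a new key every n segments, as in the third segmenter mode above. It also uses hypothetical 10-second segments and hypothetical key file names. One rule comes from the HLS specification: when an EXT-X-KEY tag has no IV attribute, the segment's media sequence number is used as the IV, written as a 16-octet big-endian value. `default_iv` computes that value.

```python
def key_schedule(num_segments, rotate_every, first_sequence=0):
    """Return (sequence_number, key_index) for each segment when a new key
    is generated every `rotate_every` segments (key rotation)."""
    return [(first_sequence + i, i // rotate_every) for i in range(num_segments)]


def default_iv(sequence_number):
    """IV implied when EXT-X-KEY has no IV attribute: the media sequence
    number as a 16-octet big-endian integer, zero-padded on the left."""
    return sequence_number.to_bytes(16, "big")


def encrypted_playlist_body(num_segments, rotate_every, key_uri_template):
    """Playlist lines with an EXT-X-KEY tag each time the key changes.

    key_uri_template is a hypothetical pattern such as "keys/key{}.bin";
    segments are assumed to be 10 seconds long and named segment<N>.ts.
    """
    lines, current_key = [], None
    for seq, key_index in key_schedule(num_segments, rotate_every):
        if key_index != current_key:
            uri = key_uri_template.format(key_index)
            lines.append(f'#EXT-X-KEY:METHOD=AES-128,URI="{uri}"')
            current_key = key_index
        lines += ["#EXTINF:10.0,", f"segment{seq}.ts"]
    return lines
```

Larger values of `rotate_every` mean fewer key downloads. This is the trade-off the note above describes.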

Caching and Delivery Protocols

HTTPS is commonly used to deliver key files. It may also be used to deliver the media segment files and index files, but this is not recommended when scalability is important, since HTTPS requests often bypass web server caches, causing all content requests to be routed through your server and defeating the purpose of edge network distribution systems.

Because plain HTTP responses are cached by the edge network, however, it is important to make sure that any content delivery network you use understands that, for live broadcasts, .M3U8 index files change dynamically and must not be cached for longer than one media segment duration.

Stream Alternates

A master index file may reference alternate streams of content. References can be used to support delivery of multiple streams of the same content with varying quality levels for different bandwidths or devices. HTTP Live Streaming supports switching between streams dynamically if the available bandwidth changes. The client software uses heuristics to determine appropriate times to switch between the alternates. Currently, these heuristics are based on recent trends in measured network throughput.

The master index file points to alternate streams of media by including a specially tagged list of other index files, as illustrated in Figure 2-1.

Figure 2-1 Stream alternates

Both the master index file and the alternate index files are in .M3U8 playlist format. The master index file is downloaded only once, but for live broadcasts the alternate index files are reloaded periodically. The first alternate listed in the master index file is the first stream used—after that, the client chooses among the alternates by available bandwidth.

Note that the client may choose to change to an alternate stream at any time, such as when a mobile device enters or leaves a WiFi hotspot. All alternates should use identical audio to allow smooth transitions among streams.

You can create a set of stream alternates by using the variantplaylistcreator tool and specifying the -generate-variant-playlist option for either the mediafilesegmenter tool or the mediastreamsegmenter tool (see Download the Tools for details).

When using stream alternates, it is important to bear the following considerations in mind:

The first entry in the variant playlist is played when a user joins the stream and is used as part of a test to determine which stream is most appropriate. The order of the other entries is irrelevant.

Where possible, encode enough variants to provide the best quality stream across a wide range of connection speeds. For example, encode variants at 150 kbps, 350 kbps, 550 kbps, 900 kbps, 1500 kbps.

When possible, use relative path names in variant playlists and in the individual .M3U8 playlist files.

Alternate streams must have exactly the same video aspect ratio, but they can have different pixel dimensions. For example, two stream alternates with the same 4:3 aspect ratio could have dimensions of 400 x 300 and 800 x 600.

Include the RESOLUTION attribute in the EXT-X-STREAM-INF tag to help the client choose an appropriate stream.

If you are an iOS app developer, you can query the user’s device to determine whether the initial connection is cellular or WiFi and choose an appropriate master index file.

To ensure the user has a good experience when the stream is first played, regardless of the initial network connection, you should have more than one master index file consisting of the same alternate index files but with a different first stream.

A 150k stream for the cellular variant playlist is recommended.

A 240k or 440k stream for the Wi-Fi variant playlist is recommended.

Note: For details on how to query an iOS-based device for its network connection type, see the following sample code: Reachability.

When you specify the bitrates for stream variants, it is important that the BANDWIDTH attribute closely match the actual bandwidth required by a given stream. If the actual bandwidth requirement is substantially different from the BANDWIDTH attribute, automatic switching of streams may not operate smoothly or even correctly.
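You may want cellular and Wi-Fi users to start on different streams while sharing the same alternates. One way is to generate two master index files that differ only in their first entry. The sketch below does this, assuming bit rates taken from the encoding tables later in this document and hypothetical relative URIs for the alternate index files. BANDWIDTH is written in bits per second.

```python
def master_playlist(variants, first_bandwidth):
    """Build a master (variant) playlist.

    variants: list of dicts with 'bandwidth' (bits/s), 'uri' of the
              alternate index file, and optional 'resolution' ('WxH').
    first_bandwidth: bandwidth of the variant to list first; the client
              starts there, then switches based on measured throughput.
    """
    # sorted() is stable: matching variants move to the front and every
    # other entry keeps its original order.
    ordered = sorted(variants, key=lambda v: v["bandwidth"] != first_bandwidth)
    if not ordered or ordered[0]["bandwidth"] != first_bandwidth:
        raise ValueError("no variant has the requested first bandwidth")
    lines = ["#EXTM3U"]
    for v in ordered:
        tag = f"#EXT-X-STREAM-INF:BANDWIDTH={v['bandwidth']}"
        if "resolution" in v:  # omit RESOLUTION for audio-only variants
            tag += f",RESOLUTION={v['resolution']}"
        lines += [tag, v["uri"]]
    return "\n".join(lines) + "\n"
```

With one shared `variants` list, `master_playlist(variants, 150_000)` gives the cellular master index file and `master_playlist(variants, 440_000)` gives a Wi-Fi one. This follows the 150k and 440k recommendations above.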

Video Over Cellular Networks

When you send video to a mobile device such as iPhone or iPad, the client’s Internet connection may move to or from a cellular network at any time.

HTTP Live Streaming allows the client to choose among stream alternates dynamically as the network bandwidth changes, providing the best stream as the device moves between cellular and WiFi connections, for example, or between 3G and EDGE connections. This is a significant advantage over progressive download.

It is strongly recommended that you use HTTP Live Streaming to deliver video to all cellular-capable devices, even for video on demand, so that your viewers have the best experience possible under changing conditions.

In addition, you should provide cellular-capable clients an alternate stream at 64 Kbps or less for slower data connections. If you cannot provide video of acceptable quality at 64 Kbps or lower, you should provide an audio-only stream, or audio with a still image.

A good choice for pixel dimensions when targeting cellular network connections is 400 x 224 for 16:9 content and 400 x 300 for 4:3 content (see Preparing Media for Delivery to iOS-Based Devices).

Requirements for Apps

Warning: iOS apps submitted for distribution in the App Store must conform to these requirements.

If your app delivers video over cellular networks, and the video exceeds either 10 minutes duration or 5 MB of data in a five minute period, you are required to use HTTP Live Streaming. (Progressive download may be used for smaller clips.)

If your app uses HTTP Live Streaming over cellular networks, you are required to provide at least one stream at 64 Kbps or lower bandwidth (the low-bandwidth stream may be audio-only or audio with a still image).

These requirements apply to iOS apps submitted for distribution in the App Store for use on Apple products. Non-compliant apps may be rejected or removed, at the discretion of Apple.
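The cellular rule above is easy to check mechanically. This sketch assumes a constant bit rate and decimal megabytes (1 MB = 1,000,000 bytes). Under those assumptions, 5 MB in 300 seconds works out to about 133 kbps. Any cellular video above roughly that rate that plays for five minutes or more therefore falls under the HLS requirement.

```python
def must_use_hls(duration_seconds, peak_bytes_per_5_min):
    """App Store rule for video over cellular networks: HLS is required if
    the video exceeds 10 minutes or 5 MB in a five-minute period.
    Assumes 1 MB = 1,000,000 bytes."""
    return duration_seconds > 10 * 60 or peak_bytes_per_5_min > 5_000_000


def bytes_in_busiest_5_minutes(kbps, duration_seconds):
    """Bytes moved in the busiest five minutes of a constant-bit-rate stream."""
    window = min(duration_seconds, 5 * 60)  # short clips never fill the window
    return kbps * 1000 / 8 * window
```

For example, a 6-minute clip at 150 kbps moves 5.625 MB in five minutes and needs HLS. The same clip at 110 kbps moves 4.125 MB and may use progressive download.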

Redundant Streams

If your playlist contains alternate streams, they can operate not only as bandwidth or device alternates but also as failure fallbacks. Starting with iOS 3.1, if the client is unable to reload the index file for a stream (due to a 404 error, for example), the client attempts to switch to an alternate stream.

In the event of an index load failure on one stream, the client chooses the highest bandwidth alternate stream that the network connection supports. If there are multiple alternates at the same bandwidth, the client chooses among them in the order listed in the playlist.

You can use this feature to provide redundant streams that will allow media to reach clients even in the event of severe local failures, such as a server crashing or a content distributor node going down.

To support redundant streams, create a stream—or multiple alternate bandwidth streams—and generate a playlist file as you normally would. Then create a parallel stream, or set of streams, on a separate server or content distribution service. Add the list of backup streams to the playlist file, so that the backup stream at each bandwidth is listed after the primary stream. For example, if the primary stream comes from server ALPHA, and the backup stream is on server BETA, your playlist file might look something like this:

```
#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=200000,RESOLUTION=720x480
http://ALPHA.mycompany.com/lo/prog_index.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=200000,RESOLUTION=720x480
http://BETA.mycompany.com/lo/prog_index.m3u8

#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=1920x1080
http://ALPHA.mycompany.com/md/prog_index.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=1920x1080
http://BETA.mycompany.com/md/prog_index.m3u8
```

Note that the backup streams are intermixed with the primary streams in the playlist, with the backup at each bandwidth listed after the primary for that bandwidth.

You are not limited to a single backup stream set. In the example above, ALPHA and BETA could be followed by GAMMA, for instance. Similarly, you need not provide a complete parallel set of streams. You could provide a single low-bandwidth stream on a backup server, for example.
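The fallback rule described in this section can be modeled in a few lines. This is a simplification for illustration, not the client's actual implementation. The parameter `supported_bps` stands in for whatever throughput the connection can sustain. The parser only reads BANDWIDTH and the URI line that follows each EXT-X-STREAM-INF tag.

```python
import re


def parse_master(text):
    """Return [(bandwidth, uri), ...] in playlist order."""
    variants, pending = [], None
    for line in (raw.strip() for raw in text.splitlines()):
        if line.startswith("#EXT-X-STREAM-INF:"):
            # [:,] before the name avoids matching AVERAGE-BANDWIDTH.
            m = re.search(r"[:,]\s*BANDWIDTH=(\d+)", line)
            pending = int(m.group(1)) if m else None
        elif line and not line.startswith("#") and pending is not None:
            variants.append((pending, line))
            pending = None
    return variants


def choose_fallback(variants, failed, supported_bps):
    """Alternate to try after index loads failed for the URIs in `failed`:
    the highest bandwidth the connection supports, ties broken by the
    order in which the playlist lists them."""
    candidates = [(bw, uri) for bw, uri in variants
                  if uri not in failed and bw <= supported_bps]
    if not candidates:
        return None
    best = max(bw for bw, _ in candidates)
    return next(uri for bw, uri in candidates if bw == best)
```

Because each backup is listed right after its primary at the same bandwidth, a failure on ALPHA moves the client to BETA before it drops to a lower bit rate.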

Adding Timed Metadata

You can add various kinds of metadata to media stream segments. For example, you can add the album art, artist’s name, and song title to an audio stream. As another example, you could add the current batter’s name and statistics to video of a baseball game.

If an audio-only stream includes an image as metadata, the Apple client software automatically displays it. Currently, the only metadata that is automatically displayed by the Apple-supplied client software is a still image accompanying an audio-only stream.

If you are writing your own client software, however, using either MPMoviePlayerController or AVPlayerItem, you can access streamed metadata using the timedMetadata property.

If you are writing your own segmenter, you can read about the stream format for timed metadata information in Timed Metadata for HTTP Live Streaming.

If you are using Apple’s tools, you can add timed metadata by specifying a metadata file in the -F command line option to either the stream segmenter or the file segmenter. The specified metadata source can be a file in ID3 format or an image file (JPEG or PNG). Metadata specified this way is automatically inserted into every media segment.

This is called timed metadata because it is inserted into a media stream at a given time offset. Timed metadata can optionally be inserted into all segments after a given time.

To add timed metadata to a live stream, use the id3taggenerator tool, with its output set to the stream segmenter. The tool generates ID3 metadata and passes it to the stream segmenter for inclusion in the outbound stream.

The tag generator can be run from a shell script, for example, to insert metadata at the desired time, or at desired intervals. New timed metadata automatically replaces any existing metadata.

Once metadata has been inserted into a media segment, it is persistent. If a live broadcast is re-purposed as video on demand, for example, it retains any metadata inserted during the original broadcast.

Adding timed metadata to a stream created using the file segmenter is slightly more complicated.

First, generate the metadata samples. You can generate ID3 metadata using the id3taggenerator command-line tool, with the output set to file.

Next, create a metadata macro file: a text file in which each line contains the time to insert the metadata, the type of metadata, and the path and filename of a metadata file.

For example, the following metadata macro file would insert a picture at 1.2 seconds into the stream, then an ID3 tag at 10 seconds:

```
1.2 picture /meta/images/picture.jpg
10 id3 /meta/id3/title.id3
```

Finally, specify the metadata macro file by name when you invoke the media file segmenter, using the -M command line option.

For additional details, see the man pages for mediastreamsegmenter, mediafilesegmenter, and id3taggenerator.

Adding Closed Captions

HTTP Live Streaming supports closed captions within streams. If you are using the stream segmenter, you need to add CEA-608 closed captions to the MPEG-2 transport stream (in the main video elementary stream) as specified in ATSC A/72. If you are using the file segmenter, you should encapsulate your media in a QuickTime movie file and add a closed captions track ('clcp'). If you are writing an app, the AVFoundation framework supports playback of closed captions.

Live streaming also supports multiple subtitle and closed caption tracks in Web Video Text Tracks (WebVTT) format. The sample in Listing 2-1 shows two closed captions tracks in a master playlist.

Listing 2-1 Master playlist with multiple closed captions tracks

```
#EXTM3U

#EXT-X-MEDIA:TYPE=CLOSED-CAPTIONS,GROUP-ID="cc",NAME="CC1",LANGUAGE="en",DEFAULT=YES,AUTOSELECT=YES,INSTREAM-ID="CC1"
#EXT-X-MEDIA:TYPE=CLOSED-CAPTIONS,GROUP-ID="cc",NAME="CC2",LANGUAGE="es",AUTOSELECT=YES,INSTREAM-ID="CC2"

#EXT-X-STREAM-INF:BANDWIDTH=1000000,CLOSED-CAPTIONS="cc"
x.m3u8
```
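In a master playlist, some EXT-X-STREAM-INF attributes name a rendition group, for example SUBTITLES or CLOSED-CAPTIONS. The HLS specification requires each such group to be declared by an EXT-X-MEDIA tag with a matching TYPE and GROUP-ID. A quick check for undeclared groups could look like the sketch below. Its attribute parser is simplified: it handles quoted values that contain commas, but little else.

```python
import re

# NAME=value pairs; a quoted value may itself contain commas.
ATTR = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def attributes(tag_line):
    """Parse the attribute list that follows the first ':' of a tag line."""
    body = tag_line.split(":", 1)[1]
    return {name: value.strip('"') for name, value in ATTR.findall(body)}


def undefined_groups(master_text):
    """Return (attribute, group) pairs used on EXT-X-STREAM-INF tags that no
    EXT-X-MEDIA tag of the matching TYPE declares."""
    declared, used = set(), []
    for line in (raw.strip() for raw in master_text.splitlines()):
        if line.startswith("#EXT-X-MEDIA:"):
            a = attributes(line)
            declared.add((a.get("TYPE"), a.get("GROUP-ID")))
        elif line.startswith("#EXT-X-STREAM-INF:"):
            a = attributes(line)
            for attr in ("AUDIO", "VIDEO", "SUBTITLES", "CLOSED-CAPTIONS"):
                group = a.get(attr)
                # CLOSED-CAPTIONS=NONE means "no captions", not a group name.
                if group is not None and group != "NONE":
                    used.append((attr, group))
    return [pair for pair in used if pair not in declared]
```

The TYPE values (AUDIO, VIDEO, SUBTITLES, CLOSED-CAPTIONS) have the same spelling as the EXT-X-STREAM-INF attributes that refer to them. That is why the sketch can compare the pairs directly.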

In the encoding process, the WebVTT files are broken into segments just as audio and video media. The resulting media playlist includes segment durations to sync text with the correct point in the associated video.

Advanced features of live streaming subtitles and closed captions include semantic metadata, CSS styling, and simple animation.

More information on implementing WebVTT is available in the WWDC 2012 session What's New in HTTP Live Streaming and in the WebVTT: The Web Video Text Tracks Format specification.

Preparing Media for Delivery to iOS-Based Devices

The recommended encoder settings for streams used with iOS-based devices are shown in the following tables. For live streams, these settings should be available from your hardware or software encoder. If you are re-encoding from a master file for video on demand, you can use a video editing tool such as Compressor.

File format for the file segmenter can be a QuickTime movie, MPEG-4 video, or MP3 audio, using the specified encoding.

Stream format for the stream segmenter must be MPEG elementary audio and video streams, wrapped in an MPEG-2 transport stream, and using the following encoding. The Audio Technologies and Video Technologies list supported compression formats.

Encode video using H.264 compression

H.264 Baseline 3.0: All devices

H.264 Baseline 3.1: iPhone 3G and later, and iPod touch 2nd generation and later.

H.264 Main Profile 3.1: iPad (all versions), Apple TV 2 and later, and iPhone 4 and later.

H.264 Main Profile 4.0: Apple TV 3 and later, iPad 2 and later, and iPhone 4S and later.

H.264 High Profile 4.0: Apple TV 3 and later, iPad 2 and later, and iPhone 4S and later.

H.264 High Profile 4.1: iPad 2 and later and iPhone 4S and later.

A frame rate of 10 fps is recommended for video streams under 200 kbps. For video streams under 300 kbps, a frame rate of 12 to 15 fps is recommended. For all other streams, a frame rate of 29.97 fps is recommended.
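The frame-rate guidance above maps directly onto a function. In this sketch we take 15 fps for the 12 to 15 fps band, and we assume keyframes spaced 3 seconds apart. At 10, 15, and 29.97 fps that spacing gives 30, 45, and 90 frames, matching the Keyframes column in the tables that follow. Both choices are our reading of the recommendations, not stated rules.

```python
def recommended_frame_rate(kbps):
    """Frame rate suggested for a video stream of `kbps` kilobits/s."""
    if kbps < 200:
        return 10.0
    if kbps < 300:
        return 15.0  # upper end of the recommended 12-15 fps range
    return 29.97


def keyframe_interval_frames(fps, seconds_between_keyframes=3.0):
    """Number of frames between keyframes for a given spacing in seconds.

    The 3-second default is an assumption inferred from the tables.
    """
    return round(fps * seconds_between_keyframes)
```

Feeding in the tables' total bit rates (150, 240, 440 kbps) reproduces their Keyframes values. Using the video bit rates (110, 200, 400 kbps) gives the same frame rates.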

Encode audio as either of the following:

HE-AAC or AAC-LC, stereo

MP3 (MPEG-1 Audio Layer 3), stereo

Optionally include an AC-3 audio track for Apple TV (or AirPlay to an Apple TV) when used with a surround sound receiver or TV that supports AC-3 input.

A minimum audio sample rate of 22.05 kHz and a minimum audio bit rate of 40 kbps are recommended in all cases, and higher sampling rates and bit rates are strongly encouraged when streaming over Wi-Fi.

| Connection | Dimensions | Total bit rate | Video bit rate | Keyframes |
|---|---|---|---|---|
| Cellular | 400 x 224 | 64 kbps | audio only | none |
| Cellular | 400 x 224 | 150 kbps | 110 kbps | 30 |
| Cellular | 400 x 224 | 240 kbps | 200 kbps | 45 |
| Cellular | 400 x 224 | 440 kbps | 400 kbps | 90 |
| WiFi | 640 x 360 | 640 kbps | 600 kbps | 90 |

| Connection | Dimensions | Total bit rate | Video bit rate | Keyframes |
|---|---|---|---|---|
| Cellular | 400 x 300 | 64 kbps | audio only | none |
| Cellular | 400 x 300 | 150 kbps | 110 kbps | 30 |
| Cellular | 400 x 300 | 240 kbps | 200 kbps | 45 |
| Cellular | 400 x 300 | 440 kbps | 400 kbps | 90 |
| WiFi | 640 x 480 | 640 kbps | 600 kbps | 90 |

| Connection | Dimensions | Total bit rate | Video bit rate | Keyframes |
|---|---|---|---|---|
| WiFi | 640 x 360 | 1240 kbps | 1200 kbps | 90 |
| WiFi | 960 x 540 | 1840 kbps | 1800 kbps | 90 |
| WiFi | 1280 x 720 | 2540 kbps | 2500 kbps | 90 |
| WiFi | 1280 x 720 | 4540 kbps | 4500 kbps | 90 |

| Connection | Dimensions | Total bit rate | Video bit rate | Keyframes |
|---|---|---|---|---|
| WiFi | 640 x 480 | 1240 kbps | 1200 kbps | 90 |
| WiFi | 960 x 720 | 1840 kbps | 1800 kbps | 90 |
| WiFi | 960 x 720 | 2540 kbps | 2500 kbps | 90 |
| WiFi | 1280 x 960 | 4540 kbps | 4500 kbps | 90 |

| Connection | Dimensions | Total bit rate | Video bit rate | Keyframes |
|---|---|---|---|---|
| WiFi | 1920 x 1080 | 12000 kbps | 11000 kbps | 90 |
| WiFi | 1920 x 1080 | 25000 kbps | 24000 kbps | 90 |
| WiFi | 1920 x 1080 | 40000 kbps | 39000 kbps | 90 |

Sample Streams

A series of HTTP streams is available for testing on Apple’s developer site. These examples show proper formatting of HTML to embed streams, .M3U8 files to index the streams, and .ts media segment files. The streams can be accessed from the HTTP Live Streaming Resources.

=============================================================================

Deploying HTTP Live Streaming

To deploy HTTP Live Streaming, you need to create either an HTML page for browsers or a client app to act as a receiver. You also need a web server and a way either to encode live streams as MPEG-2 transport streams or to create MP3 or MPEG-4 media files with H.264 and AAC encoding from your source material.

You can use the Apple-provided tools to segment the streams or media files, and to produce the index files and variant playlists (see Download the Tools).

You should use the Apple-provided media stream validator prior to serving your streams, to ensure that they are fully compliant with HTTP Live Streaming.

You may want to encrypt your streams, in which case you probably also want to serve the encryption key files securely over HTTPS, so that only your intended clients can decrypt them.

Creating an HTML Page

The easiest way to distribute HTTP Live Streaming media is to create a webpage that includes the HTML5 <video> tag, using an .M3U8 playlist file as the video source. An example is shown in Listing 3-1.

Listing 3-1 Serving HTTP Live Streaming in a webpage

```html
<html>
<head>
    <title>HTTP Live Streaming Example</title>
</head>
<body>
    <video
        src="http://devimages.apple.com/iphone/samples/bipbop/bipbopall.m3u8"
        height="300" width="400"
    >
    </video>
</body>
</html>
```

For browsers that don’t support the HTML5 video element, or browsers that don’t support HTTP Live Streaming, you can include fallback code between the <video> and </video> tags. For example, you could fall back to a progressive download movie or an RTSP stream using the QuickTime plug-in. See Safari HTML5 Audio and Video Guide for examples.

Configuring a Web Server

HTTP Live Streaming can be served from an ordinary web server; no special configuration is necessary, apart from associating the MIME types of the files being served with their file extensions.

Configure the following MIME types for HTTP Live Streaming:

| File Extension | MIME Type |
|---|---|
| .M3U8 | application/x-mpegURL or application/vnd.apple.mpegurl |
| .ts | video/MP2T |

If your web server is constrained with respect to MIME types, you can serve files ending in .m3u with MIME type audio/mpegURL for compatibility.
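Associating MIME types is usually a one-line change in a production server's configuration. For a quick local test, you could use Python's built-in `http.server` instead, which is an assumption here and not a deployment recommendation. The sketch below sets the .m3u8 and .ts types explicitly rather than relying on the host's MIME tables, whose entry for .ts varies from system to system.

```python
import functools
import http.server


class HLSRequestHandler(http.server.SimpleHTTPRequestHandler):
    """Serves static files, with explicit MIME types for HLS files."""

    # Start from the stock map and add (or override) the two HLS types.
    # guess_type() also retries with a lowercased extension, so ".M3U8"
    # resolves too.
    extensions_map = {
        **http.server.SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",
        ".ts": "video/mp2t",
    }


def serve(directory, port=8000):
    """Serve `directory` over HTTP until interrupted (for testing only)."""
    handler = functools.partial(HLSRequestHandler, directory=directory)
    with http.server.ThreadingHTTPServer(("", port), handler) as httpd:
        httpd.serve_forever()
```

For example, `serve("/Library/WebServer/Documents/stream")` would serve the output directory used in the mediastreamsegmenter example. The port 8000 is an arbitrary choice.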

Index files can be long and may be frequently redownloaded, but they are text files and can be compressed very efficiently. You can reduce server overhead by enabling on-the-fly gzip compression of .M3U8 index files; the HTTP Live Streaming client automatically unzips compressed index files.

Shortening time-to-live (TTL) values for .M3U8 files may also be needed to achieve proper caching behavior for downstream web caches, as these files are frequently overwritten during live broadcasts, and the latest version should be downloaded for each request. Check with your content delivery service provider for specific recommendations. For VOD, the index file is static and downloaded only once, so caching is not a factor.
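One way to follow the TTL advice is to set Cache-Control per file type. The policy below is an assumption to adapt with your CDN, not a documented requirement. Live .M3U8 files get a lifetime of half a target duration, which stays under the one-segment limit for live index files. Media segments and VOD index files do not change once written, so they get a long lifetime: 86400 seconds here, chosen arbitrarily.

```python
def cache_control(path, live, target_duration):
    """Cache-Control header value for an HLS file (a policy sketch).

    path: requested file path; live: True during a live broadcast;
    target_duration: the playlist's EXT-X-TARGETDURATION in seconds.
    """
    if path.lower().endswith(".m3u8") and live:
        # Live index files are rewritten every segment: keep them fresh.
        return f"max-age={max(1, target_duration // 2)}"
    # Segments and VOD playlists are immutable once published.
    return "max-age=86400"
```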

Validating Your Streams

The mediastreamvalidator tool is a command-line utility for validating HTTP Live Streaming streams and servers (see Download the Tools for details on obtaining the tool).

The media stream validator simulates an HTTP Live Streaming session and verifies that the index file and media segments conform to the HTTP Live Streaming specification. It performs several checks to ensure reliable streaming. If any errors or problems are found, a detailed diagnostic report is displayed.

You should always run the validator prior to serving a new stream or alternate stream set.

The media stream validator shows a listing of the streams you provide, followed by the timing results for each of those streams. (It may take a few minutes to calculate the actual timing.) An example of validator output follows.

```
$ mediastreamvalidator -d iphone http://devimages.apple.com/iphone/samples/bipbop/gear3/prog_index.m3u8
mediastreamvalidator: Beta Version 1.1(130423)

Validating http://devimages.apple.com/iphone/samples/bipbop/gear3/prog_index.m3u8

--------------------------------------------------------------------------------
http://devimages.apple.com/iphone/samples/bipbop/gear3/prog_index.m3u8
--------------------------------------------------------------------------------

Playlist Syntax: OK

Segments: OK

Average segment duration: 9.91 seconds
Segment bitrate: Average: 509.56 kbits/sec, Max: 840.74 kbits/sec
Average segment structural overhead: 97.49 kbits/sec (19.13 %)
```
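The bit-rate figures in a report like this can be approximated from segment sizes and durations. The same numbers give a quick way to check a BANDWIDTH attribute against reality. This sketch assumes the average is total bits divided by total time; the validator's exact method is not documented here. It also uses an arbitrary 10% tolerance in `bandwidth_ok`.

```python
def segment_bitrates(segments):
    """segments: list of (size_in_bytes, duration_in_seconds).

    Returns (average duration in s, average kbit/s, peak kbit/s).
    """
    per_segment = [size * 8 / 1000 / dur for size, dur in segments]
    total_bits = sum(size * 8 for size, _ in segments)
    total_time = sum(dur for _, dur in segments)
    return total_time / len(segments), total_bits / 1000 / total_time, max(per_segment)


def bandwidth_ok(declared_bps, peak_kbps, tolerance=0.10):
    """True if a BANDWIDTH value (bits/s) is within `tolerance` of the
    measured peak segment bit rate (kbit/s)."""
    return abs(declared_bps / 1000 - peak_kbps) <= tolerance * peak_kbps
```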

For more information, read Media Stream Validator Tool Results Explained.

Serving Key Files Securely Over HTTPS

You can protect your media by encrypting it. The file segmenter and stream segmenter both have encryption options, and you can tell them to change the encryption key periodically. Who you share the keys with is up to you.

Key files require an initialization vector (IV) to decode encrypted media. The IVs can be changed periodically, just as the keys can. The current recommendation for encrypting media while minimizing overhead is to change the key every 3-4 hours and to change the IV after every 50 Mb of data.

Even with restricted access to keys, however, it is possible for an eavesdropper to obtain copies of the key files if they are sent over HTTP. One solution to this problem is to send the keys securely over HTTPS.

Before you attempt to serve key files over HTTPS, test serving the keys over HTTP from an internal web server. This allows you to debug your setup before adding HTTPS to the mix. Once you have a known working system, you are ready to make the switch to HTTPS.

There are three conditions you must meet in order to use HTTPS to serve keys for HTTP Live Streaming:

You need to install an SSL certificate signed by a trusted authority on your HTTPS server.

The authentication domain for the key files must be the same as the authentication domain for the first playlist file. The simplest way to accomplish this is to serve the variant playlist file from the HTTPS server—the variant playlist file is downloaded only once, so this shouldn’t cause an excessive burden. Other playlist files can be served using HTTP.

You must either initiate your own dialog for the user to authenticate, or you must store the credentials on the client device; HTTP Live Streaming does not provide user dialogs for authentication. If you are writing your own client app, you can store credentials, whether cookie-based or HTTP digest based, and supply the credentials in the didReceiveAuthenticationChallenge callback (see Using NSURLConnection and Authentication Challenges and TLS Chain Validation for details). The credentials you supply are cached and reused by the media player.
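The first two conditions can be checked mechanically before deployment. The sketch below scans a media playlist for EXT-X-KEY URIs and flags any that are not HTTPS or are not on the same host as the variant playlist. It treats "authentication domain" as scheme plus host plus port, which is a simplifying assumption; the function name and example URLs are hypothetical.

```python
import re
from urllib.parse import urljoin, urlsplit

KEY_URI_RE = re.compile(r'^#EXT-X-KEY:.*?\bURI="([^"]+)"')


def key_uri_problems(variant_url, media_url, media_playlist):
    """List key URIs that are not HTTPS or not on the variant playlist's host.

    Treats "authentication domain" as scheme + host + port, an assumption.
    """
    variant = urlsplit(variant_url)
    problems = []
    for line in media_playlist.splitlines():
        match = KEY_URI_RE.match(line.strip())
        if not match:
            continue  # not a key tag, or METHOD=NONE with no URI
        # Relative key URIs resolve against the media playlist's own URL.
        uri = urljoin(media_url, match.group(1))
        parts = urlsplit(uri)
        if parts.scheme != "https":
            problems.append((uri, "not served over HTTPS"))
        elif (parts.hostname, parts.port) != (variant.hostname, variant.port):
            problems.append((uri, "different host from the variant playlist"))
    return problems
```

Note that a relative key URI in a media playlist fetched over plain HTTP resolves to an HTTP URL, which is exactly the case this check is meant to catch.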

Important: You must obtain an SSL certificate signed by a trusted authority in order to use an HTTPS server with HTTP Live Streaming.

If your HTTPS server does not have an SSL certificate signed by a trusted authority, you can still test your setup by creating a self-signed SSL Certificate Authority and a leaf certificate for your server. Attach the certificate for the certificate authority to an email, send it to a device you want to use as a Live Streaming client, and tap on the attachment in Mail to make the device trust the server.

A sample-level encryption format is documented in MPEG-2 Stream Encryption Format for HTTP Live Streaming.

=============================================================================

Frequently Asked Questions

What kinds of encoders are supported?

The protocol specification does not limit the encoder selection. However, the current Apple implementation should interoperate with encoders that produce MPEG-2 Transport Streams containing H.264 video and AAC audio (HE-AAC or AAC-LC). Encoders that are capable of broadcasting the output stream over UDP should also be compatible with the current implementation of the Apple-provided segmenter software.

What are the specifics of the video and audio formats supported?

Although the protocol specification does not limit the video and audio formats, the current Apple implementation supports the following formats:

Video:

H.264 Baseline Level 3.0, Baseline Level 3.1, Main Level 3.1, and High Profile Level 4.1.

Audio:

HE-AAC or AAC-LC up to 48 kHz, stereo audio

MP3 (MPEG-1 Audio Layer 3) 8 kHz to 48 kHz, stereo audio

AC-3 (for Apple TV, in pass-through mode only)

Note: iPad, iPhone 3G, and iPod touch (2nd generation and later) support H.264 Baseline 3.1. If your app runs on older versions of iPhone or iPod touch, however, you should use H.264 Baseline 3.0 for compatibility. If your content is intended solely for iPad, Apple TV, iPhone 4 and later, and Mac OS X computers, you should use Main Level 3.1.

What duration should media files be?

The main point to consider is that shorter segments result in more frequent refreshes of the index file, which might create unnecessary network overhead for the client. Longer segments will extend the inherent latency of the broadcast and initial startup time. A duration of 10 seconds of media per file seems to strike a reasonable balance for most broadcast content.
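To make the trade-off concrete, the sketch below splits an on-demand asset into segments of a chosen target duration, renders a simple VOD index file, and estimates how often a live client refreshes the index. It assumes ideal cuts (a real segmenter cuts on keyframes, so durations vary) and assumes roughly one index reload per target duration; the segment file names are hypothetical.

```python
import math


def plan_segments(total_seconds, target=10.0):
    """Split an asset into segment durations of at most `target` seconds.

    Assumes ideal cuts; a real segmenter cuts on keyframes, so actual
    durations will vary around the target.
    """
    if total_seconds <= 0 or target <= 0:
        raise ValueError("durations must be positive")
    full, rest = divmod(total_seconds, target)
    durations = [float(target)] * int(full)
    if rest > 1e-9:
        durations.append(float(rest))
    return durations


def vod_playlist(durations, name="segment{}.ts"):
    """Render a simple VOD index; segment names are hypothetical."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # decimal EXTINF values need version 3 or later
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations))}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for i, d in enumerate(durations):
        lines.append(f"#EXTINF:{d:.3f},")
        lines.append(name.format(i))
    lines.append("#EXT-X-ENDLIST")  # VOD: the list is complete
    return "\n".join(lines) + "\n"


def live_reloads_per_hour(target):
    """Rough index refreshes per client per hour, assuming one reload per target duration."""
    return 3600 / target
```

Under that assumption, halving the segment length from 10 to 5 seconds doubles index traffic from about 360 to about 720 requests per client per hour, which is the network overhead the answer warns about.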

How many files should be listed in the index file during a continuous, ongoing session?

The normal recommendation is 3, but the optimum number may be larger.

The important point to consider when choosing the optimum number is that the number of files available during a live session constrains the client's behavior when doing play/pause and seeking operations. The more files in the list, the longer the client can be paused without losing its place in the broadcast, the further back in the broadcast a new client begins when joining the stream, and the wider the time range within which the client can seek. The trade-off is that a longer index file adds to network overhead—during live broadcasts, the clients are all refreshing the index file regularly, so it does add up, even though the index file is typically small.
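A live index file with a moving window can be sketched as a fixed-size queue: each new segment pushes out the oldest one, and EXT-X-MEDIA-SEQUENCE advances so clients can tell which segments were dropped. The class below is a hypothetical helper illustrating that behavior, not Apple's segmenter.

```python
from collections import deque


class LiveWindow:
    """Hypothetical helper: keeps the last `window` segments of a live stream."""

    def __init__(self, window=3, target_duration=10):
        self.target_duration = target_duration
        # deque with maxlen silently drops the oldest entry when full.
        self.segments = deque(maxlen=window)  # (sequence number, duration, uri)
        self.next_sequence = 0

    def add_segment(self, duration, uri):
        self.segments.append((self.next_sequence, duration, uri))
        self.next_sequence += 1

    def playlist(self):
        # EXT-X-MEDIA-SEQUENCE is the sequence number of the first listed segment.
        first = self.segments[0][0] if self.segments else self.next_sequence
        lines = [
            "#EXTM3U",
            "#EXT-X-VERSION:3",
            f"#EXT-X-TARGETDURATION:{self.target_duration}",
            f"#EXT-X-MEDIA-SEQUENCE:{first}",
        ]
        for _, duration, uri in self.segments:
            lines.append(f"#EXTINF:{duration:.3f},")
            lines.append(uri)
        # No EXT-X-ENDLIST: the broadcast is still running.
        return "\n".join(lines) + "\n"

    def seekable_seconds(self):
        # How far back a paused or seeking client can go within the window.
        return sum(duration for _, duration, _ in self.segments)
```

With the recommended 3 entries of 10 seconds each, a client can pause or seek back roughly 30 seconds; a 6-entry window doubles that range at the cost of a larger index file that every client keeps re-downloading.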

What data rates are supported?

The data rate that a content provider chooses for a stream is most influenced by the target client platform and the expected network topology. The streaming protocol itself places no limitations on the data rates that can be used. The current implementation has been tested using audio-video streams with data rates as low as 64 Kbps and as high as 3 Mbps to iPhone. Audio-only streams at 64 Kbps are recommended as alternates for delivery over slow cellular connections.

For recommended data rates, see Preparing Media for Delivery to iOS-Based Devices.

Note: If the data rate exceeds the available bandwidth, there is more latency before startup and the client may have to pause to buffer more data periodically. If a broadcast uses an index file that provides a moving window into the content, the client will eventually fall behind in such cases, causing one or more segments to be dropped. In the case of VOD, no segments are lost, but inadequate bandwidth does cause slower startup and periodic stalling while data buffers.
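The falling-behind effect in the note above is simple arithmetic: a segment of d seconds at bit rate r takes r × d / b seconds to download over bandwidth b, so whenever r exceeds b every segment costs more time than it plays. The sketch below computes that growing lag; it ignores HTTP and TCP overhead and any player buffering strategy, which is a simplification.

```python
def download_seconds(stream_bps, segment_seconds, bandwidth_bps):
    """Time to fetch one segment, ignoring HTTP/TCP overhead (a simplification)."""
    return stream_bps * segment_seconds / bandwidth_bps


def lag_after(segments, stream_bps, segment_seconds, bandwidth_bps):
    """Seconds a live client has fallen behind after fetching `segments` segments."""
    extra = download_seconds(stream_bps, segment_seconds, bandwidth_bps) - segment_seconds
    return max(0.0, extra) * segments
```

For example, an 800 Kbps stream in 10-second segments over a 600 Kbps link needs about 13.3 seconds per segment, so the client slips about 3.3 seconds per segment and is roughly 30 seconds behind after nine segments, as much time as a 3-entry, 10-second window holds.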

What is a .ts file?

A .ts file contains an MPEG-2 Transport Stream. This is a file format that encapsulates a series of encoded media samples, typically audio and video. The file format supports a variety of compression formats, including MP3 audio, AAC audio, H.264 video, and so on. Not all compression formats are currently supported in the Apple HTTP Live Streaming implementation, however. (For a list of currently supported formats, see Media Encoder.) MPEG-2 Transport Streams are containers, and should not be confused with MPEG-2 compression.
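Because a transport stream is a container, a .ts segment can be inspected without decoding any audio or video. Per ISO/IEC 13818-1, it is a sequence of 188-byte packets, each starting with the sync byte 0x47 and carrying a 13-bit packet identifier (PID); PID 0 carries the Program Association Table. The sketch below, with a hypothetical function name, verifies packet alignment and counts packets per PID.

```python
TS_PACKET_SIZE = 188  # every MPEG-2 TS packet is 188 bytes
SYNC_BYTE = 0x47      # and starts with this sync byte


def count_pids(data):
    """Count packets per PID in a buffer holding whole TS packets."""
    if len(data) % TS_PACKET_SIZE:
        raise ValueError("buffer length is not a multiple of 188 bytes")
    counts = {}
    for offset in range(0, len(data), TS_PACKET_SIZE):
        packet = data[offset:offset + TS_PACKET_SIZE]
        if packet[0] != SYNC_BYTE:
            raise ValueError(f"missing sync byte at offset {offset}")
        # The PID is the low 5 bits of byte 1 followed by all 8 bits of byte 2.
        pid = ((packet[1] & 0x1F) << 8) | packet[2]
        counts[pid] = counts.get(pid, 0) + 1
    return counts
```

To use it on a real segment, read the file in binary mode and pass its bytes to count_pids; a segment that is not packet-aligned raises ValueError instead of returning misleading counts.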

What is an .M3U8 file?

An .M3U8 file is an extensible playlist file format. It is an m3u playlist containing UTF-8 encoded text. The m3u file format is a de facto standard playlist format suitable for carrying lists of media file URLs. This is the format used as the index file for HTTP Live Streaming. For details, see the IETF Internet-Draft of the HTTP Live Streaming specification.

How does the client software determine when to switch streams?

The current implementation of the client observes the effective bandwidth while playing a stream. If a higher-quality stream is available and the bandwidth appears sufficient to support it, the client switches to a higher quality. If a lower-quality stream is available and the current bandwidth appears insufficient to support the current stream, the client switches to a lower quality.

Note: For seamless transitions between alternate streams, the audio portion of the stream should be identical in all versions.
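The switching behavior described above can be approximated in a few lines. The sketch below reads the BANDWIDTH attribute of each EXT-X-STREAM-INF entry and picks the highest-bandwidth alternate that fits within a fraction of the measured bandwidth, falling back to the lowest one. This is a simplified policy for illustration, not Apple's actual algorithm; the 0.8 headroom factor and function names are assumptions.

```python
import re

# Tolerates ", " spacing between attributes; does not match AVERAGE-BANDWIDTH.
BANDWIDTH_RE = re.compile(r"(?:^|,)\s*BANDWIDTH=(\d+)")


def parse_variants(master_playlist):
    """Return (bandwidth, uri) pairs from a variant playlist, lowest first."""
    lines = [line.strip() for line in master_playlist.splitlines() if line.strip()]
    variants = []
    for tag, uri in zip(lines, lines[1:]):
        if tag.startswith("#EXT-X-STREAM-INF:"):
            match = BANDWIDTH_RE.search(tag.split(":", 1)[1])
            if match:
                variants.append((int(match.group(1)), uri))
    return sorted(variants)


def choose_variant(variants, measured_bps, headroom=0.8):
    """Pick the richest variant that fits within a fraction of measured bandwidth.

    `headroom` is an assumed safety margin, not a value from Apple's player.
    """
    if not variants:
        raise ValueError("no variants to choose from")
    budget = measured_bps * headroom
    fitting = [v for v in variants if v[0] <= budget]
    # Fall back to the lowest-bandwidth stream when nothing fits.
    return fitting[-1] if fitting else variants[0]
```

The headroom keeps the client from choosing a stream whose bit rate equals the measured bandwidth exactly, which would leave no margin for the bandwidth fluctuations that trigger switching in the first place.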

Where can I find a copy of the media stream segmenter from Apple?

The media stream segmenter, file stream segmenter, and other tools are frequently updated, so you should download the current version of the HTTP Live Streaming Tools from the Apple Developer website. See Download the Tools for details.

What settings are recommended for a typical HTTP stream, with alternates, for use with the media segmenter from Apple?

See Preparing Media for Delivery to iOS-Based Devices.

These settings are the current recommendations. There are also certain requirements. The current mediastreamsegmenter tool works only with MPEG-2 Transport Streams as defined in ISO/IEC 13818. The transport stream must contain H.264 (MPEG-4, part 10) video and AAC or MPEG audio. If AAC audio is used, it must have ADTS headers. H.264 video access units must use Access Unit Delimiter NALs, and must be in unique PES packets.

The segmenter also has a number of user-configurable settings. You can obtain a list of the command line arguments and their meanings by typing man mediastreamsegmenter from the Terminal application. A target duration (length of the media segments) of 10 seconds is recommended, and is the default if no target duration is specified.

How can I specify what codecs or H.264 profile are required to play back my stream?

Use the CODECS attribute of the EXT-X-STREAM-INF tag. When this attribute is present, it must include all codecs and profiles required to play back the stream. The following values are currently recognized:

AAC-LC: "mp4a.40.2"
HE-AAC: "mp4a.40.5"
MP3: "mp4a.40.34"
H.264 Baseline Profile level 3.0: "avc1.42001e" or "avc1.66.30" (use "avc1.66.30" for compatibility with iOS versions 3.0 to 3.1.2)
H.264 Baseline Profile level 3.1: "avc1.42001f"
H.264 Main Profile level 3.0: "avc1.4d001e" or "avc1.77.30" (use "avc1.77.30" for compatibility with iOS versions 3.0 to 3.1.2)
H.264 Main Profile level 3.1: "avc1.4d001f"
H.264 Main Profile level 4.0: "avc1.4d0028"
H.264 High Profile level 3.1: "avc1.64001f"
H.264 High Profile level 4.0: "avc1.640028"
H.264 High Profile level 4.1: "avc1.640029"

The attribute value must be in quotes. If multiple values are specified, one set of quotes is used to contain all values, and the values are separated by commas. An example follows.

#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=720x480
mid_video_index.M3U8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=800000,RESOLUTION=1280x720
wifi_video_index.M3U8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=3000000,CODECS="avc1.4d001e,mp4a.40.5",RESOLUTION=1920x1080
h264main_heaac_index.M3U8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=64000,CODECS="mp4a.40.5"
aacaudio_index.M3U8
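The avc1 values in the list above follow a regular pattern (RFC 6381): "avc1." followed by three hex bytes giving profile_idc, the constraint flags, and level_idc, where level_idc is the level times ten. The older "avc1.66.30" form gives profile and level in decimal. The sketch below, with hypothetical helper names, decodes both forms and splits a quoted CODECS value into its entries.

```python
# profile_idc values from the H.264 specification for the profiles listed above.
PROFILES = {66: "Baseline", 77: "Main", 100: "High"}


def describe_avc1(codec):
    """Return (profile name, level) for an avc1 CODECS entry."""
    prefix, _, rest = codec.strip().partition(".")
    if prefix != "avc1" or not rest:
        raise ValueError(f"not an avc1 codec string: {codec!r}")
    if "." in rest:
        # Legacy form such as "avc1.66.30": decimal profile_idc and level_idc.
        profile_text, level_text = rest.split(".", 1)
        profile_idc, level_idc = int(profile_text), int(level_text)
    elif len(rest) == 6:
        # RFC 6381 form "avc1.PPCCLL": hex profile_idc, constraint flags, level_idc.
        profile_idc, level_idc = int(rest[0:2], 16), int(rest[4:6], 16)
    else:
        raise ValueError(f"unrecognized avc1 string: {codec!r}")
    name = PROFILES.get(profile_idc, f"profile_idc {profile_idc}")
    return name, level_idc / 10  # level_idc 31 means level 3.1


def split_codecs(attribute_value):
    """Split a quoted CODECS value such as '"avc1.4d001e,mp4a.40.5"'."""
    return [c.strip() for c in attribute_value.strip('"').split(",") if c.strip()]
```

Decoding "avc1.4d001e" this way gives Main profile, level 3.0, matching its entry in the list; that is a quick sanity check before publishing a playlist.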

How can I create an audio-only stream from audio/video input?

Add the --audio-only argument when invoking the stream or file segmenter.

How can I add a still image to an audio-only stream?

Use the --meta-file argument when invoking the stream or file segmenter with --meta-type=picture to add an image to every segment. For example, this would add an image named poster.jpg to every segment of an audio stream created from the file track01.mp3:

mediafilesegmenter -f /Dir/outputFile -a --meta-file=poster.jpg --meta-type=picture track01.mp3

Remember that the image is typically resent every ten seconds, so it's best to keep the file size small.
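The cost of the resent image is easy to quantify: an image of S bytes sent once per segment of d seconds adds 8 × S / d bits per second. The sketch below assumes one copy per segment, as the answer describes; the 50,000-byte poster size is a hypothetical example.

```python
def image_overhead_kbps(image_bytes, segment_seconds=10):
    """Extra bit rate (Kbps) from resending one image with every segment."""
    return image_bytes * 8 / segment_seconds / 1000
```

A 50,000-byte poster resent every 10 seconds adds 40 Kbps, which is 62.5% on top of a 64 Kbps audio-only stream; a 10,000-byte image adds only 8 Kbps.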

How can I specify an audio-only alternate to an audio-video stream?

Use the CODECS and BANDWIDTH attributes of the EXT-X-STREAM-INF tag together.

The BANDWIDTH attribute specifies the bandwidth required for each alternate stream. If the available bandwidth is enough for the audio alternate, but not enough for the lowest video alternate, the client switches to the audio stream.

If the CODECS attribute is included, it must list all codecs required to play the stream. If only an audio codec is specified, the stream is identified as audio-only. Currently, it is not required to specify that a stream is audio-only, so use of the CODECS attribute is optional.

The following is an example that specifies video streams at 500 Kbps for fast connections, 150 Kbps for slower connections, and an audio-only stream at 64 Kbps for very slow connections. All the streams should use the same 64 Kbps audio to allow transitions between streams without an audible disturbance.

#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=1920x1080
mid_video_index.M3U8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=150000,RESOLUTION=720x480
3g_video_index.M3U8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=64000,CODECS="mp4a.40.5"
aacaudio_index.M3U8
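Variant playlists like this one are often generated rather than written by hand. The sketch below renders EXT-X-STREAM-INF entries from a list of alternates, putting all codecs inside one pair of quotes and writing attribute lists without spaces, and flags an alternate as audio-only when every listed codec is an mp4a audio codec. The Variant type and function names are hypothetical; PROGRAM-ID=1 is copied from the examples here, although later revisions of the protocol removed that attribute.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Variant:
    uri: str
    bandwidth: int                      # bits per second
    codecs: List[str] = field(default_factory=list)
    resolution: Optional[str] = None    # e.g. "720x480"


def master_playlist(variants):
    """Render a variant playlist in the style of the examples in this FAQ."""
    lines = ["#EXTM3U"]
    for v in variants:
        attrs = ["PROGRAM-ID=1", f"BANDWIDTH={v.bandwidth}"]
        if v.codecs:
            # One pair of quotes around all codecs, separated by commas.
            attrs.append('CODECS="' + ",".join(v.codecs) + '"')
        if v.resolution:
            attrs.append(f"RESOLUTION={v.resolution}")
        lines.append("#EXT-X-STREAM-INF:" + ",".join(attrs))  # no spaces in attribute lists
        lines.append(v.uri)
    return "\n".join(lines) + "\n"


def is_audio_only(v):
    """A variant is audio-only when every listed codec is an mp4a.* audio codec."""
    return bool(v.codecs) and all(c.startswith("mp4a.") for c in v.codecs)
```

Passing the three alternates above, in the same order, reproduces the example playlist line for line.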

What are the hardware requirements or recommendations for servers?

See question #1 for encoder hardware recommendations.

The Apple stream segmenter is capable of running on any Intel-based Mac. We recommend using a Mac with two Ethernet network interfaces, such as a Mac Pro or an Xserve. One network interface can be used to obtain the encoded stream from the local network, while the second network interface can provide access to a wider network.

Does the Apple implementation of HTTP Live Streaming support DRM?

No. However, media can be encrypted, and key access can be limited by requiring authentication when the client retrieves the key from your HTTPS server.

What client platforms are supported?