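Advanced K8S (Part 2)

Table of Contents

- Chapter 11: Pod Data Persistence
- 11.1 emptyDir
- 11.2 hostPath
- 11.3 NFS Network Storage
- 11.4 PV & PVC
- 11.5 Static PV Provisioning
- 11.6 Dynamic PV Provisioning
- 11.7 Dynamic PV Provisioning in Practice (NFS)
- Chapter 12: Stateful Application Deployment Revisited
- Preface
- 1. StatefulSet Controller Overview
- 2. Stable Network ID
- 3. Stable Storage
- Summary
- Chapter 13: Kubernetes Security Framework and User Permission Assignment
- 1. The Kubernetes Security Framework
- 2. Transport Security, Authentication, Authorization, Admission Control
- 3. Authorizing with RBAC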

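Chapter 11: Pod Data Persistence

Reference: https://kubernetes.io/docs/concepts/storage/volumes/

A Kubernetes Volume gives containers the ability to mount external storage.

A Pod must define both the volume source (spec.volumes) and the mount point (spec.containers[].volumeMounts) before it can use a Volume.

11.1 emptyDir

Creates an empty volume and mounts it into the containers of a Pod. The volume is deleted together with the Pod.

Use case: sharing data between containers in the same Pod.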

apiVersion: v1
kind: Pod
metadata:
  name: my-pod              # Pod name
spec:                       # Pod spec
  containers:               # container specs; this Pod runs two containers
  - name: write             # writer container
    image: centos
    command: ["bash","-c","for i in {1..100};do echo $i >> /data/hello;sleep 1;done"]   # appends to /data/hello
    volumeMounts:
    - name: data            # name of the volume to mount
      mountPath: /data      # both containers mount the same directory, /data
  - name: read              # reader container
    image: centos
    command: ["bash","-c","tail -f /data/hello"]   # reads /data/hello
    volumeMounts:
    - name: data            # name of the volume to mount
      mountPath: /data      # both containers mount the same directory, /data
  volumes:                  # volume sources
  - name: data              # volume name
    emptyDir: {}            # empty volume

[root@k8s-master ~]# kubectl delete pod my-pod

[root@k8s-master ~]# vim emptyDir.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: write
    image: centos
    command: ["bash","-c","for i in {1..100};do echo $i >> /data/hello;sleep 1;done"]
    volumeMounts:
    - name: data
      mountPath: /data
  - name: read
    image: centos
    command: ["bash","-c","tail -f /data/hello"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    emptyDir: {}

[root@k8s-master ~]# kubectl apply -f emptyDir.yaml

[root@k8s-master ~]# kubectl logs my-pod -c read

[root@k8s-master ~]# kubectl logs my-pod -c write

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-pod 1/2 CrashLoopBackOff 4 12m 10.244.0.199 k8s-node2 <none> <none>

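//Check on node2. The write container exits once its 100-iteration loop finishes and is then restarted, which is why the Pod shows 1/2 and CrashLoopBackOff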

[root@k8s-node2 data]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a25621c72944 centos "bash -c 'for i in {…" 10 seconds ago Up 10 seconds k8s_write_my-pod_default_c7e0bb20-2a7a-4863-a128-915d62dcc56e_5

//The Pod UID is c7e0bb20-2a7a-4863-a128-915d62dcc56e (taken from the container name); use it to locate the volume data on the host

[root@k8s-node2 data]# ls

hello

[root@k8s-node2 data]# pwd

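/var/lib/kubelet/pods/c7e0bb20-2a7a-4863-a128-915d62dcc56e/volumes/kubernetes.io~empty-dir/data

11.2 hostPath

https://kubernetes.io/zh/docs/concepts/storage/volumes/#hostpath

Mounts a file or directory from the Node's filesystem into the containers of a Pod.

Use case: containers in a Pod need access to files on the host.

emptyDir == volume (similar to a Docker volume)

hostPath == bind mount (similar to a Docker bind mount); typically used to expose host paths such as /proc to log-collection and monitoring agents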

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: busybox
    image: busybox
    args:
    - /bin/sh
    - -c
    - sleep 36000
    volumeMounts:            # mount the volume into the container
    - name: data             # name of the volume to mount
      mountPath: /data       # mount point inside the container
  volumes:                   # volume sources
  - name: data               # volume name
    hostPath:                # volume type: hostPath
      path: /tmp             # host directory exposed to the container
      type: Directory        # the path must be an existing directory

Verification: the contents of /data inside the Pod match /tmp on the node where the Pod is running.

Example:

[root@k8s-master ~]# vim hostpath.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: busybox
    image: busybox
    args:
    - /bin/sh
    - -c
    - sleep 36000
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    hostPath:
      path: /tmp
      type: Directory

[root@k8s-master ~]# kubectl apply -f hostpath.yaml

pod/my-pod created

[root@k8s-master ~]# kubectl exec -it my-pod sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

/ # ls /data/

a.txt

k8s

systemd-private-7127d5a57d1a41af850a0bb25658b596-bolt.service-KGlreQ

systemd-private-7127d5a57d1a41af850a0bb25658b596-chronyd.service-DDWWhp

systemd-private-7127d5a57d1a41af850a0bb25658b596-colord.service-6UxhsE

systemd-private-7127d5a57d1a41af850a0bb25658b596-cups.service-fOkfOB

systemd-private-7127d5a57d1a41af850a0bb25658b596-rtkit-daemon.service-JrKyBL

vmware-root_8491-1713771788

/ # exit

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-pod 1/1 Running 0 79s 10.244.1.37 k8s-node1 <none> <none>

//Check on node1

[root@k8s-node1 ~]# cd /tmp

[root@k8s-node1 tmp]# ls

a.txt

k8s

systemd-private-7127d5a57d1a41af850a0bb25658b596-bolt.service-KGlreQ

systemd-private-7127d5a57d1a41af850a0bb25658b596-chronyd.service-DDWWhp

systemd-private-7127d5a57d1a41af850a0bb25658b596-colord.service-6UxhsE

systemd-private-7127d5a57d1a41af850a0bb25658b596-cups.service-fOkfOB

systemd-private-7127d5a57d1a41af850a0bb25658b596-rtkit-daemon.service-JrKyBL

vmware-root_8491-1713771788

//The container mounts the host's /tmp directory, so the contents are identical

//Create a test file in the container to verify persistence

[root@k8s-master ~]# kubectl exec -it my-pod sh

/ # cd /data

/data # echo 123 > test.txt

/data # ls

a.txt

k8s

systemd-private-7127d5a57d1a41af850a0bb25658b596-bolt.service-KGlreQ

systemd-private-7127d5a57d1a41af850a0bb25658b596-chronyd.service-DDWWhp

systemd-private-7127d5a57d1a41af850a0bb25658b596-colord.service-6UxhsE

systemd-private-7127d5a57d1a41af850a0bb25658b596-cups.service-fOkfOB

systemd-private-7127d5a57d1a41af850a0bb25658b596-rtkit-daemon.service-JrKyBL

test.txt

vmware-root_8491-1713771788

//Check /tmp on the node

[root@k8s-node1 tmp]# ls

a.txt

k8s

systemd-private-7127d5a57d1a41af850a0bb25658b596-bolt.service-KGlreQ

systemd-private-7127d5a57d1a41af850a0bb25658b596-chronyd.service-DDWWhp

systemd-private-7127d5a57d1a41af850a0bb25658b596-colord.service-6UxhsE

systemd-private-7127d5a57d1a41af850a0bb25658b596-cups.service-fOkfOB

systemd-private-7127d5a57d1a41af850a0bb25658b596-rtkit-daemon.service-JrKyBL

test.txt

vmware-root_8491-1713771788

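//Data created in the container is saved in /tmp on the node

emptyDir and hostPath are both node-local, so they cannot share data across nodes. Network storage, covered next, solves that problem.

The Pod is the Kubernetes scheduling unit: all containers of a Pod are placed on the same node and are never spread across nodes.

11.3 NFS Network Storage

apiVersion: apps/v1                    # API version (apps/v1beta1 was removed in Kubernetes 1.16)
kind: Deployment                       # resource kind
metadata:                              # metadata
  name: nginx-deployment               # Deployment name
spec:                                  # spec
  replicas: 3                          # three replicas
  selector:                            # required by apps/v1; must match the template labels
    matchLabels:
      app: nginx
  template:                            # Pod template
    metadata:
      labels:
        app: nginx
    spec:                              # Pod spec
      containers:
      - name: nginx
        image: nginx
        volumeMounts:                  # volume mounts
        - name: wwwroot                # name of the volume to mount
          mountPath: /usr/share/nginx/html   # mount point in the container
        ports:                         # container ports
        - containerPort: 80
      volumes:                         # volume sources
      - name: wwwroot                  # volume name
        nfs:                           # volume type
          server: 192.168.0.200        # NFS server address
          path: /data/nfs              # exported directory

Example:

//Use 192.168.100.200 as the NFS storage node

//Install the NFS utilities on the master node and on the NFS node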

yum install nfs-utils -y

//On the NFS storage node

[root@localhost ~]# vim /etc/exports

/ifs/kubernetes *(rw,no_root_squash)   # read-write, root is not squashed

[root@localhost ~]# mkdir -p /ifs/kubernetes

[root@localhost ~]# systemctl start nfs

[root@localhost ~]# systemctl enable nfs

//On the master node

[root@k8s-master ~]# systemctl start nfs

[root@k8s-master ~]# systemctl enable nfs

[root@k8s-master ~]# kubectl create deploy web --image=nginx --dry-run -o yaml > nfs.yaml   # generate a YAML template

[root@k8s-master ~]# vim nfs.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
      volumes:
      - name: data
        nfs:
          server: 192.168.100.200
          path: /ifs/kubernetes

[root@k8s-master ~]# df -Th

192.168.100.200:/ifs/kubernetes nfs4 190G 6.1G 184G 4% /mnt

[root@k8s-master ~]# kubectl apply -f nfs.yaml

deployment.apps/web created

//Create an index page on the NFS server

[root@localhost ~]# cd /ifs/kubernetes/

[root@localhost kubernetes]# echo "123" >index.html

[root@localhost kubernetes]# ls

index.html

//Check the Pods

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-pod 1/1 Running 0 3h17m 10.244.1.37 k8s-node1 <none> <none>

web-947cfd45d-jl2pk 1/1 Running 0 10m 10.244.1.38 k8s-node1 <none> <none>

web-947cfd45d-vq5n6 1/1 Running 0 11m 10.244.0.200 k8s-node2 <none> <none>

web-947cfd45d-wfpbl 1/1 Running 0 10m 10.244.0.201 k8s-node2 <none> <none>

//Enter the Pod running on node1

[root@k8s-master ~]# kubectl exec -it web-947cfd45d-jl2pk bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

root@web-947cfd45d-jl2pk:/# cd /usr/share/nginx/html/

root@web-947cfd45d-jl2pk:/usr/share/nginx/html# ls

index.html

//The test page is visible, so the data is stored on NFS

//Check the Pod running on node2

[root@k8s-master ~]# kubectl exec -it web-947cfd45d-vq5n6 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

root@web-947cfd45d-vq5n6:/# cd /usr/share/nginx/html

root@web-947cfd45d-vq5n6:/usr/share/nginx/html# ls

index.html

root@web-947cfd45d-vq5n6:/usr/share/nginx/html# cat index.html

123

//Scale up the replicas

[root@k8s-master ~]# kubectl scale deploy web --replicas=4

deployment.apps/web scaled

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-pod 1/1 Running 0 3h21m 10.244.1.37 k8s-node1 <none> <none>

web-947cfd45d-2gk2z 1/1 Running 0 4s 10.244.1.39 k8s-node1 <none> <none>

web-947cfd45d-jl2pk 1/1 Running 0 14m 10.244.1.38 k8s-node1 <none> <none>

web-947cfd45d-vq5n6 1/1 Running 0 15m 10.244.0.200 k8s-node2 <none> <none>

web-947cfd45d-wfpbl 1/1 Running 0 14m 10.244.0.201 k8s-node2 <none> <none>

//Check the newly created replica

[root@k8s-master ~]# kubectl exec -it web-947cfd45d-2gk2z bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

root@web-947cfd45d-2gk2z:/# cat /usr/share/nginx/html/index.html

123

//This experiment shows that an NFS volume persists data and that Pods on every node see the same content
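The manual check above can be scripted. The following is a minimal sketch, not part of the original walkthrough: the helper names `run` and `check_replicas` are hypothetical, and by default the script only prints the `kubectl exec` commands it would run, so it can be tried without a cluster.

```shell
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to execute against a real cluster.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Read the shared index page from every Pod name passed as an argument.
# If the NFS volume works, every replica returns the same content.
check_replicas() {
  local pod
  for pod in "$@"; do
    run kubectl exec "$pod" -- cat /usr/share/nginx/html/index.html
  done
}

# Pod names taken from the listing above.
check_replicas web-947cfd45d-jl2pk web-947cfd45d-vq5n6
```

Running it with `DRY_RUN=0` executes the commands instead of printing them.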

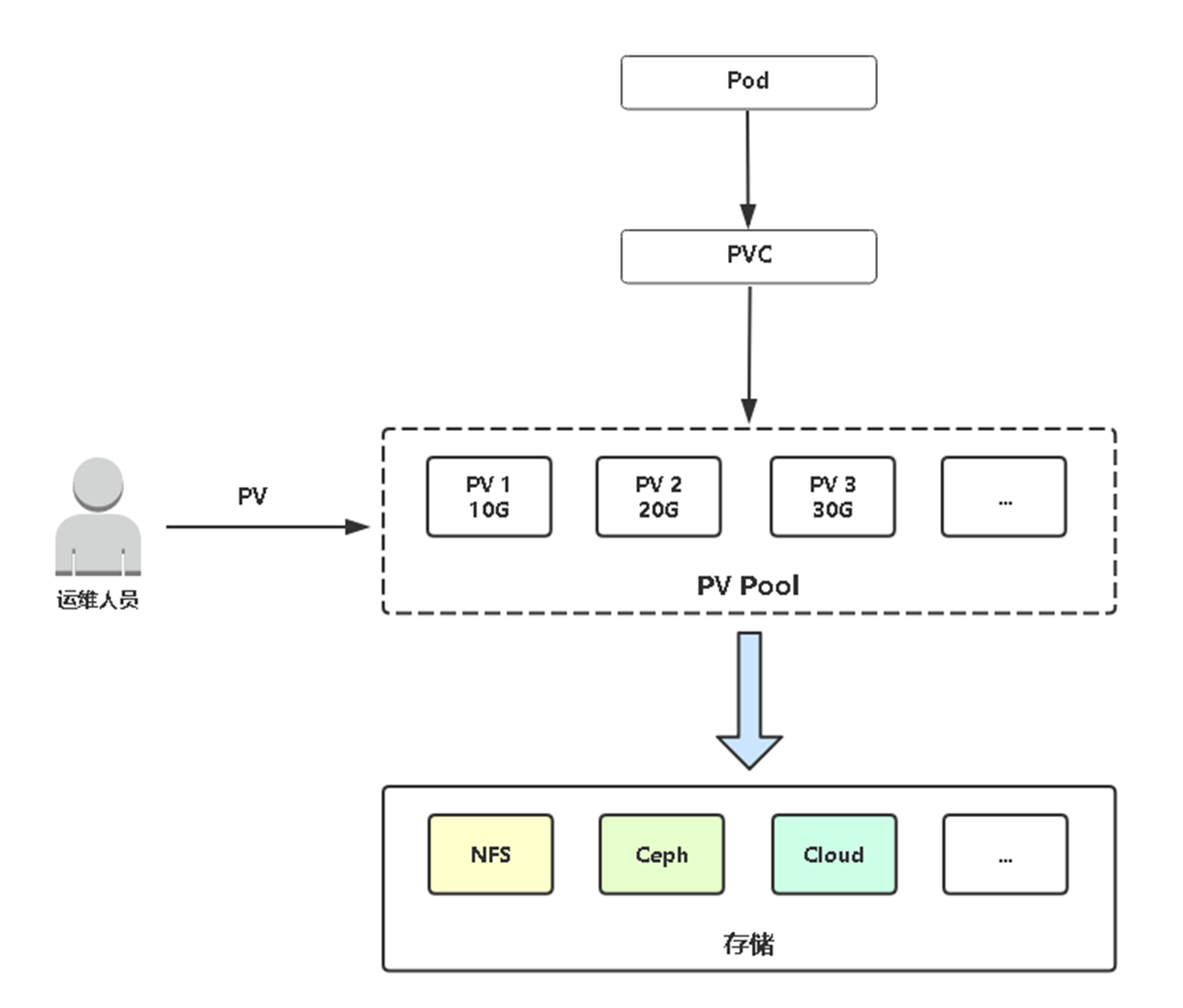

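11.4 PV & PVC

**PersistentVolume (PV):** an abstraction over how storage is created and consumed, so that storage is managed as a cluster resource.

The volume provider (like manufacturing a disk).

PV provisioning comes in two forms:

Static

Dynamic

**PersistentVolumeClaim (PVC):** lets users consume storage without caring about the underlying Volume implementation.

The volume consumer (like buying a disk to use).

//A container using a volume

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
        volumeMounts:                          # volume mounts for the container
        - name: data
          mountPath: /usr/share/nginx/html     # mount point in the container
        ports:
        - containerPort: 80
      volumes:                                 # volumes for the Pod
      - name: data
        persistentVolumeClaim:                 # the user's storage claim
          claimName: my-pvc                    # must match the name of the PVC below

//Unlike the inline NFS volume, no volume source details are written here

//PVC claim template

apiVersion: v1
kind: PersistentVolumeClaim      # resource kind: PVC
metadata:
  name: my-pvc                   # must match claimName above
spec:
  accessModes:                   # requested access mode
  - ReadWriteMany                # read-write, mountable by many nodes
  resources:                     # resource request
    requests:                    # requested capacity
      storage: 5Gi               # 5 GiB

Suppose a claim requests 6G while only 5G and 10G PVs exist: the closest PV that is large enough (10G) is chosen, so the sizes do not have to be equal.

Capacities need not match exactly (PV != PVC); the closest suitable PV is selected. A PVC that requests 5G may also end up using more than 5G, because whether usage is actually capped depends on whether the backend storage enforces a limit.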
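The "closest suitable PV" rule can be sketched in a few lines of shell. This is a simplified model written for illustration, assuming that only capacity matters; real binding also considers access modes, storage class, and selectors. The function name `pick_pv` is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified model of static binding: among the candidate PV sizes (in Gi),
# pick the smallest one that is at least as large as the request.
# Usage: pick_pv <requested-Gi> <pv-size-Gi>...
pick_pv() {
  local request="$1"
  shift
  local best=""
  local size
  for size in "$@"; do
    if [ "$size" -ge "$request" ]; then
      if [ -z "$best" ] || [ "$size" -lt "$best" ]; then
        best="$size"
      fi
    fi
  done
  if [ -z "$best" ]; then
    echo "Pending"     # no PV is large enough, so the claim stays unbound
  else
    echo "${best}Gi"
  fi
}

pick_pv 6 5 10      # prints 10Gi
pick_pv 5 5 10 10   # prints 5Gi
pick_pv 20 5 10     # prints Pending
```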

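Expansion: Kubernetes has supported volume expansion since v1.11 (at the Kubernetes level); whether it works in practice depends on the backend storage.

Ceph is currently one of the most commonly used storage backends.

//PV definition

apiVersion: v1
kind: PersistentVolume           # resource kind: PV
metadata:
  name: pv00001
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /ifs/kubernetes/pv00001
    server: 192.168.100.200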

Example:

//Create the PVs: one 5Gi volume and two 10Gi volumes

[root@k8s-master ~]# vim pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv00001
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /ifs/kubernetes/pv00001
    server: 192.168.100.200
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv00002
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /ifs/kubernetes/pv00002
    server: 192.168.100.200
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv00003
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /ifs/kubernetes/pv00003
    server: 192.168.100.200

//Create the backing directories on the NFS server

[root@localhost kubernetes]# mkdir pv00001

[root@localhost kubernetes]# mkdir pv00002

[root@localhost kubernetes]# mkdir pv00003

[root@localhost kubernetes]# ls

index.html pv00001 pv00002 pv00003

//Create the PVs from the master

[root@k8s-master ~]# kubectl apply -f pv.yaml

persistentvolume/pv00001 created

persistentvolume/pv00002 created

persistentvolume/pv00003 created

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv00001 5Gi RWX Retain Available 2m

pv00002 10Gi RWX Retain Available 2m

pv00003 10Gi RWX Retain Available 2m

//Available means the PV is free and has not been claimed yet

//Consume a PV through a PVC

[root@k8s-master ~]# vim pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

[root@k8s-master ~]# kubectl apply -f pvc.yaml

deployment.apps/web configured

persistentvolumeclaim/my-pvc created

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv00001 5Gi RWX Retain Bound default/my-pvc 10m

pv00002 10Gi RWX Retain Available 10m

pv00003 10Gi RWX Retain Available 10m

//pv00001 is now Bound: a PV and a PVC are bound one to one

[root@k8s-master ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

my-pvc Bound pv00001 5Gi RWX 66s

//The PVC my-pvc is bound to pv00001 with access mode RWX (ReadWriteMany)

//Create some data to check that it lands on NFS

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pod 1/1 Running 0 4h42m

web-6c95dbff5f-jqkz6 1/1 Running 0 9m49s

web-6c95dbff5f-kjqjv 1/1 Running 0 9m44s

web-6c95dbff5f-xqc8k 1/1 Running 0 9m55s

[root@k8s-master ~]# kubectl get ep

NAME ENDPOINTS AGE

kubernetes 192.168.100.110:6443 3d9h

tomweb <none> 33h

web 10.244.0.202:80,10.244.1.40:80,10.244.1.41:80 25h

web1 <none> 24h

web2 <none> 24h

[root@k8s-master ~]# kubectl exec -it web-6c95dbff5f-jqkz6 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

root@web-6c95dbff5f-jqkz6:/# cd /usr/share/nginx

root@web-6c95dbff5f-jqkz6:/usr/share/nginx# ls

html

root@web-6c95dbff5f-jqkz6:/usr/share/nginx# cd html

root@web-6c95dbff5f-jqkz6:/usr/share/nginx/html# ls

root@web-6c95dbff5f-jqkz6:/usr/share/nginx/html# echo "hello" > index.html

root@web-6c95dbff5f-jqkz6:/usr/share/nginx/html#

//Check the NFS storage

[root@localhost kubernetes]# ls

index.html pv00001 pv00002 pv00003

[root@localhost kubernetes]# ls pv00001

index.html

[root@localhost kubernetes]# ls pv00002

[root@localhost kubernetes]# ls pv00003

[root@localhost kubernetes]# cat pv00001/index.html

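hello

Kubernetes only handles mounting the backend storage. Disaster recovery and failure recovery of the backend storage itself are outside its scope.

11.5 Static PV Provisioning

Static provisioning means creating a number of PVs in advance for later use. Section 11.4 was an example of static provisioning.

First prepare an NFS server for testing.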

# yum install nfs-utils

# vi /etc/exports

/ifs/kubernetes *(rw,no_root_squash)

# mkdir -p /ifs/kubernetes

# systemctl start nfs

# systemctl enable nfs

The nfs-utils package must also be installed on every Node, because the node needs it to mount the share.

Example: prepare three PVs of 5G, 10G, and 30G by changing the values below and creating each one in turn.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv001                    # change the PV name
spec:
  capacity:
    storage: 5Gi                 # change the size
  accessModes:
  - ReadWriteMany
  nfs:
    path: /opt/nfs/pv001         # change the directory name
    server: 192.168.31.62

Create a Pod that uses a PV:

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
    volumeMounts:
    - name: www
      mountPath: /usr/share/nginx/html
  volumes:
  - name: www
    persistentVolumeClaim:
      claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

Create the resources and check the PV and PVC status:

# kubectl apply -f pod-pv.yaml

# kubectl get pv,pvc

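The PVC binds to the 5G PV.

Then enter the container and create a test file under /usr/share/nginx/html (the PV mount directory):

kubectl exec -it my-pod -- bash

cd /usr/share/nginx/html

echo "123" > index.html

Switch to the NFS server and you will find the file just created in the container, which shows that everything works.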

cd /opt/nfs/pv001

ls

index.html

If you create a 16G PVC, which PV do you think it will bind to?

https://kubernetes.io/docs/concepts/storage/persistent-volumes/

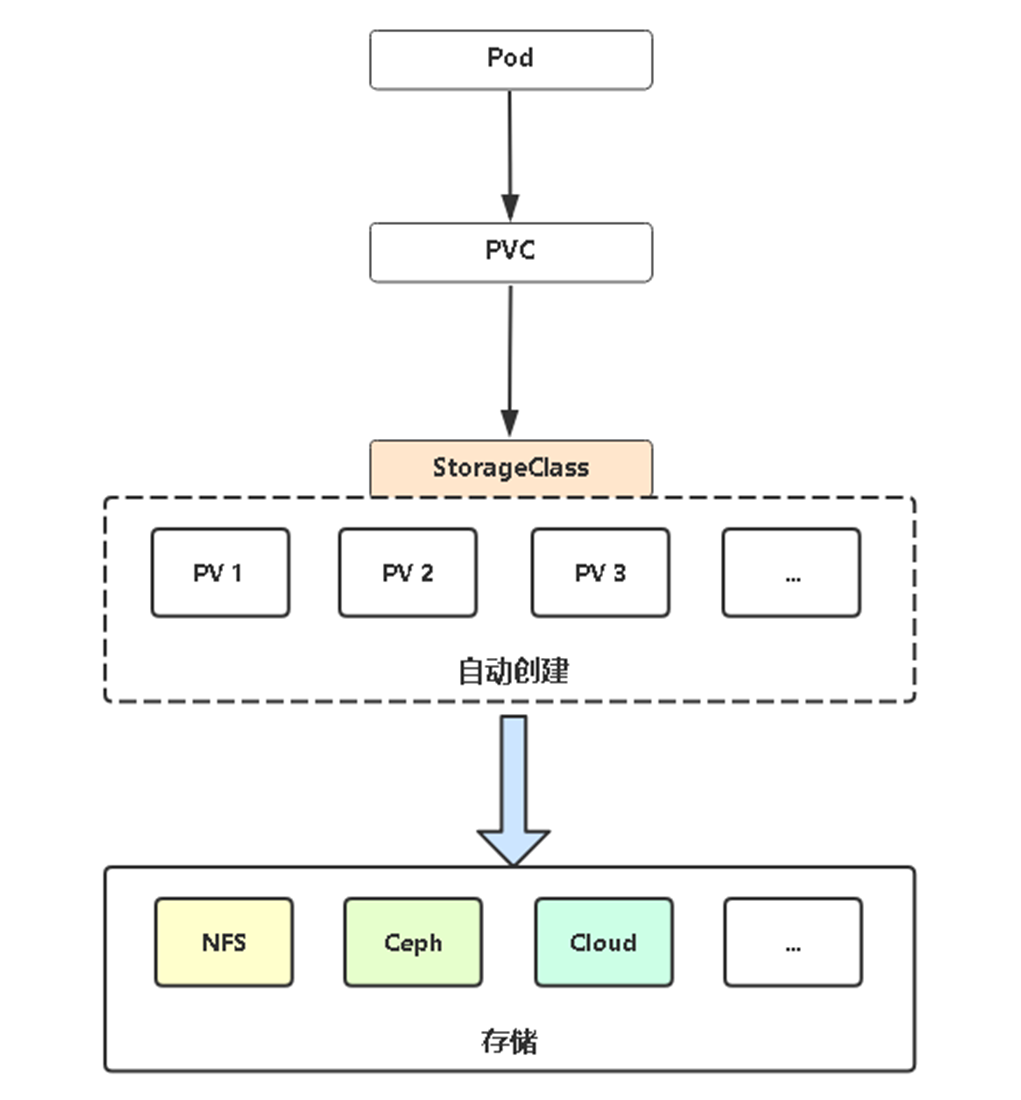

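11.6 Dynamic PV Provisioning

The core of the Dynamic Provisioning mechanism is the StorageClass API object.

A StorageClass declares the storage plugin (provisioner) used to create PVs automatically.

Storage plugins for which Kubernetes supports dynamic provisioning:

https://kubernetes.io/docs/concepts/storage/storage-classes/

11.7 Dynamic PV Provisioning in Practice (NFS)

Workflow

Because Kubernetes has no built-in dynamic provisioner for NFS, the nfs-client-provisioner plugin shown in the figure above must be installed first:

# cd nfs-client

# vi deployment.yaml # change the NFS address and shared directory to your own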

# kubectl apply -f .

# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-df88f57df-bv8h7 1/1 Running 0 49m

Test:

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
    volumeMounts:
    - name: www
      mountPath: /usr/share/nginx/html
  volumes:
  - name: www
    persistentVolumeClaim:
      claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  storageClassName: "managed-nfs-storage"   # name of the StorageClass used for automatic provisioning
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

This time a 5G PV is created automatically and bound to the PVC.

kubectl get pv,pvc

Test as before: enter the container and create a file under /usr/share/nginx/html (the PV mount directory).

Then switch to the NFS server. You will find the directory below, which was created automatically to back the PV, and inside it the file just created in the container.

# ls /opt/nfs/

default-my-pvc-pvc-51cce4ed-f62d-437d-8c72-160027cba5ba
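The directory name in the listing above follows the pattern namespace, PVC name, PV name, joined by hyphens. The sketch below reproduces that name; the pattern is inferred from the listing, and the helper `nfs_pv_dir` is a hypothetical name used only for illustration.

```shell
#!/usr/bin/env bash
# Build the directory name the provisioner creates on the NFS share,
# assuming the pattern <namespace>-<pvc-name>-<pv-name> seen in the listing.
nfs_pv_dir() {
  local namespace="$1"
  local pvc="$2"
  local pv="$3"
  echo "${namespace}-${pvc}-${pv}"
}

# Values taken from the example above.
nfs_pv_dir default my-pvc pvc-51cce4ed-f62d-437d-8c72-160027cba5ba
```

Knowing the pattern makes it easy to find the data of a given claim on the NFS server.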

Example:

#class.yaml: StorageClass used to create PVs automatically

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage      # must match the storageClassName given in the PVC
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"       # "false": delete the data with the PVC; "true": keep an archived copy

//provisioner: fuseim.pri/ifs must match the PROVISIONER_NAME value (fuseim.pri/ifs) in the Deployment

#deployment.yaml: the NFS client provisioner

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: quay.io/external_storage/nfs-client-provisioner:latest
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: fuseim.pri/ifs            # must match the provisioner in class.yaml
        - name: NFS_SERVER
          value: 192.168.100.200
        - name: NFS_PATH
          value: /ifs/kubernetes
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.100.200
          path: /ifs/kubernetes

//If the image quay.io/external_storage/nfs-client-provisioner:latest cannot be pulled, use lizhenliang/nfs-client-provisioner:latest instead
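Since a mismatch between the two provisioner names leaves PVCs stuck in Pending, it can help to compare them before applying. The following is a rough sketch under stated assumptions: the function names are hypothetical, the values are unquoted, and in deployment.yaml the `value:` line directly follows the `- name: PROVISIONER_NAME` line, as in the manifests above. It is a text match with awk, not a real YAML parser.

```shell
#!/usr/bin/env bash
# Extract the provisioner declared in a StorageClass manifest.
class_provisioner() {
  awk '$1 == "provisioner:" { print $2; exit }' "$1"
}

# Extract the value on the line after "- name: PROVISIONER_NAME".
# Assumes "value:" comes directly after the "name:" line.
deployment_provisioner() {
  awk '/name: PROVISIONER_NAME/ { getline; print $2; exit }' "$1"
}

# Succeeds (exit status 0) when both files name the same provisioner.
provisioner_matches() {
  [ "$(class_provisioner "$1")" = "$(deployment_provisioner "$2")" ]
}

# Usage, with the file names from this section:
#   provisioner_matches class.yaml deployment.yaml && echo "provisioner names match"
```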

#rbac.yaml: authorization

kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

//Hands-on

[root@k8s-master nfs-client]# ls

class.yaml deployment.yaml rbac.yaml

[root@k8s-master nfs-client]# kubectl apply -f .

storageclass.storage.k8s.io/managed-nfs-storage created

serviceaccount/nfs-client-provisioner created

deployment.apps/nfs-client-provisioner created

serviceaccount/nfs-client-provisioner unchanged

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

//Check the services

[root@k8s-master nfs-client]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-5f84d68bb5-kblp8 1/1 Running 0 35s

//The client provisioner is running

[root@k8s-master nfs-client]# kubectl get storageClass   # short name: sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage fuseim.pri/ifs Delete Immediate false 2m19s

//managed-nfs-storage is ready

//Create a PVC and check whether a PV is created automatically

[root@k8s-master ~]# cp pvc.yaml pvc-auto.yaml

//Contents of pvc-auto.yaml after editing:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web2                               # use a different Deployment name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: my-pvc2               # use a different claim name
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc2                            # same as claimName above
spec:
  storageClassName: managed-nfs-storage    # StorageClass that creates the PV; must match the name in class.yaml
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv00001 5Gi RWX Retain Bound default/my-pvc 112m

pv00002 10Gi RWX Retain Available 112m

pv00003 10Gi RWX Retain Available 112m

[root@k8s-master ~]# kubectl apply -f pvc-auto.yaml

deployment.apps/web2 created

persistentvolumeclaim/my-pvc2 created

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv00001 5Gi RWX Retain Bound default/my-pvc 114m

pv00002 10Gi RWX Retain Available 114m

pv00003 10Gi RWX Retain Available 114m

pvc-e3e2195c-4ee1-4cc2-ac54-ed96e89b5fb3 5Gi RWX Delete Bound default/my-pvc2 managed-nfs-storage 6s

//pvc-e3e2195c-4ee1-4cc2-ac54-ed96e89b5fb3 is the automatically created PV

[root@k8s-master ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

my-pvc Bound pv00001 5Gi RWX 104m

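my-pvc2 Bound pvc-e3e2195c-4ee1-4cc2-ac54-ed96e89b5fb3 5Gi RWX managed-nfs-storage 16s

//my-pvc2 is bound to the automatically created PV, with storage class managed-nfs-storage

Chapter 12: Stateful Application Deployment Revisited

Preface

Question 1: With an Nginx image, if Node1 fails and a new Pod is started automatically on Node2, can the service continue?

Yes.

Question 2: With a MySQL image, if Node1 fails and a new Pod is started automatically on Node2, can the service continue?

No, unless the data in /var/lib/mysql has been persisted.

Question 3: With MySQL master-slave replication, the two Pods are scheduled to Node1 and Node2. If Node1 fails and a new Pod is started automatically on Node2, can the service continue?

No.

Stateful applications: in Kubernetes this usually means distributed applications such as MySQL master-slave, ZooKeeper clusters, and etcd clusters. They need:

1. Data persistence

2. A stable IP address or name (a fixed DNS name for each Pod), because the instances have roles in a topology

3. A startup order (with MySQL master-slave, the master must start first)

Stateless applications: nginx, APIs, microservice JARs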

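1. StatefulSet Controller Overview

StatefulSet:

Deploys stateful applications

Gives each Pod an independent lifecycle and preserves Pod startup order and uniqueness

Stable, unique network identifiers and persistent storage

Ordered, graceful deployment, scaling, deletion, and termination

Ordered rolling updates

Use case: databases

- Difference between StatefulSet and Deployment: the Pods have an identity!

The three elements of identity:

Domain name

Hostname

Storage (PVC)

2. Stable Network ID

Any discussion of StatefulSet's stable network identifiers has to start with the Headless Service.

Headless Service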

Standard Service:

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376

Headless Service:

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  clusterIP: None            # set clusterIP to None
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376

[root@k8s-master ~]# kubectl expose deployment web --port=80 --target-port=80 --dry-run -o yaml > service.yaml

[root@k8s-master ~]# cat service.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: web
status:
  loadBalancer: {}

[root@k8s-master ~]# cp service.yaml service-headless.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web3
spec:
  clusterIP: None
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: web
status:
  loadBalancer: {}

[root@k8s-master ~]# kubectl apply -f service.yaml

service/web configured

[root@k8s-master ~]# kubectl apply -f service-headless.yaml

service/web3 created

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 3d13h

web ClusterIP 10.0.0.116 <none> 80/TCP 30h

web3 ClusterIP None <none> 80/TCP 22m

[root@k8s-master ~]# vim headless.yaml

apiVersion: v1
kind: Service
metadata:
  name: etcd
spec:
  clusterIP: None
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "etcd"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        resources: {}

[root@k8s-master ~]# kubectl apply -f headless.yaml

service/etcd created

statefulset.apps/web created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pod 1/1 Running 0 9h

nfs-client-provisioner-5f84d68bb5-kblp8 1/1 Running 0 3h44m

web-0 1/1 Running 0 28s

web-1 0/1 ContainerCreating 0 11s

//The three replicas are created one at a time, in order

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pod 1/1 Running 0 9h

nfs-client-provisioner-5f84d68bb5-kblp8 1/1 Running 0 3h45m

web-0 1/1 Running 0 69s

web-1 1/1 Running 0 52s

web-2 1/1 Running 0 35s

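The difference between a standard Service and a headless Service is clusterIP: None, which tells Kubernetes not to allocate a Cluster IP for the Service because it does not need one.

Why does a standard Service need one?

This comes down to how stateless and stateful controllers are designed. Pods of a stateless application are fully interchangeable: they provide the same service and can float to any node, as with a web server. Distributed applications such as ZooKeeper clusters, etcd clusters, and MySQL master-slave are different: every instance maintains its own state and its own data, and the instances need fixed addresses to reach each other (to form the cluster). That is a stateful application. A stateful application therefore cannot simply sit behind a standard Service whose ClusterIP load-balances across a group of Pods. This is also why a headless Service does not need a ClusterIP: what it provides is a fixed "identity" for each Pod.

For example:

apiVersion: v1
kind: Service
metadata:
  name: headless-svc
spec:
  clusterIP: None
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "headless-svc"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: web

Compared with the manifests covered earlier, this one adds the field serviceName: "headless-svc", which tells the StatefulSet controller to use the headless Service headless-svc to guarantee the identity of its Pods.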

# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pod 1/1 Running 0 7h50m

nfs-client-provisioner-df88f57df-bv8h7 1/1 Running 0 7h54m

web-0 1/1 Running 0 6h55m

web-1 1/1 Running 0 6h55m

web-2 1/1 Running 0 6h55m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/headless-svc ClusterIP None <none> 80/TCP 7h15m

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 8d

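Create a temporary Pod to test DNS resolution (in the output below the headless Service is named nginx; substitute the name of your own Service, for example headless-svc):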

# kubectl run -i --tty --image busybox:1.28.4 dns-test --restart=Never --rm /bin/sh

If you don't see a command prompt, try pressing enter.

/ # nslookup nginx.default.svc.cluster.local

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: nginx.default.svc.cluster.local

Address 1: 172.17.26.3 web-1.nginx.default.svc.cluster.local

Address 2: 172.17.26.4 web-2.nginx.default.svc.cluster.local

Address 3: 172.17.83.3 web-0.nginx.default.svc.cluster.local

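The result lists the IP addresses of all Pods behind the Headless Service, together with each Pod's DNS A record.

Resolving a Pod's DNS name, such as web-0.nginx, returns the IP address of that Pod, and the same holds for the other Pods. This DNS name is the Pod's fixed identity and does not change during its lifetime: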

/ # nslookup web-0.nginx.default.svc.cluster.local

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: web-0.nginx.default.svc.cluster.local

Address 1: 172.17.83.3 web-0.nginx.default.svc.cluster.local

Check the hostnames inside the containers:

[root@k8s-master01 ~]# kubectl exec web-0 hostname

web-0

[root@k8s-master01 ~]# kubectl exec web-1 hostname

web-1

[root@k8s-master01 ~]# kubectl exec web-2 hostname

web-2

As shown, each Pod's hostname is derived from the StatefulSet name and the Pod's ordinal.
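Because the hostname is always the StatefulSet name followed by a hyphen and the ordinal, a startup script can recover both parts with plain parameter expansion. A minimal sketch follows; the function names are hypothetical, and inside a real container the argument would typically be the output of `hostname`.

```shell
#!/usr/bin/env bash
# Ordinal: everything after the last hyphen.
pod_ordinal() {
  echo "${1##*-}"
}

# StatefulSet name: everything before the last hyphen.
pod_set_name() {
  echo "${1%-*}"
}

pod_ordinal web-2     # prints 2
pod_set_name web-2    # prints web
```

A common use is to let ordinal 0 act as the primary and all other ordinals as replicas.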

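However, as you have probably noticed, although the record web-0.nginx itself never changes, the Pod IP address it resolves to is not fixed. This means that instances of a stateful application must be reached through their DNS records or hostnames, never directly through Pod IP addresses.

Below are some examples of how the Cluster Domain, Service name, and StatefulSet name affect the DNS names of the StatefulSet's Pods.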

| Cluster Domain | Service (ns/name) | StatefulSet (ns/name) | StatefulSet Domain | Pod DNS | Pod Hostname |
|---|---|---|---|---|---|
| cluster.local | default/nginx | default/web | nginx.default.svc.cluster.local | web-{0..N-1}.nginx.default.svc.cluster.local | web-{0..N-1} |
| cluster.local | foo/nginx | foo/web | nginx.foo.svc.cluster.local | web-{0..N-1}.nginx.foo.svc.cluster.local | web-{0..N-1} |
| kube.local | foo/nginx | foo/web | nginx.foo.svc.kube.local | web-{0..N-1}.nginx.foo.svc.kube.local | web-{0..N-1} |
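The naming rule in the table can be expressed as a small function. This is an illustrative sketch with a hypothetical function name; the namespace and cluster domain default to `default` and `cluster.local`, matching the first row.

```shell
#!/usr/bin/env bash
# Print the DNS name of every Pod in a StatefulSet.
# Usage: statefulset_pod_dns <set-name> <replicas> <service> [namespace] [cluster-domain]
statefulset_pod_dns() {
  local set_name="$1"
  local replicas="$2"
  local service="$3"
  local namespace="${4:-default}"
  local domain="${5:-cluster.local}"
  local i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${set_name}-${i}.${service}.${namespace}.svc.${domain}"
    i=$((i + 1))
  done
}

# First row of the table with three replicas.
statefulset_pod_dns web 3 nginx
```

Peers in a cluster configuration file can be listed this way without knowing any Pod IP.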

3. Stable Storage

A StatefulSet's storage volumes are created from volumeClaimTemplates (claim templates). When a StatefulSet uses a volumeClaimTemplate to provision a PersistentVolume, it also creates a numbered PVC for each Pod.

Example:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "headless-svc"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "managed-nfs-storage"
      resources:
        requests:
          storage: 1Gi

# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv001 5Gi RWX Retain Released default/my-pvc 8h

persistentvolume/pv002 10Gi RWX Retain Available 8h

persistentvolume/pv003 30Gi RWX Retain Available 8h

persistentvolume/pvc-2c5070ff-bcd1-4703-a8dd-ac9b601bf59d 1Gi RWO Delete Bound default/www-web-0 managed-nfs-storage 6h58m

persistentvolume/pvc-46fd1715-181a-4041-9e93-fa73d99a1b48 1Gi RWO Delete Bound default/www-web-2 managed-nfs-storage 6h58m

persistentvolume/pvc-c82ae40f-07c5-45d7-a62b-b129a6a011ae 1Gi RWO Delete Bound default/www-web-1 managed-nfs-storage 6h58m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/www-web-0 Bound pvc-2c5070ff-bcd1-4703-a8dd-ac9b601bf59d 1Gi RWO managed-nfs-storage 6h58m

persistentvolumeclaim/www-web-1 Bound pvc-c82ae40f-07c5-45d7-a62b-b129a6a011ae 1Gi RWO managed-nfs-storage 6h58m

persistentvolumeclaim/www-web-2 Bound pvc-46fd1715-181a-4041-9e93-fa73d99a1b48 1Gi RWO managed-nfs-storage 6h58m

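As the output shows, the StatefulSet assigns each Pod its own numbered PVC, and each PVC is bound to its own PV, so every Pod gets an independent Volume. Stateless replicas share one volume, whereas a StatefulSet creates a separate volume for each Pod.

In this setup, deleting the Pods or the StatefulSet does not delete the corresponding PVCs and PVs. When a Pod is recreated, Kubernetes finds the PVC with the same ordinal and mounts the Volume bound to it, so the Pod gets back the data previously stored in that Volume.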
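The PVC names in the output above follow the pattern claim template name, StatefulSet name, ordinal. The sketch below generates those names; the pattern is read off the listing, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print the PVC name that belongs to each Pod of a StatefulSet,
# following the pattern <template>-<statefulset>-<ordinal>.
# Usage: statefulset_pvc_names <template-name> <set-name> <replicas>
statefulset_pvc_names() {
  local template="$1"
  local set_name="$2"
  local replicas="$3"
  local i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${template}-${set_name}-${i}"
    i=$((i + 1))
  done
}

# Matches the claims listed above: www-web-0, www-web-1, www-web-2.
statefulset_pvc_names www web 3
```

Because the names are deterministic, a recreated web-1 always finds www-web-1 again.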

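Summary

Difference between StatefulSet and Deployment: the Pods have an identity!

The three elements of identity:

Domain name (fixed network ID)

Hostname (same as the Pod name)

Storage (PVC)

An etcd cluster has been prepared here so you can try a stateful deployment yourself: https://github.com/lizhenliang/k8s-statefulset/tree/master/etcd

Chapter 13: Kubernetes Security Framework and User Permission Assignment

1. The Kubernetes Security Framework

Access to K8S cluster resources has to pass three gates: authentication, authorization, and admission control.

To access the cluster API Server securely, a regular user typically needs a certificate, a token, or a username and password; a Pod needs a ServiceAccount.

The K8S security framework controls access in the following three stages. Each stage supports plugins, which are enabled through the API Server configuration.

A request for API resources must pass all three gates:

Authentication (verifies identity, like an employee badge)

Authorization (decides which rooms you may enter)

Admission Control (controls which requests are let in)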

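2. Transport Security, Authentication, Authorization, Admission Control

Transport security:

Goodbye 8080, hello 6443

All communication is over HTTPS

Authentication: three ways to authenticate a client:

HTTPS certificate authentication: digital certificates signed by the CA

HTTP Token authentication: the user is identified by a token

HTTP Basic authentication: username and password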
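Token authentication over HTTPS on port 6443 can be sketched with curl. This is only an illustration: the helper names are hypothetical, the token is a placeholder you must supply, and the CA path and server address are the ones used later in this chapter. By default the script prints the command (with shell quoting dropped) instead of contacting a server.

```shell
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to execute it for real.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# List Pods in a namespace, authenticating with a bearer token.
# Usage: list_pods_with_token <api-server-url> <token> <namespace>
list_pods_with_token() {
  local server="$1"
  local token="$2"
  local namespace="$3"
  run curl --cacert /etc/kubernetes/pki/ca.crt \
    -H "Authorization: Bearer ${token}" \
    "${server}/api/v1/namespaces/${namespace}/pods"
}

# MY_TOKEN is a placeholder, not a real credential.
list_pods_with_token https://192.168.31.61:6443 MY_TOKEN default
```

The request still has to pass authorization and admission control after the token is accepted.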

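Authorization:

RBAC (Role-Based Access Control) carries out the authorization step.

It allows or denies a request based on the attributes of the API request.

Admission control:

Admission Control is in effect a list of admission controller plugins. Every request sent to the API Server is checked by each plugin in the list; if any check fails, the request is rejected.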

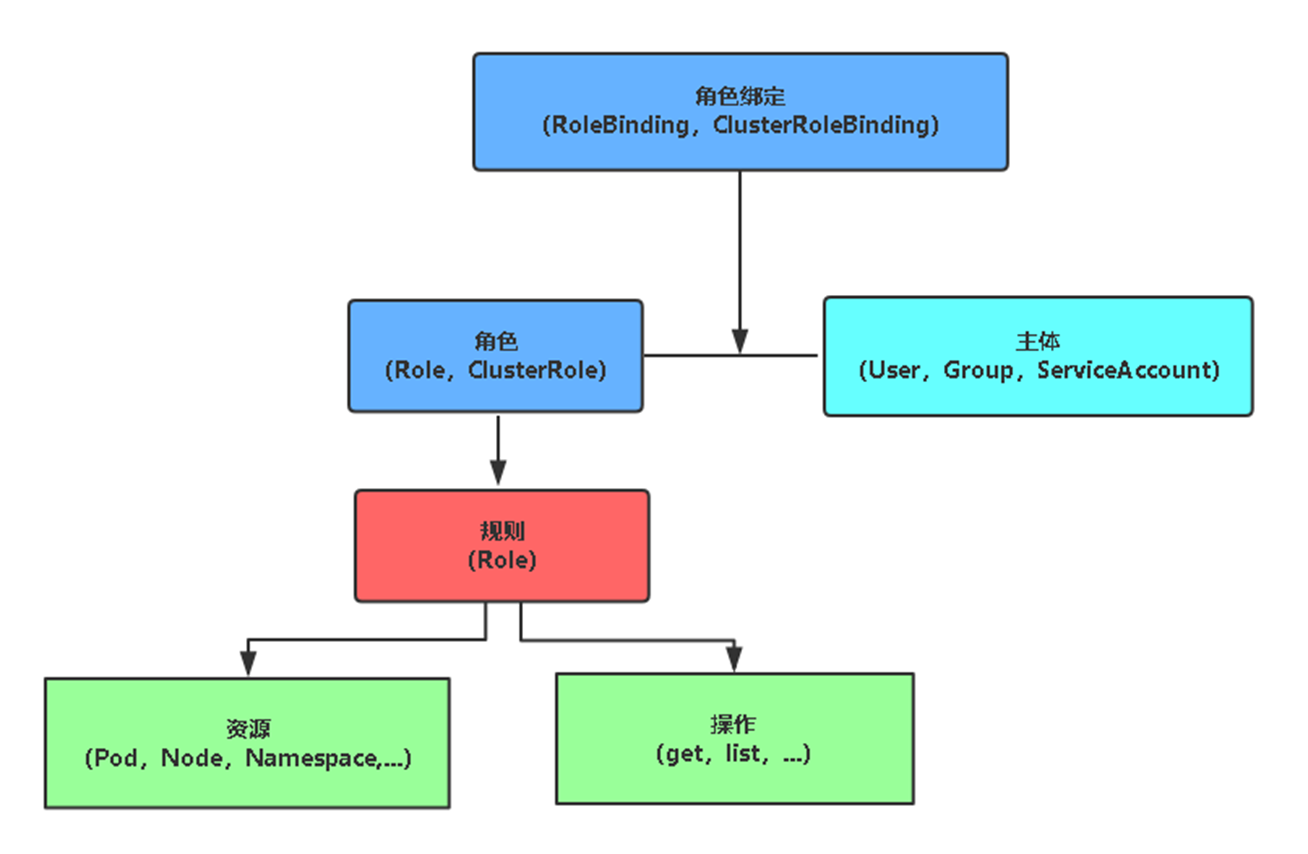

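3. Authorizing with RBAC

RBAC (Role-Based Access Control) allows policies to be configured dynamically through the Kubernetes API.

Roles

Role: grants access within a specific namespace

ClusterRole: grants access across all namespaces

Role bindings

RoleBinding: binds a role to a subject

ClusterRoleBinding: binds a cluster role to a subject

Subjects

User: a user

Group: a user group

ServiceAccount: a service account

Pod resource limits: requests and limits

Namespace limits: ResourceQuota

Default Pod resource limits: LimitRanger

RBAC authorization steps:

1. Create a role (rules)

2. Create a subject (user)

3. Bind the role to the subject

Example: grant user aliang read access to Pods in the default namespace

1. Issue a client certificate with the K8S CA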

cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "87600h"
      }
    }
  }
}
EOF

cat > aliang-csr.json <<EOF
{
  "CN": "aliang",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=/etc/kubernetes/pki/ca.crt -ca-key=/etc/kubernetes/pki/ca.key -config=ca-config.json -profile=kubernetes aliang-csr.json | cfssljson -bare aliang
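With certificate authentication, Kubernetes takes the user name from the certificate's CN field and the group from O, so the CN in aliang-csr.json is the name the RoleBinding must refer to. The sketch below reads those fields back from the CSR file; `csr_field` is a hypothetical helper that uses a simple sed text match, not a JSON parser, and it assumes each key appears once.

```shell
#!/usr/bin/env bash
# Print the string value of a top-level style key such as "CN" or "O".
# Usage: csr_field <key> <csr-json-file>
csr_field() {
  # Matches "<key>": "<value>" and prints <value>; only the first match is kept.
  sed -n 's/.*"'"$1"'": *"\([^"]*\)".*/\1/p' "$2" | head -n 1
}

# Usage, with the file generated above:
#   csr_field CN aliang-csr.json    # user name seen by Kubernetes
#   csr_field O aliang-csr.json     # group seen by Kubernetes
```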

2. Generate the kubeconfig authorization file

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.crt \
  --embed-certs=true \
  --server=https://192.168.31.61:6443 \
  --kubeconfig=aliang.kubeconfig

# Set the client credentials
kubectl config set-credentials aliang \
  --client-key=aliang-key.pem \
  --client-certificate=aliang.pem \
  --embed-certs=true \
  --kubeconfig=aliang.kubeconfig

# Set the default context
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=aliang \
  --kubeconfig=aliang.kubeconfig

# Select the context to use
kubectl config use-context kubernetes --kubeconfig=aliang.kubeconfig
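It can help to see what the four commands add up to. The sketch below writes a file with the general layout of a kubeconfig that has embedded certificates. The file name `aliang.kubeconfig.example` and the `*_PLACEHOLDER` values are assumptions; in a real file those fields hold base64-encoded PEM data.

```shell
# Layout sketch only. The *_PLACEHOLDER values stand in for base64-encoded
# certificate and key data, so this file is not usable as a real kubeconfig.
cat > aliang.kubeconfig.example <<'EOF'
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: https://192.168.31.61:6443
    certificate-authority-data: CA_CERT_PLACEHOLDER
users:
- name: aliang
  user:
    client-certificate-data: CLIENT_CERT_PLACEHOLDER
    client-key-data: CLIENT_KEY_PLACEHOLDER
contexts:
- name: kubernetes
  context:
    cluster: kubernetes
    user: aliang
current-context: kubernetes
EOF
```

`set-cluster` fills the `clusters` entry, `set-credentials` fills `users`, `set-context` ties the two together under `contexts`, and `use-context` sets `current-context`.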

3. Create the RBAC policy

Create the role (a set of permissions):

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "watch", "list"]

Bind the user aliang to the role:

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: aliang
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

Test:

# kubectl --kubeconfig=aliang.kubeconfig get pods
NAME                                     READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-df88f57df-bv8h7   1/1     Running   0          8h
web-0                                    1/1     Running   0          7h25m
web-1                                    1/1     Running   0          7h25m
web-2                                    1/1     Running   0          7h25m

# kubectl --kubeconfig=aliang.kubeconfig get pods -n kube-system
Error from server (Forbidden): pods is forbidden: User "aliang" cannot list resource "pods" in API group "" in the namespace "kube-system"

The user aliang has read access only to Pods in the default namespace.
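The same Role can also be granted to a Pod's identity instead of a human user. As a minimal sketch, the manifest below creates a ServiceAccount and binds it to the `pod-reader` Role from step 3. The names `pod-viewer` and `read-pods-sa` and the file name are hypothetical. A ServiceAccount subject is identified by name and namespace and takes no `apiGroup`.

```shell
# Hypothetical names: ServiceAccount pod-viewer, RoleBinding read-pods-sa.
# The Role pod-reader is the one defined in step 3 of the example.
cat > sa-binding.example.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-viewer
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods-sa
  namespace: default
subjects:
- kind: ServiceAccount
  name: pod-viewer
  namespace: default
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
EOF
```

After applying the file, an administrator could check the result with `kubectl auth can-i list pods --as=system:serviceaccount:default:pod-viewer -n default`.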

Hands-on walkthrough:

// 1. Issue a client certificate for the user

[root@k8s-master ~]# mkdir rbc && cd rbc

// Upload the scripts

[root@k8s-master rbc]# ls
cert.sh  config.sh  rbac.yaml  sa.yaml

[root@k8s-master rbc]# vim cert.sh
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

cat > aliang-csr.json <<EOF
{
  "CN": "aliang",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=ca-config.json -profile=kubernetes aliang-csr.json | cfssljson -bare aliang

Note: -ca= specifies the K8S cluster's CA certificate and -ca-key= specifies the cluster's CA key file; take care to point both at the right files.

[root@k8s-master rbc]# bash cert.sh

2020/10/10 09:11:00 [INFO] generate received request
2020/10/10 09:11:00 [INFO] received CSR
2020/10/10 09:11:00 [INFO] generating key: rsa-2048
2020/10/10 09:11:01 [INFO] encoded CSR
2020/10/10 09:11:01 [INFO] signed certificate with serial number 212646194532273494530388964704516456568081736504
2020/10/10 09:11:01 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master rbc]# ls
aliang.csr       aliang-key.pem  ca-config.json  config.sh  sa.yaml
aliang-csr.json  aliang.pem      cert.sh         rbac.yaml

// 2. Generate the kubeconfig file for the user

[root@k8s-master rbc]# vim config.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.100.110:6443 \
  --kubeconfig=aliang.kubeconfig

# Set the client credentials
kubectl config set-credentials aliang \
  --client-key=aliang-key.pem \
  --client-certificate=aliang.pem \
  --embed-certs=true \
  --kubeconfig=aliang.kubeconfig

# Set the default context
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=aliang \
  --kubeconfig=aliang.kubeconfig

# Select the context to use
kubectl config use-context kubernetes --kubeconfig=aliang.kubeconfig

Note: --server=https://192.168.100.110:6443 specifies the cluster API address, and --certificate-authority=/opt/kubernetes/ssl/ca.pem specifies the K8S cluster's CA certificate.

[root@k8s-master rbc]# bash config.sh

Cluster "kubernetes" set.
User "aliang" set.
Context "kubernetes" created.
Switched to context "kubernetes".

[root@k8s-master rbc]# ls
aliang.csr       aliang-key.pem     aliang.pem      cert.sh    rbac.yaml
aliang-csr.json  aliang.kubeconfig  ca-config.json  config.sh  sa.yaml

// 3. Create the role and bind it to the user

[root@k8s-master rbc]# vim rbac.yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:   # the subject (here a user) that receives the role
- kind: User
  name: aliang
  apiGroup: rbac.authorization.k8s.io
roleRef:    # the role to bind; must match the Role name above
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

Notes on the rules:
# apiGroups: [""]                  API group of the resource; "" is the core group, which Pods belong to
# resources: ["pods"]              the Pod resource
# verbs: ["get", "watch", "list"]  read-only operations

[root@k8s-master rbc]# kubectl apply -f rbac.yaml
role.rbac.authorization.k8s.io/pod-reader created
rolebinding.rbac.authorization.k8s.io/read-pods created

// 4. Test access as the user

[root@k8s-master rbc]# kubectl --kubeconfig=aliang.kubeconfig get pods
NAME     READY   STATUS    RESTARTS   AGE
etcd-0   1/1     Running   0          2d10h
etcd-1   1/1     Running   0          2d10h
etcd-2   1/1     Running   0          2d10h
my-pod   1/1     Running   2          2d19h

[root@k8s-master rbc]# kubectl --kubeconfig=aliang.kubeconfig get deploy
Error from server (Forbidden): deployments.apps is forbidden: User "aliang" cannot list resource "deployments" in API group "apps" in the namespace "default"

The user can view only Pod resources, not Deployments.

To change what the user may do, edit the rules in rbac.yaml and apply the file again.

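The Forbidden message names the resource `deployments` in API group `apps`, which suggests one way to extend the role: add a second rule for that group. The following is a sketch of such a change, written to a separate, hypothetically named file `rbac-deploy.example.yaml` so the original rbac.yaml stays untouched.

```shell
# Sketch: the pod-reader Role with one extra rule for Deployments.
# The file name is hypothetical; the Role name matches the one used above.
cat > rbac-deploy.example.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
- apiGroups: ["apps"]
  resources: ["deployments"]
  verbs: ["get", "watch", "list"]
EOF
```

Once the file is applied with `kubectl apply -f rbac-deploy.example.yaml`, the existing RoleBinding picks up the new rule without changes, and `kubectl --kubeconfig=aliang.kubeconfig get deploy` should no longer be rejected.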