Importing RKE into Rancher


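I. Setting Up the RKE Cluster

1.1 Overview

RKE (Rancher Kubernetes Engine) is a tool for quickly and easily building a Kubernetes cluster.

Most of the work is in preparing the environment. If the environment is configured correctly, everything else goes smoothly.

1.2 Environment Preparation

First, the versions used in this guide: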

| No. | Name | Version | Notes |
|---|---|---|---|
| 1 | ubuntu | 16.04.1 | |
| 2 | docker | 17.03.2-ce | |
| 3 | rke | 0.1.1 | |
| 4 | k8s | 1.8.7 | |
| 5 | rancher | 2.0.0-alpha16 | |

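Next, prepare the environment. You need two machines: one that hosts the RKE cluster, and one that acts as the control machine running the rke tool. Apply the same environment preparation to the control machine as well. Otherwise the installation can fail for no obvious reason.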

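1. The editor that ships with a fresh Ubuntu install is awkward to use, so remove it and install vim.

# Not logged in as root yet, so prefix commands with sudo to get root privileges

# First remove the old vi editor:

sudo apt-get remove vim-common

# Then install vim:

sudo apt-get install vim

2. By default, Ubuntu does not allow root to log in. To avoid unnecessary trouble, change the configuration to enable root login. All remaining steps are then performed as root.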

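# 1. Change "PermitRootLogin prohibit-password" to "PermitRootLogin yes"

# 2. Uncomment "AuthorizedKeysFile %h/.ssh/authorized_keys"

sudo vi /etc/ssh/sshd_config

# 3. Switch to root

sudo su

# 4. Set the root password

sudo passwd root

# 5. Unlock the root account (passwd -u unlocks the account; it does not change the password)

sudo passwd -u root

# 6. Restart ssh

sudo service ssh restart

When this is done, open a new client session and log in as root.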
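The two sshd_config edits above can also be made non-interactively. The following is a minimal sketch, assuming GNU sed (the default on Ubuntu). `enable_root_ssh` is a hypothetical helper name. The file path is a parameter, so you can try it on a copy of the file first.

```bash
#!/usr/bin/env bash
# Sketch: apply edits 1 and 2 to sshd_config with sed instead of vi.
# Assumes GNU sed; enable_root_ssh is a hypothetical helper name.
enable_root_ssh() {
  local cfg="${1:-/etc/ssh/sshd_config}"
  # Edit 1: a PermitRootLogin line (commented or not) becomes "PermitRootLogin yes"
  sed -i -E 's/^#?[[:space:]]*PermitRootLogin[[:space:]].*/PermitRootLogin yes/' "$cfg"
  # Edit 2: remove the leading "#" from the AuthorizedKeysFile line
  sed -i -E 's/^#[[:space:]]*(AuthorizedKeysFile[[:space:]])/\1/' "$cfg"
}
```

Usage, as root: back up the file first (cp /etc/ssh/sshd_config /etc/ssh/sshd_config.bak), then run enable_root_ssh /etc/ssh/sshd_config and service ssh restart.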

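3. Change the hostname. It must not contain upper-case letters, and preferably no special characters either. Reboot the machine after changing it.

vi /etc/hostname

4. Make sure the hosts file contains the following line: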

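127.0.0.1 localhost

Ubuntu also adds an entry to the hosts file that maps the hostname to a loopback address (127.0.1.1 by default). Because the hostname was changed in step 3, update that entry as well, and point it at the machine's real IP instead: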

172.16.10.99 worker02

5. Enable cgroup memory and swap accounting. Edit /etc/default/grub and modify (or add) these two entries:

GRUB_CMDLINE_LINUX_DEFAULT="cgroup_enable=memory swapaccount=1"

GRUB_CMDLINE_LINUX="cgroup_enable=memory swapaccount=1"

Then run:

update-grub

When it finishes, reboot the machine again.
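Step 5 can be scripted as well. This is a sketch, assuming GNU sed. `set_cgroup_grub` is a hypothetical helper name. The helper replaces each GRUB_CMDLINE line with exactly the value shown above, and appends the line if it is missing. It does not merge with existing values, so parameters such as "quiet splash" are dropped.

```bash
#!/usr/bin/env bash
# Sketch: set both GRUB kernel-parameter lines from step 5.
# set_cgroup_grub is a hypothetical helper name; assumes GNU sed.
set_cgroup_grub() {
  local cfg="${1:-/etc/default/grub}"
  local params='cgroup_enable=memory swapaccount=1'
  local key
  for key in GRUB_CMDLINE_LINUX_DEFAULT GRUB_CMDLINE_LINUX; do
    if grep -q "^${key}=" "$cfg"; then
      # Replace the whole existing line (any previous parameters are dropped)
      sed -i "s/^${key}=.*/${key}=\"${params}\"/" "$cfg"
    else
      # Key not present: append it
      printf '%s="%s"\n' "$key" "$params" >> "$cfg"
    fi
  done
}
```

Usage, as root: run set_cgroup_grub /etc/default/grub, then update-grub, then reboot.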

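6. Disable swap permanently by editing /etc/fstab and commenting out the swap entry:

# swap was on /dev/sda6 during installation

#UUID=5e4b0d14-ad10-4d24-8f7c-4a07c4eb4d29 none swap sw 0 0

7. Check the remaining items: the firewall (no rules by default), SELinux (not enabled by default) and IPv4 forwarding (enabled by default). Also set up one-way passwordless SSH login from the RKE control machine to the RKE cluster machines.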
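Several of the checks in steps 3 to 7 lend themselves to a quick preflight script. The sketch below is only an illustration. `preflight` is a hypothetical helper, and every file path is a parameter defaulting to the real system file, so you can test it against copies. It prints one FAIL line per problem and returns non-zero if anything failed. It does not cover the firewall or SELinux.

```bash
#!/usr/bin/env bash
# Sketch of a preflight check for steps 3, 4, 6 and 7 (hypothetical helper).
preflight() {
  local host="${1:-$(cat /etc/hostname)}"
  local hosts_file="${2:-/etc/hosts}"
  local fstab="${3:-/etc/fstab}"
  local ipfwd_file="${4:-/proc/sys/net/ipv4/ip_forward}"
  local rc=0

  # Step 3: the hostname must not contain upper-case letters
  if [[ "$host" =~ [[:upper:]] ]]; then
    echo "FAIL: hostname '$host' contains upper-case letters"; rc=1
  fi
  # Step 4: a "127.0.0.1 localhost" line must exist
  if ! grep -Eq '^127\.0\.0\.1[[:space:]]+localhost([[:space:]]|$)' "$hosts_file"; then
    echo "FAIL: no '127.0.0.1 localhost' line in $hosts_file"; rc=1
  fi
  # Step 4: the hostname must map to a non-loopback IP (comment lines ignored)
  if ! awk -v h="$host" '$1 !~ /^#/ && $1 !~ /^127\./ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }' "$hosts_file"; then
    echo "FAIL: $host is not mapped to a real IP in $hosts_file"; rc=1
  fi
  # Step 6: no uncommented swap entry may remain in fstab
  if awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"; then
    echo "FAIL: active swap entry in $fstab"; rc=1
  fi
  # Step 7: IPv4 forwarding must be enabled
  if [ "$(cat "$ipfwd_file")" != "1" ]; then
    echo "FAIL: net.ipv4.ip_forward is not 1"; rc=1
  fi
  return "$rc"
}
```

Commenting out the fstab entry only takes effect at the next boot. To turn swap off immediately, run swapoff -a.

For passwordless SSH, one common approach is to run ssh-keygen -t rsa on the control machine, then ssh-copy-id root@172.16.10.99 for each cluster node.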

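8. Download and install Docker 17.03.2-ce (https://docs.docker.com/install/linux/docker-ce/ubuntu/#upgrade-docker-ce). It is best to also configure a registry mirror, otherwise pulling images will be very slow. Finally, add root to the docker group.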
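The registry mirror (the "accelerator" mentioned in step 8) is normally set through the registry-mirrors key in Docker's daemon.json. This is a minimal sketch. `write_docker_mirror` is a hypothetical helper, and the mirror URL you pass in is whatever your provider gives you. Note that it overwrites daemon.json completely.

```bash
#!/usr/bin/env bash
# Sketch: write a daemon.json containing one registry mirror.
# write_docker_mirror is a hypothetical helper; the mirror URL is your own.
write_docker_mirror() {
  local mirror="$1"
  local daemon_json="${2:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$daemon_json")"
  # Overwrites the file; merge by hand if it already holds other settings
  printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror" > "$daemon_json"
}
```

Usage, as root:

1. Run write_docker_mirror "https://your-mirror-address".
2. Run systemctl restart docker.
3. Run usermod -aG docker root.

Afterwards, docker info should list the mirror under Registry Mirrors.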

1.3 Install RKE

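The RKE repository is at https://github.com/rancher/rke. Go to its Releases page and download the rke binary. Nothing else is needed after the download except making the file executable:

chmod +x rke

# Verify the installation

./rke -version

1.4 Deploy the RKE Cluster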

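1. Reboot the machine. (Why reboot? I don't know. The installation occasionally fails and a reboot fixes it, so reboot up front to make sure it succeeds.)

2. Create a cluster.yml file with content similar to the following: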

nodes:
  - address: 172.16.10.99
    user: root
    role: [controlplane,worker,etcd]

services:
  etcd:
    image: quay.io/coreos/etcd:latest
  kube-api:
    image: rancher/k8s:v1.8.3-rancher2
  kube-controller:
    image: rancher/k8s:v1.8.3-rancher2
  scheduler:
    image: rancher/k8s:v1.8.3-rancher2
  kubelet:
    image: rancher/k8s:v1.8.3-rancher2
  kubeproxy:
    image: rancher/k8s:v1.8.3-rancher2

Pay attention to the etcd image and do not replace it with any other etcd image. Doing so causes a 403 error at startup. Images from quay.io download slowly, so you can pull them in advance, but never substitute a different image.
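To pull the images in advance, you can read them out of cluster.yml and run docker pull on each one. This is a sketch with hypothetical helper names (`list_images`, `prepull_images`). It assumes the file keeps one `image: <name>` entry per line, as in the layout above. It is not a general YAML parser.

```bash
#!/usr/bin/env bash
# Sketch: pre-pull every image referenced in an RKE cluster.yml.
# Hypothetical helpers; assumes one "image: <name>" entry per line.
list_images() {
  local cfg="${1:-cluster.yml}"
  # Print the value after "image:", de-duplicated in first-seen order
  awk '$1 == "image:" { print $2 }' "$cfg" | awk '!seen[$0]++'
}

prepull_images() {
  local img
  for img in $(list_images "$1"); do
    docker pull "$img"
  done
}
```

Usage on a cluster node: prepull_images cluster.yml. Because the images are pulled under their original names, this does not conflict with the rule above against substituting the etcd image.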

3. Run:

./rke up --config cluster.yml

The output looks like this (this particular run used a file named cluster-99.yml):

root@master:/opt/rke# ./rke up --config cluster-99.yml

INFO[0000] Building Kubernetes cluster

INFO[0000] [dialer] Setup tunnel for host [172.16.10.99]

INFO[0000] [network] Deploying port listener containers

INFO[0001] [network] Successfully started [rke-etcd-port-listener] container on host [172.16.10.99]

INFO[0001] [network] Successfully started [rke-cp-port-listener] container on host [172.16.10.99]

INFO[0002] [network] Successfully started [rke-worker-port-listener] container on host [172.16.10.99]

INFO[0002] [network] Port listener containers deployed successfully

INFO[0002] [network] Running all -> etcd port checks

INFO[0003] [network] Successfully started [rke-port-checker] container on host [172.16.10.99]

INFO[0004] [network] Successfully started [rke-port-checker] container on host [172.16.10.99]

INFO[0004] [network] Running control plane -> etcd port checks

INFO[0005] [network] Successfully started [rke-port-checker] container on host [172.16.10.99]

INFO[0005] [network] Running workers -> control plane port checks

INFO[0005] [network] Successfully started [rke-port-checker] container on host [172.16.10.99]

INFO[0006] [network] Checking KubeAPI port Control Plane hosts

INFO[0006] [network] Removing port listener containers

INFO[0006] [remove/rke-etcd-port-listener] Successfully removed container on host [172.16.10.99]

INFO[0007] [remove/rke-cp-port-listener] Successfully removed container on host [172.16.10.99]

INFO[0007] [remove/rke-worker-port-listener] Successfully removed container on host [172.16.10.99]

INFO[0007] [network] Port listener containers removed successfully

INFO[0007] [certificates] Attempting to recover certificates from backup on host [172.16.10.99]

INFO[0007] [certificates] No Certificate backup found on host [172.16.10.99]

INFO[0007] [certificates] Generating kubernetes certificates

INFO[0007] [certificates] Generating CA kubernetes certificates

INFO[0008] [certificates] Generating Kubernetes API server certificates

INFO[0008] [certificates] Generating Kube Controller certificates

INFO[0009] [certificates] Generating Kube Scheduler certificates

INFO[0011] [certificates] Generating Kube Proxy certificates

INFO[0011] [certificates] Generating Node certificate

INFO[0012] [certificates] Generating admin certificates and kubeconfig

INFO[0012] [certificates] Generating etcd-172.16.10.99 certificate and key

INFO[0013] [certificates] Temporarily saving certs to etcd host [172.16.10.99]

INFO[0018] [certificates] Saved certs to etcd host [172.16.10.99]

INFO[0018] [reconcile] Reconciling cluster state

INFO[0018] [reconcile] This is newly generated cluster

INFO[0018] [certificates] Deploying kubernetes certificates to Cluster nodes

INFO[0024] Successfully Deployed local admin kubeconfig at [./kube_config_cluster-99.yml]

INFO[0024] [certificates] Successfully deployed kubernetes certificates to Cluster nodes

INFO[0024] Pre-pulling kubernetes images

INFO[0024] Kubernetes images pulled successfully

INFO[0024] [etcd] Building up Etcd Plane..

INFO[0025] [etcd] Successfully started [etcd] container on host [172.16.10.99]

INFO[0025] [etcd] Successfully started Etcd Plane..

INFO[0025] [controlplane] Building up Controller Plane..

INFO[0026] [controlplane] Successfully started [kube-api] container on host [172.16.10.99]

INFO[0026] [healthcheck] Start Healthcheck on service [kube-api] on host [172.16.10.99]

INFO[0036] [healthcheck] service [kube-api] on host [172.16.10.99] is healthy

INFO[0037] [controlplane] Successfully started [kube-controller] container on host [172.16.10.99]

INFO[0037] [healthcheck] Start Healthcheck on service [kube-controller] on host [172.16.10.99]

INFO[0037] [healthcheck] service [kube-controller] on host [172.16.10.99] is healthy

INFO[0038] [controlplane] Successfully started [scheduler] container on host [172.16.10.99]

INFO[0038] [healthcheck] Start Healthcheck on service [scheduler] on host [172.16.10.99]

INFO[0038] [healthcheck] service [scheduler] on host [172.16.10.99] is healthy

INFO[0038] [controlplane] Successfully started Controller Plane..

INFO[0038] [authz] Creating rke-job-deployer ServiceAccount

INFO[0038] [authz] rke-job-deployer ServiceAccount created successfully

INFO[0038] [authz] Creating system:node ClusterRoleBinding

INFO[0038] [authz] system:node ClusterRoleBinding created successfully

INFO[0038] [certificates] Save kubernetes certificates as secrets

INFO[0039] [certificates] Successfully saved certificates as kubernetes secret [k8s-certs]

INFO[0039] [state] Saving cluster state to Kubernetes

INFO[0039] [state] Successfully Saved cluster state to Kubernetes ConfigMap: cluster-state

INFO[0039] [worker] Building up Worker Plane..

INFO[0039] [sidekick] Sidekick container already created on host [172.16.10.99]

INFO[0040] [worker] Successfully started [kubelet] container on host [172.16.10.99]

INFO[0040] [healthcheck] Start Healthcheck on service [kubelet] on host [172.16.10.99]

INFO[0046] [healthcheck] service [kubelet] on host [172.16.10.99] is healthy

INFO[0046] [worker] Successfully started [kube-proxy] container on host [172.16.10.99]

INFO[0046] [healthcheck] Start Healthcheck on service [kube-proxy] on host [172.16.10.99]

INFO[0052] [healthcheck] service [kube-proxy] on host [172.16.10.99] is healthy

INFO[0052] [sidekick] Sidekick container already created on host [172.16.10.99]

INFO[0052] [healthcheck] Start Healthcheck on service [kubelet] on host [172.16.10.99]

INFO[0052] [healthcheck] service [kubelet] on host [172.16.10.99] is healthy

INFO[0052] [healthcheck] Start Healthcheck on service [kube-proxy] on host [172.16.10.99]

INFO[0052] [healthcheck] service [kube-proxy] on host [172.16.10.99] is healthy

INFO[0052] [sidekick] Sidekick container already created on host [172.16.10.99]

INFO[0052] [healthcheck] Start Healthcheck on service [kubelet] on host [172.16.10.99]

INFO[0052] [healthcheck] service [kubelet] on host [172.16.10.99] is healthy

INFO[0052] [healthcheck] Start Healthcheck on service [kube-proxy] on host [172.16.10.99]

INFO[0052] [healthcheck] service [kube-proxy] on host [172.16.10.99] is healthy

INFO[0052] [worker] Successfully started Worker Plane..

INFO[0052] [network] Setting up network plugin: flannel

INFO[0052] [addons] Saving addon ConfigMap to Kubernetes

INFO[0053] [addons] Successfully Saved addon to Kubernetes ConfigMap: rke-network-plugin

INFO[0053] [addons] Executing deploy job..

INFO[0058] [sync] Syncing nodes Labels and Taints

INFO[0058] [sync] Successfully synced nodes Labels and Taints

INFO[0058] [addons] Setting up KubeDNS

INFO[0058] [addons] Saving addon ConfigMap to Kubernetes

INFO[0058] [addons] Successfully Saved addon to Kubernetes ConfigMap: rke-kubedns-addon

INFO[0058] [addons] Executing deploy job..

INFO[0063] [addons] KubeDNS deployed successfully..

INFO[0063] [ingress] Setting up nginx ingress controller

INFO[0063] [addons] Saving addon ConfigMap to Kubernetes

INFO[0063] [addons] Successfully Saved addon to Kubernetes ConfigMap: rke-ingress-controller

INFO[0063] [addons] Executing deploy job..

INFO[0068] [ingress] ingress controller nginx is successfully deployed

INFO[0068] [addons] Setting up user addons..

INFO[0068] [addons] No user addons configured..

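INFO[0068] Finished building Kubernetes cluster successfully

If an error occurs, first run remove (./rke remove --config cluster.yml) and then run up again. If that still fails, run remove, reboot the machine, and then run up. This usually works. If it still fails after all of these steps, look at the specific error message.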
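Before importing into Rancher, it can help to confirm that the cluster answers with the generated kubeconfig. As the log shows, RKE names this file after the cluster file: cluster-99.yml produced ./kube_config_cluster-99.yml. The sketch below assumes the file sits in the same directory as the cluster file, and that kubectl is installed on the control machine. `kubeconfig_for` and `check_cluster` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: derive the RKE-generated kubeconfig path and query the cluster.
# Hypothetical helpers; assumes the kubeconfig sits next to the cluster file.
kubeconfig_for() {
  local cfg="$1"
  printf '%s/kube_config_%s\n' "$(dirname "$cfg")" "$(basename "$cfg")"
}

check_cluster() {
  # Lists the nodes; each should eventually report Ready
  kubectl --kubeconfig "$(kubeconfig_for "$1")" get nodes
}
```

Usage: check_cluster cluster.yml.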

II. Importing RKE into Rancher 2.0 (Preview)

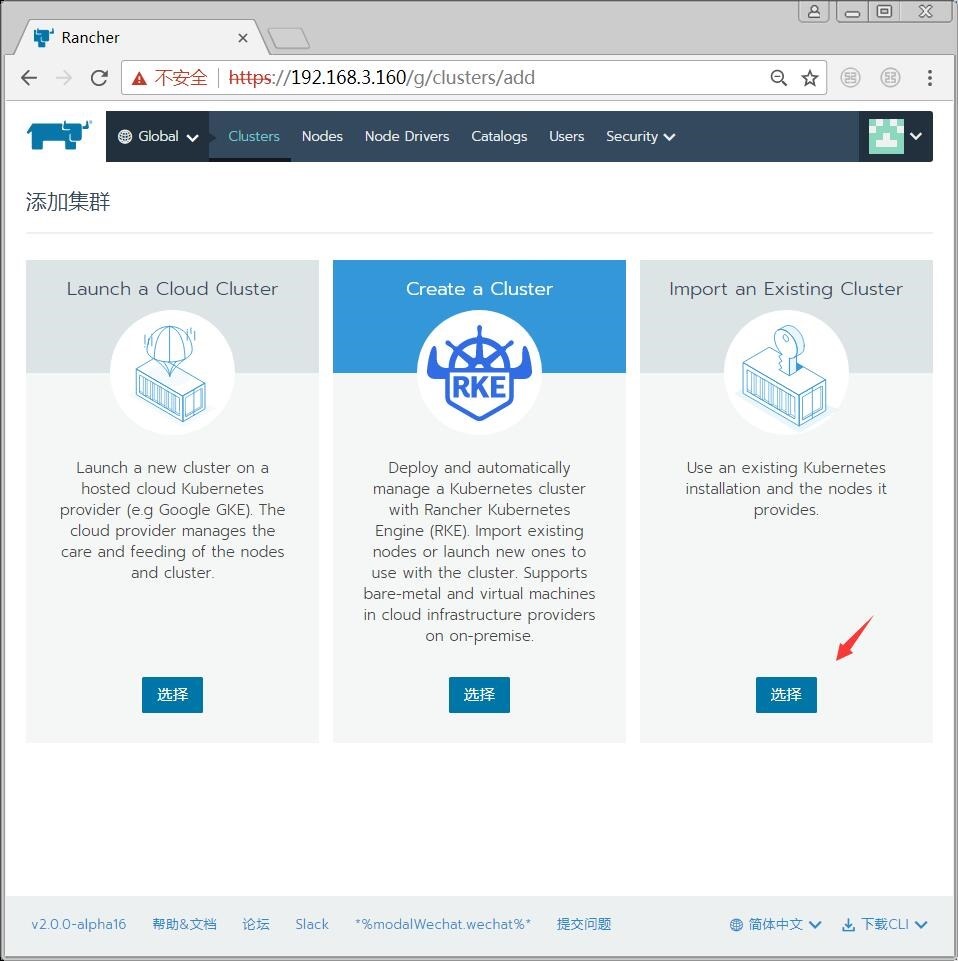

Click Create Cluster. On the far right there is an option to import an existing cluster.

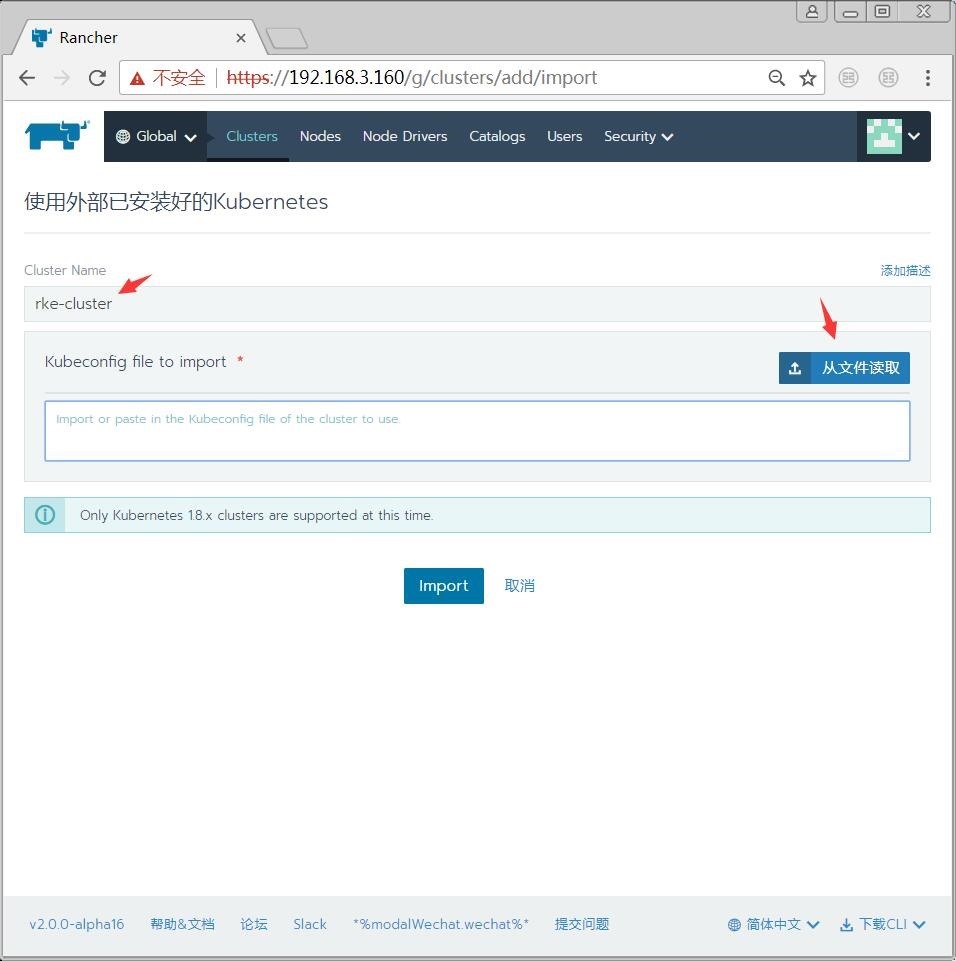

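The directory where you ran the rke command now contains an automatically generated kube_config_cluster.yml file. The name follows the config file name; in the run above it was kube_config_cluster-99.yml. Click Import on the page and import this file.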

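Note: Rancher 2.0 is currently available only as development builds. These builds have many issues and are missing a lot of functionality, so they are suitable for evaluation only, not for production. Reportedly, the 2.0 GA release is due at the end of March.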

III. Appendix

RKE installation notes: http://blog.csdn.net/csdn_duomaomao/article/details/79325759

Importing RKE into Rancher: http://blog.csdn.net/csdn_duomaomao/article/details/79325436

Reposted from: https://my.oschina.net/shyloveliyi/blog/1626397
