卡夫卡详解_卡夫卡概念

卡夫卡详解

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

Apache Kafka是一个开放源代码的分布式事件流平台,成千上万的公司使用它来实现高性能数据管道,流分析,数据集成和关键任务应用程序。

简短的Apache Kafka背景 (A brief Apache Kafka background)

Apache Kafka is written in Scala and Java and is the creation of former LinkedIn data engineers. As early as 2011, the technology was handed over to the open-source community as a highly scalable messaging system. Today, Apache Kafka is part of the Confluent Stream Platform and handles trillions of events every day. Apache Kafka has established itself on the market with many trusted companies waving the Kafka banner.

Apache Kafka用Scala和Java编写,是前LinkedIn数据工程师的创建。 早在2011年,该技术就已作为高度可扩展的消息传递系统移交给开源社区。 今天,Apache Kafka已成为Confluent Stream Platform的一部分,每天处理数万亿个事件。 Apache Kafka已在市场上建立了自己的地位,有许多值得信赖的公司挥舞着Kafka的旗帜。

This article is a beginners guide to Apache Kafka basic architecture, components, concepts etc. Here we will try and understand what is Kafka, what are the use cases of Kafka, what are some basic APIs and components of Kafka ecosystem.

本文是Apache Kafka基本体系结构,组件,概念等的初学者指南 。在这里,我们将尝试理解什么是Kafka,Kafka的用例是什么,Kafka生态系统的一些基本API和组件。

什么是事件流? (What is event streaming?)

Event streaming is the digital equivalent of the human body’s central nervous system. It is the technological foundation for the ‘always-on’ world where businesses are increasingly software-defined and automated, and where the user of software is more software.

事件流是人体中枢神经系统的数字等效形式。 它是“永远在线”世界的技术基础,在这个世界中,业务越来越多地由软件定义和自动化,并且软件的用户越来越多。

Technically speaking, event streaming is the practice of capturing data in real-time from event sources like databases, sensors, mobile devices, cloud services, and software applications in the form of streams of events; storing these event streams durably for later retrieval; manipulating, processing, and reacting to the event streams in real-time as well as retrospectively; and routing the event streams to different destination technologies as needed. Event streaming thus ensures a continuous flow and interpretation of data so that the right information is at the right place, at the right time.

从技术上讲,事件流是一种以事件流的形式从数据库,传感器,移动设备,云服务和软件应用程序等事件源实时捕获数据的实践。 持久存储这些事件流以供以后检索; 实时以及回顾性地处理,处理和响应事件流; 并根据需要将事件流路由到不同的目标技术。 事件流因此确保了数据的连续流和解释,以便正确的信息在正确的时间,正确的位置。

我可以将事件流用于什么? (What can I use event streaming for?)

Event streaming is applied to a wide variety of use cases across a plethora of industries and organizations. Its many examples include:

事件流适用于众多行业和组织的各种用例。 它的许多示例包括:

- To process payments and financial transactions in real-time, such as in stock exchanges, banks, and insurances.实时处理付款和金融交易,例如在证券交易所,银行和保险中。

- To track and monitor cars, trucks, fleets, and shipments in real-time, such as in logistics and the automotive industry.实时跟踪和监视汽车,卡车,车队和货运,例如在物流和汽车行业。

- To continuously capture and analyze sensor data from IoT devices or other equipment, such as in factories and wind parks.连续捕获和分析来自IoT设备或其他设备(例如工厂和风电场)中的传感器数据。

- To collect and immediately react to customer interactions and orders, such as in retail, the hotel and travel industry, and mobile applications.收集并立即响应客户的交互和订单,例如在零售,酒店和旅游行业以及移动应用程序中。

- To monitor patients in hospital care and predict changes in condition to ensure timely treatment in emergencies.监测患者的医院护理情况并预测病情变化,以确保在紧急情况下及时得到治疗。

- To connect, store, and make available data produced by different divisions of a company.连接,存储和提供公司不同部门产生的数据。

- To serve as the foundation for data platforms, event-driven architectures, and microservices.用作数据平台,事件驱动的体系结构和微服务的基础。

Apache Kafka是一个事件流平台。 那是什么意思? (Apache Kafka is an event streaming platform. What does that mean?)

Kafka combines three key capabilities so you can implement your use cases for event streaming end-to-end with a single battle-tested solution:

Kafka结合了三个关键功能,因此您可以使用一个经过战斗验证的解决方案来端到端实施事件流的用例:

To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

发布 (写入)和订阅 (读取)事件流,包括从其他系统连续导入/导出数据。

To store streams of events durably and reliably for as long as you want.

根据需要持久而可靠地存储事件流。

To process streams of events as they occur or retrospectively.

处理事件流的发生或追溯。

And all this functionality is provided in a distributed, highly scalable, elastic, fault-tolerant, and secure manner. Kafka can be deployed on bare-metal hardware, virtual machines, and containers, and on-premises as well as in the cloud. You can choose between self-managing your Kafka environments and using fully managed services offered by a variety of vendors.

并且以分布式,高度可伸缩,弹性,容错和安全的方式提供所有这些功能。 Kafka可以部署在裸机硬件,虚拟机和容器,本地以及云中。 您可以在自我管理Kafka环境与使用各种供应商提供的完全托管服务之间进行选择。

主要概念和术语 (Main Concepts and Terminology)

An event records the fact that “something happened” in the world or in your business. It is also called record or message in the documentation. When you read or write data to Kafka, you do this in the form of events. Conceptually, an event has a key, value, timestamp, and optional metadata headers. Here’s an example event:

事件记录了一个事实,即世界或您的企业中发生了“某些事情”。 在文档中也称为记录或消息。 当您向Kafka读取或写入数据时,您将以事件的形式进行操作。 从概念上讲,事件具有键,值,时间戳和可选的元数据标题。 这是一个示例事件:

- Event key: “Alice”事件键:“ Alice”

- Event value: “Made a payment of $200 to Bob”赛事价值:“向Bob支付了$ 200”

- Event timestamp: “Jun. 25, 2020 at 2:06 p.m.”活动时间戳:“ Jun。 2020年2月25日下午2点06分”

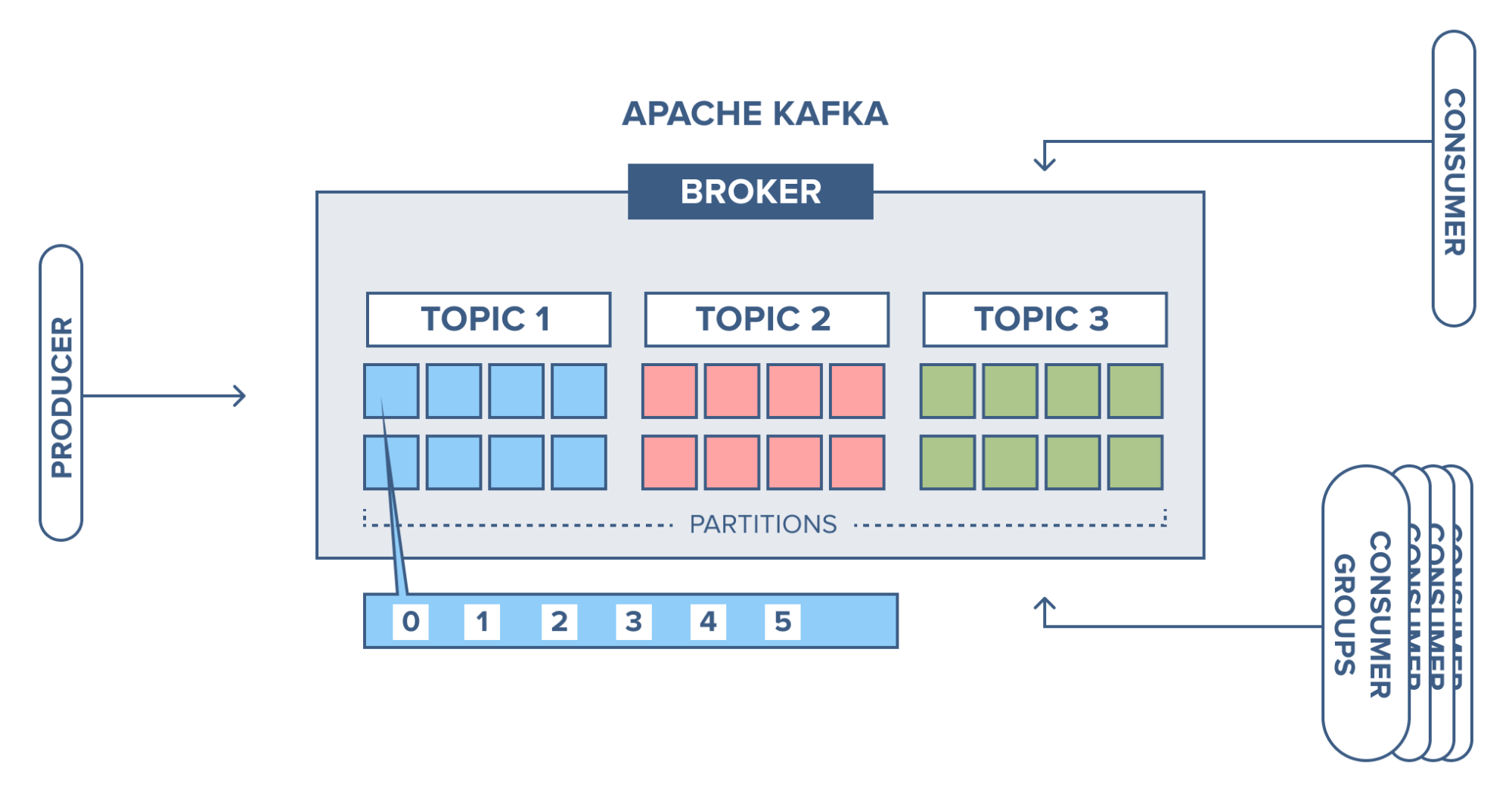

Producers are those client applications that publish (write) events to Kafka, and consumers are those that subscribe to (read and process) these events. In Kafka, producers and consumers are fully decoupled and agnostic of each other, which is a key design element to achieve the high scalability that Kafka is known for. For example, producers never need to wait for consumers. Kafka provides various guarantees such as the ability to process events exactly-once.

生产者是那些向Kafka发布(写)事件的客户端应用程序,而消费者是那些订阅(读和处理)这些事件的客户端应用程序。 在Kafka中,生产者和消费者之间完全脱钩并且彼此不可知,这是实现Kafka众所周知的高可伸缩性的关键设计元素。 例如,生产者永远不需要等待消费者。 Kafka提供各种保证,例如能够一次准确地处理事件。

Events are organized and durably stored in topics. Very simplified, a topic is similar to a folder in a filesystem, and the events are the files in that folder. An example topic name could be “payments”. Topics in Kafka are always multi-producer and multi-subscriber: a topic can have zero, one, or many producers that write events to it, as well as zero, one, or many consumers that subscribe to these events. Events in a topic can be read as often as needed — unlike traditional messaging systems, events are not deleted after consumption. Instead, you define for how long Kafka should retain your events through a per-topic configuration setting, after which old events will be discarded. Kafka’s performance is effectively constant with respect to data size, so storing data for a long time is perfectly fine.

活动被组织并持久地存储在主题中 。 非常简化,主题类似于文件系统中的文件夹,事件是该文件夹中的文件。 示例主题名称可以是“付款”。 Kafka中的主题始终是多生产者和多用户的:一个主题可以有零个,一个或多个向其写入事件的生产者,以及零个,一个或多个订阅这些事件的使用者。 可以按需要频繁读取主题中的事件-与传统的消息传递系统不同,使用后不会删除事件。 相反,您可以通过按主题的配置设置来定义Kafka将事件保留多长时间,之后旧的事件将被丢弃。 Kafka的性能相对于数据大小实际上是恒定的,因此长时间存储数据是完全可以的。

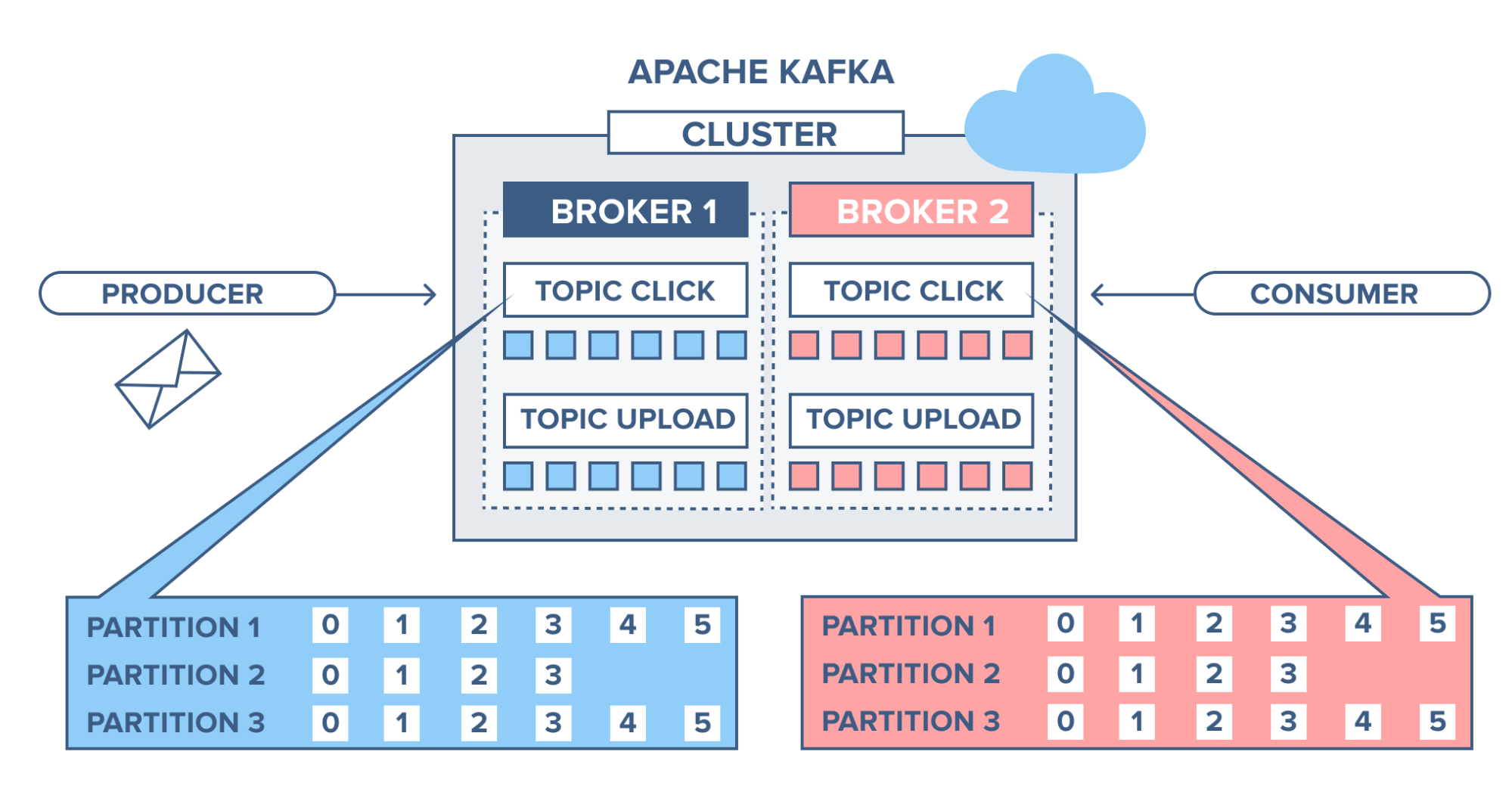

Topics are partitioned, meaning a topic is spread over a number of “buckets” located on different Kafka brokers. This distributed placement of your data is very important for scalability because it allows client applications to both read and write the data from/to many brokers at the same time. When a new event is published to a topic, it is actually appended to one of the topic’s partitions. Events with the same event key (e.g., a customer or vehicle ID) are written to the same partition, and Kafka guarantees that any consumer of a given topic-partition will always read that partition’s events in exactly the same order as they were written.

主题是分区的 ,这意味着主题分布在位于不同Kafka经纪人上的多个“存储桶”中。 数据的这种分布式放置对于可伸缩性非常重要,因为它允许客户端应用程序同时从多个代理读取数据或向多个代理写入数据。 将新事件发布到主题时,实际上会将其附加到主题的一个分区中。 具有相同事件键(例如,客户或车辆ID)的事件被写入相同的分区,并且Kafka 保证 ,给定主题分区的任何使用者都将始终以与写入时完全相同的顺序读取该分区的事件。

This example topic has four partitions P1–P4. Two different producer clients are publishing, independently from each other, new events to the topic by writing events over the network to the topic’s partitions. Events with the same key (denoted by their color in the figure) are written to the same partition.

Ť 他的例子主题有四个分区P1-P4。 通过在网络上将事件写入主题分区,两个不同的生产者客户端正在彼此独立地发布新事件。 具有相同键(在图中由其颜色表示)的事件被写入同一分区。

To make your data fault-tolerant and highly-available, every topic can be replicated, even across geo-regions or datacenters, so that there are always multiple brokers that have a copy of the data just in case things go wrong, you want to do maintenance on the brokers, and so on. A common production setting is a replication factor of 3, i.e., there will always be three copies of your data. This replication is performed at the level of topic-partitions.

为了使您的数据具有容错性和高可用性,即使在地理区域或数据中心之间,也可以复制每个主题,以便始终有多个代理具有数据副本,以防万一出错。对经纪人进行维护,等等。 常见的生产设置是3的复制因子,即,始终会有三个数据副本。 此复制在主题分区级别执行。

This primer should be sufficient for an introduction. The Design section of the documentation explains Kafka’s various concepts in full detail, if you are interested.

该入门手册应该足够介绍。 如果您有兴趣,文档的“设计”部分将详细介绍Kafka的各种概念。

用例 (Use Cases)

Kafka has many use cases. I have listed some of the very popular ones below.

Kafka有很多用例。 我在下面列出了一些非常受欢迎的。

讯息传递 (Messaging)

Kafka works well as a replacement for a more traditional message broker. Message brokers are used for a variety of reasons (to decouple processing from data producers, to buffer unprocessed messages, etc). In comparison to most messaging systems Kafka has better throughput, built-in partitioning, replication, and fault-tolerance which makes it a good solution for large scale message processing applications.

Kafka可以很好地替代传统邮件代理。 消息代理的使用有多种原因(将处理与数据生产者分离,缓冲未处理的消息等)。 与大多数邮件系统相比,Kafka具有更好的吞吐量,内置的分区,复制和容错能力,这使其成为大规模邮件处理应用程序的理想解决方案。

In our experience messaging uses are often comparatively low-throughput, but may require low end-to-end latency and often depend on the strong durability guarantees Kafka provides.

根据我们的经验,消息传递的使用通常吞吐量较低,但是可能需要较低的端到端延迟,并且通常取决于Kafka提供的强大的持久性保证。

In this domain Kafka is comparable to traditional messaging systems such as ActiveMQ or RabbitMQ.

在此领域中,Kafka可与传统消息传递系统(如ActiveMQ或RabbitMQ)媲美。

网站活动跟踪 (Website Activity Tracking)

The original use case for Kafka was to be able to rebuild a user activity tracking pipeline as a set of real-time publish-subscribe feeds. This means site activity (page views, searches, or other actions users may take) is published to central topics with one topic per activity type. These feeds are available for subscription for a range of use cases including real-time processing, real-time monitoring, and loading into Hadoop or offline data warehousing systems for offline processing and reporting.

Kafka最初的用例是能够将用户活动跟踪管道重建为一组实时的发布-订阅供稿。 这意味着将网站活动(页面浏览,搜索或用户可能采取的其他操作)发布到中心主题,每种活动类型只有一个主题。 这些提要可用于一系列用例的订阅,包括实时处理,实时监控,以及加载到Hadoop或脱机数据仓库系统中以进行脱机处理和报告。

Activity tracking is often very high volume as many activity messages are generated for each user page view.

活动跟踪通常量很大,因为每个用户页面视图都会生成许多活动消息。

指标 (Metrics)

Kafka is often used for operational monitoring data. This involves aggregating statistics from distributed applications to produce centralized feeds of operational data.

Kafka通常用于操作监控数据。 这涉及汇总来自分布式应用程序的统计信息,以生成操作数据的集中供稿。

日志汇总 (Log Aggregation)

Many people use Kafka as a replacement for a log aggregation solution. Log aggregation typically collects physical log files off servers and puts them in a central place (a file server or HDFS perhaps) for processing. Kafka abstracts away the details of files and gives a cleaner abstraction of log or event data as a stream of messages. This allows for lower-latency processing and easier support for multiple data sources and distributed data consumption. In comparison to log-centric systems like Scribe or Flume, Kafka offers equally good performance, stronger durability guarantees due to replication, and much lower end-to-end latency.

许多人使用Kafka代替日志聚合解决方案。 日志聚合通常从服务器上收集物理日志文件,并将它们放在中央位置(也许是文件服务器或HDFS)以进行处理。 Kafka提取文件的详细信息,并以日志流的形式更清晰地抽象日志或事件数据。 这允许较低延迟的处理,并更容易支持多个数据源和分布式数据消耗。 与以日志为中心的系统(例如Scribe或Flume)相比,Kafka具有同样出色的性能,由于复制而提供的更强的耐用性保证以及更低的端到端延迟。

网上商店 (Web Shop)

Think of a webshop with a ‘similar products’ feature on the site. To make this work, each action performed by a consumer is recorded and sent to Kafka. A separate application comes along and consumes these messages, filtering out the products the consumer has shown an interest in and gathering information on similar products. This ‘similar product’ information is then sent back to the webshop for it to display to the consumer in real-time.

想想一个网站上具有“类似产品”功能的网上商店。 为了完成这项工作,要记录消费者执行的每个动作并将其发送给Kafka。 出现了一个单独的应用程序并使用这些消息,过滤出消费者感兴趣的产品,并收集有关类似产品的信息。 然后,该“相似产品”信息被发送回网上商店,以实时显示给消费者。

Alternatively, since all data is persistent in Kafka, a batch job can run overnight on the ‘similar product’ information gathered by the system, generating an email for the customer with suggestions of products.

另外,由于所有数据都保存在Kafka中,因此批处理作业可以在一夜之间根据系统收集的“相似产品”信息运行,从而为客户发送一封包含产品建议的电子邮件。

流处理 (Stream Processing)

Many users of Kafka process data in processing pipelines consisting of multiple stages, where raw input data is consumed from Kafka topics and then aggregated, enriched, or otherwise transformed into new topics for further consumption or follow-up processing. For example, a processing pipeline for recommending news articles might crawl article content from RSS feeds and publish it to an “articles” topic; further processing might normalize or deduplicate this content and publish the cleansed article content to a new topic; a final processing stage might attempt to recommend this content to users. Such processing pipelines create graphs of real-time data flows based on the individual topics. Starting in 0.10.0.0, a light-weight but powerful stream processing library called Kafka Streams is available in Apache Kafka to perform such data processing as described above. Apart from Kafka Streams, alternative open source stream processing tools include Apache Storm and Apache Samza.

Kafka的许多用户在由多个阶段组成的处理管道中处理数据,其中原始输入数据从Kafka主题中使用,然后进行汇总,丰富或转换为新主题,以供进一步使用或后续处理。 例如,用于推荐新闻文章的处理管道可能会从RSS提要中检索文章内容,并将其发布到“文章”主题中。 进一步的处理可能会使该内容规范化或重复数据删除,并将清洗后的文章内容发布到新主题; 最后的处理阶段可能会尝试向用户推荐此内容。 这样的处理管道基于各个主题创建实时数据流图。 从0.10.0.0开始,Apache Kafka中提供了一个轻量但功能强大的流处理库,称为Kafka Streams,可以执行上述数据处理。 除了Kafka Streams,其他开源流处理工具还包括Apache Storm和Apache Samza。

卡夫卡数据库 (Kafka as a Database)

Apache Kafka has another interesting feature not found in RabbitMQ — log compaction. Log compaction ensures that Kafka always retains the last known value for each record key. Kafka simply keeps the latest version of a record and deletes the older versions with the same key.

Apache Kafka具有RabbitMQ中未发现的另一个有趣功能-日志压缩。 日志压缩可确保Kafka始终为每个记录键保留最后的已知值。 Kafka只是保留记录的最新版本,并使用相同的密钥删除较旧的版本。

An example of log compaction use is when displaying the latest status of a cluster among thousands of clusters running. The current status of the cluster is written into Kafka and the topic is configured to compact the records. When this topic is consumed, it displays the latest status first and then a continuous stream of new statuses.

日志压缩使用的一个示例是在数千个正在运行的集群中显示集群的最新状态时。 集群的当前状态被写入Kafka,并且配置了该主题以压缩记录。 使用此主题时,它将首先显示最新状态,然后连续显示新状态。

活动采购 (Event Sourcing)

Event sourcing is a style of application design where state changes are logged as a time-ordered sequence of records. Kafka’s support for very large stored log data makes it an excellent backend for an application built in this style.

事件源是一种应用程序设计方式,其中状态更改以时间顺序记录。 Kafka对非常大的存储日志数据的支持使其成为使用这种样式构建的应用程序的绝佳后端。

应用程序运行状况监控 (Application health monitoring)

Servers can be monitored and set to trigger alarms in case of rapid changes in usage or system faults. Information from server agents can be combined with the server syslog and sent to a Kafka cluster. Through Kafka Streams, these topics can be joined and set to trigger alarms based on usage thresholds, containing full information for easier troubleshooting of system problems before they become catastrophic.

可以监视服务器并将其设置为在使用情况快速变化或系统故障时触发警报。 来自服务器代理的信息可以与服务器syslog合并,然后发送到Kafka集群。 通过Kafka Streams,可以将这些主题结合起来并设置为根据使用率阈值触发警报,其中包含完整的信息,可以在灾难性灾难发生之前更轻松地对系统问题进行故障排除。

提交日志 (Commit Log)

Kafka can serve as a kind of external commit-log for a distributed system. The log helps replicate data between nodes and acts as a re-syncing mechanism for failed nodes to restore their data. The log compaction feature in Kafka helps support this usage. In this usage Kafka is similar to Apache BookKeeper project.

Kafka可以用作分布式系统的一种外部提交日志。 该日志有助于在节点之间复制数据,并充当故障节点恢复其数据的重新同步机制。 Kafka中的日志压缩功能有助于支持此用法。 在这种用法中,Kafka类似于Apache BookKeeper项目。

卡夫卡基本概念 (Kafka Fundamental Concepts)

In this section we’ll go over some fundamental concepts of Kafka. It’s imperative to have a clear understanding of these concepts. The concepts will be useful when we start working tutorials.

在本节中,我们将介绍Kafka的一些基本概念。 必须对这些概念有清楚的了解。 当我们开始工作教程时,这些概念将非常有用。

主题 (Topics)

A Topic is a category/feed name to which records are stored and published.

主题是将记录存储和发布到的类别/源名称。

The topic is a logical channel to which producers publish message and from which the consumers receive messages.

主题是生产者向其发布消息以及消费者从中接收消息的逻辑通道。

- A topic defines the stream of a particular type/classification of data, in Kafka.主题在Kafka中定义了特定类型/数据分类的流。

- Moreover, here messages are structured or organized. A particular type of messages is published on a particular topic.此外,此处消息是结构化或组织化的。 在特定主题上发布特定类型的消息。

- Basically, at first, a producer writes its messages to the topics. Then consumers read those messages from topics.基本上,首先,生产者将其消息写入主题。 然后,消费者从主题中读取这些消息。

- In a Kafka cluster, a topic is identified by its name and must be unique.在Kafka集群中,主题由其名称标识,并且必须唯一。

- There can be any number of topics, there is no limitation.主题可以有任意数量,没有限制。

- We can not change or update data, as soon as it gets published.数据一旦发布,我们就无法更改或更新。

Kafka retains records in the log, making the consumers responsible for tracking the position in the log, known as the “offset”. Typically, a consumer advances the offset in a linear manner as messages are read. However, the position is actually controlled by the consumer, which can consume messages in any order. For example, a consumer can reset to an older offset when reprocessing records.

Kafka将记录保留在日志中,使使用者负责跟踪日志中的位置,称为“偏移”。 通常,消费者在读取消息时以线性方式提前偏移量。 但是,位置实际上是由使用者控制的,使用者可以按任何顺序使用消息。 例如,使用者可以在重新处理记录时将其重置为较早的偏移量。

分区 (Partitions)

In a Kafka cluster, Topics are split into Partitions and also replicated across brokers.

在Kafka集群中,主题被划分为分区,并且也在代理之间复制。

- However, to which partition a published message will be written, there is no guarantee about that.但是,发布的消息将写入哪个分区,因此无法保证。

Also, we can add a key to a message. Basically, we will get ensured that all these messages (with the same key) will end up in the same partition if a producer publishes a message with a key. Due to this feature, Kafka offers message sequencing guarantee. Though, unless a key is added to it, data is written to partitions randomly.

另外,我们可以在消息中添加密钥。 基本上,如果生产者发布带有密钥的消息,我们将确保所有这些消息(具有相同的密钥)将最终位于同一分区中。 由于此功能, Kafka提供消息顺序保证。 但是,除非向其添加密钥,否则数据将被随机写入分区。

- Moreover, in one partition, messages are stored in the sequenced fashion.此外,在一个分区中,消息以顺序方式存储。

- In a partition, each message is assigned an incremental id, also called offset.在分区中,为每个消息分配一个增量ID,也称为偏移量。

- However, only within the partition, these offsets are meaningful. Moreover, in a topic, it does not have any value across partitions.但是,仅在分区内,这些偏移量才有意义。 此外,在主题中,跨分区没有任何价值。

- There can be any number of Partitions, there is no limitation.可以有任意数量的分区,没有限制。

经纪人 (Brokers)

A Kafka server is also called a Kafka broker. A Kafka cluster consists multiple brokers.

Kafka服务器也称为Kafka代理。 一个Kafka集群由多个代理组成。

- Each broker has an integer identification number每个经纪人都有一个整数的标识号

- Each broker contains some topic partitions.每个代理都包含一些主题分区。

- Each broker can contain multiple partitions of same topic每个代理可以包含相同主题的多个分区

- A producer or consumer can connect to any broker and in turn gets connected to the entire cluster.生产者或消费者可以连接到任何代理,进而可以连接到整个集群。

主题复制因子 (Topic Replication Factor)

- It’s always a wise decision to factor in topic replication while designing a Kafka system. This way, if a broker goes down, its topics’ replicas from another broker can solve the crisis. Let’s take a look into the below example. Here, we have 3 brokers and 3 topics. Broker1 has Topic 1 and Partition 0, it’s replica is in Broker2, so on and so forth. It has got a replication factor of 2; it means it will have one additional copy other than the primary one.在设计Kafka系统时,始终将主题复制作为因素是一个明智的决定。 这样,如果一个经纪人崩溃了,它的主题从另一个经纪人那里复制就可以解决危机。 让我们看下面的例子。 在这里,我们有3个经纪人和3个主题。 Broker1具有主题1和分区0,它的副本位于Broker2中,依此类推。 复制因子为2; 这意味着它将具有除主副本之外的另一副本。

Couple of notes

几个笔记

- Replication happens in the partition level复制发生在分区级别

- At a time, only one broker can be a leader for a given partition; other brokers will have in-sync replica; also known as ISR.一次,给定分区的经纪人只能是一位。 其他经纪人将具有同步副本; 也称为ISR。

- You can’t have number of replication factor more than the number of available brokers.复制因子的数量不能超过可用代理的数量。

- In normal circumstance, this leader partition will receive data from producer and consumers will read from the leader partition.在正常情况下,此领导者分区将从生产者那里接收数据,而消费者将从领导者分区读取数据。

经纪人 (Broker)

A Kafka cluster consists of one or more servers (Kafka brokers) running Kafka. Producers are processes that push records into Kafka topics within the broker. A consumer pulls records off a Kafka topic.

一个Kafka集群由一个或多个运行Kafka的服务器(Kafka代理)组成。 生产者是将记录推送到代理内的Kafka主题的过程。 消费者从Kafka主题中提取记录。

Running a single Kafka broker is possible but it doesn’t give all the benefits that Kafka in a cluster can give, for example, data replication.

可以运行单个Kafka代理,但是不能提供集群中Kafka可以提供的所有好处,例如,数据复制。

Management of the brokers in the cluster is performed by Zookeeper. There may be multiple Zookeepers in a cluster, in fact the recommendation is three to five, keeping an odd number so that there is always a majority and the number as low as possible to conserve overhead resources.

群集中代理的管理由Zookeeper执行。 一个集群中可能有多个Zookeeper,实际上建议为3到5个,并保持一个奇数,以便始终保持多数,并且该数目应尽可能低以节省开销资源。

生产者 (Producers)

Producers are the ones to write data to a topic

生产者是将数据写入主题的人

- Producers need to specify the topic name and one broker to connect to; Kafka will automatically take care of sending the data to the right partition of the right broker. Kafka will take care of the required load balancing among multiple partitions across multiple brokers.生产者需要指定主题名称和一个要连接的代理; Kafka将自动负责将数据发送到正确的代理的正确分区。 Kafka将负责跨多个代理的多个分区之间所需的负载平衡。

- Producers have the provision to receive back the acknowledgement of the data it writes. There are following kinds of acknowledgements possible –生产者可以接收其写入的数据的确认。 有以下几种可能的确认–

acks =0 [In this case, producer does not wait for any acknowledgment. Producer writes the message to topic and moves on. In this way, producer won’t wait for acknowledgment. This is the fastest way of publishing the message to topic.]

acks = 0 [在这种情况下,生产者不等待任何确认。 生产者将消息写入主题并继续。 这样,生产者将不必等待确认。 这是将消息发布到主题的最快方法。]

acks = 1 [In this case, producer will wait for only leader acknowledgment. It guarantees that atleast one broker has got the message; however there is no guarantee that the data has made it to the replicas.]

acks = 1 [在这种情况下,生产者将只等待领导者的确认。 它保证至少有一个经纪人已经收到消息。 但是,不能保证数据已经复制到副本中。]

acks=all [In this case, the leader and all the replicas will need to acknowledge back; this has worst possible performance impact among total 3 types.]

acks = all [在这种情况下,领导者和所有副本都需要确认; 这在总共3种类型中对性能的影响可能最差。]

消费者和消费群体 (Consumers and consumer groups)

Consumers can read messages starting from a specific offset and are allowed to read from any offset point they choose. This allows consumers to join the cluster at any point in time.

消费者可以从特定的偏移量开始读取消息,并且可以从他们选择的任何偏移量点进行读取。 这使使用者可以在任何时间点加入集群。

低级消费者 (Low-level consumers)

There are two types of consumers in Kafka. First, the low-level consumer, where topics and partitions are specified as is the offset from which to read, either fixed position, at the beginning or at the end. This can, of course, be cumbersome to keep track of which offsets are consumed so the same records aren’t read more than once. So Kafka added another easier way of consuming with:

卡夫卡有两种类型的消费者。 首先,低级使用者,在其中指定主题和分区,以及从其开始或结束处的固定位置读取的偏移量。 当然,要跟踪消耗了哪些偏移量可能很麻烦,因此对同一记录的读取不会超过一次。 因此,Kafka添加了另一种更简单的消费方式:

高级消费者 (High-level consumer)

The high-level consumer (more known as consumer groups) consists of one or more consumers. Here a consumer group is created by adding the property “group.id” to a consumer. Giving the same group id to another consumer means it will join the same group.

高级消费者(更称为消费者群体)由一个或多个消费者组成。 这里,通过将属性“ group.id”添加到使用者来创建使用者组。 为另一个使用者提供相同的组ID意味着它将加入同一组。

The broker will distribute according to which consumer should read from which partitions and it also keeps track of which offset the group is at for each partition. It tracks this by having all consumers committing which offset they have handled.

经纪人将根据哪个消费者应该从哪个分区中读取数据进行分配,并且还将跟踪该组在每个分区中位于哪个偏移量。 它通过让所有消费者提交他们已处理的偏移量来跟踪此情况。

Every time a consumer is added or removed from a group the consumption is rebalanced between the group. All consumers are stopped on every rebalance, so clients that time out or are restarted often will decrease the throughput. Make the consumers stateless since the consumer might get different partitions assigned on a rebalance.

每次在组中添加或删除消费者时,组之间的消耗都会重新平衡。 所有使用者在每次重新平衡时都会停止,因此经常超时或重新启动的客户端会降低吞吐量。 使使用者无状态,因为使用者可能会在重新平衡时获得不同的分区分配。

Consumers pull messages from topic partitions. Different consumers can be responsible for different partitions. Kafka can support a large number of consumers and retain large amounts of data with very little overhead. By using consumer groups, consumers can be parallelized so that multiple consumers can read from multiple partitions on a topic, allowing a very high message processing throughput. The number of partitions impacts the maximum parallelism of consumers as there cannot be more consumers than partitions.

消费者从主题分区中提取消息。 不同的使用者可以负责不同的分区。 Kafka可以支持大量使用者,并以很少的开销保留大量数据。 通过使用使用者组,可以使使用者并行化,以便多个使用者可以从某个主题的多个分区中读取内容,从而实现非常高的消息处理吞吐量。 分区的数量会影响使用者的最大并行度,因为不能有更多的使用者超过分区。

Records are never pushed out to consumers, the consumer will ask for messages when the consumer is ready to handle the message.

记录永远不会推送给使用者,当使用者准备好处理消息时,使用者会询问消息。

The consumers will never overload themselves with lots of data or lose any data since all records are being queued up in Kafka. If the consumer is behind during message processing, it has the option to eventually catch up and get back to handle data in real-time.

由于所有记录都在Kafka中排队,因此消费者永远不会使自己过载大量数据或丢失任何数据。 如果消费者在消息处理过程中落后,则可以选择最终追赶并返回实时处理数据。

在Apache Kafka中记录流 (Record flow in Apache Kafka)

Now we have been looking at the producer and the consumer, and we will check at how the broker receives and stores records coming in the broker.

现在我们一直在研究生产者和消费者,我们将检查经纪人如何接收和存储来自经纪人的记录。

We have an example, where we have a broker with three topics, where each topic has 8 partitions.

我们有一个示例,其中有一个包含三个主题的代理,其中每个主题都有8个分区。

The producer sends a record to partition 1 in topic 1 and since the partition is empty the record ends up at offset 0.

生产者将记录发送到主题1中的分区1,并且由于该分区为空,因此该记录以偏移量0结尾。

Next record is added to partition 1 will and up at offset 1, and the next record at offset 2 and so on.

下一条记录将添加到分区1中,并且将在偏移量1处向上添加,下一条记录将在偏移量2中添加,依此类推。

This is what is referred to as a commit log, each record is appended to the log and there is no way to change the existing records in the log. This is also the same offset that the consumer uses to specify where to start reading.

这就是所谓的提交日志,每个记录都附加到日志中,并且无法更改日志中的现有记录。 这也是使用者用来指定从何处开始阅读的偏移量。

快速开始 (Quick Start)

第1步:获取卡夫卡 (STEP 1: GET KAFKA)

Download the latest Kafka release and extract it:

下载最新的Kafka版本并解压缩:

$ tar -xzf kafka_2.13-2.6.0.tgz$ cd kafka_2.13-2.6.0步骤2:启动KAFKA环境 (STEP 2: START THE KAFKA ENVIRONMENT)

NOTE: Your local environment must have Java 8+ installed.

注意:您的本地环境必须安装了Java 8+。

Run the following commands in order to start all services in the correct order:

运行以下命令以正确的顺序启动所有服务:

# Start the ZooKeeper service# Note: Soon, ZooKeeper will no longer be required by Apache Kafka.$ bin/zookeeper-server-start.sh config/zookeeper.propertiesOpen another terminal session and run:

打开另一个终端会话并运行:

# Start the Kafka broker service$ bin/kafka-server-start.sh config/server.propertiesOnce all services have successfully launched, you will have a basic Kafka environment running and ready to use.

成功启动所有服务后,您将运行并可以使用基本的Kafka环境。

步骤3:建立主题以储存您的活动 (STEP 3: CREATE A TOPIC TO STORE YOUR EVENTS)

Kafka is a distributed event streaming platform that lets you read, write, store, and process events (also called records or messages in the documentation) across many machines.

Kafka是一个分布式事件流平台 ,可让您跨多台计算机读取,写入,存储和处理事件 (在文档中也称为记录或消息 )。

Example events are payment transactions, geolocation updates from mobile phones, shipping orders, sensor measurements from IoT devices or medical equipment, and much more. These events are organized and stored in topics. Very simplified, a topic is similar to a folder in a filesystem, and the events are the files in that folder.

示例事件包括付款交易,移动电话的地理位置更新,运输订单,物联网设备或医疗设备的传感器测量等等。 这些事件被组织并存储在主题中 。 非常简化,主题类似于文件系统中的文件夹,事件是该文件夹中的文件。

So before you can write your first events, you must create a topic. Open another terminal session and run:

因此,在编写第一个事件之前,必须创建一个主题。 打开另一个终端会话并运行:

$ bin/kafka-topics.sh --create --topic quickstart-events --bootstrap-server localhost:9092All of Kafka’s command line tools have additional options: run the kafka-topics.sh command without any arguments to display usage information. For example, it can also show you details such as the partition count of the new topic:

Kafka的所有命令行工具都具有其他选项:不带任何参数的kafka-topics.sh命令以显示用法信息。 例如,它还可以向您显示详细信息,例如新主题的分区数 :

$ bin/kafka-topics.sh --describe --topic quickstart-events --bootstrap-server localhost:9092Topic:quickstart-events PartitionCount:1 ReplicationFactor:1 Configs: Topic: quickstart-events Partition: 0 Leader: 0 Replicas: 0 Isr: 0步骤4:将一些事件写入主题 (STEP 4: WRITE SOME EVENTS INTO THE TOPIC)

A Kafka client communicates with the Kafka brokers via the network for writing (or reading) events. Once received, the brokers will store the events in a durable and fault-tolerant manner for as long as you need — even forever.

Kafka客户端通过网络与Kafka经纪人进行通信,以编写(或读取)事件。 一旦收到,经纪人将以持久和容错的方式存储事件,只要您需要,甚至可以永久保存。

Run the console producer client to write a few events into your topic. By default, each line you enter will result in a separate event being written to the topic.

运行控制台生产者客户端,以将一些事件写入您的主题。 默认情况下,您输入的每一行都会导致一个单独的事件写入该主题。

$ bin/kafka-console-producer.sh --topic quickstart-events --bootstrap-server localhost:9092This is my first eventThis is my second eventYou can stop the producer client with Ctrl-C at any time.

您可以随时使用Ctrl-C停止生产者客户端。

步骤5:阅读事件 (STEP 5: READ THE EVENTS)

Open another terminal session and run the console consumer client to read the events you just created:

打开另一个终端会话并运行控制台使用者客户端以读取您刚刚创建的事件:

$ bin/kafka-console-consumer.sh --topic quickstart-events --from-beginning --bootstrap-server localhost:9092This is my first eventThis is my second eventYou can stop the consumer client with Ctrl-C at any time.

您可以随时使用Ctrl-C停止使用方客户端。

Feel free to experiment: for example, switch back to your producer terminal (previous step) to write additional events, and see how the events immediately show up in your consumer terminal.

随时尝试:例如,切换回生产者终端(上一步)以编写其他事件,并查看事件如何立即显示在消费者终端中。

Because events are durably stored in Kafka, they can be read as many times and by as many consumers as you want. You can easily verify this by opening yet another terminal session and re-running the previous command again.

因为事件被持久地存储在Kafka中,所以您可以根据需要任意多次地读取它们。 您可以通过打开另一个终端会话并再次重新运行上一个命令来轻松地验证这一点。

步骤6:使用KAFKA CONNECT将数据作为事件流导入/导出 (STEP 6: IMPORT/EXPORT YOUR DATA AS STREAMS OF EVENTS WITH KAFKA CONNECT)

You probably have lots of data in existing systems like relational databases or traditional messaging systems, along with many applications that already use these systems. Kafka Connect allows you to continuously ingest data from external systems into Kafka, and vice versa. It is thus very easy to integrate existing systems with Kafka. To make this process even easier, there are hundreds of such connectors readily available.

在诸如关系数据库或传统消息传递系统之类的现有系统中,您可能拥有大量数据,以及已经使用这些系统的许多应用程序。 通过Kafka Connect ,您可以将来自外部系统的数据连续地吸收到Kafka中,反之亦然。 因此,将现有系统与Kafka集成非常容易。 为了使此过程变得更加容易,有数百种此类连接器随时可用。

Take a look at the Kafka Connect section learn more about how to continuously import/export your data into and out of Kafka.

看一下Kafka Connect部分,了解更多有关如何连续地将数据导入和导出Kafka的信息。

第7步:使用卡夫卡流处理您的事件 (STEP 7: PROCESS YOUR EVENTS WITH KAFKA STREAMS)

Once your data is stored in Kafka as events, you can process the data with the Kafka Streams client library for Java/Scala. It allows you to implement mission-critical real-time applications and microservices, where the input and/or output data is stored in Kafka topics. Kafka Streams combines the simplicity of writing and deploying standard Java and Scala applications on the client side with the benefits of Kafka’s server-side cluster technology to make these applications highly scalable, elastic, fault-tolerant, and distributed. The library supports exactly-once processing, stateful operations and aggregations, windowing, joins, processing based on event-time, and much more.

一旦将数据作为事件存储在Kafka中,就可以使用Java / Scala的Kafka Streams客户端库处理数据。 它允许您实现关键任务实时应用程序和微服务,其中输入和/或输出数据存储在Kafka主题中。 Kafka Streams结合了在客户端编写和部署标准Java和Scala应用程序的简便性以及Kafka服务器端集群技术的优势,使这些应用程序具有高度可伸缩性,弹性,容错性和分布式性。 该库支持一次精确处理,有状态操作和聚合,开窗,联接,基于事件时间的处理等等。

To give you a first taste, here’s how one would implement the popular WordCount algorithm:

为了让您有一个WordCount的了解,以下是实现流行的WordCount算法的方法:

KStream<String, String> textLines = builder.stream("quickstart-events");KTable<String, Long> wordCounts = textLines .flatMapValues(line -> Arrays.asList(line.toLowerCase().split(" "))) .groupBy((keyIgnored, word) -> word) .count();wordCounts.toStream().to("output-topic"), Produced.with(Serdes.String(), Serdes.Long()));The Kafka Streams demo and the app development tutorial demonstrate how to code and run such a streaming application from start to finish.

Kafka Streams演示和应用程序开发教程演示了如何从头到尾编写和运行这种流媒体应用程序。

步骤8:终止KAFKA环境 (STEP 8: TERMINATE THE KAFKA ENVIRONMENT)

Now that you reached the end of the quickstart, feel free to tear down the Kafka environment — or continue playing around.

既然您已开始快速入门,请随时拆除Kafka环境-或继续玩耍。

Stop the producer and consumer clients with

Ctrl-C, if you haven't done so already.如果尚未停止,请使用

Ctrl-C停止生产者和消费者客户端。Stop the Kafka broker with

Ctrl-C.使用

Ctrl-C停止Kafka代理。Lastly, stop the ZooKeeper server with

Ctrl-C.最后,使用

Ctrl-C停止ZooKeeper服务器。

If you also want to delete any data of your local Kafka environment including any events you have created along the way, run the command:

如果您还想删除本地Kafka环境的任何数据,包括您在此过程中创建的所有事件,请运行以下命令:

$ rm -rf /tmp/kafka-logs /tmp/zookeeper

您已成功完成Apache Kafka快速入门。 (You have successfully finished the Apache Kafka quickstart.)

Thanks so much for your interest in my post!

非常感谢您对我的帖子感兴趣!

If it was useful for you, please remember to “Clap”

卡夫卡详解_卡夫卡概念相关推荐

- android sd卡名称,科普详解Android系统SD卡各类文件夹名称

该楼层疑似违规已被系统折叠 隐藏此楼查看此楼 15.moji:墨迹天气的缓存目录. 16.MusicFolders:poweramp产生的缓存文件夹. 17.openfeint:openfeint的缓 ...

- python输入输出流详解_输入输出流的概念

Java中的文件复制相较Python而言,涉及到输入输出流的概念,实现中会调用很多对象,复杂很多,在此以文件复制进行简单总结. 这里是一个简单的处理代码: import java.io.*; publ ...

- java阴阳师抽卡算法_阴阳师详解新的抽卡机制 全图鉴和SP获取更加简单

原标题:阴阳师详解新的抽卡机制 全图鉴和SP获取更加简单 阴阳师随着大岳丸活动的临近,马上大家就要再次进入抽卡的热潮中了,而这次的新SSR大岳丸的获取,又一次更新了新的抽卡机制,本次就带来新抽卡机制详 ...

- css鼠标拖拉卡顿_详解overflow-scrolling解决滚动卡顿问题

前言 如果你对某个div或模块使用了overflow: scroll属性,在iOS系统的手机上浏览时,则会出现明显的卡顿现象.但是在android系统的手机上则不会出现该问题. 解决方法 以下代码可解 ...

- android卡刷教程,卡刷是什么意思?安卓系统卡刷教程详解

2016-03-29 17:43:49 卡刷是什么意思?安卓系统卡刷教程详解 标签:卡刷,安卓系统卡刷教程,卡刷升级 [ROM之家]使用安卓系统手机的发烧友可能会经常提到卡刷一词,那么到底卡刷是什么意 ...

- linux免采集卡直播ps4,PS4游戏直播采集卡使用教程详解

在网络直播潮流中,ps4连接笔记本显示器进行的PS4游戏直播拥有着大批的主播与粉丝,而这其中也包括了PS4采集卡的鼎力相助.接下来同三维来PS4游戏直播采集卡使用教程详解. 一.准备阶段: 一台PS4 ...

- 【SD卡】关于DJYOS下SD卡驱动开发详解

关于DJYOS下SD卡驱动开发详解 王建忠 2011/6/21 1 开发环境及说明 硬件平台:tq2440(CPU: s3c2440) 操作系统:DJYOS1.0.0 1.1 说明 T ...

- android 请求sd卡权限,androidQ sd卡权限使用详解

默认情况下,如果应用以 Android Q 为目标平台,则在访问外部存储设备中的文件时会进入过滤视图.应用可以使用 Context.getExternalFilesDir() 将专用于自己的文件存储在 ...

- android sdcardfs 权限,androidQ sd卡权限使用详解

默认情况下,如果应用以 Android Q 为目标平台,则在访问外部存储设备中的文件时会进入过滤视图.应用可以使用 Context.getExternalFilesDir() 将专用于自己的文件存储在 ...

- Spring事务管理详解_基本原理_事务管理方式

Spring事务管理详解_基本原理_事务管理方式 1. 事务的基本原理 Spring事务的本质其实就是数据库对事务的支持,使用JDBC的事务管理机制,就是利用java.sql.Connection对象 ...

最新文章

- 空间点像素索引(二)

- idea自动为行尾加分号

- redhat9Linux解压gz,linux (redhat9)下subversion 的安装

- java学习(112):simpledateformat进行格式化

- HDU2110 Crisis of HDU【母函数】

- python用什么编译器-Python必学之编译器用哪个好?你用错了吧!

- Postfix配置QA

- 简述redux(1)

- 06-continue和break的区别

- dell服务器运维,施用smartctl查dell服务器坏道实录

- SCVMM 2012 R2---添加Hyper-V虚拟机

- 2021北京计算机考研科目,2021年北京大学计算机考研科目

- fedora mysql安装教程,Fedora 14 上MySQL的安装及使用

- 年报文本分析:jieba词频统计

- linux显卡驱动与opengl,NVIDIA率先发布OpenGL 3.0 Linux驱动

- 2020鸿蒙系统pc版,华为将在2020年发布鸿蒙操作系统2.0版,应用于创新国产PC电脑...

- 企业级数据管理——DAMA数据管理

- jsoup爬取王者荣耀所有英雄背景图片

- 信息共享的记忆被囊群算法

- 【Arduino基础】一位数码管实验