Service Startup from the Binder Perspective

1. Overview

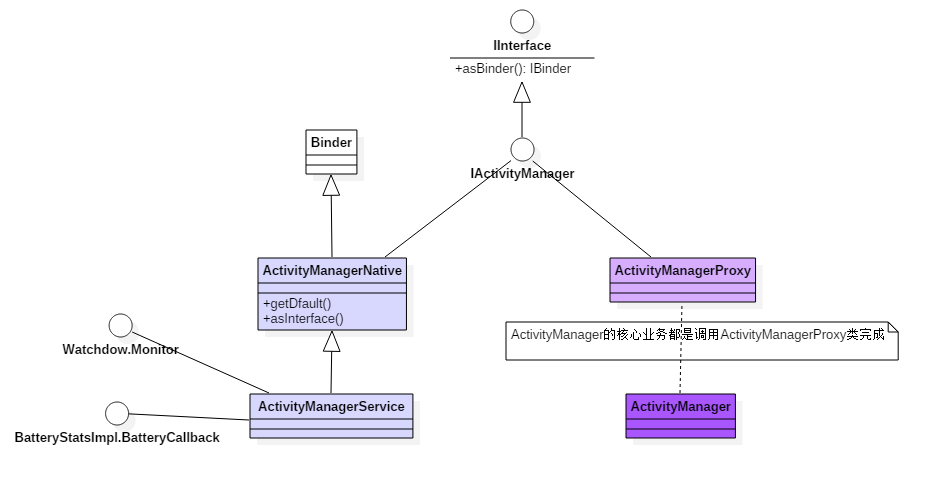

The earlier article on the startService flow walked through Service startup in detail at the system framework layer, as the figure illustrates:

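Service startup begins in the initiating process, which calls startService. The call passes through the binder driver and finally reaches a binder thread in the system_server process, where the ActivityManagerService code runs. This article examines that step from the Binder perspective: how AMP.startService ends up calling AMS.startService.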

Inheritance relationships

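Two classes are involved here: AMP (ActivityManagerProxy) and AMS (ActivityManagerService). First, let us look at how they relate.

From the figure above, we can see that:

- AMS extends AMN, which is an abstract class;

- AMN implements the IActivityManager interface and extends Binder (the Binder server side);

- AMP also implements the IActivityManager interface;

- Binder implements the IBinder interface, and IActivityManager extends IInterface.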
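These relationships follow the classic proxy/stub split. The C++ sketch below models that split inside a single process, purely for illustration. Every name in it (IBinderLike, NativeStub, Service, Proxy, kStartServiceTransaction) is hypothetical, and the in-process transact() call stands in for the trip through the Binder driver. The sketch shows that the proxy and the service implement the same interface, and that only the stub knows how to turn a transaction code back into a method call.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical stand-in for IBinder: one entry point taking a code plus request/reply data.
struct IBinderLike {
    virtual ~IBinderLike() = default;
    virtual bool transact(uint32_t code, const std::string& data, std::string* reply) = 0;
};

// Hypothetical stand-in for IActivityManager, reduced to a single method.
struct IActivityManagerLike {
    virtual ~IActivityManagerLike() = default;
    virtual std::string startService(const std::string& intent) = 0;
};

constexpr uint32_t kStartServiceTransaction = 1;  // plays the role of START_SERVICE_TRANSACTION

// Like AMN: both the interface and the Binder object. It decodes a transaction
// and dispatches it to the real method, which a subclass provides.
struct NativeStub : IActivityManagerLike, IBinderLike {
    bool transact(uint32_t code, const std::string& data, std::string* reply) override {
        if (code != kStartServiceTransaction) return false;  // unknown transaction
        *reply = startService(data);
        return true;
    }
};

// Like AMS: implements only the business logic.
struct Service : NativeStub {
    std::string startService(const std::string& intent) override {
        return "started:" + intent;
    }
};

// Like AMP: the same interface, but every call is forwarded through mRemote.
struct Proxy : IActivityManagerLike {
    explicit Proxy(IBinderLike* remote) : mRemote(remote) {}
    std::string startService(const std::string& intent) override {
        std::string reply;
        mRemote->transact(kStartServiceTransaction, intent, &reply);
        return reply;
    }
    IBinderLike* mRemote;
};
```

The client holds only an IActivityManagerLike and cannot tell whether it is talking to the service directly or through the proxy.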

2. Analysis

2.1 AMP.startService

    public ComponentName startService(IApplicationThread caller, Intent service,
            String resolvedType, String callingPackage, int userId) throws RemoteException {
        // [see 2.1.1]
        Parcel data = Parcel.obtain();
        Parcel reply = Parcel.obtain();
        data.writeInterfaceToken(IActivityManager.descriptor);
        data.writeStrongBinder(caller != null ? caller.asBinder() : null);
        service.writeToParcel(data, 0);
        data.writeString(resolvedType);
        data.writeString(callingPackage);
        data.writeInt(userId);
        // Send the data through Binder [see 2.2]
        mRemote.transact(START_SERVICE_TRANSACTION, data, reply, 0);
        // Check the reply for an exception
        reply.readException();
        // Build the ComponentName from the reply data
        ComponentName res = ComponentName.readFromParcel(reply);
        // [see 2.1.2]
        data.recycle();
        reply.recycle();
        return res;
    }

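The method creates two Parcel objects: data carries the outgoing request, and reply receives the response. Here descriptor = "android.app.IActivityManager".

- All startService arguments are packed into the Parcel data, which is sent to the Binder driver through mRemote;

- The Binder reply is packed into reply, and a ComponentName is parsed from it.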
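A Parcel is just a flat buffer, so the receiving side must read the fields back in exactly the order AMP wrote them. The toy C++ buffer below makes this visible. ToyParcel is a hypothetical class. It ignores details of the real Parcel such as UTF-16 strings and 4-byte alignment, and it uses a simple length prefix for strings.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical toy parcel: an append-only byte buffer plus a read cursor.
class ToyParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof v); }
    void writeString(const std::string& s) {
        writeInt32(static_cast<int32_t>(s.size()));  // length prefix
        append(s.data(), s.size());
    }
    int32_t readInt32() {
        int32_t v;
        take(&v, sizeof v);
        return v;
    }
    std::string readString() {
        int32_t n = readInt32();
        if (n < 0) throw std::runtime_error("bad string length");
        std::string s(static_cast<size_t>(n), '\0');
        take(&s[0], s.size());
        return s;
    }
    size_t dataSize() const { return buf_.size(); }

private:
    void append(const void* p, size_t n) {
        const auto* b = static_cast<const unsigned char*>(p);
        buf_.insert(buf_.end(), b, b + n);
    }
    void take(void* out, size_t n) {
        // Reading past the end means the reader and writer disagree on the layout.
        if (pos_ + n > buf_.size()) throw std::out_of_range("read past end");
        std::memcpy(out, buf_.data() + pos_, n);
        pos_ += n;
    }
    std::vector<unsigned char> buf_;
    size_t pos_ = 0;
};
```

Usage: write the interface token, callingPackage and userId in that order, then read them back in the same order. Swapping two reads would silently misinterpret the bytes, or throw.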

2.1.1 Parcel.obtain

[-> Parcel.java]

    public static Parcel obtain() {
        final Parcel[] pool = sOwnedPool;
        synchronized (pool) {
            Parcel p;
            // POOL_SIZE = 6
            for (int i = 0; i < POOL_SIZE; i++) {
                p = pool[i];
                if (p != null) {
                    pool[i] = null;
                    return p;
                }
            }
        }
        // No cached Parcel in the pool: create one directly
        return new Parcel(0);
    }

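sOwnedPool is a cache pool of size 6 that holds Parcel objects. obtain() works as follows:

- It first looks in the sOwnedPool cache for a Parcel and, if one exists, returns it directly;

- Otherwise, it creates a new Parcel object.

This design avoids the cost of creating a new Parcel object on every call.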

2.1.2 Parcel.recycle

    public final void recycle() {
        // Free the native parcel's data
        freeBuffer();
        final Parcel[] pool;
        // Choose the pool according to who owns the native parcel
        if (mOwnsNativeParcelObject) {
            pool = sOwnedPool;
        } else {
            mNativePtr = 0;
            pool = sHolderPool;
        }
        synchronized (pool) {
            for (int i = 0; i < POOL_SIZE; i++) {
                if (pool[i] == null) {
                    pool[i] = this;
                    return;
                }
            }
        }
    }

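recycle() puts a Parcel that is no longer in use back into a cache pool so that it can be reused. If the pool is already full, the Parcel is not cached.

The variable mOwnsNativeParcelObject decides whether the Parcel goes into sOwnedPool or sHolderPool. Its value depends on whether a native pointer was supplied when the Parcel was initialized in init().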

    private void init(long nativePtr) {
        if (nativePtr != 0) {
            // Wraps an existing native parcel it does not own: recycled into sHolderPool
            mNativePtr = nativePtr;
            mOwnsNativeParcelObject = false;
        } else {
            // Creates and owns its native parcel: recycled into sOwnedPool
            mNativePtr = nativeCreate();
            mOwnsNativeParcelObject = true;
        }
    }

recycle() adds a Parcel to a pool, and obtain() takes one out of the pool.
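Together, obtain() and recycle() form a small fixed-size object pool. The C++ sketch below assumes that only the pool-selection logic matters. PooledParcel and ParcelPools are hypothetical names, std::mutex replaces Java's synchronized, and an object that does not fit into a full pool is deleted, where Java would leave it to the garbage collector. As in the Java code shown, obtain() reads only from the owned pool.

```cpp
#include <array>
#include <cassert>
#include <mutex>

// Hypothetical stand-in for android.os.Parcel, reduced to the ownership flag.
struct PooledParcel {
    bool ownsNative = true;  // mirrors mOwnsNativeParcelObject
};

constexpr int kPoolSize = 6;  // POOL_SIZE in Parcel.java

class ParcelPools {
public:
    ~ParcelPools() {
        for (PooledParcel* p : owned_) delete p;   // deleting nullptr is a no-op
        for (PooledParcel* p : holder_) delete p;
    }

    // obtain(): reuse a cached object from the owned pool if there is one, else allocate.
    PooledParcel* obtain() {
        std::lock_guard<std::mutex> lock(ownedMutex_);
        for (auto& slot : owned_) {
            if (slot != nullptr) {
                PooledParcel* p = slot;
                slot = nullptr;
                return p;
            }
        }
        return new PooledParcel();
    }

    // recycle(): pick the pool by ownership, and drop the object if that pool is full.
    void recycle(PooledParcel* p) {
        auto& pool = p->ownsNative ? owned_ : holder_;
        std::mutex& m = p->ownsNative ? ownedMutex_ : holderMutex_;
        std::lock_guard<std::mutex> lock(m);
        for (auto& slot : pool) {
            if (slot == nullptr) {
                slot = p;
                return;
            }
        }
        delete p;  // pool full
    }

    // Test helper: number of objects currently cached in one pool.
    int countCached(bool owned) const {
        std::lock_guard<std::mutex> lock(owned ? ownedMutex_ : holderMutex_);
        const auto& pool = owned ? owned_ : holder_;
        int n = 0;
        for (PooledParcel* p : pool) {
            if (p != nullptr) ++n;
        }
        return n;
    }

private:
    std::array<PooledParcel*, kPoolSize> owned_{};   // sOwnedPool
    std::array<PooledParcel*, kPoolSize> holder_{};  // sHolderPool
    mutable std::mutex ownedMutex_, holderMutex_;
};
```

A fixed array with linear scans is acceptable here because the pool is tiny. The point of the design is to avoid repeated allocation, not to make lookups fast.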

2.2 mRemote.transact

2.2.1 mRemote

The AMP constructor assigns mRemote when the AMP object is created, and the AMP object itself is obtained through ActivityManagerNative.getDefault(). The core implementation is:

    static public IActivityManager getDefault() {
        return gDefault.get();
    }

gDefault is a Singleton object, so the singleton pattern is used here.

    public abstract class Singleton<T> {
        private T mInstance;

        protected abstract T create();

        public final T get() {
            synchronized (this) {
                if (mInstance == null) {
                    // On the first call, create() builds the AMP object
                    mInstance = create();
                }
                return mInstance;
            }
        }
    }

get() returns mInstance. Next, let us look at create():

    private static final Singleton<IActivityManager> gDefault = new Singleton<IActivityManager>() {
        protected IActivityManager create() {
            // Look up the service named "activity"
            IBinder b = ServiceManager.getService("activity");
            // Create the AMP object
            IActivityManager am = asInterface(b);
            return am;
        }
    };
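Singleton<T> implements lazy initialization under a lock. Below is a C++ rendering of the same structure. CountingSingleton and Counter are hypothetical and exist only to show that create() runs once. In idiomatic C++ a function-local static would usually be preferred. The explicit mutex is kept here to mirror the Java code.

```cpp
#include <cassert>
#include <mutex>

// Lazy, lock-protected singleton holder, shaped like the Java Singleton<T>.
template <typename T>
class Singleton {
public:
    virtual ~Singleton() { delete mInstance; }

    T* get() {
        std::lock_guard<std::mutex> lock(mLock);
        if (mInstance == nullptr) {
            mInstance = create();  // first call only
        }
        return mInstance;
    }

protected:
    virtual T* create() = 0;

private:
    std::mutex mLock;
    T* mInstance = nullptr;
};

// Hypothetical payload and subclass standing in for gDefault's IActivityManager.
struct Counter {
    int id;
};

class CountingSingleton : public Singleton<Counter> {
public:
    int createCalls = 0;

protected:
    Counter* create() override {
        ++createCalls;
        return new Counter{42};
    }
};
```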

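As explained in the article Binder Series 7: Framework Layer Analysis, ServiceManager.getService("activity") returns a BinderProxy, which is the proxy object for the target service AMS. Through this proxy, the process that hosts AMS can be located. That process is not repeated here. Next, let us look at what asInterface does: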

    public abstract class ActivityManagerNative extends Binder implements IActivityManager {
        static public IActivityManager asInterface(IBinder obj) {
            if (obj == null) {
                return null;
            }
            IActivityManager in = (IActivityManager) obj.queryLocalInterface(descriptor);
            if (in != null) { // null here
                return in;
            }
            // Call the AMP constructor; obj is a BinderProxy (it records the remote AMS handle)
            return new ActivityManagerProxy(obj);
        }

        public ActivityManagerNative() {
            // Call the parent Binder's method to save this interface and its descriptor
            attachInterface(this, descriptor);
        }
        ...
    }

Next, the AMP constructor:

    class ActivityManagerProxy implements IActivityManager {
        public ActivityManagerProxy(IBinder remote) {
            mRemote = remote;
        }
    }

This shows that mRemote is the BinderProxy object that points to the AMS service.
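asInterface() chooses between a direct call and a proxy by asking the Binder object for a local interface. The sketch below models that choice in C++ with hypothetical types. LocalBinder plays the role of AMN after attachInterface(), RemoteProxyBinder plays the role of BinderProxy, and ProxyInterface plays the role of ActivityManagerProxy. Handing ownership of a newly created proxy back through a std::unique_ptr is a choice of this sketch, not of the framework.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct IInterfaceLike {
    virtual ~IInterfaceLike() = default;
};

// Hypothetical Binder base: answers whether it hosts the interface locally.
struct BinderLike {
    virtual ~BinderLike() = default;
    virtual IInterfaceLike* queryLocalInterface(const std::string& descriptor) = 0;
};

// Like AMN after attachInterface(this, descriptor): returns itself on a descriptor match.
struct LocalBinder : BinderLike, IInterfaceLike {
    explicit LocalBinder(std::string d) : descriptor(std::move(d)) {}
    IInterfaceLike* queryLocalInterface(const std::string& d) override {
        return d == descriptor ? this : nullptr;
    }
    std::string descriptor;
};

// Like BinderProxy: the real object lives in another process, so there is no local interface.
struct RemoteProxyBinder : BinderLike {
    IInterfaceLike* queryLocalInterface(const std::string&) override { return nullptr; }
};

// Like ActivityManagerProxy: keeps the remote binder as mRemote.
struct ProxyInterface : IInterfaceLike {
    explicit ProxyInterface(BinderLike* remote) : mRemote(remote) {}
    BinderLike* mRemote;
};

const std::string kDescriptor = "android.app.IActivityManager";

// Returns the local object when there is one, otherwise a new proxy stored in `owned`.
IInterfaceLike* asInterface(BinderLike* obj, std::unique_ptr<ProxyInterface>& owned) {
    if (obj == nullptr) return nullptr;
    if (IInterfaceLike* in = obj->queryLocalInterface(kDescriptor)) {
        return in;  // same process: call the object directly
    }
    owned.reset(new ProxyInterface(obj));  // other process: wrap the binder in a proxy
    return owned.get();
}
```

In the startService path, the caller is in a different process from AMS, so the proxy branch is taken and mRemote ends up holding the BinderProxy.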

2.2.2 mRemote.transact

In mRemote.transact(START_SERVICE_TRANSACTION, data, reply, 0), data holds six items: descriptor, caller, intent, resolvedType, callingPackage, and userId.

    final class BinderProxy implements IBinder {
        public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
            // Check whether the Parcel is larger than 800 KB
            Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
            // [see 2.3]
            return transactNative(code, data, reply, flags);
        }
    }

transactNative is a native method. Through JNI, it calls android_os_BinderProxy_transact.

2.3 android_os_BinderProxy_transact

[-> android_util_Binder.cpp]

    static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
            jint code, jobject dataObj, jobject replyObj, jint flags)
    {
        if (dataObj == NULL) {
            jniThrowNullPointerException(env, NULL);
            return JNI_FALSE;
        }
        ...
        // Convert the Java Parcels into native Parcels
        Parcel* data = parcelForJavaObject(env, dataObj);
        Parcel* reply = parcelForJavaObject(env, replyObj);
        // gBinderProxyOffsets.mObject holds the new BpBinder(handle) object
        IBinder* target = (IBinder*) env->GetLongField(obj, gBinderProxyOffsets.mObject);
        if (target == NULL) {
            jniThrowException(env, "java/lang/IllegalStateException", "Binder has been finalized!");
            return JNI_FALSE;
        }
        ...
        if (kEnableBinderSample) {
            time_binder_calls = should_time_binder_calls();
            if (time_binder_calls) {
                start_millis = uptimeMillis();
            }
        }
        // This is BpBinder::transact() [see 2.4]
        status_t err = target->transact(code, *data, reply, flags);
        if (kEnableBinderSample) {
            if (time_binder_calls) {
                conditionally_log_binder_call(start_millis, target, code);
            }
        }
        if (err == NO_ERROR) {
            return JNI_TRUE;
        } else if (err == UNKNOWN_TRANSACTION) {
            return JNI_FALSE;
        }
        // Finally, throw the Exception that matches the transact result
        signalExceptionForError(env, obj, err, true, data->dataSize());
        return JNI_FALSE;
    }

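kEnableBinderSample is a debug switch. When it is enabled, the code collects statistics on how long a single transact takes on the main thread. Next, we move into BpBinder in the native layer.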

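The following exceptions may be thrown here:

- NullPointerException: thrown when dataObj is null;

- IllegalStateException: thrown when the BpBinder object is null;

- signalExceptionForError(): throws the exception that matches the transact result.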
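The control flow of android_os_BinderProxy_transact reduces to a small decision function. The sketch below encodes only the branches shown in the code above. The Status and Outcome enums are hypothetical. The real status values, and the Java exception that signalExceptionForError() picks for each one, are not modeled.

```cpp
#include <cassert>

// Hypothetical status values; the real ones are status_t constants.
enum class Status { NoError, UnknownTransaction, DeadObject, FailedTransaction };

// What the JNI function ends up doing, per the excerpt above.
enum class Outcome { ThrowNullPointer, ThrowIllegalState, ReturnTrue, ReturnFalse, SignalException };

// The null checks run before the native transact; the status is mapped after it.
Outcome mapTransact(bool dataIsNull, bool targetIsNull, Status err) {
    if (dataIsNull) return Outcome::ThrowNullPointer;      // dataObj == NULL
    if (targetIsNull) return Outcome::ThrowIllegalState;  // "Binder has been finalized!"
    if (err == Status::NoError) return Outcome::ReturnTrue;
    if (err == Status::UnknownTransaction) return Outcome::ReturnFalse;
    return Outcome::SignalException;  // signalExceptionForError() chooses the exception
}
```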

2.4 BpBinder.transact

[-> BpBinder.cpp]

    status_t BpBinder::transact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
    {
        if (mAlive) {
            // [see 2.5]
            status_t status = IPCThreadState::self()->transact(mHandle, code, data, reply, flags);
            if (status == DEAD_OBJECT) mAlive = 0;
            return status;
        }
        return DEAD_OBJECT;
    }

IPCThreadState::self() is a per-thread singleton, which guarantees that each thread has exactly one instance.
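In modern C++, a per-thread singleton can be written with thread_local. This is a swapped-in technique for illustration: the real IPCThreadState::self() manages its instance through pthread thread-specific storage rather than thread_local. ThreadState below is a hypothetical stand-in.

```cpp
#include <cassert>
#include <thread>

// Hypothetical stand-in for IPCThreadState: one instance per thread.
class ThreadState {
public:
    static ThreadState* self() {
        thread_local ThreadState instance;  // constructed on first use in each thread
        return &instance;
    }
    int pendingCommands = 0;  // per-thread data, like mIn/mOut in the real class

private:
    ThreadState() = default;
};
```

Keeping the state per thread means two threads of the same process can each have a transaction in flight without sharing mIn/mOut buffers.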

2.5 IPC.transact

[-> IPCThreadState.cpp]

    status_t IPCThreadState::transact(int32_t handle, uint32_t code, const Parcel& data,
            Parcel* reply, uint32_t flags)
    {
        status_t err = data.errorCheck(); // check the data for errors
        flags |= TF_ACCEPT_FDS;
        ...
        if (err == NO_ERROR) {
            // Transfer the data [see 2.6]
            err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
        }
        if (err != NO_ERROR) {
            if (reply) reply->setError(err);
            return (mLastError = err);
        }
        if ((flags & TF_ONE_WAY) == 0) {
            if (reply) {
                // Wait for the response [see 2.7]
                err = waitForResponse(reply);
            }
            ...
        }
        ...
        return err;
    }

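The main steps of transact:

- First, writeTransactionData() writes the data into mOut; at this point mIn has no data yet;

- Then waitForResponse() loops until a reply message arrives:
  - talkWithDriver() interacts with the driver, and any reply message it receives is written into mIn;
  - once mIn has data, the action that matches each response code is carried out.

Both mOut and mIn are Parcel objects.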

2.6 IPC.writeTransactionData

[-> IPCThreadState.cpp]

    status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
            int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
    {
        binder_transaction_data tr;
        tr.target.ptr = 0;
        tr.target.handle = handle; // handle refers to AMS
        tr.code = code;            // START_SERVICE_TRANSACTION
        tr.flags = binderFlags;    // TF_ACCEPT_FDS (AMP passed 0; transact() added this bit)
        tr.cookie = 0;
        tr.sender_pid = 0;
        tr.sender_euid = 0;
        const status_t err = data.errorCheck();
        if (err == NO_ERROR) {
            // data holds the startService arguments
            tr.data_size = data.ipcDataSize();   // mDataSize
            tr.data.ptr.buffer = data.ipcData(); // mData pointer
            tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t); // mObjectsSize
            tr.data.ptr.offsets = data.ipcObjects(); // mObjects pointer
        }
        ...
        mOut.writeInt32(cmd);        // cmd = BC_TRANSACTION
        mOut.write(&tr, sizeof(tr)); // write the binder_transaction_data
        return NO_ERROR;
    }
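writeTransactionData() places only a command word and a descriptor struct into mOut. The payload stays where it is and is copied later by the driver. The C++ sketch below shows that packing with a simplified, hypothetical struct (ToyTransactionData) and a made-up command value. The real binder_transaction_data has a different layout, and BC_TRANSACTION is an ioctl-style encoded constant.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified descriptor: only pointers and sizes, never the payload bytes.
struct ToyTransactionData {
    uint32_t handle;        // target.handle: which remote object
    uint32_t code;          // e.g. START_SERVICE_TRANSACTION
    uint32_t flags;         // transaction flags
    uint64_t data_size;     // payload length in bytes
    uint64_t buffer;        // user-space address of the payload (data.ptr.buffer)
    uint64_t offsets_size;  // length of the offsets array in bytes
    uint64_t offsets;       // user-space address of the offsets array (data.ptr.offsets)
};

constexpr uint32_t kToyBcTransaction = 1;  // hypothetical; not the real BC_TRANSACTION value

// Appends [cmd][struct] to the command buffer, like mOut.writeInt32() + mOut.write().
void writeTransaction(std::vector<unsigned char>& out, uint32_t cmd, const ToyTransactionData& tr) {
    const auto* c = reinterpret_cast<const unsigned char*>(&cmd);
    out.insert(out.end(), c, c + sizeof cmd);
    const auto* t = reinterpret_cast<const unsigned char*>(&tr);
    out.insert(out.end(), t, t + sizeof tr);
}
```

Because only addresses travel in mOut, the copy into the target process happens once, inside the driver (the copy_from_user calls in binder_transaction()).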

2.7 IPC.waitForResponse

    status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    {
        int32_t cmd;
        int32_t err;
        while (1) {
            if ((err = talkWithDriver()) < NO_ERROR) break; // [see 2.8]
            err = mIn.errorCheck();
            // On error, leave the loop; the error is eventually returned to transact
            if (err < NO_ERROR) break;
            // mDataSize > mDataPos means data is available; otherwise loop again
            if (mIn.dataAvail() == 0) continue;
            cmd = mIn.readInt32();
            switch (cmd) {
            case BR_TRANSACTION_COMPLETE:
                if (!reply && !acquireResult) goto finish;
                break;
            ...
            default:
                err = executeCommand(cmd); // [see 2.9]
                if (err != NO_ERROR) goto finish;
                break;
            }
        }
    finish:
        if (err != NO_ERROR) {
            if (reply) reply->setError(err);
        }
        return err;
    }

The place where the code actually interacts with the binder driver is talkWithDriver.
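The loop in waitForResponse() keeps consuming return codes until the call is complete. Below is a simplified C++ sketch. The Br enum mirrors a few real BR_* names (BR_NOOP, BR_TRANSACTION_COMPLETE, BR_REPLY, BR_DEAD_REPLY), but its values are arbitrary, and a deque replaces the repeated talkWithDriver() calls. The sketch assumes, as in AOSP, that BR_REPLY and BR_DEAD_REPLY end the loop; those cases are elided in the excerpt above. When the deque runs dry, the sketch returns Incomplete, whereas the real code would block in the driver.

```cpp
#include <cassert>
#include <deque>

// Hypothetical return codes named after real BR_* commands; the values are arbitrary.
enum class Br { Noop, TransactionComplete, Reply, DeadReply };

enum class Result { Ok, DeadObject, Incomplete };

// `incoming` plays the role of what successive talkWithDriver() calls put into mIn.
// `expectReply` corresponds to reply != nullptr; *consumed counts processed commands.
Result waitForResponse(std::deque<Br>& incoming, bool expectReply, int* consumed) {
    *consumed = 0;
    while (!incoming.empty()) {
        Br cmd = incoming.front();
        incoming.pop_front();
        ++*consumed;
        switch (cmd) {
            case Br::TransactionComplete:
                // The driver accepted our BC_TRANSACTION; a call without a reply is done.
                if (!expectReply) return Result::Ok;
                break;
            case Br::Reply:
                return Result::Ok;  // the real code copies the reply data into `reply` here
            case Br::DeadReply:
                return Result::DeadObject;
            case Br::Noop:
            default:
                break;  // the real code passes other commands to executeCommand()
        }
    }
    return Result::Incomplete;  // real code: call talkWithDriver() again and block
}
```

For a synchronous startService call, the client therefore sees BR_TRANSACTION_COMPLETE first and keeps waiting until BR_REPLY arrives.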

2.8 IPC.talkWithDriver

At this point mOut has data and mIn does not. doReceive defaults to true.

    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        binder_write_read bwr;
        const bool needRead = mIn.dataPosition() >= mIn.dataSize();
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;
        bwr.write_size = outAvail;
        bwr.write_buffer = (uintptr_t)mOut.data();
        if (doReceive && needRead) {
            // Fill in the receive buffer; data returned by the driver is written into mIn
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
            bwr.read_size = 0;
            bwr.read_buffer = 0;
        }
        // Return immediately when there is nothing to write and nothing to read
        if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;
        bwr.write_consumed = 0;
        bwr.read_consumed = 0;
        status_t err;
        do {
            // ioctl performs the read/write; the syscall enters the Binder driver's
            // binder_ioctl [see 3.1]. It is retried if interrupted (EINTR).
            if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
                err = NO_ERROR;
            else
                err = -errno;
            ...
        } while (err == -EINTR);
        if (err >= NO_ERROR) {
            if (bwr.write_consumed > 0) {
                if (bwr.write_consumed < mOut.dataSize())
                    mOut.remove(0, bwr.write_consumed);
                else
                    mOut.setDataSize(0);
            }
            if (bwr.read_consumed > 0) {
                mIn.setDataSize(bwr.read_consumed);
                mIn.setDataPosition(0);
            }
            return NO_ERROR;
        }
        return err;
    }

binder_write_read is the structure used to exchange data with the Binder device. The ioctl on mDriverFD is the step where data is actually read from and written to the Binder driver.
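The subtle part of talkWithDriver() is the bookkeeping around the ioctl: how much to offer for writing, whether to ask for a read, and how to trim mOut and reset mIn afterwards. The C++ sketch below reproduces those rules with hypothetical ToyBwr and ToyBuffer types and replaces the real ioctl with a callback. The EINTR retry loop is omitted, and the 256-byte capacity is an assumption of the sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mirror of binder_write_read: requested sizes and bytes actually consumed.
struct ToyBwr {
    size_t write_size = 0, write_consumed = 0;
    size_t read_size = 0, read_consumed = 0;
};

// Hypothetical stand-in for a Parcel used as a raw byte buffer.
struct ToyBuffer {
    std::vector<unsigned char> bytes;  // data()/dataSize()
    size_t pos = 0;                    // dataPosition()
    size_t capacity = 256;             // dataCapacity() (assumed value)
};

// The rules of talkWithDriver() around the ioctl. `fakeIoctl` plays the driver:
// it sets write_consumed / read_consumed and may fill `in.bytes`.
template <typename FakeIoctl>
void talkWithDriver(ToyBuffer& out, ToyBuffer& in, bool doReceive, FakeIoctl fakeIoctl) {
    ToyBwr bwr;
    const bool needRead = in.pos >= in.bytes.size();  // everything in mIn already processed
    bwr.write_size = (!doReceive || needRead) ? out.bytes.size() : 0;
    bwr.read_size = (doReceive && needRead) ? in.capacity : 0;
    if (bwr.write_size == 0 && bwr.read_size == 0) return;  // nothing to do

    fakeIoctl(bwr, in);

    if (bwr.write_consumed > 0) {
        if (bwr.write_consumed < out.bytes.size()) {
            // mOut.remove(0, write_consumed): keep the part the driver did not take
            out.bytes.erase(out.bytes.begin(),
                            out.bytes.begin() + static_cast<std::ptrdiff_t>(bwr.write_consumed));
        } else {
            out.bytes.clear();  // mOut.setDataSize(0)
        }
    }
    if (bwr.read_consumed > 0) {
        in.bytes.resize(bwr.read_consumed);  // mIn.setDataSize(read_consumed)
        in.pos = 0;                          // mIn.setDataPosition(0)
    }
}
```

The write-then-read shape is what allows a single ioctl both to send BC_TRANSACTION and to collect the driver's first return codes.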

2.9 IPC.executeCommand

add Service

…

3. Binder driver

3.1 binder_ioctl

The cmd argument passed in from [Section 2.8] is BINDER_WRITE_READ.

    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        int ret;
        struct binder_proc *proc = filp->private_data;
        struct binder_thread *thread;
        // If binder_stop_on_user_error >= 2, the thread joins the wait queue and sleeps
        ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
        ...
        binder_lock(__func__);
        // Look up the binder_thread in binder_proc. If the current thread already belongs
        // to proc's threads, return it directly; otherwise create a binder_thread and add
        // the current thread to proc.
        thread = binder_get_thread(proc);
        if (thread == NULL) {
            ret = -ENOMEM;
            goto err;
        }
        switch (cmd) {
        case BINDER_WRITE_READ:
            // [see 3.2]
            ret = binder_ioctl_write_read(filp, cmd, arg, thread);
            if (ret)
                goto err;
            break;
        ...
        default:
            ret = -EINVAL;
            goto err;
        }
        ret = 0;
    err:
        if (thread)
            thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;
        binder_unlock(__func__);
        // If binder_stop_on_user_error >= 2, the thread joins the wait queue and sleeps
        wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
        return ret;
    }

- A return value of -ENOMEM means there is not enough memory to create the binder_thread object;

- A return value of -EINVAL means the cmd argument is invalid.

3.2 binder_ioctl_write_read

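Here arg points to a binder_write_read structure. The mOut data is in write_buffer, so write_size > 0. Because mIn is still empty and doReceive is true, talkWithDriver() also sets read_size to mIn's capacity, so the read half runs after the write half. The read half is elided below, and only the write path is followed.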

    static int binder_ioctl_write_read(struct file *filp,
            unsigned int cmd, unsigned long arg,
            struct binder_thread *thread)
    {
        int ret = 0;
        struct binder_proc *proc = filp->private_data;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;
        struct binder_write_read bwr;
        if (size != sizeof(struct binder_write_read)) {
            ret = -EINVAL;
            goto out;
        }
        // Copy the user-space bwr structure into kernel space
        if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
            ret = -EFAULT;
            goto out;
        }
        if (bwr.write_size > 0) {
            // [see 3.3]
            ret = binder_thread_write(proc, thread,
                    bwr.write_buffer,
                    bwr.write_size,
                    &bwr.write_consumed);
            // On failure, copy the kernel bwr back to user space and leave the function
            if (ret < 0) {
                bwr.read_consumed = 0;
                if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                    ret = -EFAULT;
                goto out;
            }
        }
        if (bwr.read_size > 0) {
            ...
        }
        if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
            ret = -EFAULT;
            goto out;
        }
    out:
        return ret;
    }

3.3 binder_thread_write

    static int binder_thread_write(struct binder_proc *proc,
            struct binder_thread *thread,
            binder_uintptr_t binder_buffer, size_t size,
            binder_size_t *consumed)
    {
        uint32_t cmd;
        void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
        void __user *ptr = buffer + *consumed;
        void __user *end = buffer + size;
        while (ptr < end && thread->return_error == BR_OK) {
            // Copy the cmd from user space; here it is BC_TRANSACTION
            if (get_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            switch (cmd) {
            case BC_TRANSACTION: {
                struct binder_transaction_data tr;
                // Copy the binder_transaction_data from user space
                if (copy_from_user(&tr, ptr, sizeof(tr)))
                    return -EFAULT;
                ptr += sizeof(tr);
                // [see 3.4]
                binder_transaction(proc, thread, &tr, cmd == BC_REPLY);
                break;
            }
            ...
            }
            *consumed = ptr - buffer;
        }
        return 0;
    }

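The function repeatedly reads commands from the buffer that binder_buffer points to and processes each one. For BC_TRANSACTION, the payload that follows the command is a binder_transaction_data.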
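binder_thread_write() walks a stream of [command][payload] records and advances *consumed only after each complete command. Below is a user-space C++ sketch of that parsing loop. The command values, the 8-byte payload size and the name threadWrite are hypothetical. Failures of get_user/copy_from_user are modeled as bounds checks.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command codes; real BC_* values are ioctl-style encodings.
constexpr uint32_t kCmdTransaction = 1;  // followed by kTxSize payload bytes
constexpr uint32_t kCmdNoPayload = 2;    // a command that carries no payload
constexpr size_t kTxSize = 8;            // hypothetical payload size

// Parses buf[*consumed, buf.size()) as [cmd][payload]... records.
// Returns 0 on success, -1 on a truncated or unknown command.
int threadWrite(const std::vector<unsigned char>& buf, size_t* consumed, int* transactions) {
    size_t ptr = *consumed;
    const size_t end = buf.size();
    while (ptr < end) {
        uint32_t cmd;
        if (end - ptr < sizeof cmd) return -1;  // get_user() would fail
        std::memcpy(&cmd, buf.data() + ptr, sizeof cmd);
        ptr += sizeof cmd;
        switch (cmd) {
            case kCmdTransaction:
                if (end - ptr < kTxSize) return -1;  // copy_from_user() would fail
                ptr += kTxSize;  // the real code calls binder_transaction() here
                ++*transactions;
                break;
            case kCmdNoPayload:
                break;
            default:
                return -1;
        }
        *consumed = ptr;  // progress is recorded only after a complete command
    }
    return 0;
}
```

Recording progress per command is what lets talkWithDriver() trim exactly the consumed bytes from mOut afterwards.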

3.4 binder_transaction

Because BC_TRANSACTION is being sent, reply = 0.

    static void binder_transaction(struct binder_proc *proc,
            struct binder_thread *thread,
            struct binder_transaction_data *tr, int reply)
    {
        struct binder_transaction *t;
        struct binder_work *tcomplete;
        binder_size_t *offp, *off_end;
        binder_size_t off_min;
        struct binder_proc *target_proc;
        struct binder_thread *target_thread = NULL;
        struct binder_node *target_node = NULL;
        struct list_head *target_list;
        wait_queue_head_t *target_wait;
        struct binder_transaction *in_reply_to = NULL;
        if (reply) {
            ...
        } else {
            // Find the target process: handle -> binder_ref -> binder_node -> binder_proc
            if (tr->target.handle) {
                struct binder_ref *ref;
                ref = binder_get_ref(proc, tr->target.handle);
                target_node = ref->node;
            }
            target_proc = target_node->proc;
            ...
        }
        if (target_thread) {
            e->to_thread = target_thread->pid; // e: transaction log entry, declaration elided
            target_list = &target_thread->todo;
            target_wait = &target_thread->wait;
        } else {
            // On first execution target_thread is NULL
            target_list = &target_proc->todo;
            target_wait = &target_proc->wait;
        }
        t = kzalloc(sizeof(*t), GFP_KERNEL);
        tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);
        // For a non-oneway call, save the current thread in the transaction's from field
        if (!reply && !(tr->flags & TF_ONE_WAY))
            t->from = thread;
        else
            t->from = NULL;
        t->sender_euid = task_euid(proc->tsk);
        t->to_proc = target_proc; // the target process is system_server
        t->to_thread = target_thread;
        t->code = tr->code;       // code = START_SERVICE_TRANSACTION
        t->flags = tr->flags;     // TF_ACCEPT_FDS, as set in IPCThreadState::transact
        t->priority = task_nice(current);
        // Allocate the buffer from the target process's memory
        t->buffer = binder_alloc_buf(target_proc, tr->data_size,
                tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));
        t->buffer->allow_user_free = 0;
        t->buffer->transaction = t;
        t->buffer->target_node = target_node;
        if (target_node)
            binder_inc_node(target_node, 1, 0, NULL);
        offp = (binder_size_t *)(t->buffer->data + ALIGN(tr->data_size, sizeof(void *)));
        // Copy ptr.buffer and ptr.offsets of the user-space binder_transaction_data into the kernel
        copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)tr->data.ptr.buffer, tr->data_size);
        copy_from_user(offp, (const void __user *)(uintptr_t)tr->data.ptr.offsets, tr->offsets_size);
        off_end = (void *)offp + tr->offsets_size;
        for (; offp < off_end; offp++) {
            struct flat_binder_object *fp;
            fp = (struct flat_binder_object *)(t->buffer->data + *offp);
            off_min = *offp + sizeof(struct flat_binder_object);
            switch (fp->type) {
            ...
            case BINDER_TYPE_HANDLE:
            case BINDER_TYPE_WEAK_HANDLE: {
                struct binder_ref *ref = binder_get_ref(proc, fp->handle);
                if (ref->node->proc == target_proc) {
                    if (fp->type == BINDER_TYPE_HANDLE)
                        fp->type = BINDER_TYPE_BINDER;
                    else
                        fp->type = BINDER_TYPE_WEAK_BINDER;
                    fp->binder = ref->node->ptr;
                    fp->cookie = ref->node->cookie;
                    binder_inc_node(ref->node, fp->type == BINDER_TYPE_BINDER, 0, NULL);
                } else {
                    struct binder_ref *new_ref;
                    new_ref = binder_get_ref_for_node(target_proc, ref->node);
                    fp->handle = new_ref->desc;
                    binder_inc_ref(new_ref, fp->type == BINDER_TYPE_HANDLE, NULL);
                    trace_binder_transaction_ref_to_ref(t, ref, new_ref);
                }
            } break;
            ...
            default:
                return_error = BR_FAILED_REPLY;
                goto err_bad_object_type;
            }
        }
        if (reply) {
            binder_pop_transaction(target_thread, in_reply_to);
        } else if (!(t->flags & TF_ONE_WAY)) {
            t->need_reply = 1;
            t->from_parent = thread->transaction_stack;
            thread->transaction_stack = t;
        } else {
            if (target_node->has_async_transaction) {
                target_list = &target_node->async_todo;
                target_wait = NULL;
            } else
                target_node->has_async_transaction = 1;
        }
        t->work.type = BINDER_WORK_TRANSACTION;
        list_add_tail(&t->work.entry, target_list);
        tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
        list_add_tail(&tcomplete->entry, &thread->todo);
        if (target_wait)
            wake_up_interruptible(target_wait);
        return;
    }
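The handle handling in binder_transaction() is what makes Binder references meaningful across processes. A handle is only valid inside the process that holds it, so the driver rewrites it for the receiver. The C++ sketch below models only the BINDER_TYPE_HANDLE branch shown above. Node and Proc are hypothetical types standing in for binder_node, binder_ref and binder_proc. Reference counting, weak references and locking are left out, and the pids are illustrative.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical binder_node: a Binder object, owned by exactly one process.
struct Node {
    int ownerPid;
    uint64_t ptr;  // the owner's local address for the object
};

// Hypothetical binder_proc with its handle table (its binder_refs).
struct Proc {
    explicit Proc(int p) : pid(p) {}
    int pid;
    std::map<uint32_t, Node*> refsByHandle;  // handle -> node
    uint32_t nextHandle = 1;                 // handle 0 is reserved for servicemanager

    // Like binder_get_ref_for_node(): reuse an existing ref or create a new one.
    uint32_t refForNode(Node* n) {
        for (const auto& kv : refsByHandle) {
            if (kv.second == n) return kv.first;
        }
        refsByHandle[nextHandle] = n;
        return nextHandle++;
    }
};

// The target lookup: handle -> ref -> node -> owning process.
int targetPid(const Proc& p, uint32_t handle) { return p.refsByHandle.at(handle)->ownerPid; }

// Result of translating one embedded object: a local pointer or a handle for the receiver.
struct Translated {
    bool isLocalBinder;  // true: BINDER_TYPE_BINDER with node->ptr; false: a new handle
    uint64_t value;
};

// BINDER_TYPE_HANDLE branch: turn a handle valid in `from` into something valid in `to`.
Translated translateHandle(Proc& from, Proc& to, uint32_t handle) {
    Node* node = from.refsByHandle.at(handle);  // binder_get_ref(proc, fp->handle)
    if (node->ownerPid == to.pid) {
        return {true, node->ptr};  // the receiver owns the object: give it the local pointer
    }
    return {false, to.refForNode(node)};  // otherwise: a handle in the receiver's table
}
```

Usage: if system_server sends an app's own Binder back to that app, the app receives a local pointer. If an app forwards its AMS handle to a third process, the third process gets its own handle for the same node.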

To be continued...

Original article: http://gityuan.com/2016/09/04/binder-start-service/
