Kubernetes Cluster HA in a Private Cloud


For more in-depth articles on Kubernetes, see my blog home page at oschina/WaltonWang.

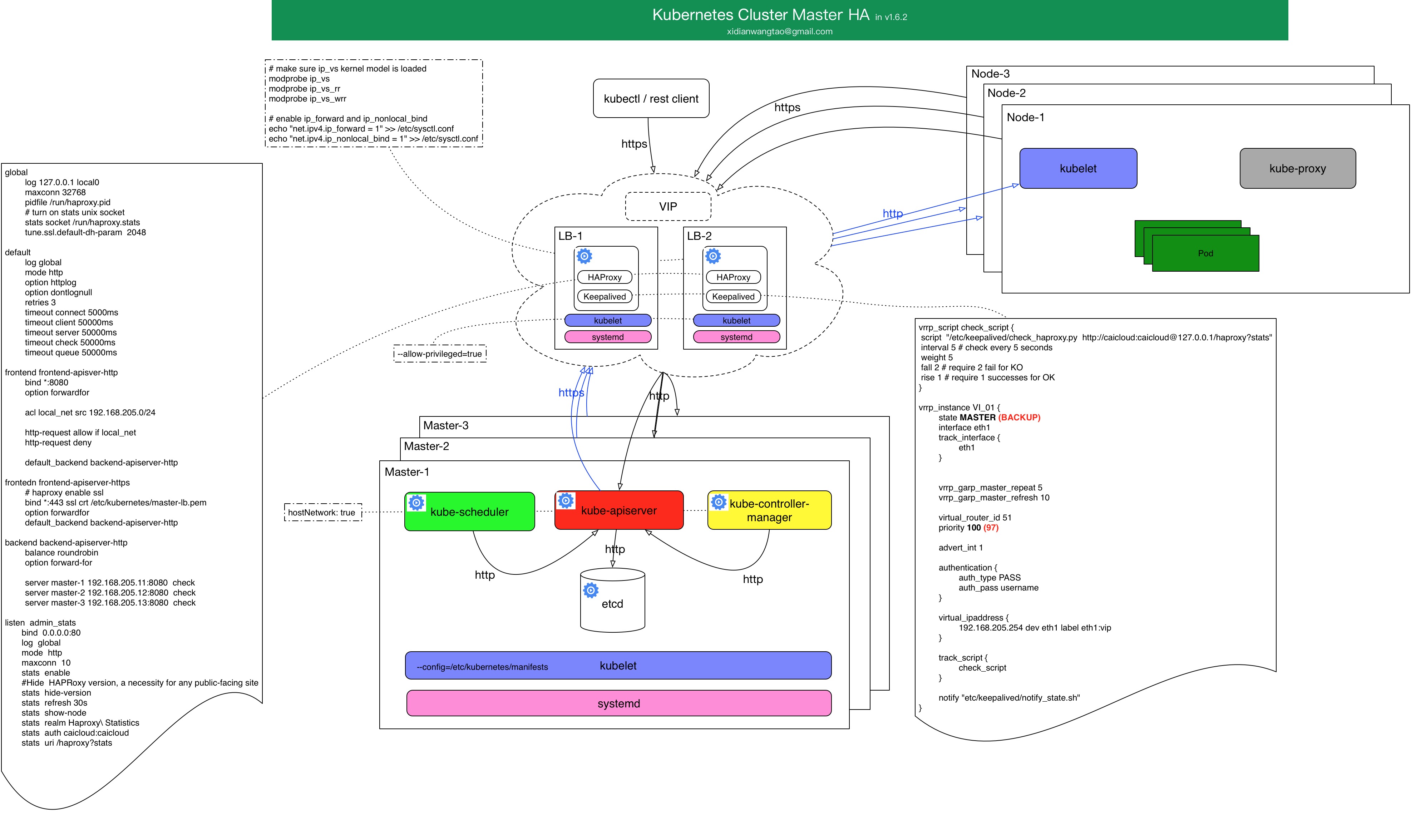

Kubernetes Master HA architecture diagram

Configuration and notes

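All components can be started and managed as kubelet static pods, and the kubelet static pod mechanism keeps each component on its host available. Note that the kubelet must be started with --allow-privileged=true. The availability of the kubelet itself, which manages the static pods, is handled by systemd.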

Alternatively, you can deploy these components directly as processes and let systemd manage them. (This is the approach we chose, to reduce complexity.)

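In the diagram above, etcd is co-located with the Masters: each of the three Master nodes runs one etcd member, and the three members form a single cluster. (If resources allow, deploying the etcd cluster separately from the Master nodes is recommended.)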
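To make the three-member choice concrete, here is a small sketch of the majority arithmetic behind a Raft-based cluster such as etcd. The function names are illustrative and not part of any etcd API.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for size in (1, 3, 4, 5):
        print(f"{size} members: quorum={quorum(size)}, "
              f"tolerated failures={fault_tolerance(size)}")
```

With three members the cluster keeps working after losing one Master. A fourth member would not raise that tolerance, which is why odd cluster sizes are the usual choice.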

The apiserver, controller-manager and scheduler on each Master all use hostNetwork. The controller-manager and scheduler connect to the apiserver on their own node through localhost and never connect to the apiservers on the other two Master nodes.

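External clients such as rest-client, kubectl, kubelet and kube-proxy connect over TLS with certificates. TLS is terminated at the LB node, and the LB then forwards plain HTTP requests to an apiserver instance chosen by its balancing policy (round robin, RR).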
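The round-robin policy can be pictured with a short sketch. It is a simplified model, not how haproxy is implemented: the `RoundRobin` class and the `is_up` callback are hypothetical, and the backend addresses are the three apiserver entries from the haproxy configuration in this post.

```python
from typing import Callable, List, Optional


class RoundRobin:
    """Hand out backends in rotation, skipping ones reported as down."""

    def __init__(self, backends: List[str]) -> None:
        self.backends = list(backends)
        self.next_index = 0

    def pick(self, is_up: Callable[[str], bool] = lambda _b: True) -> Optional[str]:
        # Look at each backend at most once per call.
        for _ in range(len(self.backends)):
            backend = self.backends[self.next_index]
            self.next_index = (self.next_index + 1) % len(self.backends)
            if is_up(backend):
                return backend
        return None  # every backend failed its health check


APISERVERS = [
    "192.168.205.11:8080",
    "192.168.205.12:8080",
    "192.168.205.13:8080",
]

if __name__ == "__main__":
    lb = RoundRobin(APISERVERS)
    for _ in range(4):
        print(lb.pick())
```

Successive requests go to master-1, master-2, master-3 and then wrap around. A backend whose health check fails is simply skipped until it recovers.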

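Access from the apiserver to the kubelet server and kube-proxy server works similarly: HTTPS goes to the LB, where TLS is terminated, and plain HTTP requests are then sent to the kubelet/kube-proxy server on the target node.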

HA for the apiserver is provided by the classic haproxy + keepalived combination, and the cluster exposes a VIP to the outside.
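Which LB node holds the VIP follows from VRRP priorities adjusted by a tracked health-check script. The sketch below models only that arithmetic, using the values from the keepalived configuration in this post (priority 100 on the master, 97 on the backup, script weight 5). The node names `lb-1` and `lb-2` and the function names are made up for illustration, and real keepalived behaviour also depends on preemption settings and timers that are not modelled here.

```python
from typing import Dict, Tuple


def effective_priority(base: int, weight: int, check_ok: bool) -> int:
    """Priority after applying a tracked script's weight.

    A positive weight is added while the check succeeds; a negative
    weight is subtracted while it fails. Weight 0 is not modelled here
    (keepalived then moves the instance to FAULT instead).
    """
    if weight > 0 and check_ok:
        return base + weight
    if weight < 0 and not check_ok:
        return base + weight
    return base


def vip_holder(nodes: Dict[str, Tuple[int, bool]], weight: int) -> str:
    """Return the node with the highest effective priority."""
    return max(
        nodes,
        key=lambda name: effective_priority(nodes[name][0], weight, nodes[name][1]),
    )


if __name__ == "__main__":
    healthy = {"lb-1": (100, True), "lb-2": (97, True)}
    haproxy_down_on_lb1 = {"lb-1": (100, False), "lb-2": (97, True)}
    print(vip_holder(healthy, weight=5))              # 105 vs 102
    print(vip_holder(haproxy_down_on_lb1, weight=5))  # 100 vs 102
```

The design point is that the weight (5) is larger than the gap between the two base priorities (3), so a failed haproxy check on the master is enough to let the backup win the election.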

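HA for the controller-manager and scheduler relies on their built-in leader election (--leader-elect=true). Of the three controller-manager instances and the three scheduler instances, exactly one of each is the leader and does the actual work; when a leader fails, a new leader is elected to take over.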
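The idea behind this kind of leader election can be sketched as a lease that the leader must keep renewing. This is an in-memory toy model, not the Kubernetes implementation: the class names, the explicit `now` argument and the 15-second lease duration are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    holder: Optional[str] = None
    renewed_at: float = 0.0


class LeaderElector:
    """Toy lease: one holder at a time, takeover only after expiry."""

    def __init__(self, lease_duration: float = 15.0) -> None:
        self.lease_duration = lease_duration
        self.lease = Lease()

    def try_acquire_or_renew(self, candidate: str, now: float) -> bool:
        expired = (
            self.lease.holder is None
            or now - self.lease.renewed_at > self.lease_duration
        )
        if self.lease.holder == candidate or expired:
            # The current leader renews, or a candidate takes a free/expired lease.
            self.lease = Lease(holder=candidate, renewed_at=now)
            return True
        return False  # somebody else holds a still-valid lease


if __name__ == "__main__":
    elector = LeaderElector(lease_duration=15.0)
    print(elector.try_acquire_or_renew("master-1", now=0))   # becomes leader
    print(elector.try_acquire_or_renew("master-2", now=5))   # stays standby
    print(elector.try_acquire_or_renew("master-2", now=30))  # takes over after expiry
```

Standby instances keep calling `try_acquire_or_renew` and only start doing work once the call succeeds, which is what limits each component to a single active instance.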

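To summarize, this HA scheme uses haproxy + keepalived for apiserver load balancing and HA, the controller-manager and scheduler achieve HA through their own leader election, and etcd achieves HA by using the Raft protocol to keep the data of the etcd cluster consistent.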
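The keepalived configuration in this post tracks haproxy through a script, /etc/keepalived/check_haproxy.py, whose contents are not shown. The following is only a guess at what such a script might do: fetch the haproxy stats page and exit 0 on success or 1 on failure, since keepalived treats a zero exit status as a passing check. The helper names are hypothetical.

```python
import base64
import http.client
import sys
import urllib.request
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit


def split_credentials(url: str) -> Tuple[str, Optional[str]]:
    """Strip user:password from the URL and return it as a Basic auth header."""
    parts = urlsplit(url)
    header = None
    if parts.username is not None:
        raw = f"{parts.username}:{parts.password or ''}".encode()
        header = "Basic " + base64.b64encode(raw).decode()
    host = parts.hostname or ""
    if parts.port is not None:
        host = f"{host}:{parts.port}"
    clean = urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))
    return clean, header


def haproxy_is_healthy(url: str, timeout: float = 2.0) -> bool:
    """Return True if the stats page answers with HTTP 200."""
    clean, header = split_credentials(url)
    request = urllib.request.Request(clean)
    if header:
        request.add_header("Authorization", header)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts and HTTP error statuses.
        return False


if __name__ == "__main__" and len(sys.argv) > 1:
    # keepalived reads the exit status: 0 means OK, anything else means KO.
    sys.exit(0 if haproxy_is_healthy(sys.argv[1]) else 1)
```

Invoked as in the configuration, the script would receive the stats URL with embedded credentials as its first argument.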

A keepalived configuration for reference:

    vrrp_script check_script {
        script "/etc/keepalived/check_haproxy.py http://caicloud:caicloud@127.0.0.1/haproxy?stats"
        interval 5    # check every 5 seconds
        weight 5
        fall 2        # require 2 failures for KO
        rise 1        # require 1 success for OK
    }

    vrrp_instance VI_01 {
        state MASTER          # BACKUP on the standby LB node
        interface eth1
        track_interface {
            eth1
        }
        vrrp_garp_master_repeat 5
        vrrp_garp_master_refresh 10
        virtual_router_id 51
        priority 100          # 97 on the standby LB node
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass username
        }
        virtual_ipaddress {
            192.168.205.254 dev eth1 label eth1:vip
        }
        track_script {
            check_script
        }
        notify "/etc/keepalived/notify_state.sh"
    }

An haproxy configuration for reference:

    global
        log 127.0.0.1 local0
        maxconn 32768
        pidfile /run/haproxy.pid
        # turn on stats unix socket
        stats socket /run/haproxy.stats
        tune.ssl.default-dh-param 2048

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        retries 3
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms
        timeout check 50000ms
        timeout queue 50000ms

    frontend frontend-apiserver-http
        bind *:8080
        option forwardfor
        acl local_net src 192.168.205.0/24
        http-request allow if local_net
        http-request deny
        default_backend backend-apiserver-http

    frontend frontend-apiserver-https
        # haproxy enable ssl
        bind *:443 ssl crt /etc/kubernetes/master-lb.pem
        option forwardfor
        default_backend backend-apiserver-http

    backend backend-apiserver-http
        balance roundrobin
        option forwardfor
        server master-1 192.168.205.11:8080 check
        server master-2 192.168.205.12:8080 check
        server master-3 192.168.205.13:8080 check

    listen admin_stats
        bind 0.0.0.0:80
        log global
        mode http
        maxconn 10
        stats enable
        # Hide HAProxy version, a necessity for any public-facing site
        stats hide-version
        stats refresh 30s
        stats show-node
        stats realm Haproxy\ Statistics
        stats auth caicloud:caicloud
        stats uri /haproxy?stats

On the nodes hosting the LB, make sure the ip_vs kernel modules are loaded and that ip_forward and ip_nonlocal_bind are enabled:

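    # make sure the ip_vs kernel modules are loaded
    modprobe ip_vs
    modprobe ip_vs_rr
    modprobe ip_vs_wrr

    # enable ip_forward and ip_nonlocal_bind
    echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
    echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf
    # apply the new settings without waiting for a reboot
    sysctl -p

If you deploy the K8S components as pods, refer to the YAML provided in the official documentation: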

apiserver

    apiVersion: v1
    kind: Pod
    metadata:
      name: kube-apiserver
    spec:
      hostNetwork: true
      containers:
      - name: kube-apiserver
        image: gcr.io/google_containers/kube-apiserver:9680e782e08a1a1c94c656190011bd02
        command:
        - /bin/sh
        - -c
        - /usr/local/bin/kube-apiserver --address=127.0.0.1 --etcd-servers=http://127.0.0.1:4001
          --cloud-provider=gce --admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota
          --service-cluster-ip-range=10.0.0.0/16 --client-ca-file=/srv/kubernetes/ca.crt
          --basic-auth-file=/srv/kubernetes/basic_auth.csv --cluster-name=e2e-test-bburns
          --tls-cert-file=/srv/kubernetes/server.cert --tls-private-key-file=/srv/kubernetes/server.key
          --secure-port=443 --token-auth-file=/srv/kubernetes/known_tokens.csv --v=2
          --allow-privileged=False 1>>/var/log/kube-apiserver.log 2>&1
        ports:
        - containerPort: 443
          hostPort: 443
          name: https
        - containerPort: 7080
          hostPort: 7080
          name: http
        - containerPort: 8080
          hostPort: 8080
          name: local
        volumeMounts:
        - mountPath: /srv/kubernetes
          name: srvkube
          readOnly: true
        - mountPath: /var/log/kube-apiserver.log
          name: logfile
        - mountPath: /etc/ssl
          name: etcssl
          readOnly: true
        - mountPath: /usr/share/ssl
          name: usrsharessl
          readOnly: true
        - mountPath: /var/ssl
          name: varssl
          readOnly: true
        - mountPath: /usr/ssl
          name: usrssl
          readOnly: true
        - mountPath: /usr/lib/ssl
          name: usrlibssl
          readOnly: true
        - mountPath: /usr/local/openssl
          name: usrlocalopenssl
          readOnly: true
        - mountPath: /etc/openssl
          name: etcopenssl
          readOnly: true
        - mountPath: /etc/pki/tls
          name: etcpkitls
          readOnly: true
      volumes:
      - hostPath:
          path: /srv/kubernetes
        name: srvkube
      - hostPath:
          path: /var/log/kube-apiserver.log
        name: logfile
      - hostPath:
          path: /etc/ssl
        name: etcssl
      - hostPath:
          path: /usr/share/ssl
        name: usrsharessl
      - hostPath:
          path: /var/ssl
        name: varssl
      - hostPath:
          path: /usr/ssl
        name: usrssl
      - hostPath:
          path: /usr/lib/ssl
        name: usrlibssl
      - hostPath:
          path: /usr/local/openssl
        name: usrlocalopenssl
      - hostPath:
          path: /etc/openssl
        name: etcopenssl
      - hostPath:
          path: /etc/pki/tls
        name: etcpkitls

controller-manager

    apiVersion: v1
    kind: Pod
    metadata:
      name: kube-controller-manager
    spec:
      containers:
      - command:
        - /bin/sh
        - -c
        - /usr/local/bin/kube-controller-manager --master=127.0.0.1:8080 --cluster-name=e2e-test-bburns
          --cluster-cidr=10.245.0.0/16 --allocate-node-cidrs=true --cloud-provider=gce
          --service-account-private-key-file=/srv/kubernetes/server.key
          --v=2 --leader-elect=true 1>>/var/log/kube-controller-manager.log 2>&1
        image: gcr.io/google_containers/kube-controller-manager:fda24638d51a48baa13c35337fcd4793
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10252
          initialDelaySeconds: 15
          timeoutSeconds: 1
        name: kube-controller-manager
        volumeMounts:
        - mountPath: /srv/kubernetes
          name: srvkube
          readOnly: true
        - mountPath: /var/log/kube-controller-manager.log
          name: logfile
        - mountPath: /etc/ssl
          name: etcssl
          readOnly: true
        - mountPath: /usr/share/ssl
          name: usrsharessl
          readOnly: true
        - mountPath: /var/ssl
          name: varssl
          readOnly: true
        - mountPath: /usr/ssl
          name: usrssl
          readOnly: true
        - mountPath: /usr/lib/ssl
          name: usrlibssl
          readOnly: true
        - mountPath: /usr/local/openssl
          name: usrlocalopenssl
          readOnly: true
        - mountPath: /etc/openssl
          name: etcopenssl
          readOnly: true
        - mountPath: /etc/pki/tls
          name: etcpkitls
          readOnly: true
      hostNetwork: true
      volumes:
      - hostPath:
          path: /srv/kubernetes
        name: srvkube
      - hostPath:
          path: /var/log/kube-controller-manager.log
        name: logfile
      - hostPath:
          path: /etc/ssl
        name: etcssl
      - hostPath:
          path: /usr/share/ssl
        name: usrsharessl
      - hostPath:
          path: /var/ssl
        name: varssl
      - hostPath:
          path: /usr/ssl
        name: usrssl
      - hostPath:
          path: /usr/lib/ssl
        name: usrlibssl
      - hostPath:
          path: /usr/local/openssl
        name: usrlocalopenssl
      - hostPath:
          path: /etc/openssl
        name: etcopenssl
      - hostPath:
          path: /etc/pki/tls
        name: etcpkitls

scheduler

    apiVersion: v1
    kind: Pod
    metadata:
      name: kube-scheduler
    spec:
      hostNetwork: true
      containers:
      - name: kube-scheduler
        image: gcr.io/google_containers/kube-scheduler:34d0b8f8b31e27937327961528739bc9
        command:
        - /bin/sh
        - -c
        - /usr/local/bin/kube-scheduler --master=127.0.0.1:8080 --v=2 --leader-elect=true 1>>/var/log/kube-scheduler.log
          2>&1
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10251
          initialDelaySeconds: 15
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /var/log/kube-scheduler.log
          name: logfile
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-s8ejd
          readOnly: true
      volumes:
      - hostPath:
          path: /var/log/kube-scheduler.log
        name: logfile

etcd

    apiVersion: v1
    kind: Pod
    metadata:
      name: etcd-server
    spec:
      hostNetwork: true
      containers:
      - image: gcr.io/google_containers/etcd:2.0.9
        name: etcd-container
        command:
        - /usr/local/bin/etcd
        - --name
        - ${NODE_NAME}
        - --initial-advertise-peer-urls
        - http://${NODE_IP}:2380
        - --listen-peer-urls
        - http://${NODE_IP}:2380
        - --advertise-client-urls
        - http://${NODE_IP}:4001
        - --listen-client-urls
        - http://127.0.0.1:4001
        - --data-dir
        - /var/etcd/data
        - --discovery
        - ${DISCOVERY_TOKEN}
        ports:
        - containerPort: 2380
          hostPort: 2380
          name: serverport
        - containerPort: 4001
          hostPort: 4001
          name: clientport
        volumeMounts:
        - mountPath: /var/etcd
          name: varetcd
        - mountPath: /etc/ssl
          name: etcssl
          readOnly: true
        - mountPath: /usr/share/ssl
          name: usrsharessl
          readOnly: true
        - mountPath: /var/ssl
          name: varssl
          readOnly: true
        - mountPath: /usr/ssl
          name: usrssl
          readOnly: true
        - mountPath: /usr/lib/ssl
          name: usrlibssl
          readOnly: true
        - mountPath: /usr/local/openssl
          name: usrlocalopenssl
          readOnly: true
        - mountPath: /etc/openssl
          name: etcopenssl
          readOnly: true
        - mountPath: /etc/pki/tls
          name: etcpkitls
          readOnly: true
      volumes:
      - hostPath:
          path: /var/etcd/data
        name: varetcd
      - hostPath:
          path: /etc/ssl
        name: etcssl
      - hostPath:
          path: /usr/share/ssl
        name: usrsharessl
      - hostPath:
          path: /var/ssl
        name: varssl
      - hostPath:
          path: /usr/ssl
        name: usrssl
      - hostPath:
          path: /usr/lib/ssl
        name: usrlibssl
      - hostPath:
          path: /usr/local/openssl
        name: usrlocalopenssl
      - hostPath:
          path: /etc/openssl
        name: etcopenssl
      - hostPath:
          path: /etc/pki/tls
        name: etcpkitls
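The etcd manifest contains the placeholders ${NODE_NAME}, ${NODE_IP} and ${DISCOVERY_TOKEN}, which must be filled in separately on each Master before the file is handed to the kubelet. One possible way to do that, sketched here with Python's `string.Template`, is shown below. The node name, the IP address (taken from the haproxy backend list) and the discovery value are sample inputs, and the discovery value in particular is a stand-in rather than a real token.

```python
from string import Template
from typing import Dict, List

# The etcd arguments from the manifest, placeholders left intact.
ETCD_ARGS = [
    "--name", "${NODE_NAME}",
    "--initial-advertise-peer-urls", "http://${NODE_IP}:2380",
    "--listen-peer-urls", "http://${NODE_IP}:2380",
    "--advertise-client-urls", "http://${NODE_IP}:4001",
    "--listen-client-urls", "http://127.0.0.1:4001",
    "--data-dir", "/var/etcd/data",
    "--discovery", "${DISCOVERY_TOKEN}",
]


def render_args(args: List[str], values: Dict[str, str]) -> List[str]:
    """Fill in every ${...} placeholder; a missing value raises KeyError."""
    return [Template(arg).substitute(values) for arg in args]


if __name__ == "__main__":
    rendered = render_args(ETCD_ARGS, {
        "NODE_NAME": "master-1",                # sample value
        "NODE_IP": "192.168.205.11",            # sample value
        "DISCOVERY_TOKEN": "<discovery-url>",   # stand-in, not a real token
    })
    print(" ".join(rendered))
```

Using `substitute` rather than `safe_substitute` is deliberate: a forgotten value fails loudly instead of leaving a literal `${NODE_IP}` in the rendered command line.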

Reposted from: https://my.oschina.net/jxcdwangtao/blog/1556365
