Hadoop Notes (1): Accessing HDFS with the hdfs Command


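The earlier post on installing and deploying CDH 5.8.3 was just the appetizer. Today I'm starting the first real installment of this series, to give myself a complete, organized set of notes.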

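Pick up almost any Hadoop book today and its HDFS chapter will list the hadoop dfs commands one by one: viewing, pushing and pulling, creating and deleting, copying and moving. Yet even the latest editions published this year, which already cover Hadoop 2.6 and later, don't use the hdfs command that Hadoop now officially recommends, so if you type their commands as written you get a screenful of Deprecated warnings. In this post I group the commands by category and put each old (now deprecated) command next to its new equivalent.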

(Original article: https://my.oschina.net/happyBKs/blog/811739)

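To make this easy to look up later, and so readers can see at a glance how each command is used, I give every command as a worked example rather than just the textual descriptions that get copied from blog to blog. Where something needs extra explanation, I'll add a few words.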

To have something to demonstrate with, first create two HDFS directories. We use the old command for now; the new commands are listed later.

[root@localhost hadoop]# hadoop fs -mkdir /myhome

[root@localhost hadoop]# hadoop fs -mkdir /myhome/happyBKs

1. Pushing and pulling files:

Push: copy a file from the local file system up to HDFS.

Pull: copy a file from HDFS down to a directory on the local file system.

(1) The “push” command:

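hadoop dfs -put <local file> <HDFS path: either a directory, in which case the file is placed in it, or a file name, in which case the uploaded file is renamed>

This form has existed since Hadoop 1 and is the one the books describe. Some of them use hadoop fs ... instead.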

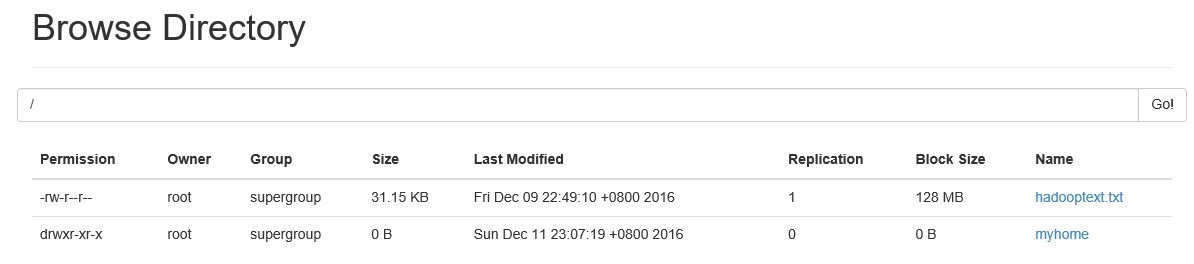

First, use this form to push the text file hadooptext.txt to the HDFS root directory /.

[root@localhost log]# ll

total 32

-rw-r--r--. 1 root root 31894 Dec 9 06:45 hadooptext.txt

[root@localhost log]# hadoop dfs -put hadooptext.txt /

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/12/09 06:48:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost log]# hadoop dfs -ls /

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/12/09 06:49:30 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-04 06:46 /myhome

[root@localhost log]# hadoop dfs -lsr /

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

lsr: DEPRECATED: Please use 'ls -R' instead.

16/12/09 06:50:00 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-04 06:46 /myhome

drwxr-xr-x - root supergroup 0 2016-12-04 06:46 /myhome/happyBKs

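Note that along the way CDH 5.8 warns that these commands are deprecated.

So what is the recommended way?

The “push” command [the new, officially recommended form]:

hdfs dfs -put <local file> <HDFS path: either a directory, in which case the file is placed in it, or a file name, in which case the uploaded file is renamed>

Using this form, push another local file, googledev.txt, into the HDFS directory /myhome/happyBKs.

Then push the same hadooptext.txt into /myhome/happyBKs again, this time naming it hadooptext2.txt.

This way the script-level Deprecated warning no longer appears (the -lsr used below still prints its own deprecation notice; -ls -R replaces it). In my own before-and-after comparison, hdfs dfs also felt noticeably faster than hadoop dfs.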

[root@localhost log]# nano

[root@localhost log]# ll

total 36

-rw-r--r--. 1 root root 2185 Dec 9 06:57 googledev.txt

-rw-r--r--. 1 root root 31894 Dec 9 06:45 hadooptext.txt

[root@localhost log]# hdfs dfs -put googledev.txt /myhome/happyBKs

16/12/09 06:57:39 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost log]# hdfs dfs -lsr /

lsr: DEPRECATED: Please use 'ls -R' instead.

16/12/09 06:58:14 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-04 06:46 /myhome

drwxr-xr-x - root supergroup 0 2016-12-09 06:57 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

[root@localhost log]# hdfs dfs -put hadooptext.txt /myhome/happyBKs/hadooptext2.txt

16/12/09 06:59:21 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost log]# hdfs dfs -lsr /

lsr: DEPRECATED: Please use 'ls -R' instead.

16/12/09 06:59:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-04 06:46 /myhome

drwxr-xr-x - root supergroup 0 2016-12-09 06:59 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:59 /myhome/happyBKs/hadooptext2.txt

[root@localhost log]#
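The destination rule for -put described above (an existing directory receives the file under its own name, any other path becomes the new file name) can be sketched as a small Bash helper. This is a hypothetical wrapper, not part of Hadoop: the name hdfs_put_report is made up, and it assumes hdfs dfs -test -d exits with status 0 when the path is a directory.

```shell
# Hypothetical helper: upload a local file with "hdfs dfs -put" and print the
# HDFS path it ends up at, following the destination rule described above.
hdfs_put_report() {
  local src="$1" dst="$2" target
  if hdfs dfs -test -d "$dst"; then
    # Existing directory: the file keeps its own name inside it.
    target="${dst%/}/${src##*/}"
  else
    # Any other path: it becomes the name of the uploaded file.
    target="$dst"
  fi
  hdfs dfs -put "$src" "$dst" || return
  printf '%s\n' "$target"
}
```

With the directory layout above, hdfs_put_report googledev.txt /myhome/happyBKs should print /myhome/happyBKs/googledev.txt, while hdfs_put_report hadooptext.txt /myhome/happyBKs/hadooptext2.txt should print the second path unchanged.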

You can also upload an entire local directory to HDFS:

[root@localhost mqbag]# ll

total 8

-rw-r--r--. 1 root root 85 Dec 11 05:32 tangshi1.txt

-rw-r--r--. 1 root root 82 Dec 11 05:33 tangshi2.txt

[root@localhost mqbag]# cd ..

[root@localhost log]#

[root@localhost log]#

[root@localhost log]#

[root@localhost log]# hdfs dfs put mqbag/ /myhome/happyBKs

put: Unknown command

Did you mean -put? This command begins with a dash.

[root@localhost log]# hdfs dfs -put mqbag/ /myhome/happyBKs

16/12/11 05:50:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost log]# hdfs dfs -ls -R /

16/12/11 05:51:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-04 06:46 /myhome

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:59 /myhome/happyBKs/hadooptext2.txt

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs/mqbag

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi2.txt

[root@localhost log]#

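(2) Another “push” command similar to put: dfs -copyFromLocal

Usage is the same as for -put, except that the source is restricted to a local file: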

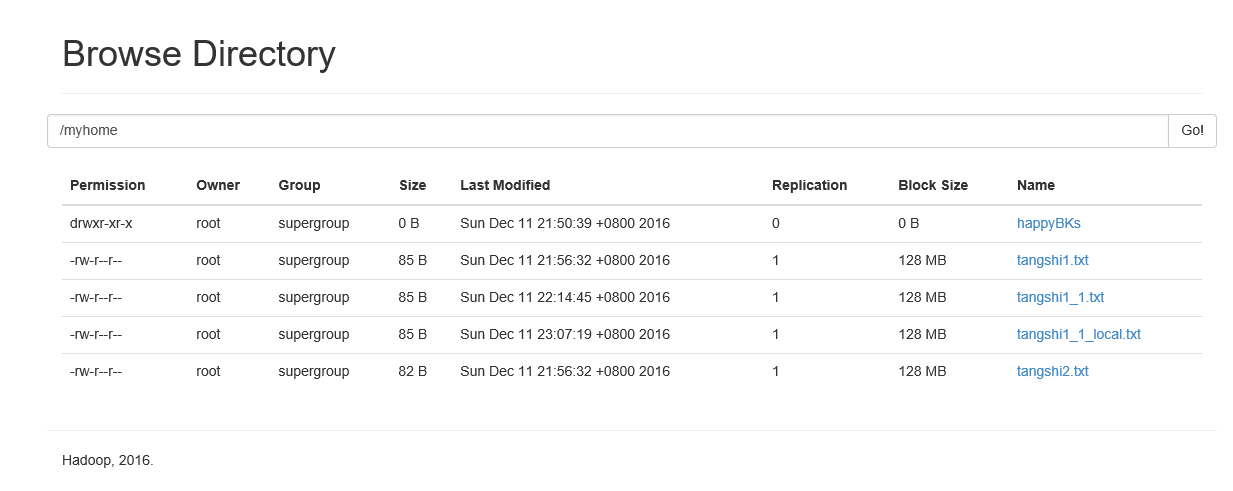

[root@localhost mqbag]# hadoop dfs -copyFromLocal *.txt /myhome

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/12/11 05:56:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost mqbag]# hdfs dfs -ls /myhome

16/12/11 05:57:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 3 items

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

Recommended command:

[root@localhost mqbag]# hdfs dfs -copyFromLocal tangshi1.txt /myhome/tangshi1_1.txt

16/12/11 06:14:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost mqbag]# hdfs dfs -ls /myhome

16/12/11 06:14:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 4 items

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 06:14 /myhome/tangshi1_1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

[root@localhost mqbag]#

(3) The “pull” command

Old command:

[root@localhost mqbag]# hadoop dfs -get /myhome/tangshi1_1.txt ./

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/12/11 06:42:39 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost mqbag]# ll

total 12

-rw-r--r--. 1 root root 85 Dec 11 06:42 tangshi1_1.txt

-rw-r--r--. 1 root root 85 Dec 11 05:32 tangshi1.txt

-rw-r--r--. 1 root root 82 Dec 11 05:33 tangshi2.txt

Recommended command:

[root@localhost mqbag]# rm tangshi1_1.txt

rm: remove regular file ‘tangshi1_1.txt’? y

[root@localhost mqbag]# hdfs dfs -get /myhome/tangshi1_1.txt tangshi1_1_local.txt

16/12/11 07:03:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost mqbag]# ll

total 12

-rw-r--r--. 1 root root 85 Dec 11 07:03 tangshi1_1_local.txt

-rw-r--r--. 1 root root 85 Dec 11 05:32 tangshi1.txt

-rw-r--r--. 1 root root 82 Dec 11 05:33 tangshi2.txt

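[root@localhost mqbag]#

When pulling files, add -ignoreCrc to also copy files that fail the CRC check.

The -crc option copies the files together with their CRC checksum information.

hdfs dfs -get [-ignoreCrc] [-crc] <src> <localdst>

(4) Move a local file to HDFS without keeping the local copy: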

[root@localhost mqbag]# hdfs dfs -moveFromLocal tangshi1_1_local.txt /myhome

16/12/11 07:07:13 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost mqbag]# ll

total 8

-rw-r--r--. 1 root root 85 Dec 11 05:32 tangshi1.txt

-rw-r--r--. 1 root root 82 Dec 11 05:33 tangshi2.txt

[root@localhost mqbag]# hdfs dfs -ls /myhome

16/12/11 07:07:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 5 items

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 06:14 /myhome/tangshi1_1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 07:07 /myhome/tangshi1_1_local.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

[root@localhost mqbag]#
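Conceptually, -moveFromLocal amounts to a -put followed by deleting the local file. The sketch below spells that out in Bash purely as an illustration (move_to_hdfs is a hypothetical name; in practice just use -moveFromLocal). Its one design point is ordering: the local copy is removed only after the upload has succeeded.

```shell
# Hypothetical illustration of what -moveFromLocal amounts to:
# upload first, and delete the local file only if the upload succeeded.
move_to_hdfs() {
  local src="$1" dst="$2"
  if hdfs dfs -put "$src" "$dst"; then
    rm -f -- "$src"
  else
    echo "upload of $src failed; local copy kept" >&2
    return 1
  fi
}
```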

2. Commands for viewing files:

(1) Listing an HDFS directory:

[root@localhost hadoop]# hdfs dfs -ls /

16/12/13 06:55:28 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-11 07:07 /myhome

[root@localhost hadoop]# hadoop dfs -lsr /

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.lsr: DEPRECATED: Please use 'ls -R' instead.

16/12/13 07:00:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-11 07:07 /myhome

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:59 /myhome/happyBKs/hadooptext2.txt

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs/mqbag

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi2.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 06:14 /myhome/tangshi1_1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 07:07 /myhome/tangshi1_1_local.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

[root@localhost hadoop]#

[root@localhost hadoop]# hdfs dfs -ls -R /

16/12/13 07:01:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-11 07:07 /myhome

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:59 /myhome/happyBKs/hadooptext2.txt

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs/mqbag

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi2.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 06:14 /myhome/tangshi1_1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 07:07 /myhome/tangshi1_1_local.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

[root@localhost hadoop]#

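(2) Commands for checking the size and number of HDFS files and directories

There are four commands to know: three for sizes and one for counts.

a. Total capacity, used space, remaining space, and usage percentage (the best choice when checking storage capacity)

hdfs dfs -df <path>

Shows the total capacity, the bytes used, the bytes still available, and the usage percentage. These figures describe the whole file system that <path> belongs to rather than the directory itself, which is why / and /myhome/happyBKs below report the same Size and Used values. It does not list the files or subdirectories under <path> or their sizes.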

[root@localhost hadoop]# hdfs dfs -df /

16/12/13 07:10:30 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Filesystem Size Used Available Use%

hdfs://master:9000 19001245696 143360 12976656384 0%

[root@localhost hadoop]#

[root@localhost hadoop]# hdfs dfs -df /myhome/happyBKs

16/12/15 06:44:46 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Filesystem Size Used Available Use%

hdfs://master:9000 19001245696 143360 12976279552 0%

[root@localhost hadoop]#
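In a script you usually want a single number rather than the whole -df table. A minimal sketch, assuming the column layout shown above (Filesystem Size Used Available Use%): the hypothetical helper below prints the Available column, discards stderr, and skips the header and anything that is not a five-column row, such as the NativeCodeLoader WARN line.

```shell
# Hypothetical helper: print the Available column of "hdfs dfs -df" for a path.
# The data row has 5 fields: Filesystem Size Used Available Use%.
hdfs_available_bytes() {
  hdfs dfs -df "$1" 2>/dev/null |
    awk '$1 != "Filesystem" && NF == 5 { print $4 }'
}
```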

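b. Sizes of the files and subdirectories inside a directory (the children only), and the size of the directory itself (the directory only)

hdfs dfs -du /

Lists the files and subdirectories directly under the directory, with their sizes in bytes (a subdirectory's figure is its total size). It does not descend to list grandchildren, and it does not show the directory's own total.

hdfs dfs -du -s /

Shows only the directory's own total size, not the sizes of any files or subdirectories under it.

The examples below show the difference. In each output line the first number is the size and the second is the disk space consumed including all replicas; they are equal here because each file is stored with a single replica.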

[root@localhost hadoop]# hdfs dfs -du /

16/12/13 07:12:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

31894 31894 /hadooptext.txt

34583 34583 /myhome

[root@localhost hadoop]#

[root@localhost hadoop]# hdfs dfs -du /myhome/happyBKs

16/12/15 06:30:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2185 2185 /myhome/happyBKs/googledev.txt

31894 31894 /myhome/happyBKs/hadooptext2.txt

167 167 /myhome/happyBKs/mqbag

[root@localhost hadoop]#

If you only care about the total size of the whole directory:

[root@localhost hadoop]# hdfs dfs -du -s /

16/12/15 06:27:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

66477 66477 /

[root@localhost hadoop]# hdfs dfs -du -s /myhome

16/12/15 06:28:11 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

34583 34583 /myhome

[root@localhost hadoop]# hdfs dfs -du -s /myhome/happyBKs

16/12/15 06:28:31 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

34246 34246 /myhome/happyBKs

[root@localhost hadoop]#
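Likewise, the first column of -du -s is handy in scripts, for example to warn when a directory grows past a limit. A minimal sketch with a hypothetical helper name; it keeps only the first line whose first field is a plain number, so a stray log line is ignored.

```shell
# Hypothetical helper: print only the total size in bytes of an HDFS path,
# i.e. the first column of "hdfs dfs -du -s <path>".
hdfs_total_bytes() {
  hdfs dfs -du -s "$1" 2>/dev/null |
    awk 'NF >= 2 && $1 ~ /^[0-9]+$/ { print $1; exit }'
}
```

A check could then read, for example, [ "$(hdfs_total_bytes /myhome)" -gt 1073741824 ] && echo "/myhome is over 1 GB".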

-du -s can also be written as -dus, but that form is deprecated.

[root@localhost hadoop]# hdfs dfs -dus /

dus: DEPRECATED: Please use 'du -s' instead.

16/12/13 07:13:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

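66477 66477 /

c. Counts

The number of directories and files under a directory

Shows the number of directories and files under <path>. Output format:

| Directory count | File count | Content size (bytes) | Path name |

hdfs dfs -count [-q] <path>

With -q, four quota columns come first: the namespace quota, the remaining namespace quota, the space quota, and the remaining space quota. In the example below, the namespace quota on / is effectively unlimited (Long.MAX_VALUE), no space quota is set (none/inf), and the remaining namespace quota equals that quota minus the 13 names already in use (4 directories + 9 files).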
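The -count columns can be labeled in a script the same way. The two hypothetical helpers below assume the column order described above; the second one uses -q and subtracts the remaining namespace quota from the quota, which for the / example below gives 13, matching its 4 directories plus 9 files. When no namespace quota is set, the quota column is not a number, and the helper says so instead of doing arithmetic. Path names containing spaces would break the simple field split in the first helper.

```shell
# Hypothetical helpers around "hdfs dfs -count"; stderr (where the
# NativeCodeLoader WARN goes) is discarded.
# Without -q the columns are DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME.
hdfs_count_summary() {
  hdfs dfs -count "$1" 2>/dev/null |
    awk 'NF == 4 { printf "dirs=%s files=%s bytes=%s path=%s\n", $1, $2, $3, $4 }'
}

# With -q the first two columns are the namespace quota and what remains of it;
# their difference is the number of names (directories + files) in use.
hdfs_names_used() {
  hdfs dfs -count -q "$1" 2>/dev/null | tail -n 1 | {
    read -r quota remaining _
    case "$quota" in
      ''|*[!0-9]*) echo "no namespace quota" ;;
      *) echo $(( quota - remaining )) ;;
    esac
  }
}
```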

[root@localhost hadoop]# hdfs dfs -count -q /

16/12/13 07:15:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

9223372036854775807 9223372036854775794 none inf 4 9 66477 /

[root@localhost hadoop]#

[root@localhost hadoop]# hdfs dfs -count /

16/12/13 07:16:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

4 9 66477 /

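[root@localhost hadoop]#

You can also check HDFS usage and browse its files in a web browser. Make sure the HDFS web port on the Hadoop server is open in the firewall, preferably permanently; otherwise you won't be able to reach the web UI on port 50070. (If you configured a different port for it, open that one instead.)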
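As an illustration only: on a CentOS 7 style host that uses firewalld (an assumption, since the post does not say which firewall is in use), opening the web UI port permanently could look like this. firewall-cmd --permanent --add-port and firewall-cmd --reload are standard firewalld options; the function name and the FIREWALL_CMD dry-run hook are hypothetical additions. Run it as root, and pass your own port number if you changed the default.

```shell
# Hypothetical helper (assumes a firewalld-based system such as CentOS 7):
# permanently open the NameNode web UI port, then reload so it applies now.
# Set FIREWALL_CMD=echo for a dry run that only prints the arguments.
open_namenode_ui_port() {
  local port="${1:-50070}" fw="${FIREWALL_CMD:-firewall-cmd}"
  "$fw" --permanent --add-port="${port}/tcp" && "$fw" --reload
}
```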

3. Viewing file contents

a. Two commands can be used to view a file's contents:

hdfs dfs -cat <src>

Prints the contents of the file at HDFS path <src>.

-cat [-ignoreCrc] <src> ... :

Fetch all files that match the file pattern <src> and display their content on stdout.

hdfs dfs -text <src>

Outputs the file at HDFS path <src> as text.

-text [-ignoreCrc] <src> ... :

Takes a source file and outputs the file in text format.

The allowed formats are zip and TextRecordInputStream and Avro.

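Can't see the difference? For a plain text file like the one below, there really is none. The difference shows up with the formats the help text lists: -text decodes compressed and other encoded files (zip, TextRecordInputStream, Avro) into readable text, whereas -cat prints the raw bytes as stored.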
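A gzip-compressed file is an easy way to see the difference in practice. In the sketch below the function names are hypothetical and so is the file (for example a gzipped copy of tangshi1.txt): -cat hands back the compressed bytes, so they have to be decompressed locally with gunzip, whereas -text recognizes the compression and prints the decoded text directly.

```shell
# Hypothetical example: two ways to read a gzip-compressed file stored in HDFS.
# -cat returns the raw compressed bytes, so decompress them locally ...
read_gz_with_cat() {
  hdfs dfs -cat "$1" | gunzip -c
}

# ... while -text detects the compression and decompresses it itself.
read_gz_with_text() {
  hdfs dfs -text "$1"
}
```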

[root@localhost hadoop]# hdfs dfs -cat /myhome/tangshi1.txt

16/12/18 05:53:03 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

八阵图功盖三分国,名成八阵图。

江流石不转,遗恨失吞吴。

[root@localhost hadoop]# hdfs dfs -text /myhome/tangshi1.txt

16/12/18 05:53:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

八阵图功盖三分国,名成八阵图。

江流石不转,遗恨失吞吴。

[root@localhost hadoop]#

b. Viewing the last 1 KB of a file and monitoring log updates live

hdfs dfs -tail <src>

Shows the last 1 KB of the file, which is very useful for huge log files.

[root@localhost logs]# hdfs dfs -tail /myhome/hadoop-hadoop-datanode-localhost.localdomain.log

16/12/18 06:34:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

127.0.0.1:38881, dest: /127.0.0.1:50010, bytes: 15181, op: HDFS_WRITE, cliID: DFSClient_NONMAPREDUCE_586277825_1, offset: 0, srvID: 54611752-a966-4db1-aabe-8d4525b73d78, blockid: BP-1682033911-127.0.0.1-1480341812291:blk_1073741834_1010, duration: 848268122

2016-12-18 06:07:49,911 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: PacketResponder: BP-1682033911-127.0.0.1-1480341812291:blk_1073741834_1010, type=LAST_IN_PIPELINE, downstreams=0:[] terminating

2016-12-18 06:10:41,876 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Scheduling blk_1073741834_1010 file /opt/hdfs/data/current/BP-1682033911-127.0.0.1-1480341812291/current/finalized/subdir0/subdir0/blk_1073741834 for deletion

2016-12-18 06:10:41,914 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Deleted BP-1682033911-127.0.0.1-1480341812291 blk_1073741834_1010 file /opt/hdfs/data/current/BP-1682033911-127.0.0.1-1480341812291/current/finalized/subdir0/subdir0/blk_1073741834

[root@localhost logs]#

You could say it is tailor-made for log files, because it also handles files that keep changing:

[root@localhost logs]# hdfs dfs -tail -f /myhome/hadoop-hadoop-datanode-localhost.localdomain.log

16/12/18 06:53:31 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

127.0.0.1:38881, dest: /127.0.0.1:50010, bytes: 15181, op: HDFS_WRITE, cliID: DFSClient_NONMAPREDUCE_586277825_1, offset: 0, srvID: 54611752-a966-4db1-aabe-8d4525b73d78, blockid: BP-1682033911-127.0.0.1-1480341812291:blk_1073741834_1010, duration: 848268122

2016-12-18 06:07:49,911 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: PacketResponder: BP-1682033911-127.0.0.1-1480341812291:blk_1073741834_1010, type=LAST_IN_PIPELINE, downstreams=0:[] terminating

2016-12-18 06:10:41,876 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Scheduling blk_1073741834_1010 file /opt/hdfs/data/current/BP-1682033911-127.0.0.1-1480341812291/current/finalized/subdir0/subdir0/blk_1073741834 for deletion

2016-12-18 06:10:41,914 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Deleted BP-1682033911-127.0.0.1-1480341812291 blk_1073741834_1010 file /opt/hdfs/data/current/BP-1682033911-127.0.0.1-1480341812291/current/finalized/subdir0/subdir0/blk_1073741834

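^Ctail: Filesystem closed

With -f the command enters a monitoring mode: after printing the last 1 KB it does not return to the prompt but waits for new content, and the output grows as data is appended to the file. This makes it ideal for monitoring log files. To stop monitoring, press Ctrl+C.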
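Because -tail -f keeps streaming, it combines well with grep to watch for specific messages. A minimal sketch; the helper name and the default ERROR pattern are assumptions, and --line-buffered (supported by GNU grep) makes each match appear as soon as it arrives instead of waiting in grep's output buffer. Stop it with Ctrl+C, just like the plain command.

```shell
# Hypothetical helper: follow an HDFS log file and show only matching lines.
# --line-buffered (GNU grep) prints each match as soon as it arrives.
watch_hdfs_log() {
  local path="$1" pattern="${2:-ERROR}"
  hdfs dfs -tail -f "$path" 2>/dev/null | grep --line-buffered -- "$pattern"
}
```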

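c. Statistics for a file or directory on HDFS

The -stat command shows statistics for the file or directory at HDFS path <path>, formatted according to a format string. The format specifiers used below are:

| %b | File size in bytes |

| %n | File name |

| %r | Replication factor, i.e. the number of replicas |

| %y, %Y | Modification time: %y as a UTC date and time, %Y as milliseconds since the epoch |

| %o | Block size |

Here are some examples: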

[root@localhost logs]# hadoop fs -stat '%b %n %r %y %Y %o' /myhome/tangshi1.txt

16/12/18 07:22:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

85 tangshi1.txt 1 2016-12-11 13:56:32 1481464592248 134217728

[root@localhost logs]# hadoop fs -stat '%b %n %r %y %Y %o' /myhome/

16/12/18 07:24:41 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

0 myhome 0 2016-12-18 14:26:04 1482071164232 0

[root@localhost logs]#
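The %Y value is easier to read once converted. The hypothetical helper below divides the milliseconds by 1000 and formats the result in UTC with GNU date's -d @SECONDS syntax. For tangshi1.txt, 1481464592248 ms becomes 2016-12-11 13:56:32, the same value %y printed; the -ls listing showed 05:56 for the same file, so the 8-hour gap is just UTC versus the machine's local time zone.

```shell
# Hypothetical helper: turn the %Y value of "hdfs dfs -stat" (milliseconds
# since the epoch) into a readable UTC timestamp. Requires GNU date.
hdfs_mtime_utc() {
  local ms
  ms=$(hdfs dfs -stat %Y "$1" 2>/dev/null) || return
  date -u -d "@$(( ms / 1000 ))" '+%Y-%m-%d %H:%M:%S'
}
```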

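4. Creating, deleting, moving, and copying HDFS directories

(1) Creating an HDFS directory:

Note: if the new path contains several levels of directories that don't exist yet, you must add -p; otherwise mkdir fails because the parent directory does not exist. This works just like the Linux mkdir command.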

[root@localhost logs]# hdfs dfs -mkdir /yourhome/home1

16/12/18 07:27:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

mkdir: '/yourhome/home1': No such file or directory

[root@localhost logs]#

[root@localhost logs]# hdfs dfs -mkdir -p /yourhome/home1

16/12/18 07:28:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost logs]# hdfs dfs -ls /

16/12/18 07:28:32 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 3 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-18 06:26 /myhome

drwxr-xr-x - root supergroup 0 2016-12-18 07:28 /yourhome

[root@localhost logs]# hdfs dfs -ls /yourhome

16/12/18 07:28:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

drwxr-xr-x - root supergroup 0 2016-12-18 07:28 /yourhome/home1

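[root@localhost logs]#

(2) Renaming and moving an HDFS directory:

If the target of mv does not exist, the source directory is renamed to it; if the target directory exists, the source directory is moved into it.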

[root@localhost sbin]# hdfs dfs mkdir -p /yourhome/home2

mkdir: Unknown command

Did you mean -mkdir? This command begins with a dash.

[root@localhost sbin]# hdfs dfs -mkdir -p /yourhome/home2

16/12/22 07:03:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost sbin]#

[root@localhost sbin]#

[root@localhost sbin]# hdfs dfs -ls /yourhome

16/12/22 07:03:18 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

drwxr-xr-x - root supergroup 0 2016-12-18 07:28 /yourhome/home1

drwxr-xr-x - root supergroup 0 2016-12-22 07:03 /yourhome/home2

[root@localhost sbin]# hdfs dfs -mv /yourhome/home1 /yourhome/home3

16/12/22 07:04:57 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost sbin]# hdfs dfs -ls /yourhome

16/12/22 07:05:03 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

drwxr-xr-x - root supergroup 0 2016-12-22 07:03 /yourhome/home2

drwxr-xr-x - root supergroup 0 2016-12-18 07:28 /yourhome/home3

[root@localhost sbin]# hdfs dfs -ls -R /yourhome

16/12/22 07:05:28 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

drwxr-xr-x - root supergroup 0 2016-12-22 07:03 /yourhome/home2

drwxr-xr-x - root supergroup 0 2016-12-18 07:28 /yourhome/home3

[root@localhost sbin]#

[root@localhost sbin]# hdfs dfs -mv /yourhome/home2 /yourhome/home3

16/12/22 07:05:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost sbin]# hdfs dfs -ls -R /yourhome

16/12/22 07:05:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

drwxr-xr-x - root supergroup 0 2016-12-22 07:05 /yourhome/home3

drwxr-xr-x - root supergroup 0 2016-12-22 07:03 /yourhome/home3/home2

[root@localhost sbin]#
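Because mv silently switches from renaming to moving when the target directory already exists, a script that means to rename can check first. A minimal sketch; hdfs_rename is a hypothetical name, and it relies on hdfs dfs -test -e exiting with status 0 when the path exists.

```shell
# Hypothetical helper: rename an HDFS path, refusing to run if the target
# already exists (if it is a directory, -mv would move the source into it).
hdfs_rename() {
  local src="$1" dst="$2"
  if hdfs dfs -test -e "$dst"; then
    echo "refusing: $dst already exists" >&2
    return 1
  fi
  hdfs dfs -mv "$src" "$dst"
}
```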

(3) Deleting an HDFS directory

Don't forget -r when deleting a directory.

[root@localhost sbin]# hdfs dfs -rm -r /yourhome/home3

16/12/22 07:14:36 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Deleted /yourhome/home3

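Be careful when deleting: if the HDFS trash feature is enabled (fs.trash.interval greater than 0), -rm does not free the space right away. The data is moved into the user's .Trash directory, where it still takes up space, and the command reports that it was moved to trash rather than printing "Deleted" (so the "Deleted" output above suggests the trash is not enabled on this test setup). To reclaim the space, empty the trash; according to the Hadoop documentation, -expunge permanently deletes trash checkpoints older than the retention threshold and creates a new checkpoint: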

[root@localhost sbin]# hdfs dfs -expunge

16/12/22 07:17:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost sbin]#

Alternatively, add -skipTrash to rm so the data is deleted immediately and completely, a bit like holding Shift while pressing Del in Windows.

[root@localhost sbin]# hdfs dfs -rm -r -skipTrash /yourhome/home3

16/12/22 07:19:03 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Deleted /yourhome/home3

[root@localhost sbin]#
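Whether -rm sends data to the trash depends on fs.trash.interval (in minutes; 0 disables the trash). The hdfs getconf -confKey subcommand can read that value, so a script can check before deleting. A minimal sketch with a hypothetical helper name; note that it reads the configuration visible to this client, and according to the Hadoop documentation a trash interval configured on the NameNode takes precedence over the client's value.

```shell
# Hypothetical helper: succeed (exit 0) if fs.trash.interval is above 0,
# i.e. a plain "hdfs dfs -rm" would move data to .Trash instead of deleting it.
hdfs_trash_enabled() {
  local interval
  interval=$(hdfs getconf -confKey fs.trash.interval 2>/dev/null) || interval=0
  awk -v v="${interval:-0}" 'BEGIN { exit !(v + 0 > 0) }'
}
```

If it succeeds, space is only reclaimed after -expunge or when -skipTrash is used.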

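If you are the kind of guy who keeps top-secret files on the HDFS in your own virtual machine, don't repeat Edison Chen's mistake on your way to learning HDFS.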

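(4) Copying an HDFS directory:

No -r is needed: -cp copies directories recursively on its own. The -f flag used below means something else: overwrite the destination if it already exists.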

[root@localhost hadoop]# hdfs dfs -cp -f /yourhome /yourhome2

16/12/24 07:06:41 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]# hdfs dfs -ls /your*

16/12/24 07:06:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /yourhome/hadooparticle.txt

Found 1 items

-rw-r--r-- 1 root supergroup 31894 2016-12-24 07:06 /yourhome2/hadooparticle.txt

[root@localhost hadoop]# hdfs dfs -rm -r -skipTrash /yourhome

16/12/24 07:09:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Deleted /yourhome

[root@localhost hadoop]#

5. Creating, deleting, and moving files

(1) Creating an empty file on HDFS:

[root@localhost hadoop]# hdfs dfs -touchz /blacnk.txt

16/12/24 06:33:03 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]# hdfs dfs -ls /

16/12/24 06:33:11 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 4 items

-rw-r--r-- 1 root supergroup 0 2016-12-24 06:33 /blacnk.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-18 06:26 /myhome

drwxr-xr-x - root supergroup 0 2016-12-22 07:19 /yourhome

[root@localhost hadoop]# hdfs dfs -cat /blacnk.txt

16/12/24 06:33:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]#

(2) Deleting a file on HDFS

[root@localhost hadoop]# hdfs dfs -rm -skipTrash /blacnk.txt

16/12/24 06:40:15 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Deleted /blacnk.txt

[root@localhost hadoop]#

(3) Moving a file on HDFS:

[root@localhost hadoop]# hdfs dfs -ls -R /

16/12/24 06:28:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-18 06:26 /myhome

-rw-r--r-- 1 root supergroup 171919 2016-12-18 06:26 /myhome/hadoop-hadoop-datanode-localhost.localdomain.log

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:59 /myhome/happyBKs/hadooptext2.txt

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs/mqbag

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi2.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 06:14 /myhome/tangshi1_1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 07:07 /myhome/tangshi1_1_local.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

drwxr-xr-x - root supergroup 0 2016-12-22 07:19 /yourhome

[root@localhost hadoop]# hdfs dfs -mv /hadooptext.txt /yourhome

16/12/24 06:44:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]# hdfs dfs -ls -R /

16/12/24 06:44:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

drwxr-xr-x - root supergroup 0 2016-12-18 06:26 /myhome

-rw-r--r-- 1 root supergroup 171919 2016-12-18 06:26 /myhome/hadoop-hadoop-datanode-localhost.localdomain.log

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs

-rw-r--r-- 1 root supergroup 2185 2016-12-09 06:57 /myhome/happyBKs/googledev.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:59 /myhome/happyBKs/hadooptext2.txt

drwxr-xr-x - root supergroup 0 2016-12-11 05:50 /myhome/happyBKs/mqbag

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi1.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:50 /myhome/happyBKs/mqbag/tangshi2.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 05:56 /myhome/tangshi1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 06:14 /myhome/tangshi1_1.txt

-rw-r--r-- 1 root supergroup 85 2016-12-11 07:07 /myhome/tangshi1_1_local.txt

-rw-r--r-- 1 root supergroup 82 2016-12-11 05:56 /myhome/tangshi2.txt

drwxr-xr-x - root supergroup 0 2016-12-24 06:44 /yourhome

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /yourhome/hadooptext.txt

[root@localhost hadoop]#

(4) Renaming an HDFS file

As shown earlier, mv can move an HDFS directory or rename it. It works the same way for HDFS files.

[root@localhost hadoop]# hdfs dfs -mv /yourhome/hadooptext.txt /yourhome/hadooparticle.txt

16/12/24 06:58:11 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]# hdfs dfs -ls /yourhome

16/12/24 06:58:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /yourhome/hadooparticle.txt

[root@localhost hadoop]#

(5) Copying an HDFS file

When copying, you can give just the target directory, or a full target file name to rename the file as you copy it.

[root@localhost hadoop]# hdfs dfs -cp /yourhome/hadooparticle.txt /

16/12/24 07:00:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]#

[root@localhost hadoop]# hdfs dfs -ls / /yourhome

16/12/24 07:00:30 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 3 items

-rw-r--r-- 1 root supergroup 31894 2016-12-24 07:00 /hadooparticle.txt

drwxr-xr-x - root supergroup 0 2016-12-18 06:26 /myhome

drwxr-xr-x - root supergroup 0 2016-12-24 06:58 /yourhome

Found 1 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /yourhome/hadooparticle.txt

[root@localhost hadoop]#

[root@localhost hadoop]# hdfs dfs -cp /hadooparticle.txt /hadooptext.txt

16/12/24 07:01:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@localhost hadoop]# hdfs dfs -ls / /yourhome

16/12/24 07:01:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 4 items

-rw-r--r-- 1 root supergroup 31894 2016-12-24 07:00 /hadooparticle.txt

-rw-r--r-- 1 root supergroup 31894 2016-12-24 07:01 /hadooptext.txt

drwxr-xr-x - root supergroup 0 2016-12-18 06:26 /myhome

drwxr-xr-x - root supergroup 0 2016-12-24 06:58 /yourhome

Found 1 items

-rw-r--r-- 1 root supergroup 31894 2016-12-09 06:49 /yourhome/hadooparticle.txt

[root@localhost hadoop]#

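This also shows another use of ls: to list several directories at once, just name them one after another after -ls. Each is listed separately, preceded by a Found x items line giving the number of files or subdirectories it contains.

As for editing a file's contents in place on HDFS, forget it. Just get the file, edit it locally, and put it back (with -put -f, since plain -put will not overwrite an existing file).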
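That get-edit-put round trip is easy to wrap in a helper. A minimal sketch under stated assumptions: edit_hdfs_file is a hypothetical name, the editor comes from $EDITOR (falling back to vi), and the file is downloaded into a fresh temporary directory because -get will not overwrite an existing local file. This is not atomic: if someone else changes the HDFS file in the meantime, their change is overwritten.

```shell
# Hypothetical helper: "edit" an HDFS file by pulling it down, opening it in
# $EDITOR (vi by default), then uploading it back with -put -f (overwrite).
edit_hdfs_file() {
  local path="$1" dir local_copy rc=0
  dir=$(mktemp -d) || return
  # -get refuses to overwrite an existing local file, so use a fresh directory.
  local_copy="$dir/${path##*/}"
  # $EDITOR is left unquoted on purpose so values like "code --wait" work.
  if hdfs dfs -get "$path" "$local_copy" && ${EDITOR:-vi} "$local_copy"; then
    hdfs dfs -put -f "$local_copy" "$path" || rc=$?
  else
    rc=1
  fi
  rm -rf -- "$dir"
  return "$rc"
}
```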

6. A very useful conditional test command

test

使用方法:hadoop fs -test -[ezd] URI

选项:

-e 检查文件是否存在。如果存在则返回0。

-z 检查文件是否是0字节。如果是则返回0。

-d 如果路径是个目录,则返回1,否则返回0。

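Note that this command prints nothing: the answer is carried only by its exit status, so don't type it and wait for output. Read the status with echo $? right afterwards, or use the command as a condition in a shell script.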
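Because `-test` reports its result only through the exit status, it fits naturally into shell conditionals. A minimal sketch, assuming a Hadoop 2.x client with `hdfs` on the PATH; the helper name `ensure_hdfs_dir` is hypothetical. It uses `-test -d` and `-test -e` to decide whether a path is already a directory, is occupied by something else, or is missing and should be created.

```shell
#!/usr/bin/env bash
# ensure_hdfs_dir is a hypothetical helper (not part of Hadoop).
# hdfs dfs -test prints nothing; the result is its exit status (0 = true).
ensure_hdfs_dir() {
  local dir="$1"
  if hdfs dfs -test -d "$dir"; then
    echo "exists: $dir"
  elif hdfs dfs -test -e "$dir"; then
    # The path exists but is not a directory, e.g. a regular file
    echo "not a directory: $dir" >&2
    return 1
  else
    # -p also creates any missing parent directories
    hdfs dfs -mkdir -p "$dir" && echo "created: $dir"
  fi
}

# Checking interactively: print the exit status of the previous command
#   hdfs dfs -test -e /hadooptext.txt; echo $?
```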

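Finally, here are the differences between the three forms of the command. The following is a fairly authoritative explanation, quoted in the original English: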

The following three commands appear the same but have minute differences:

- hadoop fs {args}

- hadoop dfs {args}

- hdfs dfs {args}

hadoop fs <args>

FS relates to a generic file system, which can point to any file system, such as the local file system, HDFS, etc. So it can be used when you are dealing with different file systems such as Local FS, HFTP FS, S3 FS, and others.

hadoop dfs <args>

dfs is specific to HDFS and works only for HDFS-related operations. This form has been deprecated; use hdfs dfs instead.

hdfs dfs <args>

Same as the 2nd, i.e. it works for all operations related to HDFS, and it is the recommended command instead of hadoop dfs.

Below is the list of commands categorized as HDFS commands:

**#hdfs commands** namenode|secondarynamenode|datanode|dfs|dfsadmin|fsck|balancer|fetchdt|oiv|dfsgroups

So even if you use hadoop dfs, it will locate hdfs and delegate the command to hdfs dfs.

Last of all, the most useful hdfs option: --help. Whenever you are not sure, just look it up!

[root@localhost mqbag]# hdfs --help

Usage: hdfs [--config confdir] COMMAND

where COMMAND is one of:

dfs run a filesystem command on the file systems supported in Hadoop.

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

dfsadmin run a DFS admin client

diskbalancer Distributes data evenly among disks on a given node

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode.

mover run a utility to move block replicas across storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to an legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user

Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version

Most commands print help when invoked w/o parameters.

[root@localhost mqbag]# hdfs version

Hadoop 2.6.0-cdh5.8.3

Subversion http://github.com/cloudera/hadoop -r 992be3bac6b145248d32c45b16f8fce5a984b158

Compiled by jenkins on 2016-10-13T03:23Z

Compiled with protoc 2.5.0

From source with checksum ef7968b8b98491d54f83cb3bd7a87ea

This command was run using /opt/hadoop-2.6.0-cdh5.8.3/share/hadoop/common/hadoop-common-2.6.0-cdh5.8.3.jar

[root@localhost mqbag]#
