How Kafka’s Storage Internals Work

In this post I’m going to help you understand how Kafka stores its data.

I’ve found understanding this useful when tuning Kafka’s performance and for context on what each broker configuration actually does. I was inspired by Kafka’s simplicity and used what I learned to start implementing Kafka in Golang.

So how do Kafka’s storage internals work?

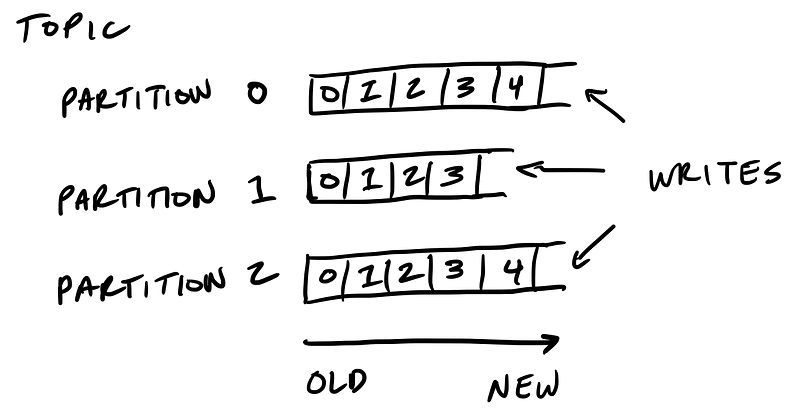

Kafka’s storage unit is a partition

A partition is an ordered, immutable sequence of messages that is only ever appended to. A partition cannot be split across multiple brokers or even multiple disks.

The retention policy governs how Kafka retains messages

You specify how much data to retain or how long to retain it, after which Kafka purges messages in order, regardless of whether they have been consumed.

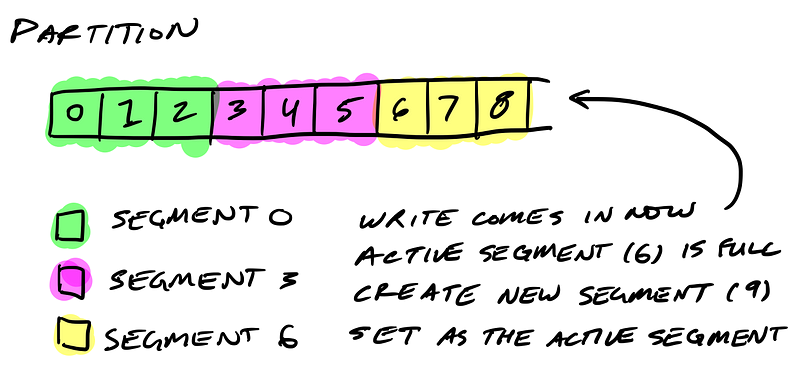

Partitions are split into segments

So Kafka needs to regularly find the messages on disk that need to be purged. With a single very long file of a partition’s messages, this operation would be slow and error-prone. To fix that (and other problems we’ll see), the partition is split into segments.
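To see why segments make purging cheap, here is a minimal sketch of time-based retention that drops whole segments at a time. The `Segment` tuple, its fields, and `purge_expired` are hypothetical names made up for illustration, and the rule it applies is a simplification, not the broker's actual logic.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    base_offset: int      # first offset stored in the segment
    last_modified: float  # time of the last write, in seconds


def purge_expired(segments, now, retention_seconds):
    """Return (kept, purged) for segments sorted by base offset.

    Assumptions: a segment is eligible once its last write is older than the
    retention window, purging stops at the first segment that is still fresh
    (so messages go away in order), and the newest segment is always kept
    because it is still being written to.
    """
    kept, purged = [], []
    for i, seg in enumerate(segments):
        is_newest = i == len(segments) - 1
        expired = now - seg.last_modified > retention_seconds
        if expired and not is_newest and not kept:
            purged.append(seg)  # the whole segment goes away in one step
        else:
            kept.append(seg)
    return kept, purged
```

Deciding per segment means the purge never has to scan individual messages; it only compares one timestamp per file.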

When Kafka writes to a partition, it writes to a segment — the active segment. If the segment’s size limit is reached, a new segment is opened and that becomes the new active segment.
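The rolling behaviour can be sketched with a toy in-memory partition. Everything here (`Partition`, `max_segment_bytes`, rolling just before a write that would exceed the limit) is an assumption chosen to keep the example short; it is not how the broker is implemented.

```python
class Partition:
    """Toy partition: each segment is a (base_offset, messages) pair."""

    def __init__(self, max_segment_bytes):
        self.max_segment_bytes = max_segment_bytes  # hypothetical size limit
        self.next_offset = 0
        self.segments = [(0, [])]  # the last entry is the active segment
        self.active_bytes = 0

    def append(self, message: bytes) -> int:
        # Roll to a new active segment if this write would exceed the limit.
        would_overflow = self.active_bytes + len(message) > self.max_segment_bytes
        if self.active_bytes > 0 and would_overflow:
            self.segments.append((self.next_offset, []))
            self.active_bytes = 0
        offset = self.next_offset
        self.segments[-1][1].append(message)
        self.active_bytes += len(message)
        self.next_offset += 1
        return offset
```

Note that the new segment's base offset is simply the next offset to be written, which is what makes the base offset usable as a name.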

Segments are named by their base offset. A segment’s base offset is greater than every offset in the previous segments and less than or equal to every offset in that segment.

On disk, a partition is a directory, and each segment is an index file and a log file.

    $ tree kafka | head -n 6
    kafka
    ├── events-1
    │   ├── 00000000003064504069.index
    │   ├── 00000000003064504069.log
    │   ├── 00000000003065011416.index
    │   ├── 00000000003065011416.log
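Because file names are just zero-padded base offsets, finding the segment that holds a given offset is a search over sorted numbers. The following is a small sketch of that idea; `segment_file_names` and `find_segment` are hypothetical helpers, not Kafka functions.

```python
import bisect


def segment_file_names(base_offset: int):
    """File names for a segment: the base offset zero-padded to 20 digits."""
    stem = f"{base_offset:020d}"
    return f"{stem}.index", f"{stem}.log"


def find_segment(base_offsets, target_offset):
    """Return the base offset of the segment that would hold target_offset.

    base_offsets must be sorted ascending. The right segment is the one with
    the largest base offset that is <= target_offset.
    """
    i = bisect.bisect_right(base_offsets, target_offset) - 1
    if i < 0:
        raise KeyError(f"offset {target_offset} is before the first segment")
    return base_offsets[i]
```

With the two segments from the listing, `find_segment([3064504069, 3065011416], 3065011415)` picks the first segment and any offset from 3065011416 onward picks the second.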

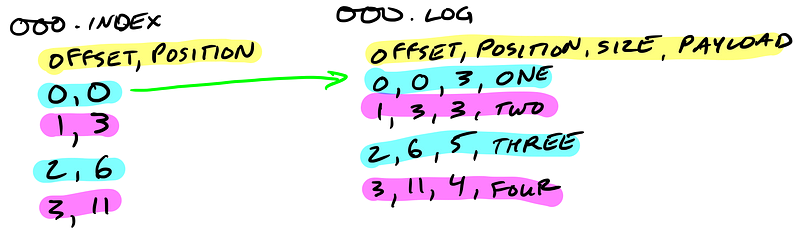

Segment logs are where messages are stored

Each message consists of its value, offset, timestamp, key, message size, compression codec, checksum, and the version of the message format.
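To make the role of the checksum concrete, here is a sketch of a record that carries a few of these fields. The byte layout is invented for this example (it is not Kafka's message format), and `encode` and `decode` are hypothetical helpers.

```python
import struct
import zlib

# Hypothetical layout, big-endian:
#   crc32 | offset | timestamp_ms | key_len | value_len | key | value
FIELDS = ">qqII"


def encode(offset, timestamp_ms, key: bytes, value: bytes) -> bytes:
    body = struct.pack(FIELDS, offset, timestamp_ms, len(key), len(value)) + key + value
    # The checksum covers everything after itself.
    return struct.pack(">I", zlib.crc32(body)) + body


def decode(record: bytes):
    (crc,) = struct.unpack_from(">I", record, 0)
    body = record[4:]
    if zlib.crc32(body) != crc:
        raise ValueError("checksum mismatch: record is corrupt")
    offset, timestamp_ms, key_len, value_len = struct.unpack_from(FIELDS, body, 0)
    start = struct.calcsize(FIELDS)
    key = body[start:start + key_len]
    value = body[start + key_len:start + key_len + value_len]
    return offset, timestamp_ms, key, value
```

A reader that recomputes the checksum can reject a record whose bytes changed on disk or in transit before it ever interprets the payload.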

The data format on disk is exactly the same as what the broker receives from the producer over the network and sends to its consumers. This allows Kafka to efficiently transfer data with zero copy.
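As a rough illustration of the idea (in Python rather than the broker's own code), `socket.sendfile` hands a byte range of a file to a socket and uses the operating system's `sendfile` call where available. `send_log_bytes` is a hypothetical helper, and the position and length arguments stand in for whatever range a consumer asked for.

```python
import socket


def send_log_bytes(sock: socket.socket, path: str, position: int, length: int) -> int:
    """Send `length` bytes of the file at `path`, starting at `position`.

    Where the platform supports os.sendfile, the kernel moves the bytes from
    the page cache to the socket without copying them into this process;
    elsewhere socket.sendfile falls back to ordinary send() calls.
    Returns the number of bytes sent.
    """
    with open(path, "rb") as f:
        return sock.sendfile(f, offset=position, count=length)
```

This only works because the bytes on disk are already in the form the receiver expects; if the broker had to re-encode messages, it would have to read them into its own memory first.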

    $ bin/kafka-run-class.sh kafka.tools.DumpLogSegments --deep-iteration --print-data-log --files /data/kafka/events-1/00000000003065011416.log | head -n 4
    Dumping /data/kafka/appusers-1/00000000003065011416.log
    Starting offset: 3065011416
    offset: 3065011416 position: 0 isvalid: true payloadsize: 2820 magic: 1 compresscodec: NoCompressionCodec crc: 811055132 payload: {"name": "Travis", msg: "Hey, what's up?"}
    offset: 3065011417 position: 1779 isvalid: true payloadsize: 2244 magic: 1 compresscodec: NoCompressionCodec crc: 151590202 payload: {"name": "Wale", msg: "Starving."}

Segment indexes map message offsets to their position in the log

The segment index maps offsets to their message’s position in the segment log.

The index file is memory mapped, and the offset lookup uses binary search to find the nearest offset less than or equal to the target offset.
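The lookup described above can be sketched as a plain binary search over sorted (offset, position) pairs. `lookup` is a hypothetical helper and the entries in the usage note are made-up values; a real index lives in a memory-mapped file rather than a Python list.

```python
def lookup(index_entries, target_offset):
    """Find the entry with the largest offset <= target_offset.

    index_entries is a list of (offset, position) pairs sorted by offset.
    Returns None when the target is smaller than every indexed offset.
    """
    lo, hi = 0, len(index_entries) - 1
    best = None
    while lo <= hi:
        mid = (lo + hi) // 2
        offset, position = index_entries[mid]
        if offset <= target_offset:
            best = (offset, position)  # candidate; look for a closer one to the right
            lo = mid + 1
        else:
            hi = mid - 1
    return best
```

For example, with entries `[(3065011416, 0), (3065011420, 7000)]`, looking up 3065011417 returns `(3065011416, 0)`: the reader starts at position 0 in the log and scans forward until it reaches the target offset.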

The index file is made up of 8-byte entries: 4 bytes to store the offset relative to the base offset and 4 bytes to store the position. The offset is stored relative to the base offset so that only 4 bytes are needed for it. For example, if the base offset is 3065011416, then rather than storing the subsequent offsets 3065011417 and 3065011418 in full, the index stores just 1 and 2.
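The entry layout is easy to reproduce with Python's `struct` module. This sketch assumes big-endian unsigned integers, and `pack_entry` and `unpack_entry` are hypothetical helpers rather than anything from Kafka.

```python
import struct

# 4-byte relative offset followed by 4-byte position (big-endian assumed).
ENTRY = struct.Struct(">II")


def pack_entry(base_offset: int, offset: int, position: int) -> bytes:
    """Store the offset relative to the segment's base offset."""
    return ENTRY.pack(offset - base_offset, position)


def unpack_entry(base_offset: int, raw: bytes):
    """Recover the absolute offset by adding the base offset back."""
    relative, position = ENTRY.unpack(raw)
    return base_offset + relative, position
```

Since the base offset is already encoded in the file name, it costs nothing extra to store, and each entry stays at 8 bytes no matter how large the absolute offsets grow.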

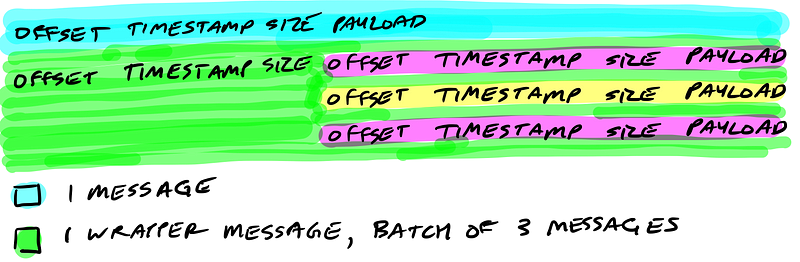

Kafka wraps compressed messages together

Producers sending compressed messages will compress the batch together and send it as the payload of a wrapped message. And as before, the data on disk is exactly the same as what the broker receives from the producer over the network and sends to its consumers.
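Here is a sketch of the wrapping idea using gzip. The framing (a 4-byte length before each inner message) and the dictionary standing in for the wrapper message are assumptions made for this example, not Kafka's actual format.

```python
import gzip
import struct


def wrap_batch(messages):
    """Compress a batch of messages into the payload of one wrapper message.

    Hypothetical framing: each inner message is prefixed with its 4-byte
    length, the frames are concatenated, and the result is gzip-compressed.
    """
    framed = b"".join(struct.pack(">I", len(m)) + m for m in messages)
    return {"codec": "gzip", "payload": gzip.compress(framed)}


def unwrap_batch(wrapper):
    """Decompress the wrapper's payload and split it back into messages."""
    framed = gzip.decompress(wrapper["payload"])
    messages, pos = [], 0
    while pos < len(framed):
        (size,) = struct.unpack_from(">I", framed, pos)
        pos += 4
        messages.append(framed[pos:pos + size])
        pos += size
    return messages
```

Compressing the batch as a whole lets the codec exploit repetition across messages, and the broker can store and forward the wrapper without decompressing it.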

Let’s Review

Now you know how Kafka storage internals work:

- Partitions are Kafka’s storage unit

- Partitions are split into segments

- Each segment is two files: its log and its index

- Indexes map offsets to their messages’ positions in the log and are used to look up messages

- Indexes store offsets relative to their segment’s base offset

- Compressed message batches are wrapped together as the payload of a wrapper message

- The data stored on disk is the same as what the broker receives from the producer over the network and sends to its consumers

Implementing Kafka in Golang

I’m writing an implementation of Kafka in Golang, Jocko. So far I’ve implemented reading and writing to segments on a single broker and am working on making it distributed. Follow along and give me a hand.

Reposted from: https://www.cnblogs.com/felixzh/p/6035675.html
