[ZZ] Adventures with Gamma-Correct Rendering

http://renderwonk.com/blog/index.php/archive/adventures-with-gamma-correct-rendering/

Adventures with Gamma-Correct Rendering

Aug 3rd, 2007 by Naty

I’ve been spending a fair amount of time recently making our game’s rendering pipeline gamma-correct, which turned out to involve quite a bit more than I first suspected. I’ll give some background and then outline the issues I encountered - hopefully others trying to “gamma-correct” their renderers will find this useful.

In a renderer implemented with no special attention to gamma-correctness, the entire pipeline is in the same color space as the final display surface - usually the sRGB color space. This is nice and consistent; colors (in textures, material editor color pickers, etc.) appear the same in-game as in the authoring app. Most game artists are very used to and comfortable with working in this space. The sRGB color space has the further advantage of being (approximately) perceptually uniform. This means that constant increments in this space correspond to constant increases in perceived brightness, which makes good use of the limited bit-depth of textures and frame buffers.

However, sRGB is not physically uniform; constant increments do not correspond to constant increases in physical intensity. This means that computing lighting and shading in this space is incorrect. Such computations should be performed in the physically uniform linear color space. Computing shading in sRGB space is like doing math in a world where 1+1=3.

The rest of this post is a bit lengthy; more details after the jump.

Theoretical considerations aside, why should we care about our math being correct? The answer is that the results just don’t look right when you shade in sRGB space. To illustrate, here are two renderings of a pair of spotlights shining on a plane (produced using Turtle).

The first image was rendered without any color-space conversions, so the spotlights were combined in sRGB space:

The second image was rendered with proper conversions, so the spotlights were combined in linear space:

The first image looks obviously wrong - two dim lights are combining in the middle to produce an unrealistically bright area, which is an example of the “1+1=3” effect I mentioned earlier. The second image looks correct.
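To put rough numbers on the “1+1=3” effect, here is a minimal sketch in Python. It assumes the standard sRGB transfer functions and a made-up pixel value of 0.4 (sRGB-encoded) for the area lit by a single spotlight; the function names are illustrative, not taken from any particular engine.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(C):
    """Encode a linear intensity in [0, 1] as an sRGB value."""
    return 12.92 * C if C <= 0.0031308 else 1.055 * C ** (1 / 2.4) - 0.055


one_light = 0.4  # hypothetical sRGB value of a pixel lit by one spotlight

# Naive pipeline: the two contributions are added as sRGB-encoded values.
naive_sum = min(one_light + one_light, 1.0)

# Gamma-correct pipeline: decode, add physical intensities, re-encode.
linear_sum = min(2.0 * srgb_to_linear(one_light), 1.0)
correct_sum = linear_to_srgb(linear_sum)

print("sRGB-space sum:  ", naive_sum)
print("linear-space sum:", correct_sum)
```

The sRGB-space sum comes out noticeably brighter than the linear-space one, which matches the overly bright overlap region in the first image.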

So how do we ensure our shading is performed in linear space? One approach would be to move the entire pipeline to that space. This is usually not feasible, for three reasons:

- 8-bit-per-channel textures and frame buffers lack the precision to represent linear values without objectionable banding, and it’s usually not practical to use floats for all textures, render targets and display buffers.

- Most applications used by artists to manipulate colors work in sRGB space (a few apps like Photoshop do allow selection of the working color space).

- The hardware platform used sometimes requires that the final display buffer is in sRGB space.
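The first point can be checked with a few lines of Python. This is only a sketch: it assumes the standard sRGB decoding function and simply counts how many 8-bit linear codes are left to represent what the 256 sRGB codes can express.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


# Re-quantize every 8-bit sRGB code as an 8-bit linear code.
linear_codes = [round(srgb_to_linear(i / 255) * 255) for i in range(256)]

# Distinct linear codes over the whole range, and over the darkest
# quarter of the sRGB range (codes 0..63), where banding is most visible.
distinct_total = len(set(linear_codes))
distinct_dark = len(set(linear_codes[:64]))

print("distinct linear codes overall:", distinct_total)
print("distinct linear codes for the 64 darkest sRGB codes:", distinct_dark)
```

Many dark sRGB codes collapse onto the same linear code, which is where the banding comes from.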

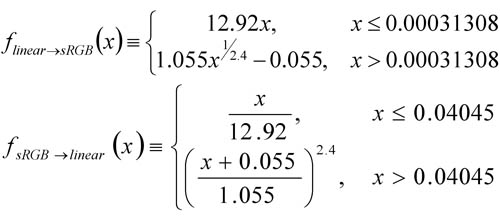

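Since most of the inputs to the rendering pipeline are in sRGB space, as well as (almost always) the final output, and we want most of the “stuff in the middle” to be in linear space, we end up doing a bunch of conversions between color spaces. Such conversions are done using the following functions, implemented in hardware (usually in approximate form), in shader code or in tools code:

$$\mathrm{toLinear}(c) = \begin{cases} c / 12.92, & c \le 0.04045 \\ \left(\dfrac{c + 0.055}{1.055}\right)^{2.4}, & c > 0.04045 \end{cases}$$

$$\mathrm{toSRGB}(C) = \begin{cases} 12.92\,C, & C \le 0.0031308 \\ 1.055\,C^{1/2.4} - 0.055, & C > 0.0031308 \end{cases}$$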

These functions are only defined over the 0-1 range; using them for values outside the range may yield unexpected results.
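For tools code, the two conversions could be written along these lines. This is a sketch using the standard sRGB constants; clamping the input to the 0-1 range is one possible policy for out-of-range values (an assumption of this example, not something the sRGB definition prescribes), and the function names are illustrative.

```python
def clamp01(x):
    """Clamp a value to the [0, 1] range the conversions are defined over."""
    return min(max(x, 0.0), 1.0)


def srgb_to_linear(c):
    """Decode an sRGB-encoded value to linear intensity."""
    c = clamp01(c)
    if c <= 0.04045:
        return c / 12.92  # linear toe near black
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(C):
    """Encode a linear intensity as an sRGB value."""
    C = clamp01(C)
    if C <= 0.0031308:
        return 12.92 * C  # linear toe near black
    return 1.055 * C ** (1 / 2.4) - 0.055


# Round-tripping every 8-bit code should give back the original value.
max_error = max(
    abs(linear_to_srgb(srgb_to_linear(i / 255)) - i / 255) for i in range(256)
)
print("max round-trip error:", max_error)
```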

Most modern GPUs support automatic linear to sRGB conversions on render target writes, and these are fairly simple to set up (see the Direct3D and OpenGL documentation). However, not all GPUs with this feature necessarily handle blending properly.

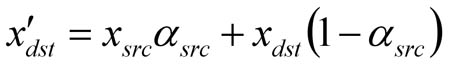

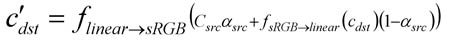

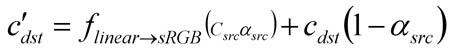

The correct thing to do is to perform blending like any other shading calculation - in linear space. Some GPUs screw this up, with varyingly bad results. I’ll discuss the alpha-blending case as an example, but similar problems exist for other blend modes. The following equations use lowercase c for values in sRGB space, and uppercase C for linear space (x is for generic values which can be in either space). Note that the alpha value itself represents a physical coverage amount, thus it is always in linear space and never has to be converted.

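As is well known, the alpha blend equation is (with src the incoming fragment color and dst the render target contents):

$$x_{\text{dst}} \leftarrow \alpha\, x_{\text{src}} + (1 - \alpha)\, x_{\text{dst}}$$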

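Since we are giving the GPU a color in linear space, and the render target color is in sRGB space before and after the blend operation, we want the GPU to do this:

$$c_{\text{dst}} \leftarrow \mathrm{toSRGB}\big(\alpha\, C_{\text{src}} + (1 - \alpha)\, \mathrm{toLinear}(c_{\text{dst}})\big)$$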

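The D3D10 and OpenGL extension specs require this behavior. However, most D3D9-class GPUs will convert to sRGB space, and then blend there:

$$c_{\text{dst}} \leftarrow \alpha\, \mathrm{toSRGB}(C_{\text{src}}) + (1 - \alpha)\, c_{\text{dst}}$$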

In this situation, shader calculations occur in linear space, and blending calculations occur in sRGB space. This is incorrect in theory; how much of a problem it is in practice is another question. If your game only uses blending for special effects, the results might not be too horrible. If you are using a ‘pass-per-light’ approach to combine lights using the blending hardware, then lights are being combined in sRGB space, which eliminates most of the benefits of having a gamma-correct rendering pipeline. Note that this problem is equally bad whether you are using the automatic hardware conversion or converting in the shader, since in both cases the conversion happens before the blend.
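The difference between the two behaviors can be sketched numerically. The Python below uses the notation from above (lowercase c for sRGB values, uppercase C for linear ones) and the standard sRGB functions; `blend_linear` and `blend_srgb` are illustrative names for the two behaviors described, not real API calls.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(C):
    """Encode a linear intensity in [0, 1] as an sRGB value."""
    return 12.92 * C if C <= 0.0031308 else 1.055 * C ** (1 / 2.4) - 0.055


def blend_linear(C_src, alpha, c_dst):
    """Desired behavior: decode the destination, blend in linear space, re-encode."""
    return linear_to_srgb(alpha * C_src + (1 - alpha) * srgb_to_linear(c_dst))


def blend_srgb(C_src, alpha, c_dst):
    """Problematic behavior: encode the source first, then blend in sRGB space."""
    return alpha * linear_to_srgb(C_src) + (1 - alpha) * c_dst


# 50% white over a black render target.
correct = blend_linear(1.0, 0.5, 0.0)
wrong = blend_srgb(1.0, 0.5, 0.0)
print("blend in linear space:", correct)
print("blend in sRGB space:  ", wrong)
```

With alpha at 0 or 1 the two agree; the discrepancy shows up for partial coverage, which is exactly the case that matters for translucency and for pass-per-light accumulation.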

What do you do if you don’t want sRGB-space blending, but you are stuck supporting GPUs which don’t handle blending across color spaces correctly? You could blend into an HDR linear buffer, which is then converted into sRGB in a post-process. If an HDR buffer is not practical, perhaps you could try an LDR linear-space buffer, but banding is likely to be a problem.

Using automatic hardware conversion on GPUs which don’t handle blending correctly is even worse if you use premultiplied alpha (Tom Forsyth has a nice explanation of why premultiplied alpha is good). Then the premultiplication occurs in the shader, before the hardware color-space conversion, and you get this:

$$c_{\text{dst}} \leftarrow \mathrm{toSRGB}(\alpha\, C_{\text{src}}) + (1 - \alpha)\, c_{\text{dst}}$$

Which looks really bad. In this case you can convert in the shader rather than using the automatic conversion, which leaves you no worse off than in the non-premultiplied case.
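A quick numeric sketch shows why this case is so much worse. The Python below assumes the standard sRGB encoding function; the two function names are illustrative labels for "premultiply in the shader, let the hardware convert" versus "convert in the shader, then premultiply".

```python
def linear_to_srgb(C):
    """Encode a linear intensity in [0, 1] as an sRGB value."""
    return 12.92 * C if C <= 0.0031308 else 1.055 * C ** (1 / 2.4) - 0.055


def premult_hardware_conversion(C_src, alpha, c_dst):
    """Shader outputs alpha * C_src; the hardware then encodes the
    premultiplied value and blends (ONE, 1 - alpha) in sRGB space."""
    return linear_to_srgb(alpha * C_src) + (1 - alpha) * c_dst


def premult_shader_conversion(C_src, alpha, c_dst):
    """Shader encodes the color first and premultiplies afterwards;
    equivalent to non-premultiplied blending in sRGB space."""
    return alpha * linear_to_srgb(C_src) + (1 - alpha) * c_dst


# 50% white over a white render target should stay white.
hw = premult_hardware_conversion(1.0, 0.5, 1.0)
sh = premult_shader_conversion(1.0, 0.5, 1.0)
print("hardware conversion:", hw)  # overshoots 1.0 and will saturate
print("shader conversion:  ", sh)
```

Because the encoding curve brightens the already alpha-scaled color, the source contribution is far too large, so translucent surfaces blow out toward white.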

sRGB to linear conversions on texture reads are also simple to set up (see the Direct3D and OpenGL documentation) and inexpensive, but again much D3D9-class hardware botches the conversion by doing it after filtering, instead of before. This means that filtering is done in sRGB space. In practice, this is usually not so bad.
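The order of operations can be illustrated with a two-texel linear filter. This Python sketch assumes the standard sRGB decoding function and deliberately picks a worst case (a black texel next to a white one); for neighboring texels with similar values the two orders give much closer results, which is why the error is usually tolerable.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def lerp(a, b, t):
    """Linear interpolation, as used by bilinear filtering along one axis."""
    return a + (b - a) * t


texel_a, texel_b = 0.0, 1.0  # sRGB-encoded neighbors (worst case)
t = 0.5                      # sample halfway between them

# Correct order: convert each texel to linear, then filter.
convert_then_filter = lerp(srgb_to_linear(texel_a), srgb_to_linear(texel_b), t)

# D3D9-class behavior described above: filter in sRGB, then convert.
filter_then_convert = srgb_to_linear(lerp(texel_a, texel_b, t))

print("convert, then filter:", convert_then_filter)
print("filter, then convert:", filter_then_convert)
```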

One detail which is often overlooked is the need to compute MIP-maps in linear space - given the cascading nature of MIP-map computations, results can be quite wrong otherwise. Fortunately, most libraries used for this purpose support gamma-correct MIP generation (including NVTextureTools and D3DX). Typically, the library will take flags which specify the color spaces for the input texture and the output MIP chain; it will then convert the input texture to linear space, do all filtering there (ideally in higher precision than the result format), and finally convert to the appropriate color space on output.
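As a rough illustration of what such a library does internally, here is a single-channel 2x2 box-filter downsample in Python. It is a sketch, not the algorithm of NVTextureTools or D3DX: it assumes the standard sRGB functions, a texture stored as a list of rows with even dimensions, and sRGB for both input and output.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(C):
    """Encode a linear intensity in [0, 1] as an sRGB value."""
    return 12.92 * C if C <= 0.0031308 else 1.055 * C ** (1 / 2.4) - 0.055


def next_mip_level(texels):
    """Build the next MIP level of an sRGB texture with a 2x2 box filter,
    averaging in linear space (in float precision) and re-encoding to sRGB."""
    height, width = len(texels), len(texels[0])
    level = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            block = [texels[y + dy][x + dx] for dy in (0, 1) for dx in (0, 1)]
            average = sum(srgb_to_linear(c) for c in block) / 4.0
            row.append(linear_to_srgb(average))
        level.append(row)
    return level


# A black/white checkerboard should average to 50% physical intensity,
# which is considerably brighter than 0.5 once encoded as sRGB.
checker = [[0.0, 1.0], [1.0, 0.0]]
mip = next_mip_level(checker)
print("gamma-correct 1x1 level:", mip[0][0])
print("naive sRGB average:     ", sum(sum(r) for r in checker) / 4.0)
```

For a full chain, each level would ideally be computed from linear float data rather than from the re-encoded previous level, so that quantization errors do not accumulate.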

All color-picker values in the DCC (Digital Content Creation; Maya, etc.) applications need to be converted from sRGB space to linear space before being passed on to the rendering pipeline (since they are typically stored as floats, this conversion can be done in the tools). This includes material colors, light source colors, etc. Cutting corners here will bring down the wrath of the artists upon you when their carefully selected colors no longer match the shaded result. Note that only values between 0 and 1 can be meaningfully converted, so this doesn’t apply to unbounded quantities like light intensity sliders.
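In an export tool this might look like the following Python sketch. The light record and its field names (`color`, `intensity`) are hypothetical, made up for the example; the conversion is the standard sRGB decoding function applied per channel, and the unbounded intensity is passed through untouched.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear intensity."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def picker_color_to_linear(rgb):
    """Convert a color-picker RGB triple (sRGB, floats in [0, 1]) to linear."""
    return tuple(srgb_to_linear(channel) for channel in rgb)


def export_light(light):
    """Convert the picked color; leave the unbounded intensity as authored."""
    return {
        "color": picker_color_to_linear(light["color"]),
        "intensity": light["intensity"],
    }


# Hypothetical light as authored in the DCC app.
authored = {"color": (1.0, 0.5, 0.25), "intensity": 3.0}
exported = export_light(authored)
print(exported)
```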

If your artists typically paint vertex colors in the DCC app, these are most likely visualized in sRGB space as well and will need to be converted to linear space (ideally at export time). Certain grayscale values like ambient occlusion may need to be converted as well if manually painted. As Chris Tchou points out in his talk (see list of references at end), vertex colors rarely need to be stored in sRGB space since quantization errors are hidden by the (high-precision) interpolation.

If lighting information (which includes environment maps, lightmaps, baked vertex lighting, SH light probes, etc.) is stored in an HDR format (which is much preferable if you can do it!) then it is linear and no conversions are needed. If you have to store lighting in an LDR format then you might want to store it in sRGB space. Note that light baking tools will need to be configured to generate their result in the desired space, and to convert any input textures they use. Ideally light colors would also be converted while baking, so that baked light sources match dynamic ones.

All of these conversions need to be done in tools for export, light baking and previewing. All keyframes of any sRGB animated quantities need to be converted as well. All in all, there is a lot of code that needs to be touched.

After all this, you can sit back and bask in everyone’s adulation at the resulting realistic renders, right? Well, not necessarily; it turns out that there are some visual side-effects of moving to a gamma-correct rendering pipeline which may also need to be addressed. I have to start packing for SIGGRAPH, so the details will have to wait for a future post.

If you want to learn more about this subject, there are a bunch of good resources available. Simon Brown’s web site has a good summary. Charles Poynton’s Gamma FAQ goes into more details on the theory, and his book has a really good in-depth discussion. The slides for Chris Tchou’s Meltdown 2006 presentation, “HDR The Bungie Way” (available here) go into great detail on some of the more practical issues, like the approximations to the sRGB curve used by typical hardware.


Reposted from: https://www.cnblogs.com/kylegui/p/3871183.html
