Scrapy Architecture overview -- official documentation

Original article: https://doc.scrapy.org/en/latest/topics/architecture.html

This document describes the architecture of Scrapy and how its components interact.

Overview

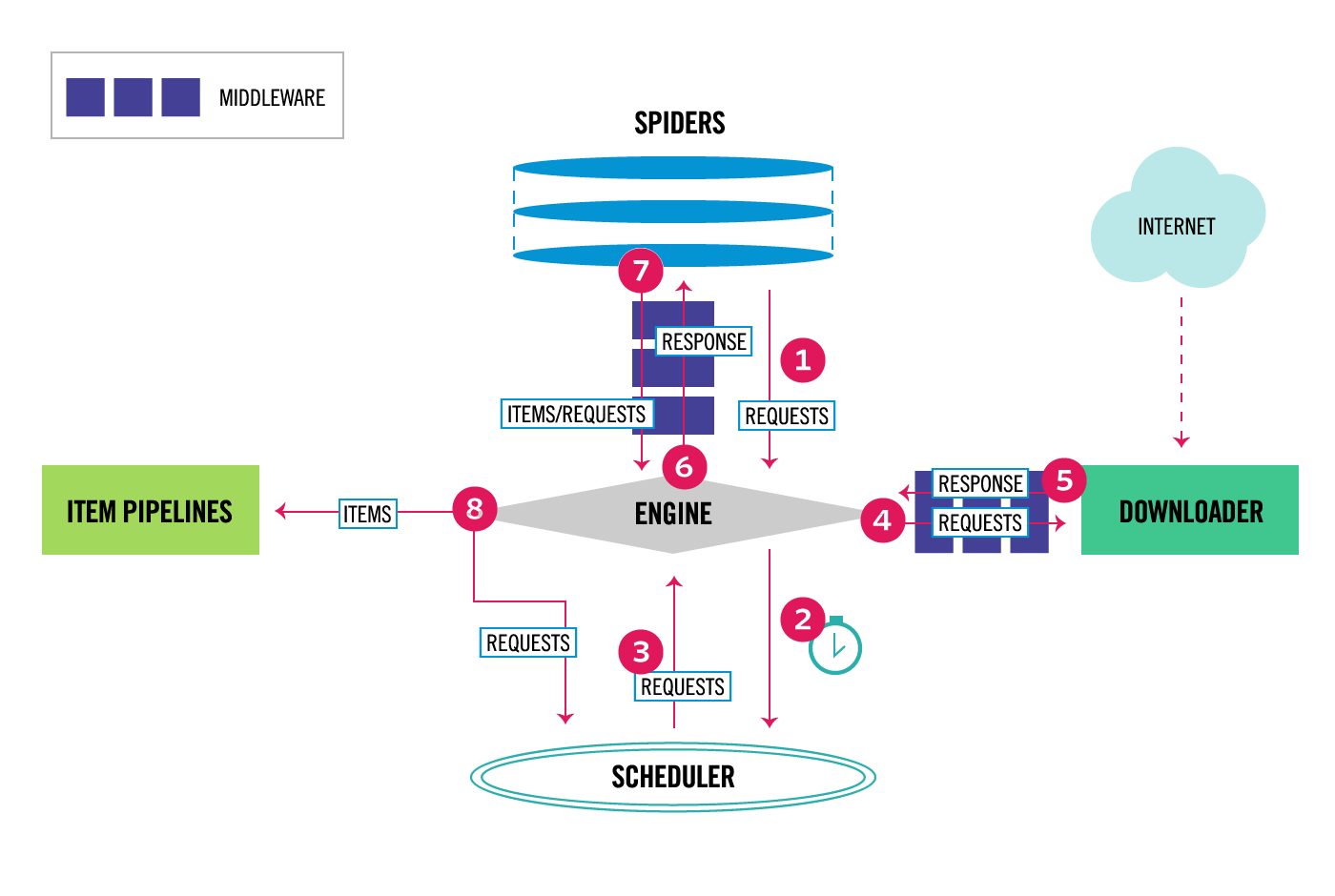

The diagram above shows an overview of the Scrapy architecture with its components and an outline of the data flow that takes place inside the system (shown by the red arrows). A brief description of the components is included below with links for more detailed information about them. The data flow is also described below.

Data flow

The data flow in Scrapy is controlled by the execution engine, and goes like this:

- The Engine gets the initial Requests to crawl from the Spider.

- The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.

- The Scheduler returns the next Requests to the Engine.

- The Engine sends the Requests to the Downloader, passing through the Downloader Middlewares (see process_request()).

- Once the page finishes downloading, the Downloader generates a Response (with that page) and sends it to the Engine, passing through the Downloader Middlewares (see process_response()).

- The Engine receives the Response from the Downloader and sends it to the Spider for processing, passing through the Spider Middleware (see process_spider_input()).

- The Spider processes the Response and returns scraped items and new Requests (to follow) to the Engine, passing through the Spider Middleware (see process_spider_output()).

- The Engine sends processed items to Item Pipelines, then sends processed Requests to the Scheduler and asks for possible next Requests to crawl.

- The process repeats (from step 1) until there are no more requests from the Scheduler.
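The loop described above can be sketched as a toy model in plain Python. This is not Scrapy's actual engine: `FAKE_WEB`, `download`, `parse` and `run_engine` are hypothetical names invented for illustration, URLs stand in for Request objects, dicts stand in for Responses and items, and middlewares are left out.

```python
from collections import deque

# Hypothetical in-memory "website" so the sketch needs no network access.
FAKE_WEB = {
    "http://example.com/": {"title": "Home", "links": ["http://example.com/a"]},
    "http://example.com/a": {"title": "Page A", "links": []},
}


def download(url):
    """Downloader stand-in: turn a request (a URL here) into a response."""
    return {"url": url, **FAKE_WEB[url]}


def parse(response):
    """Spider stand-in: yield one scraped item, then requests to follow."""
    yield {"item": response["title"]}
    for link in response["links"]:
        yield link  # a plain string plays the role of a new Request


def run_engine(start_urls):
    """Engine stand-in: move data between scheduler, downloader and spider."""
    scheduler = deque(start_urls)   # initial Requests are scheduled
    pipeline_output = []            # items that reached the "Item Pipeline"
    while scheduler:                # repeat until the Scheduler is empty
        url = scheduler.popleft()   # Scheduler returns the next Request
        response = download(url)    # Downloader produces a Response
        for result in parse(response):  # Spider processes the Response
            if isinstance(result, dict):
                pipeline_output.append(result)  # items go to the pipeline
            else:
                scheduler.append(result)        # new Requests are scheduled
    return pipeline_output


items = run_engine(["http://example.com/"])
print(items)
```

The real engine does the same routing asynchronously and passes every request, response and item through the middleware hooks named in the list.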

Components

Scrapy Engine

The engine is responsible for controlling the data flow between all components of the system, and triggering events when certain actions occur. See the Data Flow section above for more details.

Scheduler

The Scheduler receives requests from the engine and enqueues them, feeding them back to the engine later when the engine asks for them.

Downloader

The Downloader is responsible for fetching web pages and feeding them to the engine which, in turn, feeds them to the spiders.

Spiders

Spiders are custom classes written by Scrapy users to parse responses and extract from them either items (aka scraped items) or additional requests to follow. For more information see Spiders.

Item Pipeline

The Item Pipeline is responsible for processing the items once they have been extracted (or scraped) by the spiders. Typical tasks include cleansing, validation and persistence (like storing the item in a database). For more information see Item Pipeline.
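As a minimal sketch of the cleansing task, an item pipeline is a plain class exposing `process_item(item, spider)`. The class name `CleanPricePipeline` and the `title` and `price` fields are assumptions made up for this example, and the item is taken to be a dict.

```python
class CleanPricePipeline:
    """Hypothetical pipeline: tidy the title and turn the price into a float."""

    def process_item(self, item, spider):
        # Cleansing: remove stray whitespace around the title.
        item["title"] = item["title"].strip()
        # Cleansing: "$1,299.50" -> 1299.5
        raw_price = str(item["price"]).replace("$", "").replace(",", "")
        item["price"] = float(raw_price)
        # Returning the item passes it on to the next pipeline stage.
        return item


# Usage with a hand-made item; no running crawler is needed for this check.
pipeline = CleanPricePipeline()
cleaned = pipeline.process_item({"title": "  Widget ", "price": "$1,299.50"}, spider=None)
print(cleaned)
```

In a real project the class would be enabled through the `ITEM_PIPELINES` setting, and a validation step would typically reject a bad item by raising `scrapy.exceptions.DropItem` instead of returning it.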

Downloader middlewares

Downloader middlewares are specific hooks that sit between the Engine and the Downloader and process requests when they pass from the Engine to the Downloader, and responses that pass from Downloader to the Engine.

Use a Downloader middleware if you need to do one of the following:

- process a request just before it is sent to the Downloader (i.e. right before Scrapy sends the request to the website);

- change received response before passing it to a spider;

- send a new Request instead of passing received response to a spider;

- pass response to a spider without fetching a web page;

- silently drop some requests.

For more information see Downloader Middleware.
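The first use case above might look roughly like the following sketch. It assumes the `process_request(request, spider)` and `process_response(request, response, spider)` method signatures documented for downloader middlewares at the time this page was written. The class name, the `X-Crawler` header and the `SimpleNamespace` stand-ins for Request and Response are invented for illustration.

```python
from collections import Counter
from types import SimpleNamespace


class DefaultHeaderMiddleware:
    """Hypothetical downloader middleware: tag requests, count response statuses."""

    def __init__(self):
        self.status_counts = Counter()

    def process_request(self, request, spider):
        # Runs as the request travels from the Engine to the Downloader.
        request.headers.setdefault("X-Crawler", "demo-bot")
        return None  # None means: continue handling this request normally

    def process_response(self, request, response, spider):
        # Runs as the response travels from the Downloader back to the Engine.
        self.status_counts[response.status] += 1
        return response  # hand the (unchanged) response onwards


# Stand-in objects so the sketch runs without Scrapy installed.
fake_request = SimpleNamespace(headers={})
fake_response = SimpleNamespace(status=200)

middleware = DefaultHeaderMiddleware()
middleware.process_request(fake_request, spider=None)
returned = middleware.process_response(fake_request, fake_response, spider=None)
print(fake_request.headers, dict(middleware.status_counts))
```

Returning a Response or a new Request from `process_request` instead of `None` is what covers the other use cases in the list, such as answering without fetching a page.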

Spider middlewares

Spider middlewares are specific hooks that sit between the Engine and the Spiders and are able to process spider input (responses) and output (items and requests).

Use a Spider middleware if you need to:

- post-process output of spider callbacks - change/add/remove requests or items;

- post-process start_requests;

- handle spider exceptions;

- call errback instead of callback for some of the requests based on response content.

For more information see Spider Middleware.
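Post-processing spider output could be sketched as below, assuming the `process_spider_output(response, result, spider)` signature and dict-based items. `RequireTitleMiddleware` and the `title` field are hypothetical, and a plain string stands in for a Request so the example runs without Scrapy.

```python
class RequireTitleMiddleware:
    """Hypothetical spider middleware: remove items that lack a title."""

    def process_spider_output(self, response, result, spider):
        # `result` is the iterable of items and requests the callback produced.
        for element in result:
            if isinstance(element, dict) and not element.get("title"):
                continue  # silently remove incomplete items
            yield element  # keep complete items and everything else (requests)


# Usage with hand-made callback output.
spider_output = [{"title": "A"}, {"title": ""}, "request-placeholder"]
middleware = RequireTitleMiddleware()
kept = list(middleware.process_spider_output(None, spider_output, None))
print(kept)
```

Because the method is a generator, it filters the callback output lazily rather than collecting it in memory first.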

Event-driven networking

Scrapy is written with Twisted, a popular event-driven networking framework for Python. Thus, it’s implemented using non-blocking (aka asynchronous) code for concurrency.
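To illustrate what non-blocking concurrency buys, here is a small sketch that deliberately uses Python's standard `asyncio` module instead of Twisted, so it runs without extra dependencies. `fake_download` and its delays are made up, and `asyncio.sleep` stands in for waiting on the network.

```python
import asyncio

finished = []  # records the order in which the simulated downloads complete


async def fake_download(name, delay):
    """Pretend to fetch a page; awaiting the sleep yields control to other tasks."""
    await asyncio.sleep(delay)
    finished.append(name)
    return f"response for {name}"


async def crawl():
    # All three "downloads" are in flight at once on a single thread.
    return await asyncio.gather(
        fake_download("slow", 0.15),
        fake_download("fast", 0.05),
        fake_download("medium", 0.10),
    )


responses = asyncio.run(crawl())
print(finished)
```

The downloads finish in order of their delay rather than the order they were started, and the total wait is roughly the longest single delay instead of the sum. The same idea lets a crawler keep many requests open at once.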

Reposted from: https://www.cnblogs.com/davidwang456/p/7576227.html
