Building, Installing, Configuring, and Running Hadoop 2.5.2 on Windows 7 (64-bit): Single-Node Setup


1 Reference Post

http://www.srccodes.com/p/article/38/build-install-configure-run-apache-hadoop-2.2.0-microsoft-windows-os

2 Prerequisites

1. Win7_x64

2. Hadoop version: hadoop-2.5.2-src.tar.gz, from http://mirrors.ibiblio.org/apache/hadoop/common/hadoop-2.5.2/

3. JDK version: 1.6 (in my experience, hadoop-2.5.2 does not build with a newer JDK)

4. Microsoft Windows SDK v7.1

5. Maven 3.1.1

6. Protocol Buffers 2.5.0

7. Cygwin

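For lack of time I have not finished my own installation notes, so the reference post is copied below; it is already quite detailed.

I ran into two build problems during installation. Note the following two points:

1. JDK version: the JDK architecture (x64 or i386) must match the operating system. In my experience, hadoop-2.5.2 currently does not build with anything newer than JDK 1.6.

2. When installing Cygwin, the required packages have to be selected manually. Besides the usual build packages, the zlib package must also be installed; otherwise Hadoop will not compile.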

3 Build Hadoop bin distribution for Windows

Download and install Microsoft Windows SDK v7.1.

Download and install the Unix command-line tool Cygwin. (Install the relevant packages as well. My build failed for several days because the zlib package was missing.)

Download and install Maven 3.1.1.

Download Protocol Buffers 2.5.0 and extract to a folder (say c:\protobuf).

Add Environment Variables JAVA_HOME, M2_HOME and Platform if not added already.

Add Environment Variables:

Note :

The variable name Platform is case sensitive. Its value is x64 when building on a 64-bit system and Win32 when building on a 32-bit system.

If the JDK installation path contains a space, use the Windows short name (say 'PROGRA~1' for 'Program Files') in the JAVA_HOME environment variable.

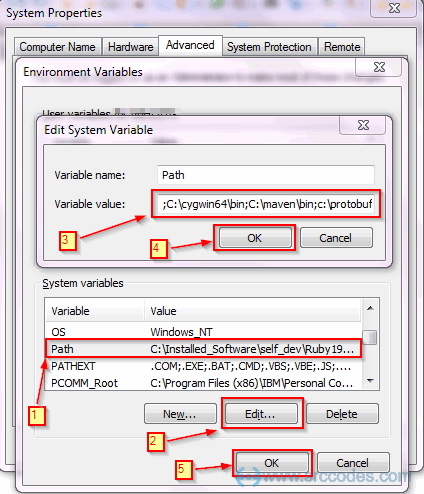

Edit Path Variable to add bin directory of Cygwin (say C:\cygwin64\bin), bin directory of Maven (say C:\maven\bin) and installation path of Protocol Buffers (say c:\protobuf).
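The environment settings described above can be sanity-checked before starting the build. The following is a minimal Python sketch, not part of the original post; the function name `check_build_env` and the selection of checks are illustrative assumptions derived from the notes above.

```python
import os

# Variables the post asks for before building.
REQUIRED_VARS = ("JAVA_HOME", "M2_HOME", "Platform")


def check_build_env(env):
    """Return a list of problems found in the given environment mapping."""
    problems = []
    for name in REQUIRED_VARS:
        if not env.get(name):
            problems.append(f"{name} is not set")
    # The post says Platform must be x64 (64-bit build) or Win32 (32-bit build).
    platform = env.get("Platform")
    if platform and platform not in ("x64", "Win32"):
        problems.append(f"Platform should be x64 or Win32, got {platform!r}")
    # The post recommends the short name when the JDK path contains a space.
    if " " in env.get("JAVA_HOME", ""):
        problems.append("JAVA_HOME contains a space; use the short name (e.g. PROGRA~1)")
    return problems


if __name__ == "__main__":
    for line in check_build_env(os.environ) or ["environment looks fine"]:
        print(line)
```

On Windows, Python's `os.environ` treats variable names case-insensitively, so this sketch cannot confirm the exact spelling of `Platform`; that still has to be checked by hand in the system settings.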

Download hadoop-2.2.0-src.tar.gz and extract it to a folder with a short path (say c:\hdfs) to avoid runtime problems caused by the maximum path length limit in Windows.

Select Start --> All Programs --> Microsoft Windows SDK v7.1 and open Windows SDK 7.1 Command Prompt. Change directory to Hadoop source code folder (c:\hdfs). Execute mvn package with options -Pdist,native-win -DskipTests -Dtar to create Windows binary tar distribution.

Windows SDK 7.1 Command Prompt

    Setting SDK environment relative to C:\Program Files\Microsoft SDKs\Windows\v7.1\.
    Targeting Windows 7 x64 Debug

    C:\Program Files\Microsoft SDKs\Windows\v7.1>cd c:\hdfs

    C:\hdfs>mvn package -Pdist,native-win -DskipTests -Dtar
    [INFO] Scanning for projects...
    [INFO] ------------------------------------------------------------------------
    [INFO] Reactor Build Order:
    [INFO]
    [INFO] Apache Hadoop Main
    [INFO] Apache Hadoop Project POM
    [INFO] Apache Hadoop Annotations
    [INFO] Apache Hadoop Assemblies
    [INFO] Apache Hadoop Project Dist POM
    [INFO] Apache Hadoop Maven Plugins
    [INFO] Apache Hadoop Auth
    [INFO] Apache Hadoop Auth Examples
    [INFO] Apache Hadoop Common
    [INFO] Apache Hadoop NFS
    [INFO] Apache Hadoop Common Project
    [INFO] Apache Hadoop HDFS
    [INFO] Apache Hadoop HttpFS
    [INFO] Apache Hadoop HDFS BookKeeper Journal
    [INFO] Apache Hadoop HDFS-NFS
    [INFO] Apache Hadoop HDFS Project
    [INFO] hadoop-yarn
    [INFO] hadoop-yarn-api
    [INFO] hadoop-yarn-common
    [INFO] hadoop-yarn-server
    [INFO] hadoop-yarn-server-common
    [INFO] hadoop-yarn-server-nodemanager
    [INFO] hadoop-yarn-server-web-proxy
    [INFO] hadoop-yarn-server-resourcemanager
    [INFO] hadoop-yarn-server-tests
    [INFO] hadoop-yarn-client
    [INFO] hadoop-yarn-applications
    [INFO] hadoop-yarn-applications-distributedshell
    [INFO] hadoop-mapreduce-client
    [INFO] hadoop-mapreduce-client-core
    [INFO] hadoop-yarn-applications-unmanaged-am-launcher
    [INFO] hadoop-yarn-site
    [INFO] hadoop-yarn-project
    [INFO] hadoop-mapreduce-client-common
    [INFO] hadoop-mapreduce-client-shuffle
    [INFO] hadoop-mapreduce-client-app
    [INFO] hadoop-mapreduce-client-hs
    [INFO] hadoop-mapreduce-client-jobclient
    [INFO] hadoop-mapreduce-client-hs-plugins
    [INFO] Apache Hadoop MapReduce Examples
    [INFO] hadoop-mapreduce
    [INFO] Apache Hadoop MapReduce Streaming
    [INFO] Apache Hadoop Distributed Copy
    [INFO] Apache Hadoop Archives
    [INFO] Apache Hadoop Rumen
    [INFO] Apache Hadoop Gridmix
    [INFO] Apache Hadoop Data Join
    [INFO] Apache Hadoop Extras
    [INFO] Apache Hadoop Pipes
    [INFO] Apache Hadoop Tools Dist
    [INFO] Apache Hadoop Tools
    [INFO] Apache Hadoop Distribution
    [INFO] Apache Hadoop Client
    [INFO] Apache Hadoop Mini-
    [INFO]
    [INFO] ------------------------------------------------------------------------
    [INFO] Building Apache Hadoop Main 2.2.0
    [INFO] ------------------------------------------------------------------------
    [INFO]
    [INFO] --- maven-enforcer-plugin:1.3.1:enforce (default) @ hadoop-main ---
    [INFO]
    [INFO] --- maven-site-plugin:3.0:attach-descriptor (attach-descriptor) @ hadoop-main ---

Note: I have pasted only the first few lines of the huge log generated by Maven. This build step requires an Internet connection, as Maven will download all the required dependencies.

If everything goes well in the previous step, then native distribution hadoop-2.2.0.tar.gz will be created inside C:\hdfs\hadoop-dist\target\hadoop-2.2.0 directory.
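Because the exact output location can differ between versions, it may be easier to search for the tarball than to rely on a fixed path. The sketch below is a hedged Python example, not taken from the original post; `find_dist_tarballs` is a hypothetical helper name, and it simply looks for any `hadoop-*.tar.gz` under the `hadoop-dist\target` folder of the source tree.

```python
from pathlib import Path


def find_dist_tarballs(source_root):
    """Return hadoop-*.tar.gz files found anywhere under <source_root>/hadoop-dist/target."""
    target = Path(source_root) / "hadoop-dist" / "target"
    if not target.is_dir():
        # The build has not produced a target folder yet (or the root is wrong).
        return []
    return sorted(target.rglob("hadoop-*.tar.gz"))


if __name__ == "__main__":
    # c:/hdfs is the source folder used in the post.
    for tarball in find_dist_tarballs("c:/hdfs"):
        print(tarball)
```

An empty result after a build that reported success usually means the `-Dtar` option was omitted or the wrong source folder was passed in.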

4 Install Hadoop

Extract hadoop-2.2.0.tar.gz to a folder (say c:\hadoop).

Add Environment Variable HADOOP_HOME and edit Path Variable to add bin directory of HADOOP_HOME (say C:\hadoop\bin).


5 Configure Hadoop

Make the following changes to configure Hadoop.

File: C:\hadoop\etc\hadoop\core-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>

fs.defaultFS:

The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The URI's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The URI's authority is used to determine the host, port, etc. for a filesystem.
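To make the scheme and authority rule concrete, the following Python sketch splits a default file system URI into the pieces the description mentions. It is only an illustration of the naming rule, not Hadoop's own code; `describe_default_fs` is a hypothetical helper name.

```python
from urllib.parse import urlparse


def describe_default_fs(uri):
    """Split a file system URI into the parts the fs.defaultFS description refers to."""
    parsed = urlparse(uri)
    return {
        # The scheme selects the property that names the implementation class.
        "impl_property": f"fs.{parsed.scheme}.impl",
        # The authority supplies the host and port.
        "host": parsed.hostname,
        "port": parsed.port,
    }


print(describe_default_fs("hdfs://localhost:9000"))
# {'impl_property': 'fs.hdfs.impl', 'host': 'localhost', 'port': 9000}
```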

File: C:\hadoop\etc\hadoop\hdfs-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/hadoop/data/dfs/namenode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/hadoop/data/dfs/datanode</value>
      </property>
    </configuration>

Note: Create the namenode and datanode directories under c:/hadoop/data/dfs/.

dfs.datanode.data.dir:

Determines where on the local filesystem a DFS data node should store its blocks. If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. Directories that do not exist are ignored.

dfs.namenode.name.dir:

Determines where on the local filesystem the DFS name node should store the name table(fsimage). If this is a comma-delimited list of directories then the name table is replicated in all of the directories, for redundancy.

dfs.replication:

Default block replication. The actual number of replicas can be specified when the file is created. The default is used if replication is not specified at creation time.
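Since directories that do not exist are ignored, it helps to create the namenode and datanode folders before the first start. A minimal Python sketch follows, assuming the c:/hadoop/data/dfs layout used in this post; the helper name `create_dfs_dirs` is hypothetical.

```python
from pathlib import Path


def create_dfs_dirs(dfs_root):
    """Create the namenode and datanode directories under dfs_root if they are missing."""
    created = []
    for name in ("namenode", "datanode"):
        path = Path(dfs_root) / name
        # parents=True builds intermediate folders; exist_ok=True makes reruns harmless.
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created
```

Calling `create_dfs_dirs("c:/hadoop/data/dfs")` would produce the two folders referenced by dfs.namenode.name.dir and dfs.datanode.data.dir above.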

File: C:\hadoop\etc\hadoop\yarn-site.xml

    <?xml version="1.0"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.application.classpath</name>
        <value>%HADOOP_HOME%\etc\hadoop,%HADOOP_HOME%\share\hadoop\common\*,%HADOOP_HOME%\share\hadoop\common\lib\*,%HADOOP_HOME%\share\hadoop\mapreduce\*,%HADOOP_HOME%\share\hadoop\mapreduce\lib\*,%HADOOP_HOME%\share\hadoop\hdfs\*,%HADOOP_HOME%\share\hadoop\hdfs\lib\*,%HADOOP_HOME%\share\hadoop\yarn\*,%HADOOP_HOME%\share\hadoop\yarn\lib\*</value>
      </property>
    </configuration>

yarn.application.classpath:

CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries.

yarn.nodemanager.aux-services.mapreduce.shuffle.class:

The auxiliary service class to use. Default value is org.apache.hadoop.mapred.ShuffleHandler

yarn.nodemanager.aux-services:

The auxiliary service name, set here to mapreduce_shuffle.
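The yarn.application.classpath value is easier to review once it is split into its entries. The Python sketch below is an illustration only (Hadoop and the Windows shell do the real expansion); `expand_classpath` is a hypothetical helper, and the sample value is a shortened version of the one in yarn-site.xml.

```python
def expand_classpath(value, hadoop_home):
    """Split a comma-separated classpath value and substitute %HADOOP_HOME%."""
    entries = [entry.strip() for entry in value.split(",") if entry.strip()]
    return [entry.replace("%HADOOP_HOME%", hadoop_home) for entry in entries]


sample = r"%HADOOP_HOME%\etc\hadoop,%HADOOP_HOME%\share\hadoop\common\*"
for entry in expand_classpath(sample, r"C:\hadoop"):
    print(entry)
```

Printing the entries one per line makes a missing comma or a mistyped folder name much easier to spot than in the single long value.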

File: C:\hadoop\etc\hadoop\mapred-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

mapreduce.framework.name:

The runtime framework for executing MapReduce jobs. Can be one of local, classic or yarn.
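All four site files share the same configuration/property/name/value layout, so one small reader can be used to double-check what was actually saved. The following Python sketch uses only the standard library; `read_site_properties` is a hypothetical helper, and the inline sample mirrors the mapred-site.xml shown above.

```python
import xml.etree.ElementTree as ET


def read_site_properties(xml_text):
    """Return a dict of name -> value from a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


SAMPLE = """<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>"""

print(read_site_properties(SAMPLE))
# {'mapreduce.framework.name': 'yarn'}
```

For a file on disk, `ET.parse(path).getroot()` can take the place of `ET.fromstring(xml_text)`.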

6 Format namenode

For the first time only, namenode needs to be formatted.

Command Prompt

    Microsoft Windows [Version 6.1.7601]
    Copyright (c) 2009 Microsoft Corporation. All rights reserved.

    C:\Users\abhijitg>cd c:\hadoop\bin

    c:\hadoop\bin>hdfs namenode -format
    13/11/03 18:07:47 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG: host = ABHIJITG/x.x.x.x
    STARTUP_MSG: args = [-format]
    STARTUP_MSG: version = 2.2.0
    STARTUP_MSG: classpath = <classpath jars here>
    STARTUP_MSG: build = Unknown -r Unknown; compiled by ABHIJITG on 2013-11-01T13:42Z
    STARTUP_MSG: java = 1.7.0_03
    ************************************************************/
    Formatting using clusterid: CID-1af0bd9f-efee-4d4e-9f03-a0032c22e5eb
    13/11/03 18:07:48 INFO namenode.HostFileManager: read includes:
    HostSet(
    )
    13/11/03 18:07:48 INFO namenode.HostFileManager: read excludes:
    HostSet(
    )
    13/11/03 18:07:48 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
    13/11/03 18:07:48 INFO util.GSet: Computing capacity for map BlocksMap
    13/11/03 18:07:48 INFO util.GSet: VM type = 64-bit
    13/11/03 18:07:48 INFO util.GSet: 2.0% max memory = 888.9 MB
    13/11/03 18:07:48 INFO util.GSet: capacity = 2^21 = 2097152 entries
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: defaultReplication = 1
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: maxReplication = 512
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: minReplication = 1
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: encryptDataTransfer = false
    13/11/03 18:07:48 INFO namenode.FSNamesystem: fsOwner = ABHIJITG (auth:SIMPLE)
    13/11/03 18:07:48 INFO namenode.FSNamesystem: supergroup = supergroup
    13/11/03 18:07:48 INFO namenode.FSNamesystem: isPermissionEnabled = true
    13/11/03 18:07:48 INFO namenode.FSNamesystem: HA Enabled: false
    13/11/03 18:07:48 INFO namenode.FSNamesystem: Append Enabled: true
    13/11/03 18:07:49 INFO util.GSet: Computing capacity for map INodeMap
    13/11/03 18:07:49 INFO util.GSet: VM type = 64-bit
    13/11/03 18:07:49 INFO util.GSet: 1.0% max memory = 888.9 MB
    13/11/03 18:07:49 INFO util.GSet: capacity = 2^20 = 1048576 entries
    13/11/03 18:07:49 INFO namenode.NameNode: Caching file names occuring more than 10 times
    13/11/03 18:07:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
    13/11/03 18:07:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
    13/11/03 18:07:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
    13/11/03 18:07:49 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
    13/11/03 18:07:49 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
    13/11/03 18:07:49 INFO util.GSet: Computing capacity for map Namenode Retry Cache
    13/11/03 18:07:49 INFO util.GSet: VM type = 64-bit
    13/11/03 18:07:49 INFO util.GSet: 0.029999999329447746% max memory = 888.9 MB
    13/11/03 18:07:49 INFO util.GSet: capacity = 2^15 = 32768 entries
    13/11/03 18:07:49 INFO common.Storage: Storage directory \hadoop\data\dfs\namenode has been successfully formatted.
    13/11/03 18:07:49 INFO namenode.FSImage: Saving image file \hadoop\data\dfs\namenode\current\fsimage.ckpt_0000000000000000000 using no compression
    13/11/03 18:07:49 INFO namenode.FSImage: Image file \hadoop\data\dfs\namenode\current\fsimage.ckpt_0000000000000000000 of size 200 bytes saved in 0 seconds.
    13/11/03 18:07:49 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    13/11/03 18:07:49 INFO util.ExitUtil: Exiting with status 0
    13/11/03 18:07:49 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at ABHIJITG/x.x.x.x
    ************************************************************/
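Because formatting is needed only once, and formatting again discards the existing namenode metadata, a quick check before running the command can be useful. The Python sketch below relies on the fact that a formatted namenode directory contains a current/VERSION file; it is a simple heuristic, not an official Hadoop check, and `namenode_is_formatted` is a hypothetical helper name.

```python
from pathlib import Path


def namenode_is_formatted(name_dir):
    """Return True if name_dir looks like an already formatted namenode directory."""
    # Formatting writes <name_dir>/current/VERSION alongside the fsimage files.
    return (Path(name_dir) / "current" / "VERSION").is_file()


if __name__ == "__main__":
    # Path taken from the dfs.namenode.name.dir setting used in this post.
    name_dir = "c:/hadoop/data/dfs/namenode"
    if namenode_is_formatted(name_dir):
        print("Already formatted; skip 'hdfs namenode -format'.")
    else:
        print("Not formatted yet; run 'hdfs namenode -format' once.")
```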

7 Start HDFS (Namenode and Datanode)

Command Prompt

    C:\Users\abhijitg>cd c:\hadoop\sbin
    c:\hadoop\sbin>start-dfs

Two separate Command Prompt windows will be opened automatically to run Namenode and Datanode.

8 Start MapReduce aka YARN (Resource Manager and Node Manager)

Command Prompt

    C:\Users\abhijitg>cd c:\hadoop\sbin
    c:\hadoop\sbin>start-yarn
    starting yarn daemons

Similarly, two separate Command Prompt windows will be opened automatically to run Resource Manager and Node Manager.
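Besides watching the four Command Prompt windows, the JDK's `jps` tool lists running Java processes as "pid name" pairs, which gives a quick way to confirm that all daemons are up. The Python sketch below parses such output; the helper name `missing_daemons` is hypothetical, and the sample text is made up for illustration rather than captured from a real run.

```python
# Daemons started by start-dfs and start-yarn in this post.
EXPECTED = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def missing_daemons(jps_output):
    """Return the expected daemon names that do not appear in jps output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            # Each jps line is "<pid> <main class name>".
            running.add(parts[1])
    return sorted(EXPECTED - running)


# Illustrative sample only: HDFS is up, YARN has not been started yet.
sample = "4120 NameNode\n5332 DataNode\n6010 Jps\n"
print(missing_daemons(sample))
# ['NodeManager', 'ResourceManager']
```

With a JDK on the Path, the real output could be obtained with `subprocess.run(["jps"], capture_output=True, text=True).stdout` and passed to the same function.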

9 Verify Installation

If everything goes well, you will be able to open the Resource Manager at http://localhost:8088 (its default web port), the Node Manager at http://localhost:8042, and the Namenode at http://localhost:50070.

Node Manager: http://localhost:8042/

Namenode: http://localhost:50070
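The same check can be scripted by testing whether the web ports accept connections. This is a minimal Python sketch using only the standard library; `port_is_open` and `check_web_uis` are hypothetical helper names, and the ports are the two listed above.

```python
import socket

# Web UI ports listed in this post.
WEB_UIS = {"Node Manager": 8042, "Namenode": 50070}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_web_uis(host="localhost"):
    """Map each web UI name to whether its port currently accepts connections."""
    return {name: port_is_open(host, port) for name, port in WEB_UIS.items()}
```

Calling `check_web_uis()` after the daemons have started should report True for both entries; an open port only shows that something is listening, so opening the page in a browser remains the real confirmation.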

10 Run wordcount MapReduce job

Follow the post Run Hadoop wordcount MapReduce Example on Windows

11 Stop HDFS & MapReduce

Command Prompt

    C:\Users\abhijitg>cd c:\hadoop\sbin
    c:\hadoop\sbin>stop-dfs
    SUCCESS: Sent termination signal to the process with PID 876.
    SUCCESS: Sent termination signal to the process with PID 3848.

    c:\hadoop\sbin>stop-yarn
    stopping yarn daemons
    SUCCESS: Sent termination signal to the process with PID 5920.
    SUCCESS: Sent termination signal to the process with PID 7612.
    INFO: No tasks running with the specified criteria.

Reposted from: https://my.oschina.net/u/2285247/blog/393713
