K-Fold Cross Validation

2019独角兽企业重金招聘Python工程师标准>>>

1: K Fold Cross Validation

In the previous mission, we learned about cross validation, a technique for testing a machine learning model's accuracy on new data that the model wasn't trained on. Specifically, we focused on the holdout validation technique, which involved:

- splitting the full dataset into 2 partitions:

- a training set and

- a test set

- training the model on the training set,

- using the trained model to predict labels on the test set,

- computing an error metric (e.g. simple accuracy) to understand the model's accuracy.

Holdout validation is actually a specific example of a larger class of validation techniques called k-fold cross-validation. K-fold cross-validation works by:

- splitting the full dataset into

kequal length partitions,- selecting

k-1partitions as the training set and - selecting the remaining partition as the test set

- selecting

- training the model on the training set,

- using the trained model to predict labels on the test set,

- computing an error metric (e.g. simple accuracy) and setting aside the value for later,

- repeating all of the above steps

k-1times, until each partition has been used as the test set for an iteration, - calculating the mean of the

kerror values.

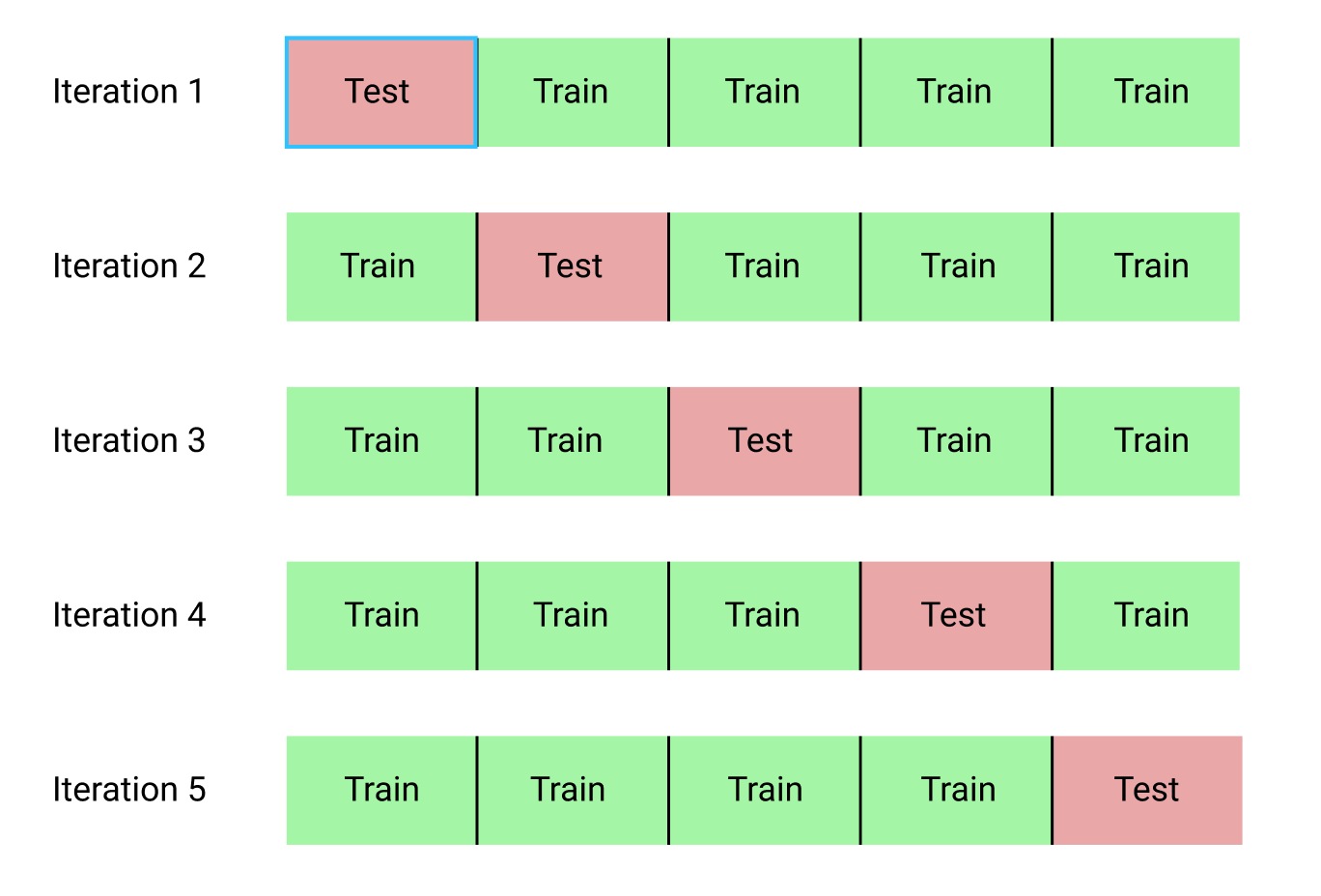

Using 5 or 10 folds is common for k-fold cross-validation. Here's a diagram describing each iteration of 5-fold cross validation:

Since you're training k models, the more number of folds you use the longer it takes. When working with large datasets, often only a few number of folds are used because of the time and cost it takes, with the tradeoff that having more training examples helps improve the accuracy even with less folds.

2: Partititioning The Data

To explore k-fold cross-validation, we'll continue to work with the dataset on graduate admissions. Recall that this dataset contains data on 644 applications with the following columns:

gre- applicant's store on the Graduate Record Exam, a generalized test for prospective graduate students.- Score ranges from 200 to 800.

gpa- college grade point average.- Continuous between 0.0 and 4.0.

admit- binary value- Binary value, 0 or 1, where 1 means the applicant was admitted to the program and 0 means the applicant was rejected.

To save you time, we've already imported the Pandas library, read in admissions.csv into a Dataframe, renamed the admit column toactual_label, and randomized the ordering of the rows.

Now, partition the dataset into 5 folds.

Instructions

Partition the dataset into 5 folds and store each row's fold in a new integer column named fold:

- Fold

1: rows from index0to128, including both of those rows. - Fold

2: rows from index129to257, including both of those rows. - Fold

3: rows from index258to386, including both of those rows. - Fold

4: rows from index387to514, including both of those rows. - Fold

5: rows from index515to644, including both of those rows.

Display the first 5 rows and the last 5 rows of the Dataframe to confirm.

import pandas as pd

admissions = pd.read_csv("admissions.csv")

admissions["actual_label"] = admissions["admit"]

admissions = admissions.drop("admit", axis=1)

shuffled_index = np.random.permutation(admissions.index)

shuffled_admissions = admissions.loc[shuffled_index]

admissions = shuffled_admissions.reset_index()

admissions.ix[0:128,"fold"]=1

admissions.ix[129:257,"fold"]=2

admissions.ix[258:386,"fold"]=3

admissions.ix[387:514,"fold"]=4

admissions.ix[515:644,"fold"]=5

admissions["fold"]=admissions["fold"].astype("int")

print(admissions.head())

print(admissions.tail())

3: First Iteration

In the first iteration, let's assign fold 1 as the test set and folds 2 to 5 as the training set. Then, train the model and use it to predict labels for the test set.

Instructions

- Train a logistic regression model using the

gpacolumn as the sole feature from folds2to5as the training set.c - Use the model to make predictions on the test set and assign the predicted labels to

labels. - Calculate the accuracy by comparing the predicted labels with the actual labels from the

actual_labelcolumn on the test set. - Assign the accuracy value to

iteration_one_accuracy.

from sklearn.linear_model import LogisticRegression

model=LogisticRegression()

train_iteration_one=admissions[admissions["fold"]!=1]

test_iteration_one=admissions[admissions["fold"]==1]

model.fit(train_iteration_one[["gpa"]],train_iteration_one[["actual_label"]])

labels=model.predict(test_iteration_one[["gpa"]])

test_iteration_one["prediction_label"]=labels

matches=test_iteration_one["prediction_label"]==test_iteration_one["actual_label"]

correct_predictions=test_iteration_one[matches]

iteration_one_accuracy=len(correct_predictions)/len(test_iteration_one)

print(iteration_one_accuracy)

4: Function For Training Models

From the first iteration, we achieved an accuracy score of 60.5% accuracy. Let's now run through the rest of the iterations to see how the accuracy changes after each iteration and to compute the mean accuracy.

To make the iteration process easier, wrap the code you in the previous screen in a function.

Instructions

- Write a function named

train_and_testthat takes in a Dataframe and a list of fold id values (1to5in our case) and returns a list of accuracy values, e.g.:

[0.5, 0.5, 0.5, 0.5, 0.5]

- Use the

train_and_testfunction to return the list of accuracy values for theadmissionsDataframe and assign toaccuracies. e.g.:

accuracies = train_and_test(admissions, [1,2,3,4,5])

- Compute the average accuracy and assign to

average_accuracy. average_accuracyshould be a float value whileaccuraciesshould be a list of float values (one float value per iteration).- Use the variable inspector or the

printfunction to display the values foraccuraciesandaverage_accuracy.

# Use np.mean to calculate the mean.

import numpy as np

fold_ids = [1,2,3,4,5]

def train_and_test(df, folds):

fold_accuracies = []

for fold in folds:

model = LogisticRegression()

train = admissions[admissions["fold"] != fold]

test = admissions[admissions["fold"] == fold]

model.fit(train[["gpa"]], train["actual_label"])

model.fit(train[["gpa"] = model.predict(test[["gpa"]])

matches = test["predicted_label"] == test["actual_label"]

correct_predictions = test[matches]

fold_accuracies.append(len(correct_predictions) / len(test))

return(fold_accuracies)

accuracies = train_and_test(admissions, fold_ids)

print(accuracies)

average_accuracy = np.mean(accuracies)

print(average_accuracy)

5: Sklearn

The average accuracy value was 64.8%, compared to an accuracy value of 63.6% using holdout validation from the last mission. In many cases, the resulting accuracy values don't differ much between a simpler, less time-intensive method like holdout validation and a more robust but more time-intensive method like k-fold cross-validation. As you use these and other cross validation techniques more often, you should get a better sense of these tradeoffs and when to use which validation technique.

In addition, the computed accuracy values for each fold stayed within 61% and 63%, which is a healthy sign. Wild variations in the accuracy values between folds is usually indicative of using too many folds (k value). By implementing your own k-fold cross-validation function, you hopefully acquired a good understanding of the inner workings of the technique.

When working in a production environment however, you should use scikit-learn. Scikit-learn has a few different tools that make performing cross validation easy. Similar to having to instantiate a LinearRegression or LogisticRegression object before you can train one of those models, you need to instantiate a KFold class before you can perform k-fold cross-validation:

kf = KFold(n, n_folds, shuffle=False, random_state=None)

where:

nis the number of observations in the dataset,n_foldsis the number of folds you want to use,shuffleis used to toggle shuffling(切换洗牌) of the ordering of the observations in the dataset,random_stateis used to specify a seed value(种子值) ifshuffleis set toTrue.

You'll notice here that only the first parameter depends on the dataset at all. This is because the KFold class returns an iterator object but won't actually handle the training and testing of models. If we're primarily only interested in accuracy and error metrics for each fold, we can use the KFold class in conjunction with the cross_val_score function, which will handle training and testing of the models in each fold.

Here are the relevant parameters for the cross_val_score function:

cross_val_score(estimator, X, Y, scoring=None, cv=None)

where:

estimatoris a sklearn model that implements thefitmethod (e.g. instance of LinearRegression or LogisticRegression),Xis the list or 2D array containing the features you want to train on,yis a list containing the values you want to predict (target column),scoringis a string describing the scoring criteria (list of accepted values here).cvdescribes the number of folds. Here are some examples of accepted values:- an instance of the

KFoldclass, - an integer representing the number of folds.

- an instance of the

Depending on the scoring criteria you specify, either a single value is returned (e.g. average_precision) or an array of values (e.g.accuracy), one value for each fold.

Here's the general workflow for performing k-fold cross-validation using the classes we just described:

- instantiate the model class you want to fit (e.g. LogisticRegression),

- instantiate the

KFoldclass and using the parameters to specify the k-fold cross-validation attributes you want, - use the

cross_val_scorefunction to return the scoring metric you're interested in.

Instructions

Create a new instance of the

KFoldclass with the following properties:nset to length ofadmissions,- 5 folds,

- shuffle set to

True, - random seed set to

8(so we can answer check using the same seed), - assigned to the variable

kf.

Create a new instance of the

LogisticRegressionclass and assign tolr.Use the

cross_val_scorefunction to perform k-fold cross-validation:- using the LogisticRegression instance

lr, - using the

gpacolumn for training, - using the

actual_labelcolumn as the target column, - returning an array of accuracy values (one value for each fold).

- using the LogisticRegression instance

Assign the resulting array of accuracy values to

accuracies, compute the average accuracy, and assign the average toaverage_accuracy.Use the variable inspector or the

printfunction to display the values foraccuraciesandaverage_accuracy.

from sklearn.cross_validation import KFold

from sklearn.cross_validation import cross_val_score

admissions = pd.read_csv("admissions.csv")

admissions["actual_label"] = admissions["admit"]

admissions = admissions.drop("admit", axis=1)

kf = KFold(len(admissions),5,shuffle=True, random_state=8)

lr=LogisticRegression()

accuracies=cross_val_score(lr, admissions[["gpa"]], admissions["actual_label"], scoring="accuracy", cv=kf)

average_accuracy=sum(accuracies)/len(accuracies)

print(accuracies)

print(average_accuracy)

6: Interpretation

Using 5-fold cross-validation, we achieved an average accuracy score of 64.4%, which closely matches the 63.6% accuracy score we achieved using holdout validation. When working with simple univariate models, often holdout validation is more than enough and the similar accuracy scores confirm this. When you're using multiple features to train a model (multivariate models), performing k-fold cross-validation can give you a better sense of the accuracy you should expect when you use the model on data it wasn't trained on.

7: Next Steps

In this mission, we explored a more robust cross validation technique called k-fold cross-validation. Cross-validation helps us understand a model's generalizability and reduce overfitting. In the next mission, we'll explore some more specific overfitting techniques.

转载于:https://my.oschina.net/Bettyty/blog/751627

K-Fold Cross Validation相关推荐

- 机器学习代码实战——K折交叉验证(K Fold Cross Validation)

文章目录 1.实验目的 2.导入数据和必要模块 3.比较不同模型预测准确率 3.1.逻辑回归 3.2.决策树 3.3.支持向量机 3.4.随机森林 1.实验目的 使用sklearn库中的鸢尾花数据集, ...

- 交叉验证 cross validation 与 K-fold Cross Validation K折叠验证

交叉验证,cross validation是机器学习中非常常见的验证模型鲁棒性的方法.其最主要原理是将数据集的一部分分离出来作为验证集,剩余的用于模型的训练,称为训练集.模型通过训练集来最优化其内部参 ...

- 交叉验证(cross validation)是什么?K折交叉验证(k-fold crossValidation)是什么?

交叉验证(cross validation)是什么?K折交叉验证(k-fold crossValidation)是什么? 交叉验证(cross validation)是什么? 交叉验证是一种模型的验 ...

- 机器学习--K折交叉验证(K-fold cross validation)

K 折交叉验证(K-flod cross validation) 当样本数据不充足时,为了选择更好的模型,可以采用交叉验证方法. 基本思想:把给定的数据进行划分,将划分得到的数据集组合为训练集与测试集 ...

- matlab 交叉验证 代码,交叉验证(Cross Validation)方法思想简介

本帖最后由 azure_sky 于 2014-1-17 00:30 编辑 2).K-fold Cross Validation(记为K-CV) 将原始数据分成K组(一般是均分),将每个子集数据分别做一 ...

- 深度学习:交叉验证(Cross Validation)

首先,交叉验证的目的是为了让被评估的模型达到最优的泛化性能,找到使得模型泛化性能最优的超参值.在全部训练集上重新训练模型,并使用独立测试集对模型性能做出最终评价. 目前在一些论文里倒是没有特别强调这样 ...

- 交叉验证(Cross Validation)最详解

1.OverFitting 在模型训练过程中,过拟合overfitting是非常常见的现象.所谓的overfitting,就是在训练集上表现很好,但是测试集上表现很差.为了减少过拟合,提高模型的泛化能 ...

- 十折交叉验证10-fold cross validation, 数据集划分 训练集 验证集 测试集

机器学习 数据挖掘 数据集划分 训练集 验证集 测试集 Q:如何将数据集划分为测试数据集和训练数据集? A:three ways: 1.像sklearn一样,提供一个将数据集切分成训练集和测试集的函数 ...

- 通过交叉验证(Cross Validation)KFold绘制ROC曲线并选出最优模型进行模型评估、测试、包含分类指标、校准曲线、混淆矩阵等

通过交叉验证(Cross Validation,CV)KFold绘制ROC曲线并选出最优模型进行模型评估.测试.包含分类指标.校准曲线.混淆矩阵等 Cross Validation cross val ...

最新文章

- Android面试真题解析火爆全网,薪资翻倍

- Android 使用RadioButton+Fragment构建Tab

- BZOJ4573:[ZJOI2016]大森林——题解

- linux线程间同步(1)读写锁

- 如何将彩色网页内容变成灰白的

- java日期加一天_Java 关于日期加一天(日期往后多一天)

- [LeetCode][easy]Reformat The String

- [转]《博客园精华集》ASP.NET分册第2论筛选结果文章列表

- 游戏数据库 TcaplusDB

- Pandoc安装与使用总结

- 图片显示上下有空白的解决办法

- 虚拟服务器鼠标左键被锁了,鼠标在网页里左键被锁怎么办

- 阐明量子力学到底为何物?

- Labview 编写TCP/IP 客户端断线重连机制程序,亲测可用

- 74LVC4245的作用及各管脚的定义

- 计算机禁用防病毒程序,win10系统windows Defender开启失败,提示“病毒和威胁防护”?可修改防病毒程序解决...

- python 角度判断_python的turtle也能仿抖音网红文字时钟的代码及分析

- java安全级别设置_怎么调整java安全级别

- c226打印机驱动安装_打印机驱动怎么安装?国产操作系统安装打印驱动方法图文步骤详解...

- 【活动回顾】BSV区块链协会参加「We Are Developers」世界大会

热门文章

- linux翻转字符串

- 【Mac】Mac键盘实现Home, End, Page UP, Page DOWN

- .Net资源文件全球化

- [转载]Informix平安特征庇护数据的详细方法

- 你是我心中永远抹不掉的痛

- ImportError: No module named cv2问题的解决方法(修改python默认版本)

- java获取当月1号 的时间chuo_java获取时间戳的方法

- 有没有安卓4.0的java模拟器_电脑端安装Android4.0模拟器使用教程

- wordpress 字符串翻译日期_WordPress强大搜索功能如何实现?安装Ivory Search插件

- java基础之 反射_Java基础之反射原理与用法详解