自然语言12_Tokenizing Words and Sentences with NLTK

sklearn实战-乳腺癌细胞数据挖掘(博主亲自录制视频教程)

https://study.163.com/course/introduction.htm?courseId=1005269003&utm_campaign=commission&utm_source=cp-400000000398149&utm_medium=share

https://www.pythonprogramming.net/tokenizing-words-sentences-nltk-tutorial/

# -*- coding: utf-8 -*-

"""

Created on Sun Nov 13 09:14:13 2016@author: daxiong

"""from nltk.tokenize import sent_tokenize,word_tokenizeexample_text="Five score years ago, a great American, in whose symbolic shadow we stand today, signed the Emancipation Proclamation. This momentous decree came as a great beacon light of hope to millions of Negro slaves who had been seared in the flames of withering injustice. It came as a joyous daybreak to end the long night of bad captivity."list_sentences=sent_tokenize(example_text)list_words=word_tokenize(example_text)

代码测试

Tokenizing Words and Sentences with NLTK

Welcome to a Natural Language Processing tutorial series, using the Natural Language Toolkit, or NLTK, module with Python.

The NLTK module is a massive tool kit, aimed at helping you with the entire Natural Language Processing (NLP) methodology. NLTK will aid you with everything from splitting sentences from paragraphs, splitting up words, recognizing the part of speech of those words, highlighting the main subjects, and then even with helping your machine to understand what the text is all about. In this series, we're going to tackle the field of opinion mining, or sentiment analysis.

In our path to learning how to do sentiment analysis with NLTK, we're going to learn the following:

- Tokenizing - Splitting sentences and words from the body of text.

- Part of Speech tagging

- Machine Learning with the Naive Bayes classifier

- How to tie in Scikit-learn (sklearn) with NLTK

- Training classifiers with datasets

- Performing live, streaming, sentiment analysis with Twitter.

- ...and much more.

In order to get started, you are going to need the NLTK module, as well as Python.

If you do not have Python yet, go to Python.org and download the latest version of Python if you are on Windows. If you are on Mac or Linux, you should be able to run an apt-get install python3

Next, you're going to need NLTK 3. The easiest method to installing the NLTK module is going to be with pip.

For all users, that is done by opening up cmd.exe, bash, or whatever shell you use and typing:

pip install nltk

Next, we need to install some of the components for NLTK. Open python via whatever means you normally do, and type:

import nltknltk.download()

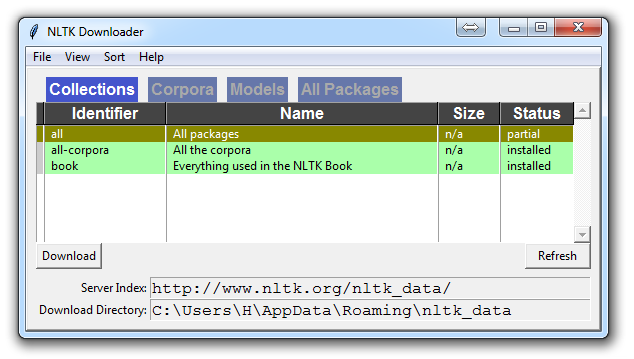

Unless you are operating headless, a GUI will pop up like this, only probably with red instead of green:

Choose to download "all" for all packages, and then click 'download.' This will give you all of the tokenizers, chunkers, other algorithms, and all of the corpora. If space is an issue, you can elect to selectively download everything manually. The NLTK module will take up about 7MB, and the entire nltk_data directory will take up about 1.8GB, which includes your chunkers, parsers, and the corpora.

If you are operating headless, like on a VPS, you can install everything by running Python and doing:

import nltk

nltk.download()

d (for download)

all (for download everything)

That will download everything for you headlessly.

Now that you have all the things that you need, let's knock out some quick vocabulary:

- Corpus - Body of text, singular. Corpora is the plural of this. Example: A collection of medical journals.

- Lexicon - Words and their meanings. Example: English dictionary. Consider, however, that various fields will have different lexicons. For example: To a financial investor, the first meaning for the word "Bull" is someone who is confident about the market, as compared to the common English lexicon, where the first meaning for the word "Bull" is an animal. As such, there is a special lexicon for financial investors, doctors, children, mechanics, and so on.

- Token - Each "entity" that is a part of whatever was split up based on rules. For examples, each word is a token when a sentence is "tokenized" into words. Each sentence can also be a token, if you tokenized the sentences out of a paragraph.

These are the words you will most commonly hear upon entering the Natural Language Processing (NLP) space, but there are many more that we will be covering in time. With that, let's show an example of how one might actually tokenize something into tokens with the NLTK module.

from nltk.tokenize import sent_tokenize, word_tokenize EXAMPLE_TEXT = "Hello Mr. Smith, how are you doing today? The weather is great, and Python is awesome. The sky is pinkish-blue. You shouldn't eat cardboard." print(sent_tokenize(EXAMPLE_TEXT))

At first, you may think tokenizing by things like words or sentences is a rather trivial enterprise. For many sentences it can be. The first step would be likely doing a simple .split('. '), or splitting by period followed by a space. Then maybe you would bring in some regular expressions to split by period, space, and then a capital letter. The problem is that things like Mr. Smith would cause you trouble, and many other things. Splitting by word is also a challenge, especially when considering things like concatenations like we and are to we're. NLTK is going to go ahead and just save you a ton of time with this seemingly simple, yet very complex, operation.

The above code will output the sentences, split up into a list of sentences, which you can do things like iterate through with a for loop.

['Hello Mr. Smith, how are you doing today?', 'The weather is great, and Python is awesome.', 'The sky is pinkish-blue.', "You shouldn't eat cardboard."]

So there, we have created tokens, which are sentences. Let's tokenize by word instead this time:

print(word_tokenize(EXAMPLE_TEXT))

Now our output is: ['Hello', 'Mr.', 'Smith', ',', 'how', 'are', 'you', 'doing', 'today', '?', 'The', 'weather', 'is', 'great', ',', 'and', 'Python', 'is', 'awesome', '.', 'The', 'sky', 'is', 'pinkish-blue', '.', 'You', 'should', "n't", 'eat', 'cardboard', '.']

There are a few things to note here. First, notice that punctuation is treated as a separate token. Also, notice the separation of the word "shouldn't" into "should" and "n't." Finally, notice that "pinkish-blue" is indeed treated like the "one word" it was meant to be turned into. Pretty cool!

Now, looking at these tokenized words, we have to begin thinking about what our next step might be. We start to ponder about how might we derive meaning by looking at these words. We can clearly think of ways to put value to many words, but we also see a few words that are basically worthless. These are a form of "stop words," which we can also handle for. That is what we're going to be talking about in the next tutorial.

python风控评分卡建模和风控常识

https://study.163.com/course/introduction.htm?courseId=1005214003&utm_campaign=commission&utm_source=cp-400000000398149&utm_medium=share

转载于:https://www.cnblogs.com/webRobot/p/6079892.html

自然语言12_Tokenizing Words and Sentences with NLTK相关推荐

- python和nltk自然语言处理 pdf_NLTK基础教程:用NLTK和Python库构建机器学习应用 完整版pdf...

本书主要介绍如何通过NLTK库与一些Python库的结合从而实现复杂的NLP任务和机器学习应用.全书共分为10章.第1章对NLP进行了简单介绍.第2章.第3章和第4章主要介绍一些通用的预处理技术.专属 ...

- 自然语言15_Part of Speech Tagging with NLTK

sklearn实战-乳腺癌细胞数据挖掘(博主亲自录制视频教程) https://study.163.com/course/introduction.htm?courseId=1005269003&am ...

- python自然语言处理 分词_Python 自然语言处理(基于jieba分词和NLTK)

Python 自然语言处理(基于jieba分词和NLTK) 发布时间:2018-05-11 11:39, 浏览次数:1038 , 标签: Python jieba NLTK ----------欢迎加 ...

- python自然语言处理工具NLTK各个包的意思和作用总结

[转]http://www.myexception.cn/perl-python/464414.html [原]Python NLP实战之一:环境准备 最近正在学习Python,看了几本关于Pytho ...

- 自然语言处理综述_自然语言处理

自然语言处理综述 Aren't we all initially got surprised when smart devices understood what we were telling th ...

- NLTK01 《NLTK基础教程--用NLTK和Python库构建机器学习应用》

01 关于NLTK的认知 很多介绍NLP的,都会提到NLTK库.还以为NLTK是多牛逼的必需品.看了之后,感觉NLTK对实际项目,作用不大.很多内容都是从语义.语法方面解决NLP问题的.感觉不太靠谱. ...

- Py之nltk:nltk包的简介、安装、使用方法、代码实现之详细攻略

Py之nltk:nltk包的简介.安装.使用方法.代码实现之详细攻略 目录 nltk包的简介 nltk包的安装 nltk包的使用方法 nltk包的代码实现 nltk包的简介 NLTK is a lea ...

- NLTK学习之一:简单文本分析

nltk的全称是natural language toolkit,是一套基于python的自然语言处理工具集. 1 NLTK的安装 nltk的安装十分便捷,只需要pip就可以. pip install ...

- NLTK(1.2)NLTK简介

文章目录 NLTK库简介 NLTK库重要模块及功能 安装NLTK库 NLTK中的语料库 英文文本语料库 标注文本语料库 其他语言的语料库 文本语料库常见结构 NLTK 中定义的基本语料库函数 加载自己 ...

最新文章

- Matplotlib可视化散点图、配置X轴为对数坐标、并使用线条(line)连接散点图中的数据点(Simple Line Plot with Data points in Matplotlib)

- 在python中排序元组

- was连接oracle rac集群,Oracle集群(RAC)及 jdbc 联接双机数据库

- PYTHON如何在内存中生成ZIP文件

- Spark 下操作 HBase

- 什么是自然语言处理,它如何工作?

- [毕业生的商业软件开发之路]C#语法基础结构

- k8s ReplicaSet

- MyEclipse的破解

- CocosCreator休闲游戏发布到字节跳动平台

- element-ui 固定弹窗底部的按钮

- linux下编写脚本文件 .sh

- IBM Tivoli Management Framework默认设置漏洞

- 算法:十六进制最大数

- 从“游击队”到“正规军”:虾神成长史

- 混合模式程序集是针对“v1.1.4322”版的执行时生成的,在没有配置其它信息的情况下,无法在 4.0 执行时中载入该程序集。...

- iOS 打开AppStore指定app下载页

- iOS应用跳转(包括iPhone原有应用跳转和第三方应用跳转)

- Docker——Tomcat部署

- ps保存html和图像格式不显示,photoshop保存web格式不能显示该怎样解决