Inside the Core ML Model Definition

Core ML

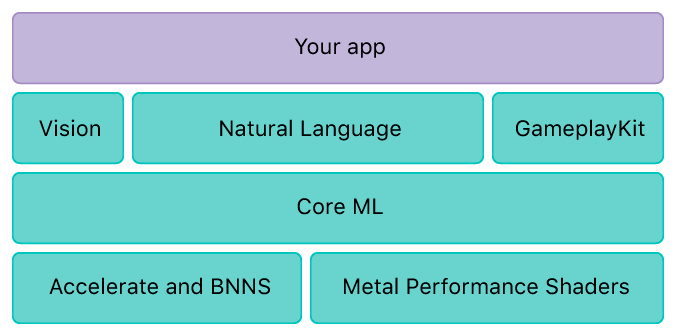

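Core ML is Apple's machine learning framework for iOS and macOS; developers use it to integrate machine learning models into their apps. It is built on top of Accelerate, BNNS, and Metal and is deeply optimized for Apple hardware, which speeds up development considerably and lets developers concentrate on training and tuning their models.

Core ML consumes model files with the .mlmodel extension. Using one is simple: drag the model file into your Xcode project. But what exactly does that file define?

Understanding the model file specification is what lets you see how Core ML works, which kinds of models it supports, how to troubleshoot faster when using it, and how to move models between Core ML and machine learning frameworks on other platforms.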

Core ML Format

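Apple's official specification for Core ML models is the Core ML Model Format Specification (linked under Reference below).

A specification like this makes for dry reading, so let's work through an example to uncover what is inside a .mlmodel file.

AgeNet is an openly available model, from Gil Levi and Tal Hassner's work on age and gender classification, that predicts a person's age group from a face image. It can be downloaded from the link below: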

https://github.com/volvet/InsideCoreMLModel/blob/master/python/AgeNet.mlmodel

A .mlmodel file is essentially a serialized protobuf message. The proto files that define it are at

https://github.com/apple/coremltools/tree/master/mlmodel/format

Use the following command to compile the Core ML proto files into Python code:

protoc --python_out=. *.proto

Then we can start parsing the mlmodel file.

The top-level message is Model, defined as follows:

    message Model {
        int32 specificationVersion = 1;
        ModelDescription description = 2;

        // start at 200 here
        // model specific parameters:
        oneof Type {
            // pipeline starts at 200
            PipelineClassifier pipelineClassifier = 200;
            PipelineRegressor pipelineRegressor = 201;
            Pipeline pipeline = 202;

            // regressors start at 300
            GLMRegressor glmRegressor = 300;
            SupportVectorRegressor supportVectorRegressor = 301;
            TreeEnsembleRegressor treeEnsembleRegressor = 302;
            NeuralNetworkRegressor neuralNetworkRegressor = 303;
            BayesianProbitRegressor bayesianProbitRegressor = 304;

            // classifiers start at 400
            GLMClassifier glmClassifier = 400;
            SupportVectorClassifier supportVectorClassifier = 401;
            TreeEnsembleClassifier treeEnsembleClassifier = 402;
            NeuralNetworkClassifier neuralNetworkClassifier = 403;

            // generic models start at 500
            NeuralNetwork neuralNetwork = 500;

            // Custom model
            CustomModel customModel = 555;
            ...
        }
    }
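To make the field numbers in this message concrete, here is a minimal sketch that walks the top-level fields of a serialized Model using only the protobuf wire format, without any generated code. The helper names (`read_varint`, `top_level_fields`, `describe`) and the `SAMPLE` bytes are illustrative assumptions, not part of coremltools; `SAMPLE` is hand-built and is not a real model.

```python
# Field numbers of the `oneof Type` members, copied from the Model message above.
TYPE_NAMES = {
    200: "pipelineClassifier", 201: "pipelineRegressor", 202: "pipeline",
    300: "glmRegressor", 301: "supportVectorRegressor",
    302: "treeEnsembleRegressor", 303: "neuralNetworkRegressor",
    304: "bayesianProbitRegressor",
    400: "glmClassifier", 401: "supportVectorClassifier",
    402: "treeEnsembleClassifier", 403: "neuralNetworkClassifier",
    500: "neuralNetwork", 555: "customModel",
}


def read_varint(buf, pos):
    """Decode one base-128 varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7


def top_level_fields(buf):
    """Yield (field_number, wire_type, value) for each top-level field."""
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x7
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited: sub-message, string, bytes
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        elif wire_type == 1:  # fixed 64-bit
            value = buf[pos:pos + 8]
            pos += 8
        elif wire_type == 5:  # fixed 32-bit
            value = buf[pos:pos + 4]
            pos += 4
        else:
            raise ValueError("unsupported wire type %d" % wire_type)
        yield field_number, wire_type, value


def describe(buf):
    """Hypothetical helper: report the spec version and which model type is set."""
    info = {"specificationVersion": 0, "type": None}
    for number, wire_type, value in top_level_fields(buf):
        if number == 1 and wire_type == 0:
            info["specificationVersion"] = value
        elif number in TYPE_NAMES:
            info["type"] = TYPE_NAMES[number]
    return info


# Hand-built bytes, not a real model: field 1 = 1, empty field 2, empty field 403.
SAMPLE = b"\x08\x01\x12\x00\x9a\x19\x00"
print(describe(SAMPLE))
# prints {'specificationVersion': 1, 'type': 'neuralNetworkClassifier'}
```

Passing the bytes of a real .mlmodel file to `describe` should work the same way, since the sketch skips over the contents of each sub-message rather than decoding them.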

So parsing starts with Model:

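    import Model_pb2  # generated by protoc from Model.proto

    # parseNeuralNetworkClassifier (shown further down) must be defined
    # above this block in the same script.
    if __name__ == '__main__':
        model = Model_pb2.Model()
        with open('AgeNet.mlmodel', 'rb') as f:
            model.ParseFromString(f.read())
        print(model.description)
        print(model.specificationVersion)
        if model.HasField('neuralNetworkClassifier'):
            parseNeuralNetworkClassifier(model.neuralNetworkClassifier)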

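model.description holds the information Xcode shows for the model: its inputs, outputs, and metadata.

model.specificationVersion is the version of the model format; for this example it is 1.

The model in this article is a convolutional neural network classifier, so the code only handles neuralNetworkClassifier.

The code that parses neuralNetworkClassifier is as follows: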

    def parseNeuralNetworkClassifier(neuralNetworkClassifier):
        # Preprocessing applied to image inputs
        for preprocess in neuralNetworkClassifier.preprocessing:
            print(preprocess.featureName)
            if preprocess.HasField('scaler'):
                print(preprocess.scaler)
            if preprocess.HasField('meanImage'):
                print(preprocess.meanImage)
        # Dispatch on whichever member of the layer oneof is set
        for layer in neuralNetworkClassifier.layers:
            print(layer.name, layer.input, layer.output)
            if layer.HasField('convolution'):
                parseConvolution(layer.convolution)
            elif layer.HasField('pooling'):
                parsePooling(layer.pooling)
            elif layer.HasField('activation'):
                parseActivation(layer.activation)
            elif layer.HasField('lrn'):
                parseLRNLayer(layer.lrn)
            elif layer.HasField('flatten'):
                parseFlattenLayer(layer.flatten)
            elif layer.HasField('innerProduct'):
                parseInnerProductLayer(layer.innerProduct)
            elif layer.HasField('softmax'):
                parseSoftmax(layer.softmax)

Preprocessing is parsed first. There are two kinds of preprocessing, scaler and meanImage, defined as follows:

    /// Preprocessing parameters for image inputs.
    message NeuralNetworkPreprocessing {
        string featureName = 1; /// must be equal to the input name to which the preprocessing is applied
        oneof preprocessor {
            NeuralNetworkImageScaler scaler = 10;
            NeuralNetworkMeanImage meanImage = 11;
        }
    }

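scaler defines the factor that input pixel values are multiplied by and the bias added to them; a common use is turning 0~255 integer pixels into 0~1 floating-point values.

meanImage defines a built-in image that is subtracted from the input image before any further processing.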
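The two preprocessing modes amount to simple per-pixel arithmetic. The sketch below illustrates that arithmetic on flat lists of pixel values; the function names and the single `bias` parameter are simplifying assumptions for illustration (the real NeuralNetworkImageScaler message carries separate per-channel bias fields), and this is not how Core ML itself implements the step.

```python
def apply_scaler(pixels, channel_scale=1.0, bias=0.0):
    """Scale-and-offset preprocessing: out = pixel * channel_scale + bias."""
    return [p * channel_scale + bias for p in pixels]


def subtract_mean(pixels, mean_image):
    """Mean-image preprocessing: subtract the stored image element by element."""
    if len(pixels) != len(mean_image):
        raise ValueError("input and mean image must have the same size")
    return [p - m for p, m in zip(pixels, mean_image)]


# Map 0~255 integer pixels into the 0~1 range.
scaled = apply_scaler([0, 51, 255], channel_scale=1.0 / 255.0)

# Subtract a (made-up) mean image from a (made-up) input.
centered = subtract_mean([10, 20, 30], [1, 2, 3])
print(centered)  # prints [9, 18, 27]
```

In the AgeNet output shown later, the scaler prints `channelScale: 1.0`, so the pixel values are passed on unscaled.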

A neural network layer is defined as follows:

    message NeuralNetworkLayer {
        string name = 1; //descriptive name of the layer
        repeated string input = 2;
        repeated string output = 3;
        oneof layer {
            // start at 100 here
            ConvolutionLayerParams convolution = 100;
            PoolingLayerParams pooling = 120;
            ActivationParams activation = 130;
            InnerProductLayerParams innerProduct = 140;
            EmbeddingLayerParams embedding = 150;
            ...
        }
    }
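The `input` and `output` name lists are what wire the layers together: a layer consumes blobs by name and produces new named blobs. As a rough sketch of how that wiring can be checked, the code below takes (name, input, output) tuples for the first few AgeNet layers, copied from the output shown later in this article, and verifies that every input is available before it is used. The `check_wiring` helper is hypothetical, and it assumes the layers are stored in execution order.

```python
# (name, inputs, outputs) for the first AgeNet layers, as printed later in the article.
LAYERS = [
    ("conv1", ["data"], ["conv1_1relu1"]),
    ("relu1", ["conv1_1relu1"], ["conv1"]),
    ("pool1", ["conv1"], ["pool1"]),
    ("norm1", ["pool1"], ["norm1"]),
    ("conv2", ["norm1"], ["conv2_5relu2"]),
]


def check_wiring(layers, model_inputs):
    """Return the set of blob names available after running all layers.

    Raises ValueError if a layer reads a blob that nothing has produced yet.
    Assumes the layers are listed in execution order.
    """
    available = set(model_inputs)
    for name, inputs, outputs in layers:
        missing = [blob for blob in inputs if blob not in available]
        if missing:
            raise ValueError("layer %s reads unknown blobs: %s" % (name, missing))
        available.update(outputs)
    return available


blobs = check_wiring(LAYERS, ["data"])
print("conv2_5relu2" in blobs)  # prints True
```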

Definition of the convolution layer:

    message ConvolutionLayerParams {
        /**
         * The number of kernels.
         * Same as ``C_out`` used in the layer description.
         */
        uint64 outputChannels = 1;

        /**
         * Channel dimension of the kernels.
         * Must be equal to ``inputChannels / nGroups``, if isDeconvolution == False
         * Must be equal to ``inputChannels``, if isDeconvolution == True
         */
        uint64 kernelChannels = 2;

        /**
         * Group convolution, i.e. weight reuse along channel axis.
         * Input and kernels are divided into g groups
         * and convolution / deconvolution is applied within the groups independently.
         * If not set or 0, it is set to the default value 1.
         */
        uint64 nGroups = 10;

        /**
         * Must be length 2 in the order ``[H, W]``.
         * If not set, default value ``[3, 3]`` is used.
         */
        repeated uint64 kernelSize = 20;

        /**
         * Must be length 2 in the order ``[H, W]``.
         * If not set, default value ``[1, 1]`` is used.
         */
        repeated uint64 stride = 30;

        /**
         * Must be length 2 in order ``[H, W]``.
         * If not set, default value ``[1, 1]`` is used.
         * It is ignored if ``isDeconvolution == true``.
         */
        repeated uint64 dilationFactor = 40;

        /**
         * The type of padding.
         */
        oneof ConvolutionPaddingType {
            ValidPadding valid = 50;
            SamePadding same = 51;
        }

        /**
         * Flag to specify whether it is a deconvolution layer.
         */
        bool isDeconvolution = 60;

        /**
         * Flag to specify whether a bias is to be added or not.
         */
        bool hasBias = 70;

        /**
         * Weights associated with this layer.
         * If convolution (``isDeconvolution == false``), weights have the shape
         * ``[outputChannels, kernelChannels, kernelHeight, kernelWidth]``, where kernelChannels == inputChannels / nGroups
         * If deconvolution (``isDeconvolution == true``) weights have the shape
         * ``[kernelChannels, outputChannels / nGroups, kernelHeight, kernelWidth]``, where kernelChannels == inputChannels
         */
        WeightParams weights = 90;
        WeightParams bias = 91; /// Must be of size [outputChannels].

        /**
         * The output shape, which has length 2 ``[H_out, W_out]``.
         * This is used only for deconvolution (``isDeconvolution == true``).
         * If not set, the deconvolution output shape is calculated
         * based on ``ConvolutionPaddingType``.
         */
        repeated uint64 outputShape = 100;
    }
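The comments on `weights` spell out the weight tensor shape for both the convolution and the deconvolution case. As a small illustration, the sketch below turns those two rules into a function and applies it to the conv1 and conv2 parameters of AgeNet that appear in the output later in this article. The function names are hypothetical; the defaults for an unset `nGroups` and `kernelSize` follow the proto comments above.

```python
def conv_weight_shape(output_channels, kernel_channels, kernel_size=(),
                      n_groups=1, is_deconvolution=False):
    """Weight shape as described in the ConvolutionLayerParams comments."""
    n_groups = n_groups or 1            # "If not set or 0 ... default value 1"
    kh, kw = kernel_size or (3, 3)      # "If not set, default value [3, 3]"
    if is_deconvolution:
        return (kernel_channels, output_channels // n_groups, kh, kw)
    return (output_channels, kernel_channels, kh, kw)


def element_count(shape):
    """Number of scalar weights in a tensor of the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


# conv1 of AgeNet: 96 output channels, 3 kernel channels, 7x7 kernel.
conv1_shape = conv_weight_shape(96, 3, (7, 7))
print(conv1_shape, element_count(conv1_shape))  # prints (96, 3, 7, 7) 14112

# conv2 of AgeNet: 256 output channels, 96 kernel channels, 5x5 kernel.
conv2_shape = conv_weight_shape(256, 96, (5, 5))
print(conv2_shape, element_count(conv2_shape))  # prints (256, 96, 5, 5) 614400
```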

The parsing code is as follows:

    def parseConvolution(convolution):
        # Label strings are kept exactly as in the original script so that
        # they match the recorded output below.
        print('\tkernal size: ', convolution.kernelSize)
        # The message has no inputChannels field; for a regular convolution
        # kernelChannels equals inputChannels / nGroups.
        print('\tinput channels: ', convolution.kernelChannels)
        print('\toutput channels: ', convolution.outputChannels)
        print('\tkernel channles: ', convolution.kernelChannels)
        print('\tgroups: ', convolution.nGroups)
        print('\tstride: ', convolution.stride)
        print('\tdilation factor: ', convolution.dilationFactor)
        # Padding type: exactly one member of the oneof is set
        if convolution.HasField('valid'):
            print('\tvalid: ', convolution.valid)
        if convolution.HasField('same'):
            print('\tsame: ', convolution.same)
        print('\tis deconvolution: ', convolution.isDeconvolution)
        print('\thas bias: ', convolution.hasBias)
        # parseWeightParam is defined elsewhere in the script
        if convolution.hasBias:
            parseWeightParam(convolution.bias)
        parseWeightParam(convolution.weights)
        print('\toutput shape: ', convolution.outputShape)

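The pooling, activation, and fully connected (inner product) layers all have detailed definitions in the proto files as well. The parsing code finally prints the following: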

    runfile('/Users/volvetzhang/Projects/InsideCoreMLModel/python/test_coreml_proto.py', wdir='/Users/volvetzhang/Projects/InsideCoreMLModel/python')
    Reloaded modules: Model_pb2, VisionFeaturePrint_pb2, TextClassifier_pb2, DataStructures_pb2, FeatureTypes_pb2, WordTagger_pb2, ArrayFeatureExtractor_pb2, BayesianProbitRegressor_pb2, CategoricalMapping_pb2, CustomModel_pb2, DictVectorizer_pb2, FeatureVectorizer_pb2, GLMRegressor_pb2, GLMClassifier_pb2, Identity_pb2, Imputer_pb2, NeuralNetwork_pb2, Normalizer_pb2, OneHotEncoder_pb2, Scaler_pb2, NonMaximumSuppression_pb2, SVM_pb2, TreeEnsemble_pb2
    input {
      name: "data"
      shortDescription: "An image with a face."
      type {
        imageType {
          width: 227
          height: 227
          colorSpace: RGB
        }
      }
    }
    output {
      name: "prob"
      shortDescription: "The probabilities for each age, for the given input."
      type {
        dictionaryType {
          stringKeyType {
          }
        }
      }
    }
    output {
      name: "classLabel"
      shortDescription: "The most likely age, for the given input."
      type {
        stringType {
        }
      }
    }
    predictedFeatureName: "classLabel"
    predictedProbabilitiesName: "prob"
    metadata {
      shortDescription: "Age Classification using Convolutional Neural Networks"
      author: "Gil Levi and Tal Hassner"
      license: "Unknown"
    }
    1
    channelScale: 1.0
    conv1 ['data'] ['conv1_1relu1']
        kernal size: [7, 7]
        input channels: 3
        output channels: 96
        kernel channles: 3
        groups: 1
        stride: [4, 4]
        dilation factor: [1, 1]
        valid:
        is deconvolution: False
        has bias: True
        weight param
        weight param
        output shape: []
    relu1 ['conv1_1relu1'] ['conv1']
        relu
    pool1 ['conv1'] ['pool1']
        type: 0
        kernel size: [3, 3]
        stride: [2, 2]
        includeLastPixel: paddingAmounts: 0
        paddingAmounts: 0
        avgPoolExcludePadding: False
        globalPooling: False
    norm1 ['pool1'] ['norm1']
        alpha: 9.999999747378752e-05
        beta: 0.75
        local size: 5
        k: 1.0
    conv2 ['norm1'] ['conv2_5relu2']
        kernal size: [5, 5]
        input channels: 96
        output channels: 256
        kernel channles: 96
        groups: 1
        stride: [1, 1]
        dilation factor: [1, 1]
        valid: paddingAmounts {
          borderAmounts {
            startEdgeSize: 2
            endEdgeSize: 2
          }
          borderAmounts {
            startEdgeSize: 2
            endEdgeSize: 2
          }
        }
        is deconvolution: False
        has bias: True
        weight param
        weight param
        output shape: []
    relu2 ['conv2_5relu2'] ['conv2']
        relu
    pool2 ['conv2'] ['pool2']
        type: 0
        kernel size: [3, 3]
        stride: [2, 2]
        includeLastPixel: paddingAmounts: 0
        paddingAmounts: 0
        avgPoolExcludePadding: False
        globalPooling: False
    norm2 ['pool2'] ['norm2']
        alpha: 9.999999747378752e-05
        beta: 0.75
        local size: 5
        k: 1.0
    conv3 ['norm2'] ['conv3_9relu3']
        kernal size: [3, 3]
        input channels: 256
        output channels: 384
        kernel channles: 256
        groups: 1
        stride: [1, 1]
        dilation factor: [1, 1]
        valid: paddingAmounts {
          borderAmounts {
            startEdgeSize: 1
            endEdgeSize: 1
          }
          borderAmounts {
            startEdgeSize: 1
            endEdgeSize: 1
          }
        }
        is deconvolution: False
        has bias: True
        weight param
        weight param
        output shape: []
    relu3 ['conv3_9relu3'] ['conv3']
        relu
    pool5 ['conv3'] ['pool5']
        type: 0
        kernel size: [3, 3]
        stride: [2, 2]
        includeLastPixel: paddingAmounts: 0
        paddingAmounts: 0
        avgPoolExcludePadding: False
        globalPooling: False
    fc6_preflatten ['pool5'] ['pool5_12_flattened']
        mode: 0
    fc6 ['pool5_12_flattened'] ['fc6_13relu6']
        input channels: 18816
        output channels: 512
        has bias: True
        weight param
        weight param
    relu6 ['fc6_13relu6'] ['fc6']
        relu
    fc7_preflatten ['fc6'] ['fc6_15_flattened']
        mode: 0
    fc7 ['fc6_15_flattened'] ['fc7_16relu7']
        input channels: 512
        output channels: 512
        has bias: True
        weight param
        weight param
    relu7 ['fc7_16relu7'] ['fc7']
        relu
    fc8_preflatten ['fc7'] ['fc7_18_flattened']
        mode: 0
    fc8 ['fc7_18_flattened'] ['fc8']
        input channels: 512
        output channels: 8
        has bias: True
        weight param
        weight param
    prob ['fc8'] ['prob']
        softmax

That is the detailed definition of AgeNet.
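As a sanity check on the printed parameters, the spatial size can be traced from the 227x227 input down to the 18816 input channels reported for fc6. The sketch below uses the standard convolution output-size formula and, as an assumption, ceiling rounding for the pooling layers (they print an `includeLastPixel` padding type); the helper names are hypothetical. Under that assumption the trace ends at 7x7 with 384 channels, which reproduces 18816; with floor rounding it would not.

```python
import math


def conv_out(size, kernel, stride, pad=0):
    """Output size of a convolution with symmetric padding `pad` per edge."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out_ceil(size, kernel, stride):
    """Pooling output size with ceiling rounding (assumed for includeLastPixel)."""
    return math.ceil((size - kernel) / stride) + 1


def trace_agenet(size=227):
    """Return the spatial size after each conv/pool layer of AgeNet."""
    sizes = []
    size = conv_out(size, kernel=7, stride=4)          # conv1, valid, no padding
    sizes.append(size)
    size = pool_out_ceil(size, kernel=3, stride=2)     # pool1
    sizes.append(size)
    size = conv_out(size, kernel=5, stride=1, pad=2)   # conv2, padding 2
    sizes.append(size)
    size = pool_out_ceil(size, kernel=3, stride=2)     # pool2
    sizes.append(size)
    size = conv_out(size, kernel=3, stride=1, pad=1)   # conv3, padding 1
    sizes.append(size)
    size = pool_out_ceil(size, kernel=3, stride=2)     # pool5
    sizes.append(size)
    return sizes


sizes = trace_agenet()
print(sizes)                       # prints [56, 28, 28, 14, 14, 7]
print(384 * sizes[-1] * sizes[-1])  # prints 18816
```

The relu and norm (LRN) layers are left out of the trace because they do not change the spatial size.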

The source code for this article is here:

https://github.com/volvet/InsideCoreMLModel

Reference

- https://github.com/apple/coremltools

- https://apple.github.io/coremltools/coremlspecification/index.html

- http://machinethink.net/blog/peek-inside-coreml/

- https://developer.apple.com/documentation/coreml
