python中knn_如何在python中从头开始构建knn

python中knn

k最近邻居 (k-Nearest Neighbors)

k-Nearest Neighbors (KNN) is a supervised machine learning algorithm that can be used for either regression or classification tasks. KNN is non-parametric, which means that the algorithm does not make assumptions about the underlying distributions of the data. This is in contrast to a technique like linear regression, which is parametric, and requires us to find a function that describes the relationship between dependent and independent variables.

k最近邻(KNN)是一种受监督的机器学习算法,可用于回归或分类任务。 KNN是非参数的,这意味着该算法不对数据的基础分布进行假设。 这与参数化的线性回归等技术形成对比,后者是参数化的,要求我们找到一个描述因变量和自变量之间关系的函数。

KNN has the advantage of being quite intuitive to understand. When used for classification, a query point (or test point) is classified based on the k labeled training points that are closest to that query point.

KNN具有非常直观易懂的优点。 当用于分类时,根据最接近该查询点的k个标记训练点对查询点(或测试点)进行分类。

For a simplified example, see the figure below. The left panel shows a 2-d plot of sixteen data points — eight are labeled as green, and eight are labeled as purple. Now, the right panel shows how we would classify a new point (the black cross), using KNN when k=3. We find the three closest points, and count up how many ‘votes’ each color has within those three points. In this case, two of the three points are purple — so, the black cross will be labeled as purple.

有关简化示例,请参见下图。 左面板显示了16个数据点的二维图-八个标记为绿色,八个标记为紫色。 现在,右面板显示了当k = 3时,如何使用KNN对新点(黑色十字)进行分类。 我们找到三个最接近的点,并计算出每种颜色在这三个点内有多少个“票数”。 在这种情况下,三个点中的两个是紫色的-因此,黑色十字将被标记为紫色。

Calculating Distance

计算距离

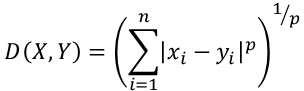

The distance between points is determined by using one of several versions of the Minkowski distance equation. The generalized formula for Minkowski distance can be represented as follows:

点之间的距离是通过使用Minkowski距离方程的几个版本之一确定的。 Minkowski距离的广义公式可以表示为:

where X and Y are data points, n is the number of dimensions, and p is the Minkowski power parameter. When p =1, the distance is known at the Manhattan (or Taxicab) distance, and when p=2 the distance is known as the Euclidean distance. In two dimensions, the Manhattan and Euclidean distances between two points are easy to visualize (see the graph below), however at higher orders of p, the Minkowski distance becomes more abstract.

其中X和Y是数据点, n是维数, p是Minkowski幂参数。 当p = 1时,该距离已知为曼哈顿(或出租车)距离,而当p = 2时,该距离称为欧几里得距离。 在两个维度上,两点之间的曼哈顿距离和欧几里得距离很容易可视化(请参见下图),但是在p的高阶处,明可夫斯基距离变得更加抽象。

Python中的KNN (KNN in Python)

To implement my own version of the KNN classifier in Python, I’ll first want to import a few common libraries to help out.

为了用Python实现我自己的KNN分类器版本,我首先要导入一些常见的库来提供帮助。

# Initial importsimport pandas as pd

import numpy as np

import matplotlib.pyplot as plt加载数据中 (Loading Data)

To test the KNN classifier, I’m going to use the iris data set from sklearn.datasets. The data set has measurements (Sepal Length, Sepal Width, Petal Length, Petal Width) for 150 iris plants, split evenly among three species (0 = setosa, 1 = versicolor, and 2 = virginica). Below, I load the data and store it in a dataframe.

为了测试KNN分类器,我将使用sklearn.datasets中的虹膜数据集。 数据集具有150种鸢尾植物的测量值(头长,萼片宽度,花瓣长度,花瓣宽度),均匀地分为三种(0 =刚毛,1 =杂色和2 =弗吉尼亚)。 在下面,我加载数据并将其存储在数据框中。

# Load iris data and store in dataframefrom sklearn import datasetsiris = datasets.load_iris()df = pd.DataFrame(data=iris.data, columns=iris.feature_names)

df['target'] = iris.target

df.head()

I’ll also separate the data into features (X) and the target variable (y), which is the species label for each plant.

我还将数据分为特征(X)和目标变量(y),目标变量是每种植物的种类标签。

# Separate X and y dataX = df.drop('target', axis=1)

y = df.target建立KNN框架 (Building out the KNN Framework)

Creating a functioning KNN classifier can be broken down into several steps. While KNN includes a bit more nuance than this, here’s my bare-bones to-do list:

创建功能良好的KNN分类器可以分为几个步骤。 尽管KNN包含的细微之处要多于此,但以下是我的基本工作清单:

- Define a function to calculate the distance between two points定义一个函数来计算两点之间的距离

- Use the distance function to get the distance between a test point and all known data points使用距离函数获取测试点与所有已知数据点之间的距离

- Sort distance measurements to find the points closest to the test point (i.e., find the nearest neighbors)对距离测量值进行排序,以找到最接近测试点的点(即,找到最近的邻居)

- Use majority class labels of those closest points to predict the label of the test point使用那些最接近的点的多数类标签来预测测试点的标签

- Repeat steps 1 through 4 until all test data points are classified重复步骤1至4,直到对所有测试数据点进行分类

1.定义一个函数来计算两点之间的距离 (1. Define a function to calculate distance between two points)

First, I define a function called minkowski_distance, that takes an input of two data points (a & b) and a Minkowski power parameter p, and returns the distance between the two points. Note that this function calculates distance exactly like the Minkowski formula I mentioned earlier. By making p an adjustable parameter, I can decide whether I want to calculate Manhattan distance (p=1), Euclidean distance (p=2), or some higher order of the Minkowski distance.

首先,我定义一个名为minkowski_distance的函数,该函数接受两个数据点( a & b )和一个Minkowski幂参数p的输入,并返回两个点之间的距离。 请注意,此函数计算距离的方式与我之前提到的Minkowski公式完全相同。 通过将p设置为可调参数,我可以决定是否要计算曼哈顿距离(p = 1),欧几里得距离(p = 2)或Minkowski距离的更高阶。

# Calculate distance between two pointsdef minkowski_distance(a, b, p=1):# Store the number of dimensionsdim = len(a)# Set initial distance to 0distance = 0# Calculate minkowski distance using parameter pfor d in range(dim):distance += abs(a[d] - b[d])**pdistance = distance**(1/p)return distance# Test the functionminkowski_distance(a=X.iloc[0], b=X.iloc[1], p=1)0.69999999999999932.使用距离功能获取测试点与所有已知数据点之间的距离 (2. Use the distance function to get distance between a test point and all known data points)

For step 2, I simply repeat the minkowski_distance calculation for all labeled points in X and store them in a dataframe.

对于第2步,我只需要对X中所有标记的点重复minkowski_distance计算,并将它们存储在数据框中。

# Define an arbitrary test pointtest_pt = [4.8, 2.7, 2.5, 0.7]# Calculate distance between test_pt and all points in Xdistances = []for i in X.index:distances.append(minkowski_distance(test_pt, X.iloc[i]))df_dists = pd.DataFrame(data=distances, index=X.index, columns=['dist'])

df_dists.head()

3.对距离测量值进行排序以找到最接近测试点的点 (3. Sort distance measurements to find the points closest to the test point)

In step 3, I use the pandas .sort_values() method to sort by distance, and return only the top 5 results.

在第3步中,我使用pandas .sort_values()方法按距离排序,并且仅返回前5个结果。

# Find the 5 nearest neighborsdf_nn = df_dists.sort_values(by=['dist'], axis=0)[:5]

df_nn

4.使用那些最近点的多数类标签来预测测试点的标签 (4. Use majority class labels of those closest points to predict the label of the test point)

For this step, I use collections.Counter to keep track of the labels that coincide with the nearest neighbor points. I then use the .most_common() method to return the most commonly occurring label. Note: if there is a tie between two or more labels for the title of “most common” label, the one that was first encountered by the Counter() object will be the one that gets returned.

对于这一步,我使用collections.Counter来跟踪与最近的邻居点重合的标签。 然后,我使用.most_common()方法返回最常见的标签。 注意:如果两个或两个以上标签之间的关系为“最常见”标签的标题,则Counter()对象首先遇到的标签将是返回的标签。

from collections import Counter# Create counter object to track the labelscounter = Counter(y[df_nn.index])# Get most common label of all the nearest neighborscounter.most_common()[0][0]15.重复步骤1至4,直到对所有测试数据点进行分类 (5. Repeat steps 1 through 4 until all test data points are classified)

In this step, I put the code I’ve already written to work and write a function to classify the data using KNN. First, I perform a train_test_split on the data (75% train, 25% test), and then scale the data using StandardScaler(). Since KNN is distance-based, it is important to make sure that the features are scaled properly before feeding them into the algorithm.

在这一步中,我将已经编写的代码投入使用,并编写了一个使用KNN对数据进行分类的函数。 首先,我对数据执行train_test_split (75%的火车,25%的测试),然后使用StandardScaler()缩放数据。 由于KNN是基于距离的,因此在将特征输入算法之前,确保正确缩放特征很重要。

Additionally, to avoid data leakage, it is good practice to scale the features after the train_test_split has been performed. First, scale the data from the training set only (scaler.fit_transform(X_train)), and then use that information to scale the test set (scaler.tranform(X_test)). This way, I can ensure that no information outside of the training data is used to create the model.

此外,为避免数据泄漏,优良作法是在train_test_split执行之后缩放功能。 首先,仅缩放训练集中的数据 ( scaler.fit_transform(X_train) ),然后使用该信息来缩放测试集( scaler.tranform(X_test) )。 这样,我可以确保没有使用训练数据之外的任何信息来创建模型。

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler# Split the data - 75% train, 25% testX_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25,random_state=1)# Scale the X datascaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)Next, I define a function called knn_predict that takes in all of the training and test data, k, and p, and returns the predictions my KNN classifier makes for the test set (y_hat_test). This function doesn’t really include anything new — it is simply applying what I’ve already worked through above. The function should return a list of label predictions containing only 0’s, 1’s and 2’s.

接下来,我定义一个名为knn_predict的函数,该函数接收所有训练和测试数据k和p ,并返回我的KNN分类器对测试集所做的预测( y_hat_test )。 该功能实际上并没有包含任何新功能-只是应用了我上面已经完成的工作。 该函数应返回仅包含0、1和2的标签预测列表。

def knn_predict(X_train, X_test, y_train, y_test, k, p):# Counter to help with label votingfrom collections import Counter# Make predictions on the test data# Need output of 1 prediction per test data pointy_hat_test = []for test_point in X_test:distances = []for train_point in X_train:distance = minkowski_distance(test_point, train_point, p=p)distances.append(distance)# Store distances in a dataframedf_dists = pd.DataFrame(data=distances, columns=['dist'], index=y_train.index)# Sort distances, and only consider the k closest pointsdf_nn = df_dists.sort_values(by=['dist'], axis=0)[:k]# Create counter object to track the labels of k closest neighborscounter = Counter(y_train[df_nn.index])# Get most common label of all the nearest neighborsprediction = counter.most_common()[0][0]# Append prediction to output listy_hat_test.append(prediction)return y_hat_test# Make predictions on test dataset

y_hat_test = knn_predict(X_train, X_test, y_train, y_test, k=5, p=1)print(y_hat_test)[0, 1, 1, 0, 2, 1, 2, 0, 0, 2, 1, 0, 2, 1, 1, 0, 1, 1, 0, 0, 1, 1, 2, 0, 2, 1, 0, 0, 1, 2, 1, 2, 1, 2, 2, 0, 1, 0]And there they are! These are the predictions that this home-brewed KNN classifier has made on the test set. Let’s see how well it worked:

在那里! 这些是这个自制的KNN分类器对测试集所做的预测。 让我们看看它的效果如何:

# Get test accuracy scorefrom sklearn.metrics import accuracy_scoreprint(accuracy_score(y_test, y_hat_test))0.9736842105263158Looks like the classifier achieved 97% accuracy on the test set. Not too bad at all! But how do I know if it actually worked correctly? Let’s check the result of sklearn’s KNeighborsClassifier on the same data:

看起来分类器在测试集上达到了97%的准确性。 一点也不差! 但是我怎么知道它是否真的正常工作呢? 让我们在相同数据上检查sklearn的KNeighborsClassifier的结果:

# Testing to see results from sklearn.neighbors.KNeighborsClassifierfrom sklearn.neighbors import KNeighborsClassifierclf = KNeighborsClassifier(n_neighbors=5, p=1)

clf.fit(X_train, y_train)

y_pred_test = clf.predict(X_test)print(f"Sklearn KNN Accuracy: {accuracy_score(y_test, y_pred_test)}")Sklearn KNN Accuracy: 0.9736842105263158Nice! sklearn’s implementation of the KNN classifier gives us the exact same accuracy score.

真好! sklearn对KNN分类器的实现为我们提供了完全相同的准确性得分。

探索变化k的影响 (Exploring the effect of varying k)

My KNN classifier performed quite well with the selected value of k = 5. KNN doesn’t have as many tune-able parameters as other algorithms like Decision Trees or Random Forests, but k happens to be one of them. Let’s see how the classification accuracy changes when I vary k:

我的KNN分类器在选定的k = 5时表现很好。KNN没有像决策树或随机森林之类的其他算法那么多的可调参数,但k恰好是其中之一。 让我们看看改变k时分类精度如何变化:

# Obtain accuracy score varying k from 1 to 99accuracies = []for k in range(1,100):y_hat_test = knn_predict(X_train, X_test, y_train, y_test, k, p=1)accuracies.append(accuracy_score(y_test, y_hat_test))# Plot the results fig, ax = plt.subplots(figsize=(8,6))

ax.plot(range(1,100), accuracies)

ax.set_xlabel('# of Nearest Neighbors (k)')

ax.set_ylabel('Accuracy (%)');

In this case, using nearly any k value less than 20 results in great (>95%) classification accuracy on the test set. However, when k becomes greater than about 60, accuracy really starts to drop off. This makes sense, because the data set only has 150 observations — when k is that high, the classifier is probably considering labeled training data points that are way too far from the test points.

在这种情况下,几乎使用任何小于20的k值,都可以在测试集上实现较高的分类精度(> 95%)。 但是,当k大于约60时,精度实际上开始下降。 这是有道理的,因为数据集只有150个观察值-当k很高时,分类器可能正在考虑与测试点相距太远的标记训练数据点。

每个邻居都有投票权吗? (Every neighbor gets a vote — or do they?)

In writing my own KNN classifier, I chose to overlook one clear hyperparameter tuning opportunity: the weight that each of the k nearest points has in classifying a point. In sklearn’s KNeighborsClassifier, this is the weights parameter, and it can be set to ‘uniform’, ‘distance’, or another user-defined function.

在编写自己的KNN分类器时,我选择忽略了一个明确的超参数调整机会: k个最近点中的每一个在对点进行分类时所具有的权重。 在sklearn的KNeighborsClassifier中 ,这是weights参数,可以将其设置为'uniform' , 'distance'或其他用户定义的函数。

When set to ‘uniform’, each of the k nearest neighbors gets an equal vote in labeling a new point. When set to ‘distance’, the neighbors in closest to the new point are weighted more heavily than the neighbors farther away. There are certainly cases where weighting by ‘distance’ would produce better results, and the only way to find out is through hyperparameter tuning.

当设置为'uniform'时 ,k个最近的邻居中的每一个在标记新点时都会得到平等的投票。 设置为“距离”时 ,最接近新点的邻居的权重要比更远的邻居的权重大。 当然,在某些情况下,按“距离”进行加权会产生更好的结果,唯一的找出方法是通过超参数调整。

最后的想法 (Final Thoughts)

Now, make no mistake — sklearn’s implementation is undoubtedly more efficient and more user-friendly than what I’ve cobbled together here. However, I found it a valuable exercise to work through KNN from ‘scratch’, and it has only solidified my understanding of the algorithm. I hope it did the same for you!

现在,请不要误解-sklearn的实现无疑比我在这里拼凑的实现更加有效和用户友好。 但是,我发现从“从头开始”通过KNN进行工作是一个有价值的练习,并且它仅巩固了我对算法的理解。 希望对您也一样!

翻译自: https://towardsdatascience.com/how-to-build-knn-from-scratch-in-python-5e22b8920bd2

python中knn

http://www.taodudu.cc/news/show-995349.html

相关文章:

- tb计算机存储单位_如何节省数TB的云存储

- 数据可视化机器学习工具在线_为什么您不能跳过学习数据可视化

- python中nlp的库_用于nlp的python中的网站数据清理

- 怎么看另一个电脑端口是否通_谁一个人睡觉另一个看看夫妻的睡眠习惯

- tableau 自定义省份_在Tableau中使用自定义图像映射

- 熊猫烧香分析报告_熊猫分析进行最佳探索性数据分析

- 白裤子变粉裤子怎么办_使用裤子构建构建数据科学的monorepo

- 青年报告_了解青年的情绪

- map(平均平均精度_客户的平均平均精度

- 鲜活数据数据可视化指南_数据可视化实用指南

- 图像特征 可视化_使用卫星图像可视化建筑区域

- 海量数据寻找最频繁的数据_在数据中寻找什么

- 可视化 nlp_使用nlp可视化尤利西斯

- python的power bi转换基础

- 自定义按钮动态变化_新闻价值的变化定义

- 算法 从 数中选出_算法可以选出胜出的nba幻想选秀吗

- 插入脚注把脚注标注删掉_地狱司机不应该只是英国电影历史数据中的脚注,这说明了为什么...

- 贝叶斯统计 传统统计_统计贝叶斯如何补充常客

- 因为你的电脑安装了即点即用_即你所爱

- 团队管理新思考_需要一个新的空间来思考讨论和行动

- bigquery 教程_bigquery挑战实验室教程从数据中获取见解

- java职业技能了解精通_如何通过精通数字分析来提升职业生涯的发展,第8部分...

- kfc流程管理炸薯条几秒_炸薯条成为数据科学的最后前沿

- bigquery_到Google bigquery的sql查询模板,它将您的报告提升到另一个层次

- 数据科学学习心得_学习数据科学时如何保持动力

- python多项式回归_在python中实现多项式回归

- pd种知道每个数据的类型_每个数据科学家都应该知道的5个概念

- xgboost keras_用catboost lgbm xgboost和keras预测财务交易

- 走出囚徒困境的方法_囚徒困境的一种计算方法

- 平台api对数据收集的影响_收集您的数据不是那么怪异的api

python中knn_如何在python中从头开始构建knn相关推荐

- python pop() ,如何在Python的列表或数组中移除元素

python pop() ,如何在Python的列表或数组中移除元素 在本文中,你将学习如何使用Python内置的 pop() 方法,最后,你将知道如何使用 pop() 从 Python 中的列表中删 ...

- python调用soap_如何在python zeep中调用soap api而不使用wsdl(非wsdl模式)?使用用户和密码身份验证调用位置URL...

我无法在Zeep python客户端中为SOAP API验证用户身份 . 我有两个网址: 1) http://credotrade.stg-tradingcrm.com:8093/mex - 它指定了 ...

- python hadoop streaming_如何在Hadoop中使用Streaming编写MapReduce(转帖)

作者:马士华 发表于:2008-03-05 12:51 最后更新于:2008-03-25 11:18 版权声明:可以任意转载,转载时请务必以超链接形式标明文章原始出处和作者信息. http://www ...

- python多项式回归_如何在Python中实现多项式回归模型

python多项式回归 Let's start with an example. We want to predict the Price of a home based on the Area an ...

- python大括号_如何在python字符串中打印文字大括号字符并在其上使用.format?

如何在python字符串中打印文字大括号字符并在其上使用.format? x = " \{ Hello \} {0} " print x.format(42) 给我:{Hello} ...

- python缓冲区_如何在Python中使用Google的协议缓冲区

python缓冲区 When people who speak different languages get together and talk, they try to use a languag ...

- python使用spark_如何在Python中编写简单代码,并且速度超越Spark?

全文共3482字,预计学习时长7分钟 如今,大家都在Python工具(pandas和Scikit-learn)的简洁性.Spark和Hadoop的可扩展性以及Kubernetes的操作就绪之间做选择. ...

- spyder python 使用_如何在spyder中使用vpython?

我试着用vpython,无论如何,但我失败了...在 首先,我在win8.1上安装了anacondapython2.7.10. 然后,我通过在命令行中输入以下命令来安装Vpython: conda安装 ...

- vscode怎么安装python库_如何在vscode中安装python库的方法步骤

免费资源网 - https://freexyz.cn/ vscode安装python库 1.已经在vscode中装了python并配置好python运行环境. 检查是否正确配置好运行环境,按Windo ...

最新文章

- Linux apt-get install无法定位问题

- 2020 年最全 Python 面试题汇总 (四)

- Python 中的numpy 库

- VMWare的The network bridge on device VMnet0 is not running故障解决

- 你写的代码扩展性高吗?快试试用Spring注入方式来解耦代码!

- javascript 使用drop元素实现拖动(ondragstart、ondrag、 ondragend、ondragenter,ondragover、ondragleave、ondrop )

- hdu 1754 I hate it (线段树)

- 《趣谈网络协议》学习笔记

- adguard和adblock哪个好_这可能是最全的广告屏蔽方案了!

- 文字图片灰度化matlab,采用matlab将图像灰度化的方法

- 手机微信开发上传图片到服务器,微信开发之通过微信接口上传图片到本地服务器...

- halcon之屌炸天的自标定(1)

- 中科大自主招生2018笔试数学之三

- 直流侧电力有源滤波器滤除谐波干扰的原理及方案

- cinder云硬盘type创建

- Geospatial-地理空间

- arcmap给tif添加地理坐标_如何将JPG格式的图片转化为带地理坐标的TIFF格式

- Github 之提交代码

- 计算机与网络安全经历了几个阶段,网络信息安全知识:根据互联网的发展阶段,互联网治理分为三个层面,即结构层面、功能层面、意识层面。确立网络规范属于互联网意识层面的治理。()...

- windows 2008/2012(64位) IIS配置asp程序 500 - 内部服务器错误。您查找的资源存在问题,因而无法显示。