Programming Fairness in Algorithms

“Being good is easy, what is difficult is being just.” ― Victor Hugo

“We need to defend the interests of those whom we’ve never met and never will.” ― Jeffrey D. Sachs

Note: This article is intended for a general audience and tries to elucidate the complicated nature of unfairness in machine learning algorithms. As such, I have tried to explain concepts in an accessible way with minimal use of mathematics, in the hope that everyone can get something out of reading this.

Supervised machine learning algorithms are inherently discriminatory. They are discriminatory in the sense that they use information embedded in the features of data to separate instances into distinct categories; indeed, this is their designated purpose in life. This is reflected in their name: they are often referred to as discriminative algorithms (splitting data into categories), in contrast to generative algorithms (generating data from a given category). When we use supervised machine learning, this “discrimination” helps us sort our data into distinct categories within the data distribution, as illustrated below.

This separation happens whenever we apply a discriminative algorithm to a dataset, whether it is a support vector machine, a parametric regression model (e.g., vanilla linear regression), a non-parametric model (e.g., random forests or boosting), or a neural network. The outcome, however, does not necessarily carry any moral implications. For example, using last week’s weather data to predict tomorrow’s weather has no moral valence attached to it. However, when our dataset contains information that describes people, either directly or indirectly, this can inadvertently result in discrimination on the basis of group affiliation.

Clearly, then, supervised learning is a dual-use technology. It can be used to our benefit, for example for information (e.g., predicting the weather) and protection (e.g., analyzing computer networks to detect attacks and malware). On the other hand, it has the potential to be weaponized to discriminate at essentially any level. This is not to say that the algorithms are evil for doing this. They are merely learning the representations present in the data, and those representations may themselves embed the manifestations of historical injustices, as well as individual biases and proclivities. A common adage in data science is “garbage in, garbage out”, which refers to how heavily models depend on the quality of the data supplied to them. In the context of algorithmic fairness, this can be stated analogously as “bias in, bias out”.

Data Fundamentalism

Some people subscribe to data fundamentalism: the belief that data, through empirical observation, reflects the objective truth of the world.

“with enough data, the numbers speak for themselves.” — Former Wired editor-in-chief Chris Anderson (a data fundamentalist)

Data and data sets are not objective; they are creations of human design. We give numbers their voice, draw inferences from them, and define their meaning through our interpretations. Hidden biases in both the collection and analysis stages present considerable risks, and are as important to the big-data equation as the numbers themselves. — Kate Crawford, principal researcher at Microsoft Research Social Media Collective

Superficially, this seems like a reasonable hypothesis, but Kate Crawford provides a good counterargument in a Harvard Business Review article:

Boston has a problem with potholes, patching approximately 20,000 every year. To help allocate its resources efficiently, the City of Boston released the excellent StreetBump smartphone app, which draws on accelerometer and GPS data to help passively detect potholes, instantly reporting them to the city. While certainly a clever approach, StreetBump has a signal problem. People in lower income groups in the US are less likely to have smartphones, and this is particularly true of older residents, where smartphone penetration can be as low as 16%. For cities like Boston, this means that smartphone data sets are missing inputs from significant parts of the population — often those who have the fewest resources. — Kate Crawford, principal researcher at Microsoft Research

Essentially, the StreetBump app picked up a preponderance of data from wealthy neighborhoods and relatively little from poorer ones. The natural first conclusion might be that the wealthier neighborhoods had more potholes. In reality, there was simply a lack of data from poorer neighborhoods, because their residents were less likely to own smartphones and therefore less likely to have downloaded the StreetBump app. Often, the data missing from our dataset has the biggest impact on our results. This example illustrates a subtle form of discrimination on the basis of income. We should therefore be cautious when drawing conclusions like these from data that may suffer from a ‘signal problem’, which is often characterized as sampling bias.
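
To see how unequal reporting distorts the picture, here is a minimal Python sketch. It assumes two neighborhoods with the same true number of potholes and different smartphone ownership rates. All numbers are hypothetical and not taken from the StreetBump data. Each pothole is assumed to be reported with a single probability equal to the area’s ownership rate.

```python
# Hypothetical numbers for illustration only: both neighborhoods have the
# same true number of potholes, but smartphone ownership differs.
true_potholes = {"wealthier": 100, "poorer": 100}
smartphone_rate = {"wealthier": 0.80, "poorer": 0.30}  # assumed, not from the study


def expected_reports(potholes: int, rate: float) -> float:
    """Expected number of app reports, assuming each pothole is reported
    with one fixed probability per area (the smartphone ownership rate)."""
    return potholes * rate


reports = {area: expected_reports(true_potholes[area], smartphone_rate[area])
           for area in true_potholes}
# Naive reading of the raw counts: the wealthier area seems to have far more potholes.
print(reports)

# If the reporting rate were known, dividing by it would undo the bias
# (inverse probability weighting). In practice that rate is rarely known.
corrected = {area: reports[area] / smartphone_rate[area] for area in reports}
print(corrected)
```

The raw counts suggest the wealthier area has more than twice as many potholes. The reweighted counts recover the equal totals, but only because the sketch assumes the reporting rates are known exactly.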

Another notable example is the “Correctional Offender Management Profiling for Alternative Sanctions” algorithm, or COMPAS for short. A number of states across the United States use this algorithm to predict recidivism, the likelihood that a former criminal will re-offend. An analysis of the algorithm by ProPublica, an investigative journalism organization, sparked controversy when it appeared to show that the algorithm discriminated on the basis of race, a protected class in the United States. To give a better idea of what is going on, the algorithm used to predict recidivism looks something like this:

$$\text{Recidivism Risk Score} = (\text{age} \times -w_1) + (\text{age at first arrest} \times -w_2) + (\text{history of violence} \times w_3) + (\text{vocation education} \times w_4) + (\text{history of noncompliance} \times w_5)$$
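
To make the structure of this score concrete, here is a minimal Python sketch of the weighted sum. Several pieces are hypothetical: the weights (`W_AGE` and the others), the `Defendant` class, and the example values. The actual COMPAS weights are not public. Race never appears as an input, yet two people can receive different scores purely because of differences in their recorded histories.

```python
from dataclasses import dataclass


@dataclass
class Defendant:
    age: float
    age_at_first_arrest: float
    history_of_violence: float       # scale score: higher means more recorded violence
    vocation_education: float        # scale score, as it enters the formula above
    history_of_noncompliance: float  # scale score: higher means more recorded noncompliance


# Hypothetical weights for illustration only; the real COMPAS weights are not public.
W_AGE = 0.05
W_AGE_FIRST_ARREST = 0.04
W_VIOLENCE = 0.60
W_VOCATION = 0.30
W_NONCOMPLIANCE = 0.50


def risk_score(d: Defendant) -> float:
    """Weighted sum from the formula: the two age terms lower the score,
    the remaining terms raise it. Race is not an input."""
    return (
        d.age * -W_AGE
        + d.age_at_first_arrest * -W_AGE_FIRST_ARREST
        + d.history_of_violence * W_VIOLENCE
        + d.vocation_education * W_VOCATION
        + d.history_of_noncompliance * W_NONCOMPLIANCE
    )


# Two hypothetical people who are identical except for their *recorded*
# history of noncompliance (e.g., one lives in a more heavily policed area).
a = Defendant(30, 20, 1, 2, 1)
b = Defendant(30, 20, 1, 2, 3)
print(risk_score(a) < risk_score(b))  # True
```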

It should be clear that race is not one of the variables used as a predictor. However, the distributions of some of these variables, such as ‘history of violence’ and ‘vocation education’, may differ significantly between races. These differences stem from historical injustices in the United States and are reflected in demographic, social, and law enforcement statistics. Law enforcement statistics are themselves often criticized, since algorithms are frequently used to decide which neighborhoods to patrol. An algorithm can exploit these mismatched distributions, producing disparities between races and thus results that are, to some extent, biased for or against certain races. The algorithm then operationalizes these entrenched biases, allowing them to persist and leading to further injustices. This loop is essentially a self-fulfilling prophecy.

Historical Injustices → Training Data → Algorithmic Bias in Production

This leads to some difficult questions — do we remove these problematic variables? How do we determine whether a feature will lead to discriminatory results? Do we need to engineer a metric that provides a threshold for ‘discrimination’? One could take this to the extreme and remove almost all variables, but then the algorithm would be of no use. This paints a bleak picture, but fortunately, there are ways to tackle these issues that will be discussed later in this article.

These examples are not isolated incidents. Even breast cancer prediction algorithms show a degree of unfair discrimination. Deep learning algorithms that predict breast cancer from mammograms are much less accurate for black women than for white women. This is partly because the datasets used to train these algorithms consist predominantly of mammograms of white women. It is also because the data distribution for breast cancer likely differs substantially between black women and white women. According to the Centers for Disease Control and Prevention (CDC), “Black women and white women get breast cancer at about the same rate, but black women die from breast cancer at a higher rate than white women”.

Motives

These issues raise questions about the motives of algorithmic developers — did the individuals that designed these models do so knowingly? Do they have an agenda they are trying to push and trying to hide it inside gray box machine learning models?

Although these questions are impossible to answer with certainty, it is useful to consider Hanlon’s razor when asking such questions:

Never attribute to malice that which is adequately explained by stupidity — Robert J. Hanlon

In other words, there are (thankfully) not that many evil people in the world, and there are certainly fewer evil people than incompetent ones. On average, we should assume that when things go wrong, it is more likely due to incompetence, naivety, or oversight than to outright malice. While some malicious actors likely want to push discriminatory agendas, they are probably a minority.

Based on this assumption, what could have gone wrong? One could argue that statisticians, machine learning practitioners, data scientists, and computer scientists are not adequately taught how to develop supervised learning algorithms that control and correct for prejudicial proclivities.

Why is this the case?

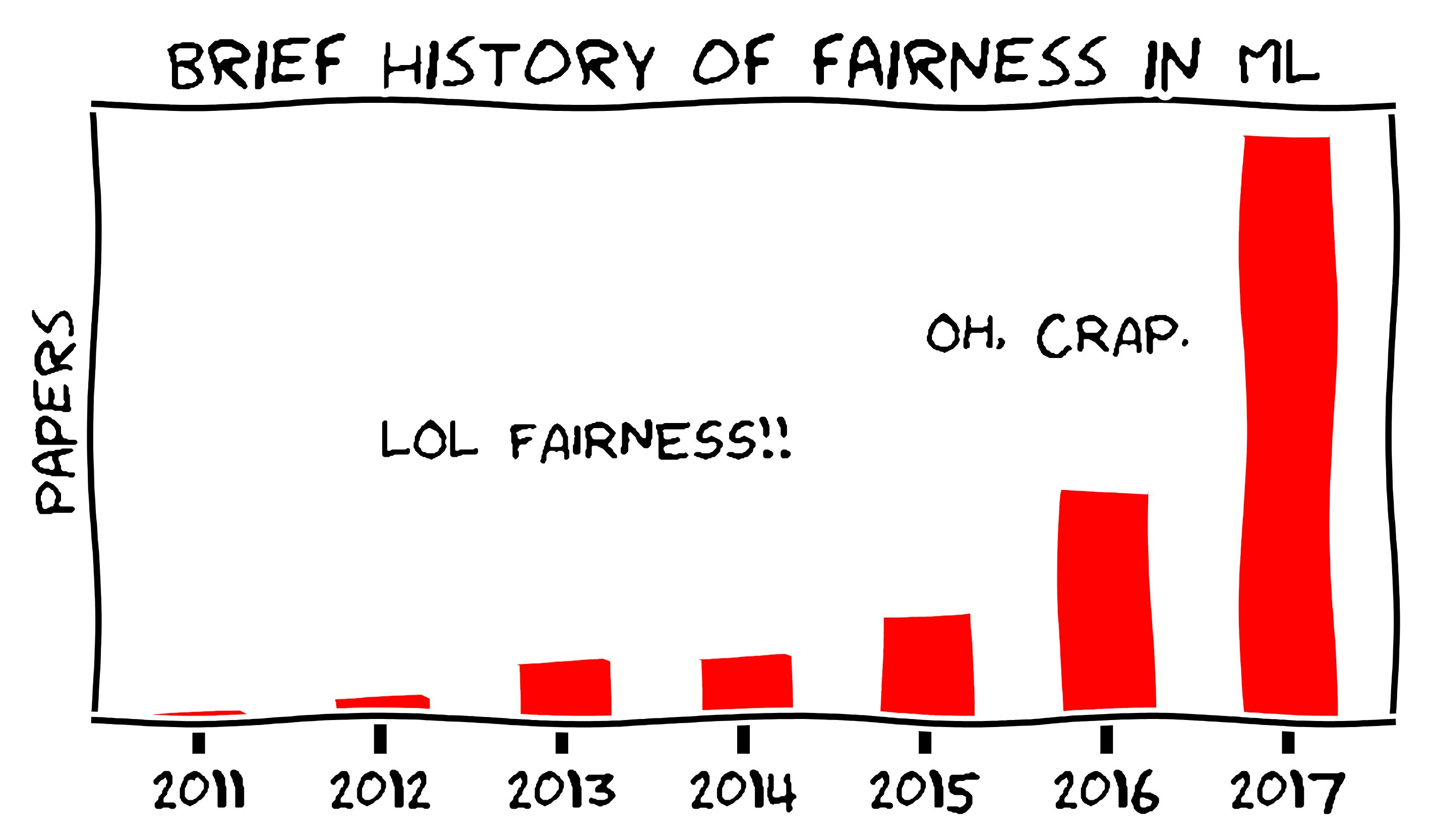

In truth, techniques that reliably achieve this do not yet exist. Machine learning fairness is a young subfield of machine learning. It has grown in popularity over the last few years in response to the rapid integration of machine learning into social domains. Computer scientists, unlike doctors, are not necessarily trained to consider the ethical implications of their work. Only relatively recently (arguably since the advent of social media) have the designs and inventions of computer scientists taken on an ethical dimension.

This is reflected in the fact that most computer science journals do not require ethical statements or ethical considerations for submitted manuscripts. An image database containing millions of images of real people undoubtedly has ethical implications. Yet because of physical distance and the sheer size of the dataset, computer scientists are far removed from the data subjects. The implications for any one individual may therefore be perceived as negligible and disregarded. In contrast, if a sociologist or psychologist runs a test on a small group of individuals, an entire ethical review board must review and approve the experiment to ensure it does not cross any ethical boundaries.

On the bright side, this is slowly beginning to change. More data science and computer science programs are starting to require students to take classes on data ethics and critical thinking. Journals are also beginning to recognize that ethical review by institutional review boards (IRBs) and ethical statements in manuscripts may be a necessary addition to the peer-review process. The rising interest in machine learning fairness is only strengthening this position.

Fairness in Machine Learning

As mentioned previously, the widespread adoption of supervised machine learning algorithms has raised concerns about algorithmic fairness. As these algorithms are adopted more widely and gain more control over our lives, these concerns will only grow. The machine learning community is well aware of these challenges. Algorithmic fairness is now a rapidly developing subfield of machine learning, with many excellent researchers such as Moritz Hardt, Cynthia Dwork, Solon Barocas, and Michael Feldman.

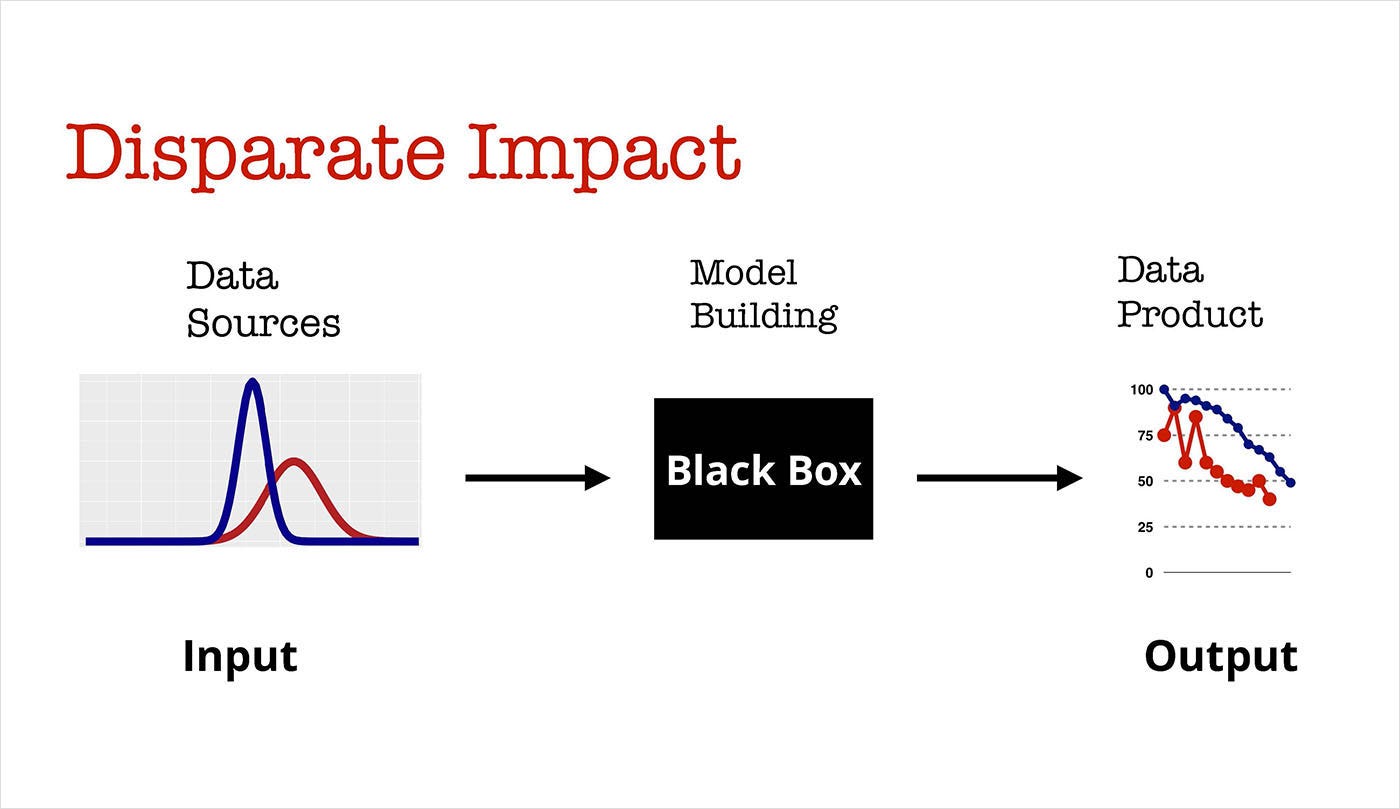

That being said, there are still major hurdles to overcome before we can achieve truly fair algorithms. It is fairly easy to prevent disparate treatment, the explicit differential treatment of one group relative to another. For example, we can remove the variables that correspond to protected attributes (e.g., race, gender) from the dataset. It is much harder to prevent disparate impact, the implicit differential treatment of one group relative to another. Disparate impact is usually caused by what are called redundant encodings in the data.

A redundant encoding tells us information about a protected attribute, such as race or gender, based on features present in our dataset that correlate with these attributes. For example, buying certain products online (such as makeup) may be highly correlated with gender, and certain zip codes may have different racial demographics that an algorithm might pick up on.
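
The effect of a redundant encoding can be measured directly. One approach is to check how well a seemingly neutral feature predicts the protected attribute. The Python sketch below uses hypothetical zip codes (`Z1` to `Z3`) and hypothetical group counts (`A`, `B`). It compares guessing the group from the zip code alone against always guessing the overall majority group.

```python
# Hypothetical counts of people in each protected group (A, B) per zip code.
counts: dict[str, dict[str, int]] = {
    "Z1": {"A": 90, "B": 10},
    "Z2": {"A": 15, "B": 85},
    "Z3": {"A": 50, "B": 50},
}


def proxy_accuracy(table: dict[str, dict[str, int]]) -> float:
    """Accuracy of guessing the protected group from the zip code alone,
    by predicting the most common group within each zip code."""
    correct = sum(max(groups.values()) for groups in table.values())
    total = sum(sum(groups.values()) for groups in table.values())
    return correct / total


def baseline_accuracy(table: dict[str, dict[str, int]]) -> float:
    """Accuracy of ignoring the zip code and always guessing the overall majority group."""
    totals: dict[str, int] = {}
    for groups in table.values():
        for group, n in groups.items():
            totals[group] = totals.get(group, 0) + n
    return max(totals.values()) / sum(totals.values())


print(f"baseline: {baseline_accuracy(counts):.2f}")        # 0.52
print(f"from zip code: {proxy_accuracy(counts):.2f}")      # 0.75
```

Dropping the protected column would not remove this information. A model that sees the zip code can still recover much of it.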

An algorithm is not deliberately discriminating along these lines. Even so, data-driven algorithms that surpass human performance on pattern recognition tasks will inevitably pick up on these associations embedded within the data, however weak they may be. Moreover, if these associations were non-informative (i.e., if they did not increase the algorithm’s accuracy), the algorithm would tend to ignore them. The fact that it uses them implies that these protected attributes carry some predictive information. This raises many challenges for researchers, such as:

- Is there a fundamental tradeoff between fairness and accuracy? Are we able to extract relevant information from protected features without them being used in a discriminatory way?
- What is the best statistical measure for embedding the notion of ‘fairness’ within algorithms?
- How can we ensure that governments and companies produce algorithms that protect individual fairness?
- What biases are embedded in our training data, and how can we mitigate their influence?

We will touch upon some of these questions in the remainder of the article.

The Problem with Data

In the last section, we saw that redundant encodings can lead to features that correlate with protected attributes. As our dataset grows, so does the likelihood that such correlations are present. In the age of big data, this presents a big problem: the more data we have access to, the more information we have at our disposal to discriminate. This discrimination does not have to be purely race- or gender-based. It could manifest as discrimination against individuals with pink hair, against web developers, against Starbucks coffee drinkers, or against any combination of these groups. This section presents several biases in training data and algorithms that complicate the creation of fair algorithms.

The Majority Bias

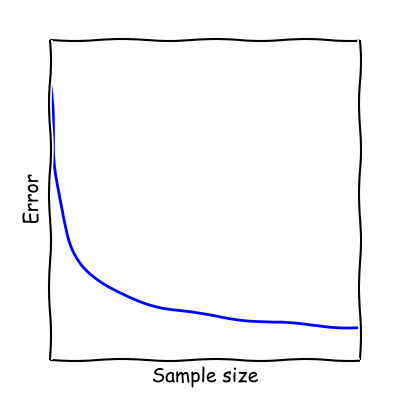

Algorithms have no affinity for any particular group; however, because of their statistical basis, they do have a proclivity for the majority group. As outlined by Professor Moritz Hardt in a Medium article, classifiers generally improve with the number of data points used to train them, since the error scales with the inverse square root of the number of samples, as shown below.
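
Written out, with $n$ denoting the number of training samples available for a group (a symbol introduced here for convenience), this scaling is:

$$\text{error} \propto \frac{1}{\sqrt{n}}$$

Under this relationship, halving the error requires roughly four times as much data.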

This leads to an unsettling reality. Since there will, by definition, always be less data available about minorities, our models will tend to perform worse on those groups than on the majority. This holds only if the majority and minority groups are drawn from separate distributions. If they are drawn from a single distribution, increasing the sample size benefits both groups equally.

An example of this is the breast cancer detection algorithms we discussed previously. This deep learning model was developed by researchers at MIT and trained on a dataset of 60,000 mammogram images. Only 5% of those images were mammograms of black women, who are 43% more likely to die from breast cancer. As a result, the algorithm performed worse when tested on black women, and on minority groups in general. This may be partly explained by the fact that breast cancer often manifests at an earlier age among women of color. Because the data distribution for women of color was underrepresented in training, the resulting performance gap amounts to disparate impact.
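
Combining these numbers with the error scaling above gives a rough sense of the gap. The Python sketch below makes three simplifying assumptions: error is exactly proportional to 1/√n, each group is drawn from its own distribution, and the remaining 95% of images are treated as a single majority group. It estimates how much larger the minority-group error would be.

```python
import math


def relative_error(n: int) -> float:
    """Error up to an unknown constant factor, assuming error ∝ 1/sqrt(n)."""
    return 1 / math.sqrt(n)


total = 60_000
n_minority = total * 5 // 100       # 5% of the images: 3,000
n_majority = total - n_minority     # simplification: the rest as one group, 57,000

ratio = relative_error(n_minority) / relative_error(n_majority)
print(f"minority-group error is roughly {ratio:.1f}x the majority-group error")  # 4.4x
```

The constant factor cancels in the ratio, so the result depends only on the two sample sizes, √(57,000 / 3,000) = √19.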

This raises another important question: is accuracy a suitable proxy for fairness? In the above example, we assumed that lower classification accuracy on a minority group corresponds to unfairness. However, definitions of fairness differ widely and the concept is somewhat ambiguous. It can therefore be difficult to ensure that the variable we are measuring is a good proxy for fairness. For example, our algorithm may have 50% accuracy for both black and white women. If 30% of its predictions are false positives for white women and 30% are false negatives for black women, this would also indicate disparate impact.
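
Equal accuracy can hide very different error profiles, as the Python sketch below shows. The confusion-matrix counts are hypothetical, with 100 people per group. They are chosen so both groups have 50% accuracy, while 30% of predictions are false positives for one group and 30% are false negatives for the other. The sketch then compares the false positive rate (FPR) and false negative rate (FNR) per group.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # predicted 1, truly 1
    fp: int  # predicted 1, truly 0
    tn: int  # predicted 0, truly 0
    fn: int  # predicted 0, truly 1

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def fpr(self) -> float:
        """Share of true negatives wrongly predicted positive."""
        return self.fp / (self.fp + self.tn)

    @property
    def fnr(self) -> float:
        """Share of true positives the model misses."""
        return self.fn / (self.fn + self.tp)


# Hypothetical counts for 100 people per group.
groups = {
    "white": Confusion(tp=25, fp=30, tn=25, fn=20),
    "black": Confusion(tp=25, fp=20, tn=25, fn=30),
}
for name, c in groups.items():
    print(f"{name}: accuracy={c.accuracy:.2f} FPR={c.fpr:.2f} FNR={c.fnr:.2f}")
```

Both groups score 0.50 accuracy. However, one group bears more false alarms and the other more missed diagnoses, which accuracy alone does not reveal.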

From this example, it seems almost intuitive that this is a form of discrimination since there is differential treatment on the basis of group affiliation. However, there are times when this group affiliation is informative to our prediction. For example, for an e-commerce website trying to decide what content to show its users, having an idea of the individual’s gender, age, or socioeconomic status is incredibly helpful. This implies that if we merely remove protected fields from our data, we will decrease the accuracy (or some other performance metric) of our model. Similarly, if we had sufficient data on both black and white women for the breast cancer model, we could develop an algorithm that used race as one of the inputs. Due to the differences in data distributions between the races, it is likely that the accuracy would have increased for both groups.

Thus, the ideal case would be to have an algorithm that contains these protected features and uses them to make algorithmic generalizations but is constrained by fairness metrics to prevent the algorithm from discriminating.

This idea was proposed by Moritz Hardt, Eric Price, and Nathan Srebro in ‘Equality of Opportunity in Supervised Learning’. It has several advantages over other metrics, such as statistical parity and equalized odds. We will discuss all three of these methods in the next section.

Definitions of Fairness

In this section, we analyze some of the notions of fairness proposed by machine learning fairness researchers. We begin with statistical parity and then turn to its more nuanced variants, equality of opportunity and equalized odds.

Statistical Parity

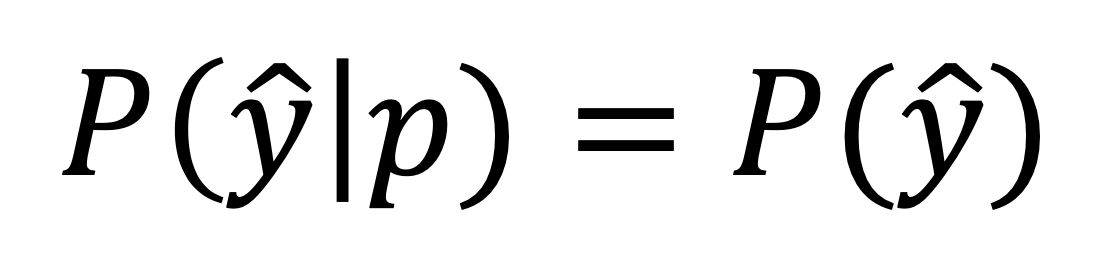

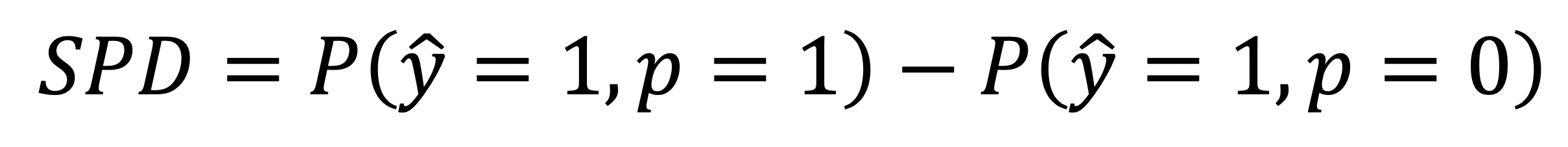

Statistical parity is the oldest and simplest method of enforcing fairness. It is discussed at length in the arXiv article “Algorithmic decision making and the cost of fairness”. The formula for statistical parity is shown below.
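
For two groups, statistical parity is commonly written as follows. Here $C$ is the classifier’s decision (the notation used for the parity variants later in this section) and $p$ is group affiliation:

$$P(C = 1 \mid p = 0) = P(C = 1 \mid p = 1)$$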

Under statistical parity, the outcome is independent of an individual’s group affiliation. Intuitively, this means that the same proportion of each group will be classified as positive (or negative). For this reason, statistical parity is also described as demographic parity. Statistical parity is enforced across all demographic groups subsumed within p.

For a dataset that has not had statistical parity applied, we can measure how far our predictions deviate from statistical parity by calculating the statistical parity distance shown below.
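
For two groups, one common way to write this distance is the absolute difference in positive-prediction rates. The symbol $d_{SP}$ is introduced here for convenience. The distance is 0 exactly when statistical parity holds:

$$d_{SP} = \left| P(C = 1 \mid p = 1) - P(C = 1 \mid p = 0) \right|$$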

This distance can provide us with a metric for how fair or unfair a given dataset is based on the group affiliation p.

What are the tradeoffs of using statistical parity?

Statistical parity doesn’t ensure fairness.

As you may have noticed, though, statistical parity says nothing about the accuracy of these predictions. One group may have a much higher proportion of truly positive cases than another. In that case, forcing equal positive rates can produce large disparities between the groups’ false positive and true positive rates. This itself can cause disparate impact, as qualified individuals from one group (p=0) may be passed over in favor of unqualified individuals from another group (p=1). In this sense, statistical parity is more akin to equality of outcome.

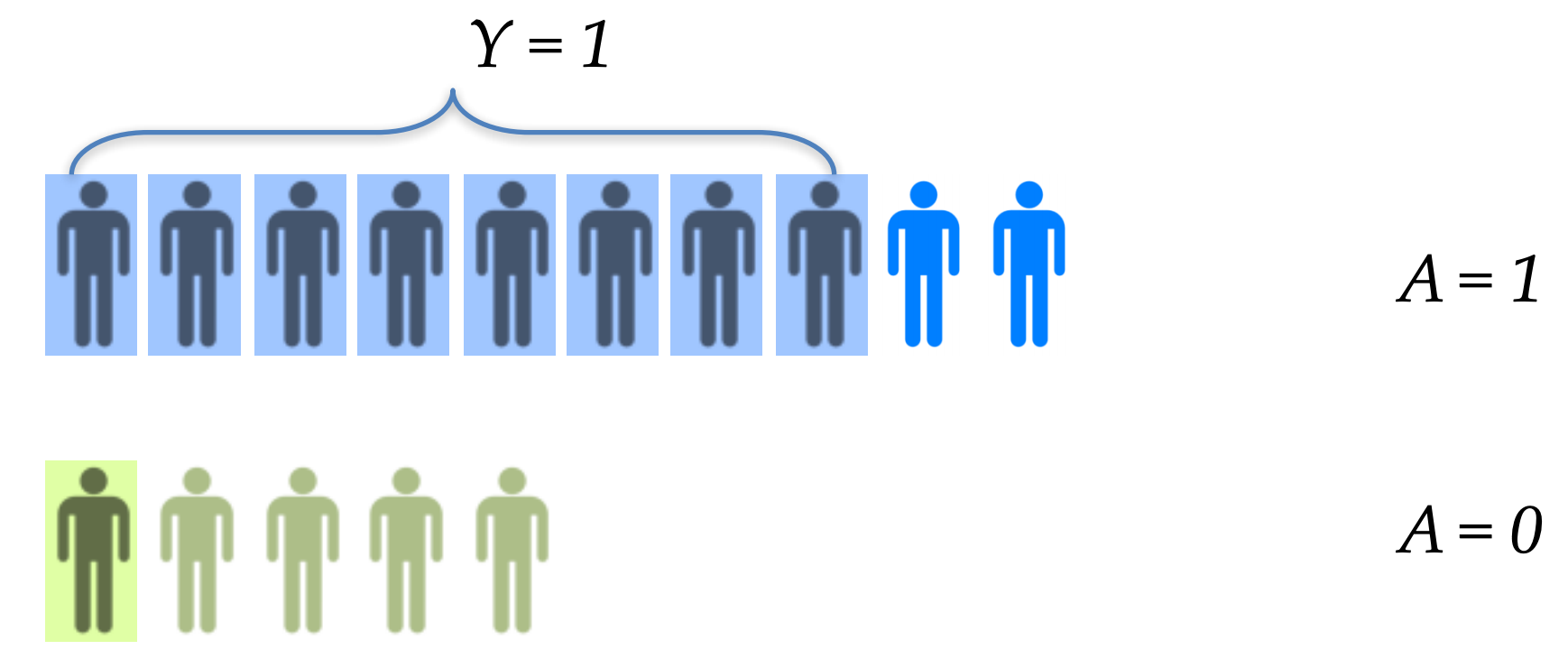

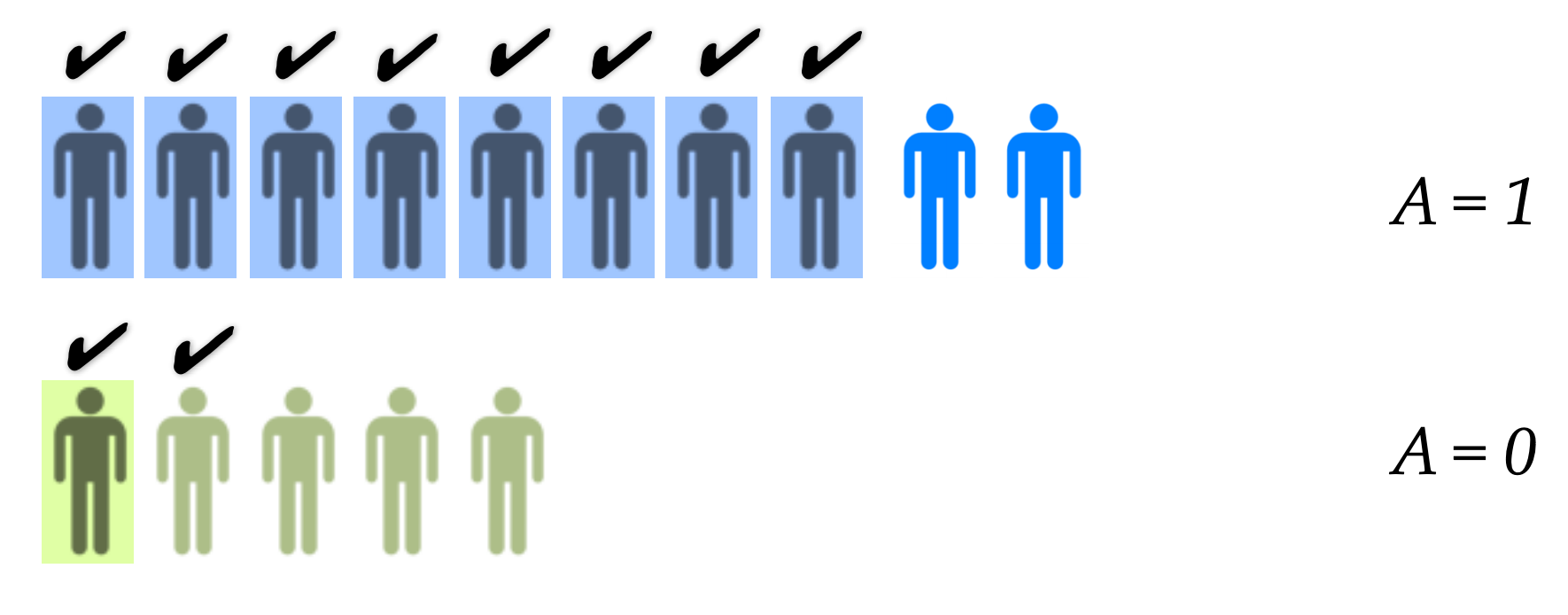

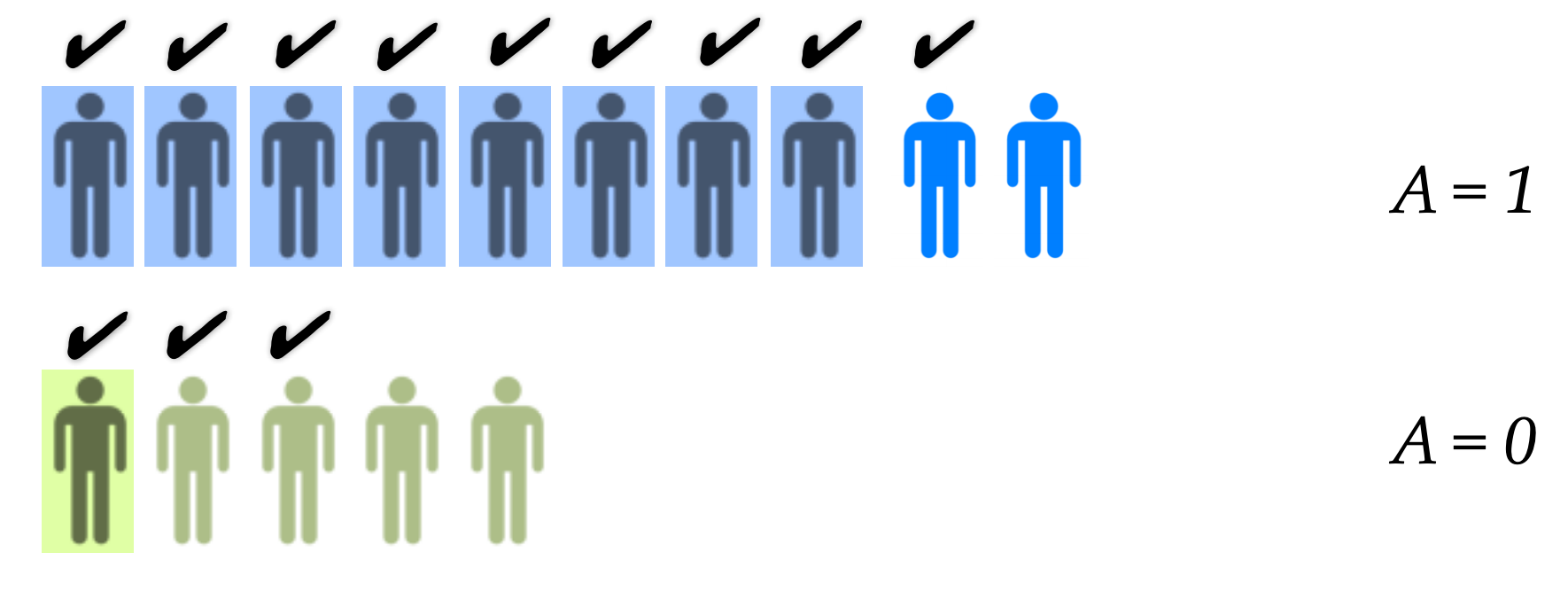

The figures illustrate this nicely. Suppose we have two groups: one with 10 people (group A=1) and one with 5 people (group A=0). If 8 people (80%) in group A=1 achieve a score of Y=1, then 4 people (80%) in group A=0 would also have to be given a score of Y=1, regardless of other factors.
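
The arithmetic of this example can be checked with a short Python sketch. Here A plays the role of the group affiliation p and Y the role of the decision. The helper names are hypothetical, and `fractions.Fraction` keeps the rates exact.

```python
from fractions import Fraction


def positive_rate(num_positive: int, group_size: int) -> Fraction:
    """Share of a group that receives the positive outcome (Y=1)."""
    return Fraction(num_positive, group_size)


def parity_distance(rate_1: Fraction, rate_0: Fraction) -> Fraction:
    """Absolute difference in positive rates; 0 means statistical parity holds."""
    return abs(rate_1 - rate_0)


rate_a1 = positive_rate(8, 10)  # group A=1: 8 of 10 people scored Y=1
size_a0 = 5                     # group A=0 has 5 people

# Number of people in group A=0 who must score Y=1 for parity to hold.
required = rate_a1 * size_a0
print(required)  # 4

# Parity distance for every possible number of positives in group A=0.
for k in range(size_a0 + 1):
    print(k, float(parity_distance(rate_a1, positive_rate(k, size_a0))))
```

If `required` were not a whole number, exact parity would be impossible for a group of that size, and only the distance could be minimized.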

Statistical parity reduces algorithmic accuracy

The second problem with statistical parity is that a protected attribute may provide information that would be useful for a prediction. The strict rule imposed by statistical parity prevents us from leveraging that information. Gender might be very informative for predicting which items people will buy, but if we are prevented from using it, our model becomes weaker and its accuracy suffers. A better method would allow us to account for the differences between these groups without generating disparate impact. Clearly, statistical parity is misaligned with the fundamental goal of accuracy in machine learning. When the true base rates differ between groups, even a perfect classifier will not satisfy demographic parity.
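
The conflict between a perfect classifier and demographic parity can be demonstrated directly. The Python sketch below uses hypothetical labels whose base rate of Y=1 is 0.2 in one group and 0.5 in the other. A classifier that reproduces the labels exactly is 100% accurate for both groups, yet its statistical parity distance is 0.3.

```python
def positive_rate(predictions: list[int]) -> float:
    """Fraction of a group that is predicted positive."""
    return sum(predictions) / len(predictions)


def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Hypothetical true labels: the base rate of Y=1 differs between the groups.
labels = {
    "p=0": [1, 1, 0, 0, 0, 0, 0, 0, 0, 0],  # base rate 0.2
    "p=1": [1, 1, 1, 1, 1, 0, 0, 0, 0, 0],  # base rate 0.5
}

# A perfect classifier simply reproduces the true labels.
predictions = {group: list(y) for group, y in labels.items()}

for group in labels:
    print(group, accuracy(predictions[group], labels[group]), positive_rate(predictions[group]))

gap = abs(positive_rate(predictions["p=1"]) - positive_rate(predictions["p=0"]))
print(f"statistical parity distance: {gap:.1f}")  # 0.3
```

To close the gap, the classifier would have to mislabel some individuals in one group or the other, which is exactly the accuracy cost described above.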

For these reasons, statistical parity is no longer considered a credible option by several machine learning fairness researchers. However, statistical parity is a simple and useful starting point that other definitions of fairness have built upon.

There are slightly more nuanced versions of statistical parity, such as true positive parity, false positive parity, and positive rate parity.

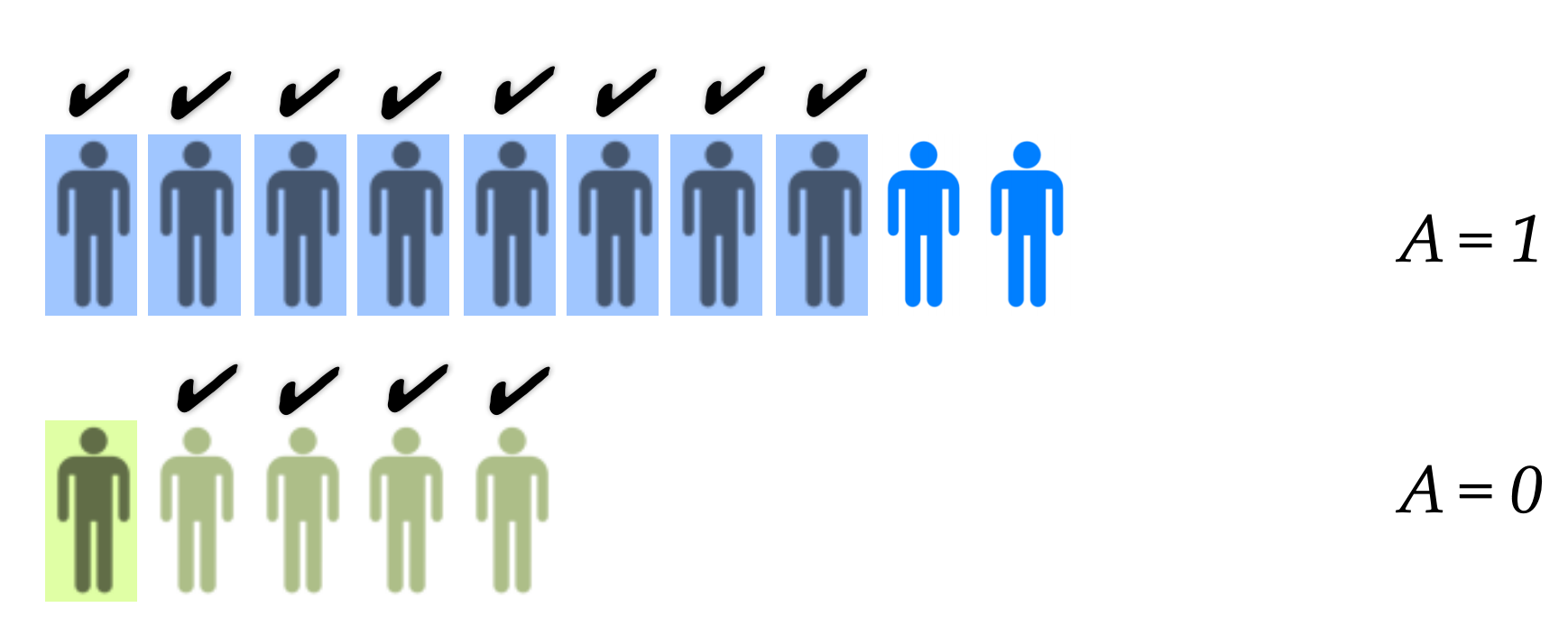

True Positive Parity (Equality of Opportunity)

This applies only to binary predictions and enforces statistical parity on true positives (cases where the prediction was 1 and the true output was also 1).

It ensures that in both groups, of all those who qualified (Y=1), an equal proportion of individuals will be classified as qualified (C=1). This is useful when we are only interested in parity over the positive outcome.
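
In the same notation as above, with $C$ the decision, $Y$ the true outcome, and $p$ the group, equality of opportunity requires equal true positive rates:

$$P(C = 1 \mid Y = 1, p = 0) = P(C = 1 \mid Y = 1, p = 1)$$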

False Positive Parity

This also applies only to binary predictions and focuses on false positives (cases where the prediction was 1 but the true output was 0). It is analogous to true positive parity but enforces parity across false positive results instead.
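
Written in the same notation, false positive parity requires equal false positive rates:

$$P(C = 1 \mid Y = 0, p = 0) = P(C = 1 \mid Y = 0, p = 1)$$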

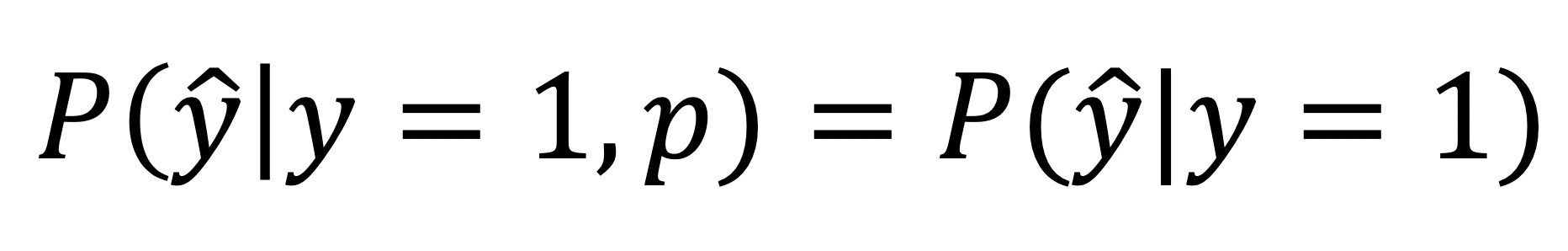

Positive Rate Parity (Equalized Odds)

This combines statistical parity for true positives and for false positives simultaneously, and is also known as equalized odds.

Notice that equality of opportunity relaxes the equalized odds condition: it no longer requires the rates to be equal in the case where Y=0. Equalized odds and equality of opportunity are also more flexible. They can incorporate some of the information from the protected variable without resulting in disparate impact.
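
The difference between the two criteria can be shown with a short Python sketch. It computes each group’s true positive rate (TPR) and false positive rate (FPR) and checks both criteria. The data and the tolerance `tol` are hypothetical. In practice some small tolerance is usually needed, because exact equality of empirical rates is rare.

```python
from typing import Sequence


def tpr_fpr(y_true: Sequence[int], c: Sequence[int]) -> tuple[float, float]:
    """True positive rate P(C=1 | Y=1) and false positive rate P(C=1 | Y=0)."""
    pos = [ci for yi, ci in zip(y_true, c) if yi == 1]
    neg = [ci for yi, ci in zip(y_true, c) if yi == 0]
    return sum(pos) / len(pos), sum(neg) / len(neg)


def check_parity(groups: dict, tol: float = 0.05) -> dict:
    """Compare groups p=0 and p=1 under equal opportunity and equalized odds."""
    tpr0, fpr0 = tpr_fpr(*groups[0])
    tpr1, fpr1 = tpr_fpr(*groups[1])
    tpr_gap, fpr_gap = abs(tpr0 - tpr1), abs(fpr0 - fpr1)
    return {
        "tpr_gap": tpr_gap,
        "fpr_gap": fpr_gap,
        "equal_opportunity": tpr_gap <= tol,                  # only the Y=1 rates must match
        "equalized_odds": tpr_gap <= tol and fpr_gap <= tol,  # Y=1 and Y=0 rates must match
    }


# Hypothetical (true labels Y, decisions C) for groups p=0 and p=1.
groups = {
    0: ([1, 1, 1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]),
    1: ([1, 1, 1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 0, 1, 1, 1, 0, 0, 0]),
}
report = check_parity(groups)
print(report)
```

Both groups have a TPR of 0.75, so equality of opportunity holds. Their FPRs (1/6 vs. 1/2) differ, so equalized odds does not.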

Notice that whilst all of these provide some form of a solution that can be argued to be fair, none of these are particularly satisfying. One reason for this is that there are many conflicting definitions of what fairness entails, and it is difficult to capture these in algorithmic form. These are good starting points but there is still much room for improvement.

Other Methods to Increase Fairness

Statistical parity, equalized odds, and equality of opportunity are all great starting points, but there are other things we can do to ensure that algorithms are not used to unduly discriminate individuals. Two such solutions which have been proposed are human-in-the-loop and algorithmic transparency.

Human-in-the-Loop

This sounds like some kind of rollercoaster ride, but it merely refers to a paradigm whereby a human oversees the algorithmic process. Human-in-the-loop is often implemented in situations that have high risks if the algorithm makes a mistake. For example, missile detection systems that inform the military when a missile is detected allow individuals to review the situation and decide how to respond — the algorithm does not respond without human interaction. Just imagine the catastrophic consequences of running nuclear weapon systems with AI that had permission to fire when they detected a threat — one false positive and the entire world would be doomed.
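
The defining property of human-in-the-loop is that the human sits in the decision path itself. The minimal Python sketch below illustrates this. The function name, the threshold, and the `ask_human` callback are hypothetical. The model may raise an alert, but no action is taken unless a human approves it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    action: str
    decided_by: str


def human_in_the_loop(
    threat_score: float,
    alert_threshold: float,
    ask_human: Callable[[float], bool],
) -> Decision:
    """The model can only raise an alert; acting on it requires human approval."""
    if threat_score < alert_threshold:
        # Below the threshold the model raises no alert and nothing happens.
        return Decision(action="no alert", decided_by="model")
    # Above the threshold the model still does not act on its own:
    # a human reviews the situation and makes the final call.
    approved = ask_human(threat_score)
    return Decision(action="respond" if approved else "stand down", decided_by="human")


# Usage: the model flags a likely threat, but the reviewer judges it a false positive.
decision = human_in_the_loop(0.97, alert_threshold=0.9, ask_human=lambda score: False)
print(decision)  # Decision(action='stand down', decided_by='human')
```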

Another example of this is the COMPAS system for recidivism. The system does not categorize you as a recidivist and make a legal judgment. Instead, the judge reviews the COMPAS score and uses it as one factor in their evaluation of the circumstances. This raises new questions, such as how humans interact with the algorithmic system. Studies using Amazon Mechanical Turk have shown that some individuals follow the algorithm’s judgment wholeheartedly, because they perceive it to have greater knowledge than a human is likely to have. Others take its output with a pinch of salt, and some ignore it completely. Research into human-in-the-loop systems is relatively novel, but we are likely to see more of it as machine learning becomes more pervasive in our society.

Another important, related concept is human-on-the-loop. This is similar to human-in-the-loop, but instead of being actively involved in the process, the human passively oversees the algorithm. For example, a data analyst might be in charge of monitoring sections of an oil and gas pipeline to ensure that all of the sensors and processes are running appropriately and that there are no concerning signals or errors. The analyst is in an oversight position but is not actively involved in the process. Human-on-the-loop is inherently more scalable than human-in-the-loop since it requires less manpower, but it may be untenable in certain circumstances, such as looking after those nuclear missiles!
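
By contrast with the gate sketched for human-in-the-loop, a human-on-the-loop setup lets the automated process run and only surfaces exceptions to the overseer. A minimal sketch for the pipeline example, assuming hypothetical sensor names and safe operating ranges (real monitoring systems would be far richer):

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical safe operating ranges for two pipeline sensors.
SAFE_RANGES: Dict[str, Tuple[float, float]] = {
    "pressure": (40.0, 80.0),
    "flow_rate": (100.0, 500.0),
}


def monitor(readings: Dict[str, float]) -> List[str]:
    """Runs automatically on every batch of readings and returns alerts.

    Nothing here waits for approval: normal readings pass straight through,
    and the human overseer only sees the exceptions.
    """
    alerts: List[str] = []
    for sensor, (low, high) in SAFE_RANGES.items():
        value: Optional[float] = readings.get(sensor)
        if value is None:
            alerts.append(f"{sensor}: no reading")  # possible sensor fault
        elif not low <= value <= high:
            alerts.append(f"{sensor}: out of range ({value})")
    return alerts


if __name__ == "__main__":
    print(monitor({"pressure": 62.0, "flow_rate": 250.0}))  # []
    print(monitor({"pressure": 95.0}))
    # ['pressure: out of range (95.0)', 'flow_rate: no reading']
```

One analyst can watch many such monitors at once, which is where the scalability advantage comes from.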

另一个重要且类似的概念是“人在环”。 这类似于循环中的人,但是他们没有主动地参与流程,而是被动地参与了算法的监督。 例如,数据分析员可能负责监视石油和天然气管道的各个部分,以确保所有传感器和过程均正常运行,并且没有相关的信号或错误。 该分析师处于监督位置,但没有积极参与该过程。 “在环人员”比“在环人员”具有更高的可伸缩性,因为它需要的人力更少,但在某些情况下(例如照顾那些核导弹)可能难以为继!

Algorithmic Transparency

The dominant position in the legal literature is that fairness should be achieved through algorithmic interpretability and explainability, via transparency. The argument is that if an algorithm can be viewed publicly and scrutinized, then we can be highly confident that no disparate impact is built into the model. Whilst this is clearly desirable on many levels, algorithmic transparency has some downsides.

在法律文献中,关于公平的主导地位是通过算法的可解释性和通过透明度的可解释性。 有论点是,如果能够公开查看算法并进行仔细分析,那么就可以高度确信地确保该模型不会产生不同的影响。 尽管这在许多层面上显然是理想的,但算法透明性也有一些缺点。

Proprietary algorithms by definition cannot be transparent.

专有算法的定义不能透明 。

From a commercial standpoint, this idea is untenable in most circumstances: trade secrets or proprietary information may be leaked if algorithms and business processes are made available for all to see. Imagine Facebook or Twitter being asked to release their algorithms to the world so that they could be scrutinized for bias. Most likely, I could download their code and start my own version of Twitter or Facebook fairly easily. Full transparency is only really an option for algorithms used in public services, such as those used by government (to some extent), in healthcare, or in the legal system. Since legal scholars are predominantly concerned with the legal system, it makes sense that transparency remains the consensus among them for now.

从商业角度来看,这种想法在大多数情况下都是站不住脚的-如果提供算法和业务流程供所有人查看,则商业秘密或专有信息可能会泄露。 想象一下,Facebook或Twitter被要求向世界发布其算法,以便对其进行仔细检查以确保没有偏见。 最有可能的是,我可以下载他们的代码,然后轻松启动自己的Twitter或Facebook版本。 对于政府(在一定程度上),医疗保健,法律体系等公共服务中使用的算法,完全透明实际上只是一种选择。由于法律学者主要关注法律体系,因此这仍然有意义目前的共识。

In the future, regulation of algorithmic fairness may be a more tenable solution than algorithmic transparency for private companies with a vested interest in keeping their algorithms out of the public eye. Andrew Tutt discusses this idea in his paper “An FDA for Algorithms” [44], which focuses on the development of a regulatory body, similar to the FDA, to regulate algorithms. Algorithms could be submitted to the regulatory body, or perhaps to third-party auditing services, and analyzed to ensure they are suitable for use without resulting in disparate impact.
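
To make the auditing idea more concrete, here is a minimal sketch of one check a regulator or third-party auditor might run on a model's decisions. It is an illustrative assumption, not Tutt's proposal: it applies the "four-fifths rule" from US employment guidelines, under which a group whose selection rate is below 80% of the most-favoured group's rate is treated as evidence of adverse impact, and which Feldman et al. [9] use to formalize disparate impact. Function names and data are hypothetical, and a real audit would examine far more than selection rates.

```python
from typing import Dict, Sequence, Tuple


def selection_rates(decisions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Fraction of favourable (1) decisions in each group."""
    totals: Dict[str, int] = {}
    favourable: Dict[str, int] = {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        favourable[g] = favourable.get(g, 0) + d
    return {g: favourable[g] / totals[g] for g in totals}


def disparate_impact_audit(decisions: Sequence[int], groups: Sequence[str],
                           threshold: float = 0.8) -> Dict[str, Tuple[float, bool]]:
    """Ratio of each group's selection rate to the highest group's rate.

    A ratio below `threshold` (0.8 under the four-fifths rule) fails the check
    and is flagged as potential disparate impact.
    """
    rates = selection_rates(decisions, groups)
    best = max(rates.values())
    report: Dict[str, Tuple[float, bool]] = {}
    for g, r in rates.items():
        ratio = r / best if best > 0 else 1.0
        report[g] = (round(ratio, 3), ratio >= threshold)
    return report


if __name__ == "__main__":
    decisions = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["x"] * 4 + ["y"] * 4
    print(disparate_impact_audit(decisions, groups))
    # {'x': (1.0, True), 'y': (0.333, False)}
```

Because the auditor only needs the model's decisions and group labels, a check like this could in principle be run without the company disclosing its code.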

将来,对于那些既有利益让自己的算法不受公众关注的私人公司,也许算法公平性的法规可能比算法透明性更可行。 安德鲁·塔特(Andrew Tutt)在他的论文“ FDA的算法 ”中讨论了这个想法,该论文的重点是开发类似于FDA的算法来监管算法。 可以将算法提交给监管机构或第三方审核服务,并进行分析,以确保算法适合使用而不会造成不同的影响。

Clearly, such an idea would require a great deal of discussion, money, and expertise to implement, but from my perspective it seems like a potentially workable solution. There is still a long way to go to ensure our algorithms are free of both disparate treatment and disparate impact. With a combination of regulation, transparency, human-in-the-loop, human-on-the-loop, and new and improved variations of statistical parity, we are part of the way there, but this field is still young and there is much work to be done. Watch this space.

显然,这种想法需要大量的讨论,金钱和专业知识才能实施,但是从我的角度来看,这似乎是一个可行的解决方案。 要确保我们的算法不受不同的处理和不同的影响,还有很长的路要走。 结合法规,透明度,循环中的人,循环中的人以及统计奇偶校验的新方法和改进的方法,我们已经成为其中的一部分,但是这个领域还很年轻,并且需要做的很多工作-观看此空间。

Final Comments

In this article, we have discussed at length the many biases that can be present in training data because of the way it is collected and analyzed. We have also discussed several ways to mitigate the impact of these biases and to help ensure that algorithms remain non-discriminatory towards minority groups and protected classes.

在本文中,我们详细讨论了由于训练数据的收集和分析方式而在训练数据中存在的多个偏差。 我们还讨论了减轻这些偏见影响并帮助确保算法对少数群体和受保护的类别保持非歧视性的几种方法。

Although machine learning, by its very nature, is always a form of statistical discrimination, the discrimination becomes objectionable when it places certain privileged groups at a systematic advantage and certain unprivileged groups at a systematic disadvantage. Biases in training data, whether due to prejudice in labels or to under- or over-sampling, yield models with unwanted bias.

尽管从本质上来说,机器学习始终是统计歧视的一种形式,但是当歧视将某些特权群体置于系统优势而某些非特权群体置于系统劣势时,歧视就变得令人反感。 由于标签上的偏见或采样不足/过高,训练数据中的偏差会导致模型产生不必要的偏差。

Some might say that these decisions were previously made by humans, with less information, and that humans have many implicit and cognitive biases that influence their decisions. Automating these decisions provides more accurate results and, to a large degree, limits the extent of these biases. The algorithms do not need to be perfect, just better than what previously existed. The arc of history curves towards justice.

有人可能会说,这些决定是在较少的信息下由人做出的,而人可能会因许多隐性和认知偏见而影响其决定。 使这些决策自动化可提供更准确的结果,并在很大程度上限制了这些偏差的程度。 这些算法不需要是完美的,仅比以前存在的算法更好。 历史的弧线走向正义。

Some might say that algorithms are being given free rein to systematically instantiate inequalities, or that data itself is inherently biased; that variables related to protected attributes should be removed from data to help mitigate these issues, and that any variables correlated with them should be removed or restricted as well.
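
The remedy this second group proposes (drop the protected attribute, then drop its proxies) can be sketched as follows. This is an illustrative assumption rather than a recommended method: it only removes columns (it does not "restrict" them), it uses plain Pearson correlation with an arbitrary 0.5 cutoff, and the column names are hypothetical. Linear correlation also misses proxies that only emerge from combinations of features.

```python
import math
from typing import Dict, List, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; returns 0.0 if either variable is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def drop_protected_and_proxies(features: Dict[str, List[float]], protected: str,
                               max_abs_corr: float = 0.5
                               ) -> Tuple[Dict[str, List[float]], List[str]]:
    """Remove the protected column plus any column strongly correlated with it."""
    target = features[protected]
    kept: Dict[str, List[float]] = {}
    dropped: List[str] = [protected]
    for name, values in features.items():
        if name == protected:
            continue
        if abs(pearson(values, target)) > max_abs_corr:
            dropped.append(name)  # likely proxy for the protected attribute
        else:
            kept[name] = values
    return kept, dropped


if __name__ == "__main__":
    data = {
        "group": [0, 0, 0, 1, 1, 1],          # protected attribute (0/1)
        "region_code": [1, 1, 2, 5, 5, 6],    # hypothetical proxy
        "years_experience": [3, 7, 5, 4, 6, 5],
    }
    kept, dropped = drop_protected_and_proxies(data, "group")
    print(sorted(kept), dropped)  # ['years_experience'] ['group', 'region_code']
```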

有人可能会说,可以自由控制算法,以系统地实例化不平等,或者数据本身存在固有偏差。 That variables related to protected attributes should be removed from data to help mitigate these issues, and any variable correlated with the variables removed or restricted.

Both groups would be partially correct. However, we should not remain satisfied with unfair algorithms; there is room for improvement. Similarly, we should not waste all of the data we have by removing all of these variables, as this would make systems perform much worse and render them much less useful. That being said, at the end of the day, it is up to the creators of these algorithms and oversight bodies, as well as those in charge of collecting data, to try to ensure that these biases are handled appropriately.

Both groups would be partially correct. However, we should not remain satisfied with unfair algorithms, there is also room for improvement. Similarly, we should not waste all of this data we have and remove all variables, as this would make systems perform much worse and would render them much less useful. That being said, at the end of the day, it is up to the creators of these algorithms and oversight bodies, as well as those in charge of collecting data, to try to ensure that these biases are handled appropriately.

Data collection and sampling procedures are often glossed over in statistics classes and are not well understood by the general public. Until a regulatory body appears, it is up to machine learning engineers, statisticians, and data scientists to ensure that equality of opportunity is embedded in our machine learning practices. We must be mindful of where our data comes from and what we do with it. Who knows whom our decisions might impact in the future?

Data collection and sampling procedures are often glazed over in statistics classes, and not understood well by the general public. Until such a time as a regulatory body appears, it is up to machine learning engineers, statisticians, and data scientists to ensure the equality of opportunity is embedded in our machine learning practices. We must be mindful of where our data comes from and what we do with it. Who knows who our decisions might impact in the future?

“The world isn’t fair, Calvin.” “I know Dad, but why isn’t it ever unfair in my favor?” ― Bill Watterson, The Essential Calvin and Hobbes: A Calvin and Hobbes Treasury

“The world isn't fair, Calvin.”“I know Dad, but why isn't it ever unfair in my favor?” ― Bill Watterson, The Essential Calvin and Hobbes: A Calvin and Hobbes Treasury

Newsletter

For updates on new blog posts and extra content, sign up for my newsletter.

For updates on new blog posts and extra content, sign up for my newsletter.

Further Reading

[1] Big Data: A Report on Algorithmic Systems, Opportunity, and Civil Rights. The White House. 2016.

[2] Bias in computer systems. Batya Friedman, Helen Nissenbaum. 1996.

[3] The Hidden Biases in Big Data. Kate Crawford. 2013.

[4] Big Data’s Disparate Impact. Solon Barocas, Andrew Selbst. 2014.

[5] Blog post: How big data is unfair. Moritz Hardt. 2014.

[6] Semantics derived automatically from language corpora contain human-like biases. Aylin Caliskan, Joanna J. Bryson, Arvind Narayanan.

[7] Sex Bias in Graduate Admissions: Data from Berkeley. P. J. Bickel, E. A. Hammel, J. W. O’Connell. 1975.

[8] Simpson’s paradox. Pearl (Chapter 6). Tech report.

[9] Certifying and removing disparate impact. Michael Feldman, Sorelle Friedler, John Moeller, Carlos Scheidegger, Suresh Venkatasubramanian.

[10] Equality of Opportunity in Supervised Learning. Moritz Hardt, Eric Price, Nathan Srebro. 2016.

[11] Blog post: Approaching fairness in machine learning. Moritz Hardt. 2016.

[12] Machine Bias. Julia Angwin, Jeff Larson, Surya Mattu, Lauren Kirchner. ProPublica. Code review: github.com/propublica/compas-analysis, github.com/adebayoj/fairml

[13] COMPAS Risk Scales: Demonstrating Accuracy Equity and Predictive Parity. Northpointe Inc.

[14] Fairness in Criminal Justice Risk Assessments: The State of the Art. Richard Berk, Hoda Heidari, Shahin Jabbari, Michael Kearns, Aaron Roth. 2017.

[15] Courts and Predictive Algorithms. Angèle Christin, Alex Rosenblat, danah boyd. 2015. Discussion paper.

[16] Limitations of mitigating judicial bias with machine learning. Kristian Lum. 2017.

[17] Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods. John C. Platt. 1999.

[18] Inherent Trade-Offs in the Fair Determination of Risk Scores. Jon Kleinberg, Sendhil Mullainathan, Manish Raghavan. 2016.

[19] Fair prediction with disparate impact: A study of bias in recidivism prediction instruments. Alexandra Chouldechova. 2016.

[20] Attacking discrimination with smarter machine learning. An interactive visualization by Martin Wattenberg, Fernanda Viégas, Moritz Hardt. 2016.

[21] Algorithmic decision making and the cost of fairness. Sam Corbett-Davies, Emma Pierson, Avi Feller, Sharad Goel, Aziz Huq. 2017.

[22] The problem of Infra-marginality in Outcome Tests for Discrimination. Camelia Simoiu, Sam Corbett-Davies, Sharad Goel. 2017.

[23] Equality of Opportunity in Supervised Learning. Moritz Hardt, Eric Price, Nathan Srebro. 2016.

[24] Elements of Causal Inference. Peters, Janzing, Schölkopf.

[25] On causal interpretation of race in regressions adjusting for confounding and mediating variables. Tyler J. VanderWeele, Whitney R. Robinson. 2014.

[26] Counterfactual Fairness. Matt J. Kusner, Joshua R. Loftus, Chris Russell, Ricardo Silva. 2017.

[27] Avoiding Discrimination through Causal Reasoning. Niki Kilbertus, Mateo Rojas-Carulla, Giambattista Parascandolo, Moritz Hardt, Dominik Janzing, Bernhard Schölkopf. 2017.

[28] Fair Inference on Outcomes. Razieh Nabi, Ilya Shpitser.

[29] Fairness Through Awareness. Cynthia Dwork, Moritz Hardt, Toniann Pitassi, Omer Reingold, Rich Zemel. 2012.

[30] On the (im)possibility of fairness. Sorelle A. Friedler, Carlos Scheidegger, Suresh Venkatasubramanian. 2016.

[31] Why propensity scores should not be used. Gary King, Richard Nielsen. 2016.

[32] Raw Data is an Oxymoron. Edited by Lisa Gitelman. 2013.

[33] Blog post: What’s the most important thing in Statistics that’s not in the textbooks. Andrew Gelman. 2015.

[34] Deconstructing Statistical Questions. David J. Hand. 1994.

[35] Statistics and the Theory of Measurement. David J. Hand. 1996.

[36] Measurement Theory and Practice: The World Through Quantification. David J. Hand. 2010.

[37] Survey Methodology, 2nd Edition. Robert M. Groves, Floyd J. Fowler, Jr., Mick P. Couper, James M. Lepkowski, Eleanor Singer, Roger Tourangeau. 2009.

[38] Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings. Tolga Bolukbasi, Kai-Wei Chang, James Zou, Venkatesh Saligrama, Adam Kalai. 2016.

[39] Men Also Like Shopping: Reducing Gender Bias Amplification using Corpus-level Constraints. Jieyu Zhao, Tianlu Wang, Mark Yatskar, Vicente Ordonez, Kai-Wei Chang. 2017.

[40] Big Data’s Disparate Impact. Solon Barocas, Andrew Selbst. 2014.

[41] It’s Not Privacy, and It’s Not Fair. Cynthia Dwork, Deirdre K. Mulligan. 2013.

[42] The Trouble with Algorithmic Decisions. Tal Zarsky. 2016.

[43] How Copyright Law Can Fix Artificial Intelligence’s Implicit Bias Problem. Amanda Levendowski. 2017.

[44] An FDA for Algorithms. Andrew Tutt. 2016.

Translated from: https://towardsdatascience.com/programming-fairness-in-algorithms-4943a13dd9f8