大样品随机双盲测试_训练和测试样品生成

大样品随机双盲测试

This post aims to explore a step-by-step approach to create a K-Nearest Neighbors Algorithm without the help of any third-party library. In practice, this Algorithm should be useful enough for us to classify our data whenever we have already made classifications (in this case, color), which will serve as a starting point to find neighbors.

这篇文章旨在探索逐步方法,以在无需任何第三方库的帮助下创建K最近邻居算法 。 在实践中,只要我们已经进行了分类(在这种情况下为颜色),该算法就足以对我们进行数据分类,这将成为寻找邻居的起点。

For this post, we will use a specific dataset which can be downloaded here. It contains 539 two dimensional data points, each with a specific color classification. Our goal will be to separate them into two groups (train and test) and try to guess our test sample colors based on our algorithm recommendation.

对于这篇文章,我们将使用一个特定的数据集,可以在此处下载 。 它包含539个二维数据点,每个数据点都有特定的颜色分类。 我们的目标是将它们分为两组(训练和测试),并根据算法建议尝试猜测测试样本的颜色。

训练和测试样品生成 (Train and test sample generation)

We will create two different sample sets:

我们将创建两个不同的样本集:

Training Set: This will contain 75% of our working data, selected randomly. This set will be used to generate our model.

训练集:这将包含我们75%的工作数据,是随机选择的。 该集合将用于生成我们的模型。

Test Set: Remaining 25% of our working data will be used to test the out-of-sample accuracy of our model. Once our predictions of this 25% are made, we will check the “percentage of correct classifications” by comparing predictions versus real values.

测试集:我们剩余的25%的工作数据将用于测试模型的样本外准确性。 一旦做出25%的预测,我们将通过比较预测值与实际值来检查“ 正确分类的百分比 ”。

# Load Datalibrary(readr)RGB <- as.data.frame(read_csv("RGB.csv"))RGB$x <- as.numeric(RGB$x)RGB$y <- as.numeric(RGB$y)print("Working data ready")# Training Datasetsmp_siz = floor(0.75*nrow(RGB))train_ind = sample(seq_len(nrow(RGB)),size = smp_siz)train =RGB[train_ind,]# Testting Datasettest=RGB[-train_ind,]OriginalTest <- testpaste("Training and test sets done")训练数据 (Training Data)

We can observe that our train data is classified into 3 clusters based on colors.

我们可以观察到,我们的火车数据基于颜色分为3类。

# We plot test colored datapointslibrary(ggplot2)colsdot <- c("Blue" = "blue", "Red" = "darkred", "Green" = "darkgreen")ggplot() + geom_tile(data=train,mapping=aes(x, y), alpha=0) + ##Ad tiles according to probabilities ##add points geom_point(data=train,mapping=aes(x,y, colour=Class),size=3 ) + scale_color_manual(values=colsdot) + #add the labels to the plots xlab('X') + ylab('Y') + ggtitle('Train Data')+ #remove grey border from the tile scale_x_continuous(expand=c(0,.05))+scale_y_continuous(expand=c(0,.05))

测试数据 (Test Data)

Even though we know the original color classification of our test data, we will try to create a model that can guess its color based solely on an educated guess. For this, we will remove their original colors and save them only for testing purposes; once our model makes its prediction, we will be able to calculate our Model Accuracy by comparing the original versus our prediction.

即使我们知道测试数据的原始颜色分类,我们也将尝试创建一个仅可以根据有根据的猜测来猜测其颜色的模型。 为此,我们将删除其原始颜色,并仅将其保存以用于测试; 一旦我们的模型做出了预测,我们就可以通过比较原始预测和我们的预测来计算模型精度 。

# We plot test colored datapointscolsdot <- c("Blue" = "blue", "Red" = "darkred", "Green" = "darkgreen")ggplot() + geom_tile(data=test,mapping=aes(x, y), alpha=0) + ##Ad tiles according to probabilities ##add points geom_point(data=test,mapping=aes(x,y),size=3 ) + scale_color_manual(values=colsdot) + #add the labels to the plots xlab('X') + ylab('Y') + ggtitle('Test Data')+ #remove grey border from the tile scale_x_continuous(expand=c(0,.05))+scale_y_continuous(expand=c(0,.05))

K最近邻居算法 (K-Nearest Neighbors Algorithm)

Below is a step-by-step example of an implementation of this algorithm. What we want to achieve is for each selected gray point above (our test values), where we allegedly do not know their actual color, find the nearest neighbor or nearest colored data point from our train values and assign the same color as this one.

下面是该算法实现的分步示例。 我们想要实现的是针对上面每个选定的灰点(我们的测试值),据称我们不知道它们的实际颜色,从我们的火车值中找到最近的邻居或最接近的彩色数据点,并为其分配相同的颜色。

In particular, we need to:

特别是,我们需要:

Normalize data: even though in this case is not needed, since all values are in the same scale (decimals between 0 and 1), it is recommended to normalize in order to have a “standard distance metric”.

归一化数据:即使在这种情况下不需要,由于所有值都在相同的标度(0到1之间的小数),建议进行归一化以具有“标准距离度量”。

Define how we measure distance: We can define the distance between two points in this two-dimensional data set as the Euclidean distance between them. We will calculate L1 (sum of absolute differences) and L2 (sum of squared differences) distances, though final results will be calculated using L2 since its more unforgiving than L1.

定义测量距离的方式:我们可以将此二维数据集中的两个点之间的距离定义为它们之间的欧式距离。 我们将计算L1(绝对差之和)和L2(平方差之和)的距离,尽管最终结果将使用L2来计算,因为它比L1更加不容忍。

Calculate Distances: we need to calculate the distance between each tested data point and every value within our train dataset. Normalization is critical here since, in the case of body structure, a distance in weight (1 KG) and height (1 M) is not comparable. We can anticipate a higher deviation in KG than it is on the Meters, leading to incorrect overall distances.

计算距离:我们需要计算每个测试数据点与火车数据集中每个值之间的距离。 归一化在这里至关重要,因为在身体结构的情况下,重量(1 KG)和高度(1 M)的距离不可比。 我们可以预期到KG的偏差要比仪表多,从而导致总距离不正确。

Sort Distances: Once we calculate the distance between every test and training points, we need to sort them in descending order.

距离排序:一旦我们计算出每个测试点与训练点之间的距离,就需要对它们进行降序排序。

Selecting top K nearest neighbors: We will select the top K nearest train data points to inspect which category (colors) they belong to in order also to assign this category to our tested point. Since we might use multiple neighbors, we might end up with multiple categories, in which case, we should calculate a probability.

选择最接近的K个最近的邻居:我们将选择最接近的K个火车数据点来检查它们属于哪个类别(颜色),以便将该类别分配给我们的测试点。 由于我们可能使用多个邻居,因此我们可能会得到多个类别,在这种情况下,我们应该计算一个概率。

# We define a function for predictionKnnL2Prediction <- function(x,y,K) {

# Train data Train <- train # This matrix will contain all X,Y values that we want test. Test <- data.frame(X=x,Y=y)

# Data normalization Test$X <- (Test$X - min(Train$x))/(min(Train$x) - max(Train$x)) Test$Y <- (Test$Y - min(Train$y))/(min(Train$y) - max(Train$y)) Train$x <- (Train$x - min(Train$x))/(min(Train$x) - max(Train$x)) Train$y <- (Train$y - min(Train$y))/(min(Train$y) - max(Train$y)) # We will calculate L1 and L2 distances between Test and Train values. VarNum <- ncol(Train)-1 L1 <- 0 L2 <- 0 for (i in 1:VarNum) { L1 <- L1 + (Train[,i] - Test[,i]) L2 <- L2 + (Train[,i] - Test[,i])^2 }

# We will use L2 Distance L2 <- sqrt(L2)

# We add labels to distances and sort Result <- data.frame(Label=Train$Class,L1=L1,L2=L2)

# We sort data based on score ResultL1 <-Result[order(Result$L1),] ResultL2 <-Result[order(Result$L2),]

# Return Table of Possible classifications a <- prop.table(table(head(ResultL2$Label,K))) b <- as.data.frame(a) return(as.character(b$Var1[b$Freq == max(b$Freq)]))}使用交叉验证找到正确的K参数 (Finding the correct K parameter using Cross-Validation)

For this, we will use a method called “cross-validation”. What this means is that we will make predictions within the training data itself and iterate this on many different values of K for many different folds or permutations of the data. Once we are done, we will average our results and obtain the best K for our “K-Nearest” Neighbors algorithm.

为此,我们将使用一种称为“交叉验证”的方法。 这意味着我们将在训练数据本身中进行预测,并针对数据的许多不同折叠或排列对许多不同的K值进行迭代。 完成之后,我们将平均结果并为“ K最近”邻居算法获得最佳K。

# We will use 5 foldsFoldSize = floor(0.2*nrow(train)) # Fold1piece1 = sample(seq_len(nrow(train)),size = FoldSize ) Fold1 = train[piece1,]rest = train[-piece1,] # Fold2piece2 = sample(seq_len(nrow(rest)),size = FoldSize)Fold2 = rest[piece2,]rest = rest[-piece2,] # Fold3piece3 = sample(seq_len(nrow(rest)),size = FoldSize)Fold3 = rest[piece3,]rest = rest[-piece3,] # Fold4piece4 = sample(seq_len(nrow(rest)),size = FoldSize)Fold4 = rest[piece4,]rest = rest[-piece4,] # Fold5Fold5 <- rest# We make foldsSplit1_Test <- rbind(Fold1,Fold2,Fold3,Fold4)Split1_Train <- Fold5Split2_Test <- rbind(Fold1,Fold2,Fold3,Fold5)Split2_Train <- Fold4Split3_Test <- rbind(Fold1,Fold2,Fold4,Fold5)Split3_Train <- Fold3Split4_Test <- rbind(Fold1,Fold3,Fold4,Fold5)Split4_Train <- Fold2Split5_Test <- rbind(Fold2,Fold3,Fold4,Fold5)Split5_Train <- Fold1# We select best KOptimumK <- data.frame(K=NA,Accuracy=NA,Fold=NA)results <- trainfor (i in 1:5) { if(i == 1) { train <- Split1_Train test <- Split1_Test } else if(i == 2) { train <- Split2_Train test <- Split2_Test } else if(i == 3) { train <- Split3_Train test <- Split3_Test } else if(i == 4) { train <- Split4_Train test <- Split4_Test } else if(i == 5) { train <- Split5_Train test <- Split5_Test } for(j in 1:20) { results$Prediction <- mapply(KnnL2Prediction, results$x, results$y,j) # We calculate accuracy results$Match <- ifelse(results$Class == results$Prediction, 1, 0) Accuracy <- round(sum(results$Match)/nrow(results),4) OptimumK <- rbind(OptimumK,data.frame(K=j,Accuracy=Accuracy,Fold=paste("Fold",i)))

}}OptimumK <- OptimumK [-1,]MeanK <- aggregate(Accuracy ~ K, OptimumK, mean)ggplot() + geom_point(data=OptimumK,mapping=aes(K,Accuracy, colour=Fold),size=3 ) + geom_line(aes(K, Accuracy, colour="Moving Average"), linetype="twodash", MeanK) + scale_x_continuous(breaks=seq(1, max(OptimumK$K), 1))

As seen in the plot above, we can observe that our algorithm’s prediction accuracy is in the range of 88%-95% for all folds and decreasing from K=3 onwards. We can observe the highest consistent accuracy results on K=1 (3 is also a good alternative).

如上图所示,我们可以观察到我们算法的所有折叠的预测准确度都在88%-95%的范围内,并且从K = 3开始下降。 我们可以在K = 1上观察到最高的一致精度结果(3也是一个很好的选择)。

根据最近的1个邻居进行预测。 (Predicting based on Top 1 Nearest Neighbors.)

模型精度 (Model Accuracy)

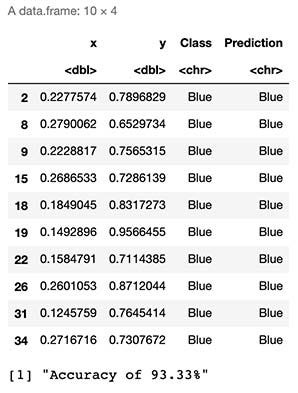

# Predictions over our Test sampletest <- OriginalTestK <- 1test$Prediction <- mapply(KnnL2Prediction, test$x, test$y,K)head(test,10)# We calculate accuracytest$Match <- ifelse(test$Class == test$Prediction, 1, 0)Accuracy <- round(sum(test$Match)/nrow(test),4)print(paste("Accuracy of ",Accuracy*100,"%",sep=""))

As seen by the results above, we can expect to “guess the correct class or color” 93% of the time.

从上面的结果可以看出,我们可以期望在93%的时间内“猜测正确的类别或颜色”。

原始色彩 (Original Colors)

Below we can observe the original colors or classes of our test sample.

下面我们可以观察测试样品的原始颜色或类别。

ggplot() + geom_tile(data=test,mapping=aes(x, y), alpha=0) + geom_point(data=test,mapping=aes(x,y,colour=Class),size=3 ) + scale_color_manual(values=colsdot) + xlab('X') + ylab('Y') + ggtitle('Test Data')+ scale_x_continuous(expand=c(0,.05))+scale_y_continuous(expand=c(0,.05))

预测的颜色 (Predicted Colors)

Using our algorithm, we obtain the following colors for our initially colorless sample dataset.

使用我们的算法,我们为最初的无色样本数据集获得了以下颜色。

ggplot() + geom_tile(data=test,mapping=aes(x, y), alpha=0) + geom_point(data=test,mapping=aes(x,y,colour=Prediction),size=3 ) + scale_color_manual(values=colsdot) + xlab('X') + ylab('Y') + ggtitle('Test Data')+ scale_x_continuous(expand=c(0,.05))+scale_y_continuous(expand=c(0,.05))

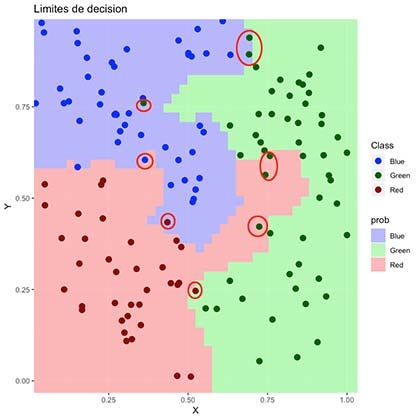

As seen in the plot above, it seems even though our algorithm correctly classified most of the data points, it failed with some of them (marked in red).

如上图所示,即使我们的算法正确地对大多数数据点进行了分类,但其中的某些数据点还是失败了(用红色标记)。

决策极限 (Decision Limits)

Finally, we can visualize our “decision limits” over our original Test Dataset. This provides an excellent visual approximation of how well our model is classifying our data and the limits of its classification space.

最后,我们可以可视化我们原始测试数据集上的“决策限制”。 这为模型对数据的分类及其分类空间的局限性提供了极好的视觉近似。

In simple words, we will simulate 160.000 data points (400x400 matrix) within the range of our original dataset, which, when later plotted, will fill most of the empty spaces with colors. This will help us express in detail how our model would classify this 2D space within it’s learned color classes. The more points we generate, the better our “resolution” will be, much like pixels on a TV.

简而言之,我们将在原始数据集范围内模拟160.000个数据点(400x400矩阵),当稍后绘制时,这些数据点将用颜色填充大部分空白空间。 这将帮助我们详细表达我们的模型如何在其学习的颜色类别中对该2D空间进行分类。 我们生成的点越多,我们的“分辨率”就越好,就像电视上的像素一样。

# We calculate background colorsx_coord = seq(min(train[,1]) - 0.02,max(train[,1]) + 0.02,length.out = 40)y_coord = seq(min(train[,2]) - 0.02,max(train[,2]) + 0.02, length.out = 40)coord = expand.grid(x = x_coord, y = y_coord)coord[['prob']] = mapply(KnnL2Prediction, coord$x, coord$y,K)# We calculate predictions and plot decition areacolsdot <- c("Blue" = "blue", "Red" = "darkred", "Green" = "darkgreen")colsfill <- c("Blue" = "#aaaaff", "Red" = "#ffaaaa", "Green" = "#aaffaa")ggplot() + geom_tile(data=coord,mapping=aes(x, y, fill=prob), alpha=0.8) + geom_point(data=test,mapping=aes(x,y, colour=Class),size=3 ) + scale_color_manual(values=colsdot) + scale_fill_manual(values=colsfill) + xlab('X') + ylab('Y') + ggtitle('Decision Limits')+ scale_x_continuous(expand=c(0,0))+scale_y_continuous(expand=c(0,0))

As seen above, the colored region represents which areas our algorithm would define as a “colored data point”. It is visible why it failed to classify some of them correctly.

如上所示,彩色区域表示我们的算法将哪些区域定义为“彩色数据点”。 可见为什么无法正确分类其中一些。

最后的想法 (Final Thoughts)

K-Nearest Neighbors is a straightforward algorithm that seems to provide excellent results. Even though we can classify items by eye here, this model also works in cases of higher dimensions where we cannot merely observe them by the naked eye. For this to work, we need to have a training dataset with existing classifications, which we will later use to classify data around it, meaning it is a supervised machine learning algorithm.

K最近邻居是一种简单的算法,似乎可以提供出色的结果。 即使我们可以在这里按肉眼对项目进行分类,该模型也可以在无法仅用肉眼观察它们的较高维度的情况下使用。 为此,我们需要有一个带有现有分类的训练数据集,稍后我们将使用它来对周围的数据进行分类 ,这意味着它是一种监督式机器学习算法 。

Sadly, this method presents difficulties in scenarios such as in the presence of intricate patterns that cannot be represented by simple straight distance, like in the cases of radial or nested clusters. It also has the problem of performance since, for every classification of a new data point, we need to compare it to every single point in our training dataset, which is resource and time intensive since it requires replication and iteration of the complete set.

可悲的是,这种方法在诸如无法以简单直线距离表示的复杂图案的情况下(例如在径向或嵌套簇的情况下)会遇到困难。 它也存在性能问题,因为对于新数据点的每个分类,我们都需要将其与训练数据集中的每个点进行比较,这是资源和时间密集的,因为它需要复制和迭代整个集合。

翻译自: https://towardsdatascience.com/k-nearest-neighbors-classification-from-scratch-6b31751bed9b

大样品随机双盲测试

http://www.taodudu.cc/news/show-997413.html

相关文章:

- 从数据角度探索在新加坡的非法毒品

- python 重启内核_Python从零开始的内核回归

- 回归分析中自变量共线性_具有大特征空间的回归分析中的变量选择

- python 面试问题_值得阅读的30个Python面试问题

- 机器学习模型 非线性模型_机器学习:通过预测菲亚特500的价格来观察线性模型的工作原理...

- pytorch深度学习_深度学习和PyTorch的推荐系统实施

- 数据库课程设计结论_结论:

- 网页缩放与窗口缩放_功能缩放—不同的Scikit-Learn缩放器的效果:深入研究

- 未越狱设备提取数据_从三星设备中提取健康数据

- 分词消除歧义_角色标题消除歧义

- 在加利福尼亚州投资于新餐馆:一种数据驱动的方法

- 近似算法的近似率_选择最佳近似最近算法的数据科学家指南

- 在Python中使用Seaborn和WordCloud可视化YouTube视频

- 数据结构入门最佳书籍_最佳数据科学书籍

- 多重插补 均值插补_Feature Engineering Part-1均值/中位数插补。

- 客户行为模型 r语言建模_客户行为建模:汇总统计的问题

- 多维空间可视化_使用GeoPandas进行空间可视化

- 机器学习 来源框架_机器学习的秘密来源:策展

- 呼吁开放外网_服装数据集:呼吁采取行动

- 数据可视化分析票房数据报告_票房收入分析和可视化

- 先知模型 facebook_Facebook先知

- 项目案例:qq数据库管理_2小时元项目:项目管理您的数据科学学习

- 查询数据库中有多少个数据表_您的数据中有多少汁?

- 数据科学与大数据技术的案例_作为数据科学家解决问题的案例研究

- 商业数据科学

- 数据科学家数据分析师_站出来! 分析人员,数据科学家和其他所有人的领导和沟通技巧...

- 分析工作试用期收获_免费使用零编码技能探索数据分析

- 残疾科学家_数据科学与残疾:通过创新加强护理

- spss23出现数据消失_改善23亿人口健康数据的可视化

- COVID-19研究助理

大样品随机双盲测试_训练和测试样品生成相关推荐

- 编写junit 测试_编写JUnit测试的另一种方法(Jasmine方法)

编写junit 测试 最近,我为一个小型个人项目编写了很多Jasmine测试. 我花了一些时间才终于感到正确地完成了测试. 在此之后,当切换回JUnit测试时,我总是很难过. 由于某种原因,JUnit ...

- 机器学习中qa测试_机器学习项目测试怎么做?(看实例)

机器学习交付项目通常包含两部分产物,一部分是机器学习模型,另一部分是机器学习应用系统.机器学习模型是嫁接在应用之上产生价值的.比如:一款预测雷雨天气的APP,它的雷雨预测功能就是由机器学习模型完成的. ...

- 随机森林需要分训练集测试集吗_讨论记录用随机森林对生存数据降维,筛选signature...

昨晚,小伙伴收到了大鱼海棠为我们带来的FigureYa182RFSurv,使用随机森林对生存数据降维,根据变量重要性排序并筛选基因组成prognostic signature. 这是我们第二次众筹随机 ...

- spock测试_使用Spock测试您的代码

spock测试 Spock是针对Java和Groovy应用程序的测试和规范框架. Spock是: 极富表现力 简化测试的"给定/何时/然后" 语法 与大多数IDE和CI服务器兼容. ...

- idea使用junit测试_在JUnit测试中使用Builder模式

idea使用junit测试 这并不是要成为技术含量很高的职位. 这篇文章的目的是为您提供一些指导,以使您的JUnit测试生活更加轻松,使您能够在几分钟内编写复杂的测试场景,并获得具有高度可读性的测试. ...

- 万兆网络传输速度测试_用万兆网卡测试超五类网线传输速度,颠覆你的认知

经常在网上看见一些提问,说要用六类网线才能跑满千兆,有些甚至说要七类.有些商家把各类网线冠上百兆网线.千兆网线.万兆网线的名字.其实网线是不该按速度来分类的,现在多数常见的是超五类(Cat5e).六类 ...

- spock测试_用Spock测试AKKA应用程序

spock测试 AKKA是基于消息驱动和基于AKKA模型的并发工具包. 尽管AKKA用Scala编写, AKKA可以在任何基于JVM的语言项目中使用. 这篇文章试图填补关于在利用AKKA框架的多语言J ...

- sysbench mysql测试_用sysbench测试mysql和服务器性能

关于sysbench测试步骤 关于sysbench调试和安装请参考: http://blog.chinaunix.net/uid-20682026-id-3138466.html 下载地址: [roo ...

- 智能手表音频特性测试_儿童手表电磁辐射测试这一环节不可少

随着智能设备的推广,高效.安全.防丢失的儿童手表越来越多地出现在孩子们的手腕上,家长联系和监护孩子也便捷了不少.然而,由于儿童正处于生长发育时期,身体各器官的功能都未发育完善儿童手表手机在使用时又贴近 ...

最新文章

- 1 图片channels_深度学习中各种图像库的图片读取方式

- Android驱动学习-内部机制_回顾binder框架关键点

- property_get 与 property_set 的返回值(转载)

- poj3713 Transferring Sylla 枚举+tarjan判割点

- arcgis创建剖面线execl文件

- 知名教授:希望论文一作发Nature后去当公务员的那名学生能看到我的这篇文章...

- android elf 加固_android so加固之section加密

- Visual Studio C++6.0下载地址

- Material Design(九)--CoordinatorLayout和App Bar

- Atitit 利用前端cache indexdb localStorage 缓存提升性能优化attilax总结 1.1. indexdb 更加强大点,但是结果测试,api比较繁琐 使用叫麻烦些 1

- ntp server

- 树莓派3连接ps4无线手柄

- php c端,tob端和toc端是什么意思

- 用优盘装系统看不到计算机本身的硬盘,给电脑装系统!的时候找不到硬盘只能看到u盘数据我怀疑硬盘坏了主机? 爱问知识人...

- 关于maxIdle ,MaxActive,maxWait介绍

- 局域网怎么查看单位摄像头_简单易用,夜里看的更清楚,360新品水滴摄像头夜视版实测...

- 【ArcGIS】连接到数据库失败,临时文件I/O错误--可能$SDEHOME/temp已满

- dom4j生成xml节点内容换行

- html如何既能应用于pc端也能用于手机端_如何选择一个 vue ui 框架?

- 马克思主义理论-资本主义的发展及趋势

热门文章

- 解决ionic3 android 运行出现Application Error - The connection to the server was unsuccessful

- c/c++连接mysql数据库设置及乱码问题(vs2013连接mysql数据库,使用Mysql API操作数据库)...

- 76. Minimum Window Substring

- Android学习之查看网络图片

- Eclipse 构建Maven项目

- ASP.NET MVC下的四种验证编程方式[续篇]

- ReportViewer不连接数据库,自定义DataSet导出到报表

- poj1681 Painter's Problem高斯消元

- IE8无法调试?IE进入不了调试状态

- Harbor:私有企业级Registry仓库--快速搭建